Andrew Prosikhin has built AI systems where failure means more than disappointed users. It means lives are at risk. As a Senior Generative AI Engineer in medical technology and now CEO of Blobfish AI, he has learned that the gap between an impressive AI demo and a production system is exponentially expensive to bridge.

Most companies celebrate hitting 90% reliability with their AI pilots, but Prosikhin warns this is where the real work begins. For traditional software, moving from prototype to production might require a three-to-one investment. With generative AI, that ratio jumps to twenty-to-one or higher.

His current venture takes an unusual approach to voice AI: training call center operators rather than replacing them. Unlike traditional voice assistants designed to be helpful and predictable, his system deliberately creates difficult customers to train against.

Q: You’ve built AI systems in medical technology where reliability is life-or-death. Now, you’re developing voice AI for call center training. What are the key technical differences between building AI demos and production-ready systems that actually work at scale?

AI demos are easy. You can often spin one up in minutes with tools like Cursor or Codex. The real challenge is turning that demo into a production system. For traditional software, the ratio from prototype to MVP might be three-to-one or ten-to-one. With generative AI, it’s twenty-to-one or higher.

The difficulty lies in moving from a system that works 90 percent of the time, which is relatively easy, to one that works 99 percent of the time or better, which is exponentially harder. On top of that, you need an architecture that allows you to safely swap one model version for another as the technology evolves.

The foundation for all of this is automated testing. Without automated evaluation and metrics, you will not get past 90 percent reliability because every fix risks breaking something else. Implementing these tests is not straightforward. It requires machine learning expertise and specialized frameworks in addition to generative AI skills, but it is the only dependable way to push the system past that ceiling.
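A minimal sketch of what such an automated evaluation gate could look like, assuming a simple substring check as the scoring rule. The names `EvalCase`, `pass_rate`, `is_safe_to_ship`, and `fake_system` are hypothetical and stand in for a real model call and a richer scoring method; none of them come from the interview.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class EvalCase:
    prompt: str
    must_contain: str  # assumed scoring rule: expected substring in the reply


def pass_rate(system: Callable[[str], str], cases: List[EvalCase]) -> float:
    """Fraction of cases whose reply contains the expected substring."""
    passed = sum(
        1 for case in cases
        if case.must_contain.lower() in system(case.prompt).lower()
    )
    return passed / len(cases)


def is_safe_to_ship(candidate_rate: float, baseline_rate: float,
                    min_rate: float = 0.99) -> bool:
    """Block a change that misses the target or regresses against the baseline."""
    return candidate_rate >= min_rate and candidate_rate >= baseline_rate


def fake_system(prompt: str) -> str:
    """Hypothetical stand-in for a call to a generative model."""
    answers = {
        "refund policy": "Refunds are available within 30 days.",
        "opening hours": "We are open 9 to 5.",
    }
    return answers.get(prompt, "I am not sure.")


CASES = [
    EvalCase("refund policy", "30 days"),
    EvalCase("opening hours", "9 to 5"),
    EvalCase("shipping cost", "free"),
]

rate = pass_rate(fake_system, CASES)
print(f"pass rate: {rate:.2f}, ship: {is_safe_to_ship(rate, baseline_rate=0.5)}")
```

Running the same suite before and after every prompt or model change is what makes a regression visible instead of silent.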

Q: With your experience as a Senior Generative AI Engineer in medical tech, can you tell us what specific technical safeguards and reliability measures you implemented that you rarely see in typical enterprise AI deployments?

The methods for making software mission-critical are well established. What was unique about working on a mission-critical product with generative AI was translating some of those practices into the AI world.

One major challenge was automated testing of AI components that could only be evaluated by another generative AI system. To make this reliable, we had to write additional tests to ensure that the AI performing the evaluation was itself functioning properly. That extra layer of validation is something that hasn’t yet made its way to most enterprise projects.
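One way to picture this extra validation layer is to score the evaluator itself against a small set of human-labeled examples. The sketch below assumes a binary pass/fail judge; `fake_judge` is a rule-based placeholder for a generative evaluator, and `GOLD` is an invented example set, not data from any real project.

```python
from typing import Callable, List, Tuple

# (question, answer, human_label): True means a human accepted the answer
LabeledExample = Tuple[str, str, bool]


def judge_agreement(judge: Callable[[str, str], bool],
                    examples: List[LabeledExample]) -> float:
    """Fraction of labeled examples where the judge matches the human label."""
    agree = sum(1 for q, a, label in examples if judge(q, a) == label)
    return agree / len(examples)


def fake_judge(question: str, answer: str) -> bool:
    """Hypothetical placeholder for an LLM evaluator: accepts any non-empty
    answer that does not admit uncertainty."""
    return bool(answer.strip()) and "not sure" not in answer.lower()


QUESTION = "What is the refund window?"
GOLD: List[LabeledExample] = [
    (QUESTION, "30 days from purchase.", True),
    (QUESTION, "I'm not sure.", False),
    (QUESTION, "", False),
    (QUESTION, "Not sure, but probably never.", False),
    (QUESTION, "Bananas are yellow.", False),  # off-topic; this judge misses it
]

agreement = judge_agreement(fake_judge, GOLD)
print(f"judge agreement with human labels: {agreement:.2f}")
```

Here the placeholder judge accepts the off-topic answer, so its agreement falls short of perfect. That is exactly the kind of evaluator weakness a meta-test is meant to expose before the judge is trusted to gate releases.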

Q: Blobfish AI focuses on training humans with voice AI rather than replacing them. Can you walk us through the technical architecture that makes this human-AI collaboration possible and how it differs from traditional voice assistants?

You don’t want your Alexa yelling at you, being unpredictable, or repeating the same question again and again. But that’s exactly the kind of behavior a good customer service trainer needs to simulate!

Since AIs aren’t yet tuned for emotion or for being deliberately difficult, we have to push the technology to its limits to get the behavior we want. This makes it a very exciting engineering and configuration challenge for our team.

Q: What specific voice AI technologies are you leveraging to create realistic training scenarios for call center operators, and what technical challenges arise when designing AI that needs to understand and adapt to human learning patterns?

We use the OpenAI Realtime API. For training applications, reacting to tone of voice is essential, and that can only be done effectively with true voice-to-voice APIs. Most existing voice products still rely on a voice-to-text-to-voice architecture, which by design cannot pick up intonation and is also slower.

Our system is built on a voice-to-voice architecture. It’s newer and can be trickier to work with, but the benefits for the end user are significant. I expect this approach to become the dominant standard within the next two to three years.

Q: Everyone talks about AI hallucinations in chatbots, but you’ve worked on systems where errors have real consequences. What reliability engineering practices from medical AI should every enterprise be adopting when deploying generative AI?

My preferred approach is output guardrailing. This means adding an extra prompt layer designed specifically to catch mistakes from the generative model. The guardrail focuses only on moderating the output and comes with its own set of tests. For sensitive use cases, having these in place makes engineers sleep much better at night.

Another important practice is systematically identifying areas where hallucinations occur and adjusting prompts or data format to reduce the chance of them repeating. This should always be done with metrics and tests in place, so you’re not fixing one part of the system while unintentionally breaking another.
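The output guardrail described above can be sketched as a second check that sits between the generator and the user. In this illustration the guardrail is a rule-based stub standing in for a dedicated moderation prompt; `guarded_reply`, `stub_generate`, `stub_guardrail`, and the banned phrases are all assumptions made for the example.

```python
from typing import Callable

FALLBACK_MESSAGE = "I can't help with that. Let me connect you with a person."


def guarded_reply(generate: Callable[[str], str],
                  guardrail: Callable[[str, str], bool],
                  user_input: str) -> str:
    """Return the draft only if the guardrail approves it."""
    draft = generate(user_input)
    if guardrail(user_input, draft):
        return draft
    return FALLBACK_MESSAGE


def stub_generate(user_input: str) -> str:
    """Hypothetical generator; a real system would call a model here."""
    if "dose" in user_input.lower():
        return "You should take double the usual amount."
    return "Our support line is open on weekdays."


def stub_guardrail(user_input: str, draft: str) -> bool:
    """Hypothetical guardrail; in practice a separate prompt with its own tests."""
    banned = ("double the usual amount", "guaranteed cure")
    return not any(phrase in draft.lower() for phrase in banned)


print(guarded_reply(stub_generate, stub_guardrail, "When are you open?"))
print(guarded_reply(stub_generate, stub_guardrail, "What dose should I take?"))
```

Because the guardrail is a separate function with a narrow job, it can carry its own test suite, independent of the prompt that produces the draft.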

Q: From an engineering perspective, what’s more technically challenging: building AI to replace human tasks or building AI to enhance human performance? Are there specific technical trade-offs involved?

You always start with augmentation, then evaluate how much of the task can be replaced in a cost-effective way. In practice, it’s usually well below 100 percent. Beyond that point, further optimization doesn’t make economic sense. The real goal is to improve the business, not to eliminate a function entirely.

In most cases, generative AI allows a smaller number of people to perform the same function more effectively with AI support. That saves the company money, but I’ve yet to see AI wipe out a department altogether.

Q: You’ve seen AI development from Google, medical tech, and now as a startup founder. What’s the biggest technical misconception enterprises have about deploying AI at scale, and how do you avoid these pitfalls?

Leaders often think in big slogans like “AI first” and create plans that read more like marketing brochures than real product strategies. The reality of successful AI projects is much less glamorous. Most start by targeting a small, specific function inside the company. These projects are often invisible to clients but deliver huge ROI by saving time and money. You probably won’t get a press release out of it, but it will make a real impact on the bottom line.

If you’re a leader considering generative AI, start small and focus on clear, high-ROI use cases. Those early wins will build both confidence and internal expertise, setting the stage for more ambitious initiatives down the line.

Q: How do you technically measure and optimize for human learning outcomes versus traditional AI performance metrics, and what does your feedback loop architecture look like at Blobfish AI?

Generative AI is excellent at producing consistent measurements, and at Blobfish we use it to collect extensive data on our trainees’ progress. At the same time, AI still lacks the context and judgment needed to drive product decisions; that part of the loop is entirely human-led. We analyze and interpret the data and decide how to adapt the product based on it.

Q: What are the three most critical technical decisions companies get wrong when moving from AI pilot to production, based on your experience building reliable AI systems?

The first mistake is moving too fast. Whenever possible, I recommend scaling a new gen AI system gradually rather than releasing it to 100% of users out of the gate. Start by shadowing a small portion of traffic to spot performance gaps, then roll out to 1% of lower-value users. You’ll uncover inevitable mistakes, fix them, and build confidence. As metrics improve, you can expand until you know the system is ready for full deployment.
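A staged rollout like this is often implemented with deterministic user bucketing. The sketch below is one possible approach, not the method used at Blobfish AI: `bucket` and `route` are hypothetical helpers, and the selection of lower-value users mentioned above is left out for brevity.

```python
import hashlib


def bucket(user_id: str) -> float:
    """Map a user ID to a stable value in [0, 1)."""
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    return int(digest[:8], 16) / 0x100000000


def route(user_id: str, live_fraction: float, shadow_fraction: float) -> str:
    """Decide which path serves this user.

    "new_system": the user sees the new system's output.
    "shadow": the user sees the old output; the new system runs in
              parallel and its output is only logged for comparison.
    "old_system": the new system is not involved at all.
    """
    b = bucket(user_id)
    if b < live_fraction:
        return "new_system"
    if b < live_fraction + shadow_fraction:
        return "shadow"
    return "old_system"


# Example stage: 1% live, 10% shadowed, everyone else unchanged.
counts = {"new_system": 0, "shadow": 0, "old_system": 0}
for i in range(10_000):
    counts[route(f"user-{i}", live_fraction=0.01, shadow_fraction=0.10)] += 1
print(counts)
```

Hashing the user ID keeps each user on the same path across requests, and raising `live_fraction` only adds users to the new system without moving anyone back.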

The second mistake is failing to prepare fallbacks as you scale. Early-stage systems carry too much risk to be left on their own. You need humans ready to handle exceptions, or at least lightweight automation that can step in when things go wrong. It can even be worth lining up a backup AI vendor, since APIs remain notoriously unstable as of 2025.
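A fallback chain of this kind might look like the following sketch. The provider functions are hypothetical stubs rather than real vendor SDK calls, and returning `None` is an assumed convention for handing the request to a human.

```python
from typing import Callable, Optional, Sequence


def answer_with_fallbacks(prompt: str,
                          providers: Sequence[Callable[[str], str]]) -> Optional[str]:
    """Try each provider in order; return None if all of them fail."""
    for call in providers:
        try:
            return call(prompt)
        except Exception:
            # A production system would catch specific errors and log them.
            continue
    return None  # signal that a human should take over


def flaky_primary(prompt: str) -> str:
    """Hypothetical primary vendor that is currently down."""
    raise ConnectionError("primary vendor unavailable")


def backup_vendor(prompt: str) -> str:
    """Hypothetical backup vendor."""
    return f"[backup] answer to: {prompt}"


reply = answer_with_fallbacks("reset my password", [flaky_primary, backup_vendor])
print(reply if reply is not None else "Escalating to a human agent.")
```

Keeping the provider list as data makes it straightforward to reorder vendors or add a lightweight rule-based responder as the last automated step before human escalation.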

The third mistake is relying on a single prompt for everything. This works great for prototypes, but it makes it very difficult to safely evolve a production system. I elaborate on this in the article here: https://hackernoon.com/this-one-practice-makes-llms-easier-to-build-test-and-scale

Q: You dropped out of theoretical physics to join a startup, then worked your way through the AI industry. What technical evolution in AI development do you think will have the biggest impact on enterprise adoption over the next two years?

Voice AI is going to expand dramatically over the next few years; we’re still very early in that journey. Voice-to-voice architectures only emerged in late 2024, and we’re just now reaching the reliability needed for truly effective voice-powered applications. Soon, these systems will sound far more natural, follow prompts with the same consistency as text-based models, and respond at near-human speed. This shift will unlock huge opportunities. Since voice lets us express complex ideas and commands much faster than typing, I expect rapid innovation and a wave of new applications in this space.

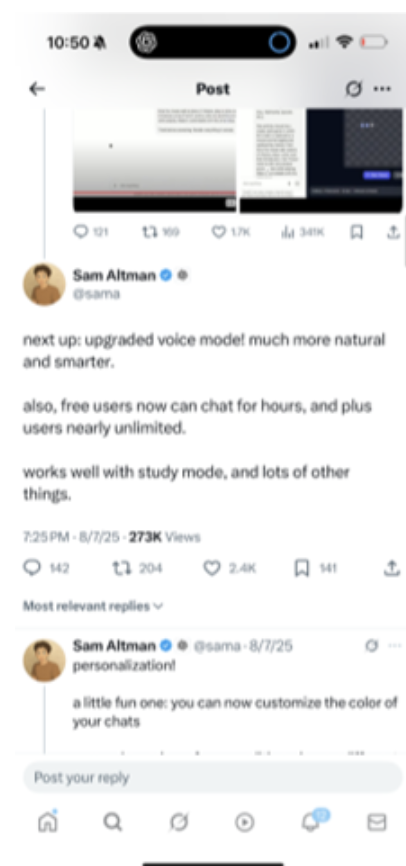

Sam Altman appears to be thinking along the same lines:

Q: Given your unique experience with both medical-grade AI reliability and voice AI for human training, what technical principles should be non-negotiable for any enterprise AI implementation?

The most critical principle is to stay anchored to the user’s pain points rather than being driven by technology for its own sake. With AI, it’s especially tempting to get caught up in the excitement of the technology, but the true measure of value is whether it delivers meaningful improvement for the end user. If AI doesn’t actually solve the problem at hand, it shouldn’t be introduced just for the sake of having it.

The second principle is to avoid expecting too much from AI on day one. Just as you wouldn’t overwhelm a new employee with everything at once, AI systems should be introduced gradually. Start with observation or shadowing, then move to a small pilot group, and expand to a limited percentage of users before scaling. At each stage, surface and resolve issues while continuing to monitor performance. By the time you approach full deployment, most serious problems will be addressed, and overall confidence in the system will be much higher. This stepwise approach significantly reduces rollout risk.

The third and final principle is that if you want high reliability, the system needs to be backed by fully automated tests. This is standard for non-AI systems, but it’s often forgotten when it comes to AI. A big part of the challenge is that many engineers and AI researchers don’t yet have the tools or skills to build these kinds of tests.