The struggle with today’s centralized cloud

Many top AI teams hit roadblocks with outdated infrastructure that doesn’t meet modern requirements. Companies funnel huge sums into major cloud services built for a previous era, and these services often can’t deliver the lightning-fast response times current AI models demand. It’s like trying to drive a Formula 1 car through city traffic: possible, but you’re never going to win.

The issue comes down to latency and cost. Most organizations still depend on faraway, centralized data centers, which introduces delays and drives up spending. Years of work on distributed systems show that genuine breakthroughs in AI efficiency depend on flipping this model. Instead of relying on distant hardware, leading companies are shifting to distributed networks to bring high-performance computing directly to the user, wherever they are. This isn’t just another round of technical tweaks; it’s a foundational change that the savviest organizations are already embracing.

The real cost of latency

Latency isn’t merely an inconvenience—it directly impacts the bottom line.

Amazon found that every 100ms of latency cost it 1% in sales, while Google discovered that a 500ms delay in search results caused a 20% drop in traffic. In financial trading, a platform that runs even five milliseconds behind its competitors can, by one widely cited estimate, lose $4 million in revenue per millisecond. In gaming, users start noticing latency at 100ms, which significantly degrades their experience.
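To make the arithmetic concrete, here is a minimal sketch that applies the "1% of sales per 100ms" figure to a hypothetical business. The function name, the linear scaling and the example revenue are assumptions for illustration, not measured results.

```python
def revenue_lost_to_latency(annual_revenue, added_latency_ms, loss_per_100ms=0.01):
    """Estimate annual revenue lost to latency.

    Assumes (for illustration only) that the loss scales linearly:
    every 100 ms of added latency costs `loss_per_100ms` of sales.
    """
    return annual_revenue * loss_per_100ms * (added_latency_ms / 100.0)


# Hypothetical business: $50M in annual online sales, 250 ms of avoidable latency.
loss = revenue_lost_to_latency(50_000_000, 250)
print(f"Estimated annual loss: ${loss:,.0f}")  # Estimated annual loss: $1,250,000
```

Real demand curves are unlikely to be perfectly linear, so treat the output as an order-of-magnitude estimate.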

While specific medical latency data is harder to quantify, the principle remains: in time-critical applications, milliseconds matter. Add these costs to constraints on throughput, consistency and GPU utilization across the network, and the practical benefits of low-latency infrastructure for AI teams trying to win today quickly become clear.

However, most of these problems are completely avoidable. The computing infrastructure industry has stuck with old cloud models created when we only needed to load static web pages. For mission-critical applications, including fraud detection, real-time control and instant AI-assisted decisions, tolerating that much delay just isn’t workable. Many organizations end up paying three times what they should, just to try to keep performance steady.

Centralized architecture: Yesterday’s solution

Two decades ago, most digital activity was web-based, and brief lags didn’t have the same effects they do today. Hyperscale clouds made sense, clustering advanced hardware in a few spots around the world. But AI changed the equation, and today’s workloads require instant feedback and localized processing. Centralization can’t keep up.

Here’s why centralization falls short:

- Geographic bottlenecks: The most powerful GPUs are concentrated in fewer than 20 global locations, which means long delays for users elsewhere. Worse yet, some use cases simply aren’t possible, which stifles innovation.

- Resource contention: Shared infrastructure can vary in performance by as much as 60% during peak times due to congestion and noisy neighbors, forcing companies to overspend to guarantee results.

- High markups: Traditional cloud providers often charge far more than the actual cost of hardware, padding bills with unnecessary overhead and numerous ancillary charges (death by a thousand cuts).
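The geographic bottleneck can be estimated from physics alone. The sketch below computes a lower bound on round-trip time from distance, using the fact that light in optical fiber travels at roughly 200,000 km/s. The function names are illustrative, and the bound ignores routing detours, queuing and processing time, so real latency will be higher.

```python
import math

# Light in optical fiber covers roughly 200,000 km/s, i.e. about 200 km per millisecond.
FIBER_SPEED_KM_PER_MS = 200.0
EARTH_RADIUS_KM = 6371.0


def great_circle_km(lat1, lon1, lat2, lon2):
    """Haversine distance between two points on Earth, in kilometers."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlambda = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def min_round_trip_ms(distance_km):
    """Lower bound on round-trip time from propagation delay alone."""
    return 2 * distance_km / FIBER_SPEED_KM_PER_MS


# A user 10,000 km from the nearest GPU cluster cannot see less than 100 ms round trip.
print(min_round_trip_ms(10_000))  # 100.0
```

Under these assumptions, a 10ms round-trip budget limits the cluster to within about 1,000 km of the user, before any compute time is counted.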

The distributed model: A new foundation

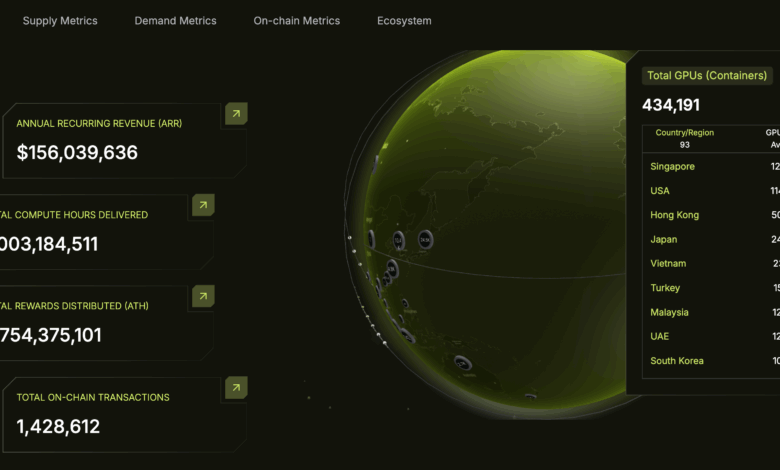

The fix? Rethink from the ground up. Distributed GPU networks connect providers worldwide to create a unified, high-grade computing fabric. This isn’t edge computing with second-tier hardware; it’s enterprise-class infrastructure, decentralized and accessible from virtually anywhere. A distributed model isn’t about compromising on GPU power, but about optimizing its location for demanding AI and gaming workloads.

With distributed networks, organizations can leverage GPU clusters close by, enabling real-time applications—from recommendation engines to autonomous controls—that weren’t practical before.

Some forecasts anticipate that total AI infrastructure and software spending may exceed $30 billion by 2027 (IDC). Meanwhile, AI-2027.com projects a 10× increase in global AI-relevant compute capacity by the same year. No public report currently shows that the majority of this new spending will go to distributed or edge deployments, or that such deployments consistently deliver roughly 60% cost savings and sub-50 ms global latency, yet both are needed to sustain the AI revolution. The pressure is compounded by demand for specific high-end GPUs such as the H200 and B200, which face significant supply chain delays in centralized models.

Benefits of a distributed infrastructure

Moving to a distributed infrastructure brings several benefits:

- Localized training: Training models close to where data is collected helps maintain privacy and compliance.

- Intelligence at the edge: Driverless cars, robotic factories and IoT devices require inference in less than 10ms, which makes distributed systems the only solution.

- Level playing field geographically: Organizations not located near centralized infrastructure clusters can access AI resources to spur innovation.

Technical challenges and how they’re solved

It’s not enough to just aggregate AI infrastructure from multiple locations. Successful distributed AI platforms need orchestration; monitoring; fair and transparent resource allocation; appropriate monetary incentives and rewards for participation; and enforcement of enterprise service levels. In addition, resources need to scale; data sovereignty needs to be enforced; hardware failures need to be detected and resolved; and providers need clear accountability with proper enforcement.
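Several of these requirements (health monitoring, data sovereignty, capacity and service levels) can be expressed as placement constraints. The following is a minimal sketch of that idea, not a description of any particular platform: `GpuNode`, `place_job` and all field names are hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable, Optional, Set


@dataclass(frozen=True)
class GpuNode:
    node_id: str
    region: str      # jurisdiction where the hardware physically sits
    rtt_ms: float    # measured round-trip time to the requester
    healthy: bool    # result of the most recent health check
    free_gpus: int   # GPUs currently available on this node


def place_job(nodes: Iterable[GpuNode], allowed_regions: Set[str],
              gpus_needed: int, max_rtt_ms: float) -> Optional[GpuNode]:
    """Pick the lowest-latency node that satisfies every constraint.

    Returns None when no node qualifies, so the caller can queue or reject the job.
    """
    candidates = [
        n for n in nodes
        if n.healthy                          # failed hardware is excluded
        and n.region in allowed_regions       # data sovereignty
        and n.free_gpus >= gpus_needed        # capacity
        and n.rtt_ms <= max_rtt_ms            # service-level latency target
    ]
    return min(candidates, key=lambda n: n.rtt_ms, default=None)


nodes = [
    GpuNode("fra-1", "EU", 12.0, True, 8),
    GpuNode("iad-3", "US", 9.0, True, 8),    # fastest, but outside the allowed region
    GpuNode("par-2", "EU", 8.0, False, 8),   # in region, but failing health checks
]
chosen = place_job(nodes, allowed_regions={"EU"}, gpus_needed=4, max_rtt_ms=20.0)
print(chosen.node_id if chosen else "no node available")  # fra-1
```

A production scheduler would also weigh price, fairness and provider reputation. This sketch only shows how hard constraints can filter candidates before latency decides among them.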

Blockchain is a natural fit. It allows a community to police and enforce the distributed network ecosystem, and it enables new participants to understand the reward flow and the return on their investment. The community can also contribute to scalability and help ensure that the entire system is just, transparent and secure. A distributed network can uphold these principles by leveraging dynamic resource allocation or blockchain-verified node performance to ensure reliability and trust in a decentralized environment.
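The core property behind verified node performance is that recorded reports cannot be altered quietly after the fact. The sketch below illustrates that property with a simple hash chain built on Python's standard `hashlib`. It is a simplification: there is no consensus, signing or networking, and the report fields and function names are hypothetical.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def record_hash(prev_hash, report):
    """Hash a performance report together with the hash of the previous entry."""
    payload = json.dumps({"prev": prev_hash, "report": report}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_report(chain, report):
    """Append a report, linking it to the entry before it."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS_HASH
    chain.append({"report": report, "prev": prev_hash,
                  "hash": record_hash(prev_hash, report)})


def verify_chain(chain):
    """Return True only if every entry still matches its recorded hash and link."""
    prev_hash = GENESIS_HASH
    for entry in chain:
        if entry["prev"] != prev_hash:
            return False
        if entry["hash"] != record_hash(prev_hash, entry["report"]):
            return False
        prev_hash = entry["hash"]
    return True


chain = []
append_report(chain, {"node": "fra-1", "uptime_pct": 99.9, "p95_latency_ms": 14})
append_report(chain, {"node": "fra-1", "uptime_pct": 97.2, "p95_latency_ms": 31})
print(verify_chain(chain))  # True

chain[1]["report"]["uptime_pct"] = 99.9  # a provider tries to rewrite history
print(verify_chain(chain))  # False
```

Because each entry's hash covers the previous hash, changing any earlier report invalidates everything after it, which is what lets outside participants audit the record.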

The competitive edge

The shift to distributed infrastructure and AI isn’t optional for organizations pursuing competitive advantage; it’s becoming the new baseline. IBM research found that 59% of enterprises already working with AI intend to accelerate and increase investment in the technology. Early adopters are seeing up to 40% gains in productivity and quality, two-thirds of executives said the technology has boosted productivity, and nearly 60% have saved costs. Companies that delay risk losing to those that move fast. The competitive edge is clear: early adopters are positioned to capture significant efficiencies and cost savings, while those slow to adopt face increasing disadvantages.

The bottom line

Although businesses have relied heavily on centralized cloud systems over the last 15 years, these systems are prohibitive for AI adoption and expansion. To remain competitive, businesses should embrace locally accessible systems with a more transparent and ubiquitous business model that fosters better innovation down the line.