Since the release of tools like ChatGPT in late 2022, AI capabilities have advanced at incredible speed and are now creating opportunities for organisations regardless of their size. We have seen a marked development from custom-built AI applications toward AI-as-a-service systems that can handle a wide range of tasks with minimal technical expertise required. This has accelerated AI adoption across sectors that were previously underserved.

AI operates across borders, but regulation is highly fragmented between countries and regions. This inconsistency creates significant barriers to innovation and adoption. Businesses want to innovate responsibly in an interconnected world but face conflicting rules and standards. The need for internationally harmonised AI standards has never been more apparent.

Diverging approaches to regulation

Major global powers have adopted distinctly different regulatory approaches to AI. The UK has positioned itself with a pro-innovation approach through its AI Regulation White Paper. This framework emphasises sector-led governance with proportionate, context-specific rules rather than blanket regulations. This approach delegates responsibility for governing AI to existing regulators who focus on applications within their regulatory remits.

The UK’s AI Opportunities Action Plan, unveiled in January 2025, further supports this approach by setting out a strategy for harnessing AI to drive economic growth while maintaining ethical safeguards.

A significant development is the UK AI Safety Institute (AISI) – recently renamed the AI Security Institute to reflect an increased focus on addressing security concerns and criminal misuse of AI. This is the first national body specifically tasked with evaluating and testing frontier AI models. It serves as an early warning system for risks and provides technical tools to inform governance and regulation.

The EU has taken a more prescriptive path. Its AI Act classifies AI systems by risk level and imposes strict requirements on high-risk applications. This horizontal approach applies across all sectors and imposes detailed compliance obligations, including bans on certain AI applications deemed to pose unacceptable risks.

Meanwhile, the US relies primarily on voluntary commitments from tech companies. This non-legislative approach promotes industry self-regulation rather than enforceable rules. In late 2023, former President Biden issued an Executive Order on AI, establishing new standards and directing federal agencies to take specific actions. However, President Trump revoked many of these policies in January 2025, in a move to minimise government intervention, boost innovation, and establish the US as a global leader in AI.

Each jurisdiction is taking a fundamentally different approach to AI governance, resulting in a regulatory landscape with sharp contrasts in philosophy and implementation. Businesses, particularly those operating across international markets, must learn to navigate these conflicting requirements while staying competitive.

The burdens of fragmentation

AI companies can face challenges navigating multiple regulatory frameworks. This problem is especially acute when they export or collaborate internationally. Recent data shows 65% of UK AI companies engage in exports – a 14% rise since 2022. The US accounts for over half of the UK’s internationally headquartered AI companies and more than three-quarters of AI-related employment, revenue, and GVA generated by international companies.

Regulatory inconsistencies can create disproportionate burdens for smaller businesses. While large corporations can dedicate substantial resources to compliance, SMEs often lack the legal expertise and financial capacity to navigate complex, sometimes contradictory requirements across multiple jurisdictions.

Regulatory uncertainty can also discourage R&D investment. Companies may hesitate to develop products that might face regulatory barriers in key markets. This could lead to a troubling scenario in which AI development concentrates in “regulatory havens” with minimal oversight – rather than where technical talent and innovation naturally flourish.

Standards as a common language

A collaborative, international approach to AI governance has an important role to play in preventing this fragmentation and ensuring responsible AI development worldwide. Such an approach would not only level the playing field for businesses but also help establish global best practices for ethical AI development and use.

Standards bodies play a crucial role in creating alignment across borders. Unlike regulations, which are mandatory and vary by jurisdiction, standards provide voluntary yet widely adopted frameworks that businesses can implement regardless of location.

The UK-hosted AI Safety Summit in 2023 marked a significant step toward global coordination. This event produced the Bletchley Declaration, a commitment by 28 countries to manage AI risks through international cooperation while supporting innovation. It also initiated agreements on AI safety testing and increased investment in AI research. These outcomes sparked further international collaboration at summits in South Korea and France.

The AI Standards Hub, launched in October 2022 as part of the UK’s National AI Strategy, is a collaborative effort between The Alan Turing Institute, BSI (British Standards Institution), and the National Physical Laboratory.

The Hub helps stakeholders navigate international AI standardisation efforts, provides resources such as databases for AI standards and policies, and offers training on AI standardisation.

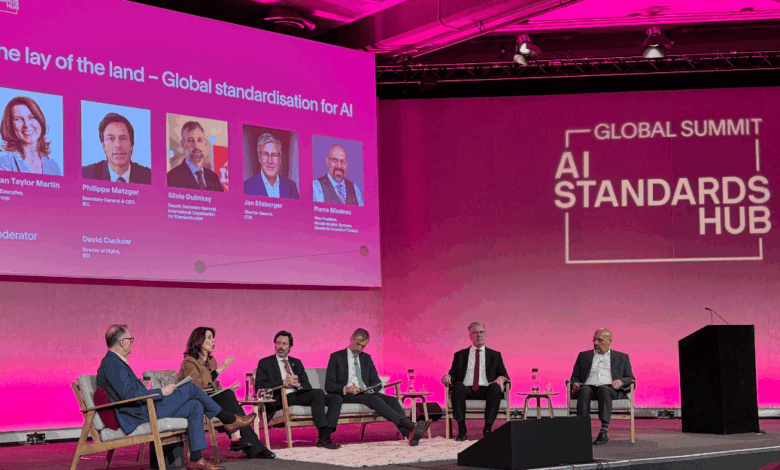

A global summit, hosted by the Hub’s partners, was held in March 2025. It brought together experts from across the international AI ecosystem to explore the evolving role of standards in relation to AI governance frameworks and emerging regulation globally.

Similarly, the BridgeAI Standards Community supports UK businesses in adopting AI responsibly and ethically. It provides insights on key standards that support the development and adoption of AI, along with research, webinars, and case studies, with a particular focus on high-potential sectors including agriculture, construction, creative industries, and transport.

Major international standards organisations – ISO, IEC, and ITU – are actively collaborating on AI standards development. They have jointly announced a 2025 International AI Standards Summit in Seoul and are building a comprehensive AI standards database. These efforts mirror successful standardisation in other technologies, such as GPS, which ensured consistent positioning accuracy worldwide and enabled interoperable navigation systems.

At their core, standards create a shared language that bridges different regulatory systems. They deliver four key benefits: compatible technologies, common ethical approaches, clear compliance steps, and stronger public trust. By addressing these fundamentals, standards can help businesses navigate complex regulations while ensuring AI development remains responsible and innovative.

A case for harmonised AI governance

International cooperation on AI standards is a significant opportunity. Without it, fragmentation will continue to impede innovation and limit AI’s potential benefits. The UK’s leadership in this space has already shown results through initiatives like the AI Safety Summit, demonstrating how targeted collaboration can produce tangible outcomes. An EU-UK panel discussion in Brussels, co-hosted by the UK Mission to the EU and the AI Standards Hub and joined by BSI, likewise centred on practical ideas to support international standards cooperation.

By building effective frameworks that span borders, we can give businesses the clarity they need, encourage responsible innovation, and build trust in AI systems. As AI capabilities continue to advance, our collective decisions about governance will determine whether we successfully balance progress with appropriate safeguards.

By working together on shared standards, we can create an environment where AI can deliver its transformative benefits – shaping society and building a resilient digital future, safely and effectively.