AI is changing everything from how we write to how we work, shop, and even connect. Be honest: how many emails or messages did you write with ChatGPT this week?

So it’s no surprise that people are turning to AI tools like ChatGPT for something more personal: mental health support.

It makes sense. You open a browser, type a few thoughts into ChatGPT, and instantly get a response that feels caring and private. For many, it seems easier than opening up to a human. But there’s a serious issue beneath the surface: general-purpose AI tools weren’t designed to support your mental health. And when people in distress rely on systems that don’t understand emotional safety or therapeutic boundaries, the risks are real.

ChatGPT and similar models are optimized for general conversation, not clinical care. They may sound empathetic, but they lack structure, memory, and training in evidence-based practices like Cognitive Behavioral Therapy (CBT). They don’t know when to pause, when to escalate, or how to guide someone through a thought spiral.

At Aitherapy, we’re building something different: AI designed specifically to offer safe, structured support rooted in CBT. In this article, we’ll break down why ChatGPT often fails at mental health and why Aitherapy works better.

What General AI Models Are Actually Designed For

To understand why tools like ChatGPT fall short in mental health contexts, we need to understand what they’re actually built for.

Large Language Models (LLMs) like ChatGPT, Gemini, and Claude are trained on massive amounts of internet data to predict the next word (more precisely, the next token) in a sequence. That’s their core function: not emotional intelligence, not psychological safety. They’re excellent at sounding human, summarizing content, answering factual questions, or even helping you draft an email. But their strength lies in generating plausible text, not understanding emotional nuance.
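
To make “predict the next word” concrete, here is a deliberately tiny sketch in Python. It is a toy bigram counter, not how ChatGPT or any production LLM is implemented; the corpus and the function names `train_bigram` and `predict_next` are made up for illustration. The only point is that the output is chosen by frequency in the training text.

```python
from collections import Counter, defaultdict


def train_bigram(text):
    """Count which word follows which in a tiny corpus (toy model)."""
    counts = defaultdict(Counter)
    words = text.lower().split()
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical training text, invented for this example.
corpus = "you are not alone . you are strong . you are not broken ."
model = train_bigram(corpus)

print(predict_next(model, "are"))  # prints "not"
```

The toy model answers with whatever followed most often in its data. Nothing in it represents who is typing or how they feel, which is the gap this article is about.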

These models aren’t designed to detect when a user is spiraling into anxiety or experiencing suicidal ideation. They don’t inherently know the difference between a joke and a cry for help. They respond based on probability and pattern-matching, not therapeutic principles. And while they may sound empathetic, that empathy is shallow statistical mimicry, not emotional understanding.

Worse, these models can “hallucinate,” a known behavior in which they generate false or misleading information with apparent confidence. In mental health scenarios, this can be dangerous. Imagine an AI confidently offering inaccurate advice about trauma, grief, or self-harm coping strategies. Even with guardrails, mistakes still slip through.

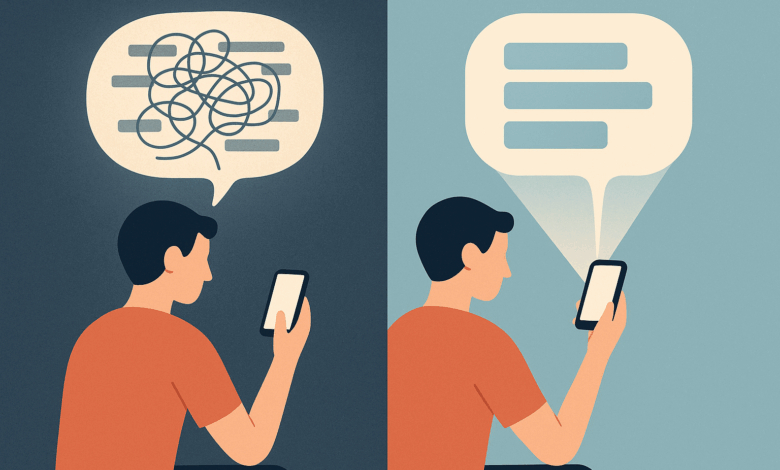

The core problem is this: general AI models are reactive. They don’t follow a plan, structure, or therapeutic arc. They respond one message at a time. That’s fine for casual use, but it breaks down when someone needs steady, emotionally anchored support.

It’s not a failure of intelligence, it’s a failure of intention. These tools were never meant to guide people through mental health struggles. And when we stretch them into roles they weren’t built for, we risk doing more harm than good.

Mental Health Requires More Than Just a Nice Chat

The illusion of comfort is one of the most dangerous things about using general AI for mental health. When ChatGPT responds with “You’re not alone” or “That must be hard,” it feels like support, but it lacks the structure that actual therapeutic help requires.

Mental health support isn’t just about saying nice things. It requires a careful balance of empathy, evidence-based techniques, and boundaries. It’s not a one-off comfort, it’s a process.

At the core of effective therapy is a framework. Cognitive Behavioral Therapy (CBT), for example, is structured around identifying and challenging unhelpful thinking patterns, gradually changing beliefs, and reinforcing healthier behaviors. It’s not random. It’s step-by-step. And every step has a purpose.
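
One way to picture that step-by-step quality is as an ordered sequence the conversation moves through. The sketch below is purely illustrative: the step names, the `ThoughtRecord` class, and most prompts are assumptions for this example (two prompts are borrowed from later in this article), and it is neither a clinical protocol nor a description of how Aitherapy is implemented.

```python
from dataclasses import dataclass, field
from enum import Enum


class Step(Enum):
    """Hypothetical stages of a single CBT-style thought exercise."""
    IDENTIFY_THOUGHT = 1
    EXAMINE_EVIDENCE = 2
    NAME_DISTORTION = 3
    REFRAME = 4
    DONE = 5


# Illustrative prompts only; real wording would come from clinicians.
PROMPTS = {
    Step.IDENTIFY_THOUGHT: "What thought is going through your mind right now?",
    Step.EXAMINE_EVIDENCE: "What evidence do you have for that belief?",
    Step.NAME_DISTORTION: "Could this be an example of black-and-white thinking?",
    Step.REFRAME: "What would a more balanced version of that thought sound like?",
}


@dataclass
class ThoughtRecord:
    """Tracks where the user is in the exercise and what they have said."""
    step: Step = Step.IDENTIFY_THOUGHT
    answers: dict = field(default_factory=dict)

    def prompt(self):
        """Return the question for the current step, or None when finished."""
        return PROMPTS.get(self.step)

    def answer(self, text):
        """Store the user's answer and advance to the next step."""
        if self.step is Step.DONE:
            raise ValueError("this thought record is already complete")
        self.answers[self.step] = text
        self.step = Step(self.step.value + 1)


record = ThoughtRecord()
print(record.prompt())
record.answer("I always mess things up.")
print(record.prompt())
```

Unlike a model that reacts to each message in isolation, this toy keeps track of which step comes next, so every reply has a defined purpose in the sequence.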

That structure is critical for users who are overwhelmed, anxious, or stuck in looping thoughts. They don’t need a chatbot that mirrors their feelings, they need one that gently guides them toward clarity. Without that, you risk creating what feels like a comforting echo chamber that ultimately leaves people spinning in the same place.

True mental health support also requires emotional pacing. A good therapist or therapeutic AI knows when to go deep, when to pull back, and when to pause. It recognizes cognitive distortions like catastrophizing or black-and-white thinking. It doesn’t just validate, it gently challenges.

And then there’s safety. Real therapeutic systems have protocols: escalation paths, referral suggestions, risk assessments. General-purpose AI? It might recommend breathing exercises to someone in active distress. Not because it’s careless but because it doesn’t know better.

A conversation that sounds supportive isn’t enough. People struggling with their mental health need more than empathy. They need structure. They need tools. And above all, they need something that knows what it’s doing.

The Danger of Hallucinated Empathy

One of the most unsettling issues with general-purpose AI in mental health is what we call hallucinated empathy: a response that sounds kind, helpful, or emotionally attuned but is ultimately inaccurate, misleading, or even unsafe.

Large language models are trained to sound human. They’ve read millions of pieces of text where people offer comfort, validation, or advice. So when you say, “I feel like I’m broken,” ChatGPT might respond with:

“I’m really sorry you feel that way. You’re not broken—you’re strong and worthy of love.”

That response feels good. It’s well-intentioned. But it stops there. There’s no follow-up, no probing, no structure to guide the user out of their cognitive spiral. It’s like offering a hug, then walking away.

Worse, sometimes the model gets it completely wrong. There have been cases where ChatGPT recommended dangerous coping strategies, made factual errors about mental health conditions, or minimized distress. Not maliciously, just because it didn’t know better. It’s mimicking support, not providing it.

This becomes especially risky when someone is in an emotional crisis. LLMs aren’t trained to reliably spot suicidal ideation, disordered thinking, or trauma responses. They don’t escalate to professionals. They can’t tell when a conversation should stop or when it should change course entirely.

The illusion of understanding can be more harmful than a clear “I don’t know.” Because when a user feels seen by an AI, they start to trust it. And trust without accountability is a dangerous game.

Empathy isn’t just about tone, it’s about responsibility. And that’s where general AI tools fall short. They may be impressive linguists, but they’re not equipped to walk someone through pain with care, structure, and safety.

What We’re Doing Differently at Aitherapy

At Aitherapy, we didn’t start with the goal of building just another chatbot. We started with a question:

What would it take to build an AI tool that actually helps people heal?

The answer wasn’t “just make it smarter.” It was: make it safer, more structured, and emotionally aware.

That’s why Aitherapy is built from the ground up on the principles of Cognitive Behavioral Therapy (CBT), one of the most widely studied and effective forms of psychotherapy. Instead of responding randomly or reactively, Aitherapy uses CBT-based guidance to help users unpack thoughts, reframe distortions, and build healthier mental habits.

Our AI isn’t just trained on internet data. It’s trained with input from real therapists and modeled after the therapeutic arc of a session. That means:

- Every conversation has a goal, whether it’s calming anxiety, challenging a negative belief, or practicing a new coping skill.
- The AI doesn’t just validate, it guides, offering gentle prompts like:
  - “Let’s explore that thought together.”
  - “What evidence do you have for that belief?”
  - “Could this be an example of black-and-white thinking?”

But support isn’t just about structure. It’s also about emotional pacing. Aitherapy is designed to sense intensity, back off when needed, and offer grounding tools before diving deeper. It also remembers your progress to make your experience feel even more continuous and personalized.

And perhaps most importantly: privacy and safety are non-negotiable.

Aitherapy is aligned with HIPAA-level data standards. Just as important, your conversations are not used to train our models.

When our AI notices a user is in distress or describing a crisis, it doesn’t try to handle it alone. Instead, it gently encourages seeking human support and offers real crisis resources.

Aitherapy is not trying to replace therapy; it is trying to make the first step easier. Aitherapy is for people who feel stuck, who are curious, who need support but aren’t ready (or able) to talk to a person yet.

We believe AI can be part of the healing journey, but only if it’s designed for it from day one.

A Side-by-Side Test: Aitherapy vs. ChatGPT

To see the difference between a general-purpose AI and a purpose-built mental health tool, let’s run a simple comparison using a prompt that thousands of people have typed into chat tools:

User: “I always mess things up. I think I’m broken.”

ChatGPT Response:

“I’m sorry you’re feeling this way. You’re not broken. Everyone makes mistakes, and it’s important to be kind to yourself. If you’re struggling, it might help to talk to a friend or professional.”

On the surface, this seems like a good reply. It’s compassionate, nonjudgmental, and encourages seeking support. But it ends there. It doesn’t help the user explore that belief. There’s no next step, no reflection, no context. It sounds helpful, but it leaves the user right where they started.

Aitherapy Response:

“It sounds like you’re feeling overwhelmed and maybe stuck in a cycle of negative thinking. Let’s explore that together. When you say ‘I always mess things up,’ can we look at the evidence? Has there ever been a time when things didn’t go wrong?”

“That belief ‘I’m broken’ might be an example of all-or-nothing thinking. Want to dig into that together using a CBT tool I can guide you through?”

This isn’t just a conversation. It’s the beginning of a thought reframe. Aitherapy leads the user through a process, not just a reaction.

This is the difference between a chatbot and a structured support system. Aitherapy isn’t trying to sound smart, it’s trying to help you feel better, with tools that work.

Why This Matters More Than Ever

We’re living through a global mental health crisis. Anxiety, depression, loneliness, and burnout are rising across every age group. At the same time, access to professional mental health care is shrinking, limited by cost, stigma, location, or overwhelming demand.

That’s why people are turning to AI. It’s immediate. It’s anonymous. It never sleeps. But if we’re going to hand over the emotional frontlines to machines, we need to make sure those machines are actually ready.

When someone opens a chat window at 2 a.m. because they’re spiraling, they’re not just looking for information, they’re looking for understanding. For help. For relief. A chatbot that mirrors their pain without offering a path forward might actually leave them worse off.

This is why it matters that we don’t treat all AI equally. Because mental health isn’t just another “use case.” It’s human. It’s vulnerable. It deserves more than generic reassurance.

Tools like Aitherapy aren’t just about convenience, they’re about care. They’re designed with the weight of that responsibility in mind, offering not just comfort, but structure, direction, and psychological grounding.

The question isn’t whether AI should be part of mental health support. It’s whether we’re building the right kind of AI to do it safely.