A decade ago, enterprise cloud computing reshaped how businesses operated. It enabled global deployment with just a few clicks and allowed infrastructure to scale on demand. Today, in mid-2025, that transformative power is no longer novel. It is the baseline. What separates forward-looking enterprises now is not the ability to deploy, but the ability to learn, adapt, and optimize continuously.

Cloud’s next evolution is defined not by elasticity but by intelligence. This is not an incremental enhancement. It represents a structural shift in how systems are designed, secured, and operated. Artificial intelligence has moved from being an enhancement to becoming the foundation.

Consider the retail sector, where AI-enhanced inventory systems can forecast demand, reroute logistics, and dynamically balance supply chains based on predictive models. In healthcare, real-time data pipelines analyze electronic medical records to detect peak diagnostic traffic and preempt disruptions. These are not speculative ideas. They are operational systems. Reliability today is measured by a platform’s intelligence.

From Monitoring to Intelligent Operations

Modern enterprises generate an immense volume of telemetry. Logs, traces, metrics, and signals stream from cloud-native workloads, APIs, containers, and edge devices. Traditional monitoring approaches rely on fixed thresholds. If a metric exceeds a limit, an alert is fired. This leads to excessive noise, slow responses, and missed root causes.

AI changes the fundamentals. Machine learning models can holistically interpret telemetry, identify evolving baselines, and detect subtle, multivariate anomalies before any visible failure occurs. Instead of reacting to known patterns, these systems anticipate deviations and intervene early.
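To make the contrast with fixed thresholds concrete, here is a minimal Python sketch of an evolving baseline. It flags a value when it strays more than `k` standard deviations from a rolling window of recent observations. The class name `RollingBaseline`, the window size, the multiplier, and the sample latencies are illustrative assumptions. A real platform would model many signals together rather than a single metric.

```python
from collections import deque
from statistics import mean, pstdev


class RollingBaseline:
    """Flags values that deviate from a rolling mean by more than k standard deviations."""

    def __init__(self, window: int = 20, k: float = 3.0, warmup: int = 5) -> None:
        self.history = deque(maxlen=window)  # oldest values fall out automatically
        self.k = k
        self.warmup = warmup

    def observe(self, value: float) -> bool:
        is_anomaly = False
        if len(self.history) >= self.warmup:  # wait for a few points before judging
            mu = mean(self.history)
            sigma = pstdev(self.history)
            is_anomaly = abs(value - mu) > self.k * sigma
        # Every value joins the window, so the baseline keeps evolving.
        self.history.append(value)
        return is_anomaly


# Hypothetical latency samples in milliseconds; the last one is a subtle spike.
latencies_ms = [100, 102, 98, 101, 99, 100, 103, 97, 100, 101, 160]
detector = RollingBaseline()
flags = [detector.observe(v) for v in latencies_ms]

# A static limit of 200 ms never fires on this series.
fixed_threshold_fired = any(v > 200 for v in latencies_ms)
```

In this sketch the spike to 160 ms is flagged against the learned baseline while the static 200 ms limit stays silent. Because flagged values still enter the window, a sustained shift eventually becomes the new normal, which is a design choice rather than a requirement.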

Image: Cloud Computing with AI

At a cloud-focused enterprise in the e-commerce space, backend services were re-architected to enable real-time invoice validation and message dispatch to tens of thousands of users. These services were designed using serverless components and stateful workflows, evolving dynamically with demand. The result was infrastructure that responded in milliseconds and adapted as operational context changed. Incident frequency dropped, and many issues were resolved before they reached human operators.

Today’s AI-native observability platforms take this further. They automatically correlate related alerts, isolate root causes, and simulate possible remediation steps based on prior outcomes. This shortens mean time to resolution and allows engineering teams to shift their focus from firefighting to building better systems.
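Alert correlation can be approximated with a very simple heuristic, sketched below in Python. Alerts that arrive close together in time are grouped, and the earliest alert in each group is treated as the probable starting point. The `Alert` type, the `correlate` function, and the 60-second window are hypothetical. Commercial platforms rely on richer signals such as service topology and learned patterns.

```python
from dataclasses import dataclass


@dataclass
class Alert:
    timestamp: float  # seconds since some reference point
    service: str
    message: str


def correlate(alerts: list[Alert], window_s: float = 60.0) -> list[list[Alert]]:
    """Group alerts that occur within window_s of the previous alert in the same group."""
    groups: list[list[Alert]] = []
    for alert in sorted(alerts, key=lambda a: a.timestamp):
        if groups and alert.timestamp - groups[-1][-1].timestamp <= window_s:
            groups[-1].append(alert)
        else:
            groups.append([alert])
    return groups


# Hypothetical incident: a database problem cascades to the API and web tier.
alerts = [
    Alert(30.0, "web", "5xx rate rising"),
    Alert(0.0, "db", "connection pool exhausted"),
    Alert(10.0, "api", "timeouts calling db"),
    Alert(500.0, "cache", "eviction rate high"),
]
groups = correlate(alerts)

# Heuristic only: assume the earliest alert in a group points toward the root cause.
probable_root = groups[0][0].service
```

Four raw alerts collapse into two incidents here, and the first incident points at the database rather than at the symptoms downstream.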

From Cost Review to Cost Intelligence

Cloud cost management has traditionally been reactive. Organizations overspend, review dashboards, identify inefficiencies, and implement fixes. Often, this happens too late to recoup losses. AI-native systems reverse this process.

Modern platforms continuously analyze usage data and detect cost anomalies in real time. They scale resources automatically to match demand, suspend idle instances, and refactor services before waste accumulates. The focus is no longer on historical reports. It is on live intervention.
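As one hedged illustration of live intervention, the Python sketch below picks out instances that look idle from recent CPU samples. The function name `find_idle`, the 5 percent threshold, and the instance identifiers are assumptions made for the example. Actually suspending an instance would go through a cloud provider's API, which is deliberately left out.

```python
def find_idle(
    cpu_samples: dict[str, list[float]],
    threshold_pct: float = 5.0,
    min_samples: int = 6,
) -> list[str]:
    """Return ids of instances whose CPU stayed below threshold_pct in every sample.

    Instances with fewer than min_samples readings are skipped, because there is
    not enough evidence to call them idle.
    """
    return sorted(
        instance_id
        for instance_id, samples in cpu_samples.items()
        if len(samples) >= min_samples and max(samples) < threshold_pct
    )


# Hypothetical CPU utilisation percentages, one reading per interval.
recent_cpu = {
    "vm-a": [1.0, 2.0, 1.0, 0.5, 2.0, 1.0],   # consistently quiet
    "vm-b": [1.0, 2.0, 40.0, 1.0, 2.0, 1.0],  # one burst of real work
    "vm-c": [1.0, 1.0],                       # too little data
}
idle_candidates = find_idle(recent_cpu)
```

Only `vm-a` is returned as a suspension candidate. The burst on `vm-b` and the short history on `vm-c` both keep them running.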

At a technology firm operating in financial services, a re-architecture initiative cut operational cloud costs by nearly half. Idle virtual machines were automatically suspended, backups consolidated, and workloads rescheduled for optimal efficiency. While not fully AI-native, this initiative was a forerunner of today’s intelligent orchestration frameworks, which adapt on the fly to both technical load and business constraints.

Advanced billing tools now integrate predictive capabilities. They model the impact of architectural changes before deployment, forecast usage spikes, and evaluate the cost implications of design decisions. Developers receive real-time alerts for inefficient queries, and CI/CD pipelines block builds that violate budget constraints. Platform teams can simulate cost profiles of new features before they are released, giving finance and engineering teams shared visibility.
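A budget check inside a pipeline can be sketched in a few lines of Python. The example estimates the monthly cost of the resources a change would deploy and reports whether it fits a budget. The function names, the hourly rates, and the 730-hour month (8,760 hours divided by 12) are assumptions for illustration. A real gate would pull prices from the provider's billing data.

```python
HOURS_PER_MONTH = 730.0  # approximate: 8760 hours per year / 12


def projected_monthly_cost(resources: list[tuple[str, float, int]]) -> float:
    """Sum hourly_rate * instance_count * hours for (name, hourly_rate, count) tuples."""
    return sum(rate * count * HOURS_PER_MONTH for _name, rate, count in resources)


def budget_gate(resources: list[tuple[str, float, int]], monthly_budget: float) -> bool:
    """Return True when the projected cost stays within the monthly budget."""
    return projected_monthly_cost(resources) <= monthly_budget


# Hypothetical deployment plan: (service name, hourly rate in USD, instance count).
plan = [("api", 0.25, 4), ("worker", 0.5, 2)]

cost = projected_monthly_cost(plan)
passes_generous_budget = budget_gate(plan, monthly_budget=1500.0)
passes_tight_budget = budget_gate(plan, monthly_budget=1000.0)
```

A pipeline step could exit with a non-zero status when `budget_gate` returns `False`, which is one way a build might be blocked before the spend occurs.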

This is cost intelligence. It is proactive, automated, and built into the development cycle.

Securing a Perimeterless Cloud

Cloud security in 2025 must address a dynamic, borderless environment. Users connect from multiple devices, across time zones and networks. APIs, containers, functions, and services each create new micro-perimeters. Traditional controls such as IP whitelists or manual port configurations are brittle and ineffective.

Artificial intelligence introduces context-aware defense. Systems trained on normal behavior can detect subtle deviations, including unusual access times, unexpected resource consumption, or geographic anomalies in usage. These deviations become triggers not just for alerts but for mitigation.
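The idea of deviations triggering mitigation can be shown with a deliberately simple rule-based sketch in Python. A learned model would replace the hand-written profile, and the names `UserProfile`, `risk_score`, and `mitigation_for`, along with the weights and outcomes, are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class UserProfile:
    """Behaviour considered normal for one user (stands in for a trained model)."""
    usual_hours: set[int] = field(default_factory=set)
    usual_countries: set[str] = field(default_factory=set)


def risk_score(profile: UserProfile, hour: int, country: str) -> int:
    """Add 1 for an unusual access hour and 2 for an unfamiliar country."""
    score = 0
    if hour not in profile.usual_hours:
        score += 1
    if country not in profile.usual_countries:
        score += 2
    return score


def mitigation_for(score: int) -> str:
    """Map a risk score to an action instead of only raising an alert."""
    if score == 0:
        return "allow"
    if score < 3:
        return "step_up_auth"  # ask for additional verification
    return "block"


profile = UserProfile(usual_hours=set(range(8, 19)), usual_countries={"DE"})

normal = mitigation_for(risk_score(profile, hour=10, country="DE"))
odd_hour = mitigation_for(risk_score(profile, hour=3, country="DE"))
odd_everything = mitigation_for(risk_score(profile, hour=3, country="BR"))
```

The point of the sketch is the last step: each score maps to a response, so an unusual login leads to extra verification or a block rather than a ticket in a queue.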

At a regulated enterprise managing sensitive workloads, anomaly detection was embedded into background job processing. In one incident, a recurring job began using more resources than usual, without failing outright. Standard monitoring missed it. An AI-powered system identified the deviation and initiated containment before the issue escalated. This averted hours of performance degradation and reduced risk exposure.

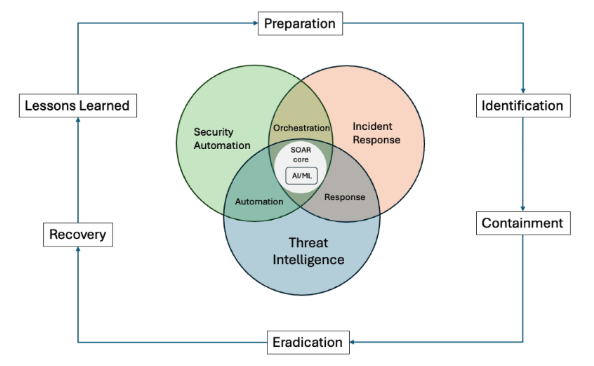

AI-enhanced security platforms now operate across identity, access, application, and infrastructure layers. They prioritize incidents based on contextual risk, not just signature matching. They can also integrate with orchestration tools to automatically initiate user verification, restrict access, or isolate workloads.

Image: Integration of AI/ML into SOAR Platforms

Beyond detection, these platforms improve transparency. AI tracks how policies are enforced, explains decisions, and generates auditable logs that simplify compliance reviews. Trust becomes traceable.

Engineers as Curators of Intelligent Systems

The role of cloud engineers is no longer confined to provisioning infrastructure. They are now responsible for shaping intelligent systems. These systems learn from feedback, adapt autonomously, and make decisions with minimal human input.

Modern engineering involves integrating anomaly detection, identifying data drift, and building safeguards into AI-influenced workflows. Engineers must understand both how the system functions and how it evolves.
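Data drift checks come in many forms. One of the simplest, sketched here in Python, measures how far the mean of recent inputs has moved from a reference sample, expressed in reference standard deviations. The function name `drift_score`, the sample values, and the alerting threshold of 3 are assumptions. Production systems often use distribution-level tests instead.

```python
from statistics import mean, stdev


def drift_score(reference: list[float], current: list[float]) -> float:
    """Shift of the current mean from the reference mean, in reference standard deviations."""
    spread = stdev(reference)  # needs at least two reference values
    if spread == 0:
        raise ValueError("reference sample has no variation; score is undefined")
    return abs(mean(current) - mean(reference)) / spread


# Hypothetical feature values seen during training and in two later windows.
training_values = [10.0, 12.0, 11.0, 9.0, 10.0, 11.0, 12.0, 10.0]
stable_window = [11.0, 10.0, 12.0, 10.0]
shifted_window = [20.0, 21.0, 19.0, 22.0]

DRIFT_THRESHOLD = 3.0  # illustrative cut-off, not a standard value
stable_drifted = drift_score(training_values, stable_window) > DRIFT_THRESHOLD
shifted_drifted = drift_score(training_values, shifted_window) > DRIFT_THRESHOLD
```

A check like this can run on a schedule against live inputs, with a drift result feeding the same safeguards that govern other automated actions.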

At firms operating in fintech and digital commerce, teams transitioned from procedural workflows to feedback-driven architectures. In one example, system optimization and modular refactoring improved backend performance by over 95 percent. These gains were not driven by new frameworks. They resulted from intentional design for adaptability, observability, and resilience.

This transition also demands new skills. Engineers must now manage data pipelines, monitor inference paths, and debug models in production. They need fluency in streaming architectures, a grasp of statistical concepts, and familiarity with model lifecycle management. Infrastructure as code is only part of the job. Infrastructure as intelligence is the emerging standard.

Engineers are not just building systems. They are curating dynamic ecosystems.

What Defines AI-Native Infrastructure

AI-native infrastructure is not created by layering intelligence atop traditional systems. It is designed from the ground up to support continuous learning, contextual behavior, and dynamic adaptation.

Key characteristics include:

- Predictive scaling that responds to forecasted demand rather than current metrics

- Explainable telemetry that clarifies what failed, why it failed, and how to resolve it

- Embedded decision support that evaluates risk before any deployment

- Adaptive service levels that adjust based on business-critical priorities

In these environments, operational data is not just monitored. It is converted into insight and action. Logs become training data. Feedback loops operate continuously. System performance is defined by the ability to improve over time, not just to stay online.
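Predictive scaling, the first characteristic listed above, can be sketched in Python as a two-step calculation: forecast the next interval's demand from recent history, then size the fleet for the forecast instead of the current reading. The linear-trend forecast, the function names, the 20 percent headroom, and the per-replica capacity are all illustrative assumptions. Real systems typically use seasonal or learned models.

```python
import math


def forecast_next(history: list[float]) -> float:
    """Extrapolate a least-squares linear trend one step past the end of history."""
    n = len(history)
    if n < 2:
        raise ValueError("need at least two observations to fit a trend")
    x_mean = (n - 1) / 2
    y_mean = sum(history) / n
    covariance = sum((x - x_mean) * (y - y_mean) for x, y in enumerate(history))
    variance = sum((x - x_mean) ** 2 for x in range(n))
    slope = covariance / variance
    return y_mean + slope * (n - x_mean)


def replicas_needed(rps: float, rps_per_replica: float, headroom: float = 1.2) -> int:
    """Replicas required to serve rps with a safety margin, never fewer than one."""
    return max(1, math.ceil(rps * headroom / rps_per_replica))


# Hypothetical requests per second over the last four intervals.
recent_rps = [100.0, 120.0, 140.0, 160.0]

predicted_rps = forecast_next(recent_rps)
reactive = replicas_needed(recent_rps[-1], rps_per_replica=50.0)
predictive = replicas_needed(predicted_rps, rps_per_replica=50.0)
```

With steadily rising traffic, sizing for the forecast asks for one more replica than sizing for the latest measurement, so capacity is in place before the load arrives.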

Resilience is measured by foresight, not by the speed of recovery.

Guardrails and Governance for Autonomous Systems

Autonomy without constraint introduces risk. Intelligent systems can misinterpret edge cases, block legitimate access, or silently introduce regressions. Guardrails are essential for safe deployment.

AI-enhanced systems must start with defined constraints. These include understanding failure modes, identifying escalation triggers, and determining when human judgment is necessary.

Examples of embedded safeguards include:

- Confidence thresholds for sensitive decisions

- Human approval for high-risk operations

- Full audit logging of automated actions and model predictions

These safeguards must be integrated into system logic, configuration, and learning models. Engineers must not only define these limits but also monitor them continuously to prevent unintended outcomes.
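The three safeguards listed above can be combined in a small decision wrapper, sketched below in Python. The class name `Guardrail`, the action names, the 0.9 confidence threshold, and the outcome labels are hypothetical and exist only to show how the pieces fit together.

```python
from dataclasses import dataclass, field


@dataclass
class Guardrail:
    min_confidence: float = 0.9
    high_risk_actions: frozenset[str] = frozenset({"delete_volume", "revoke_access"})
    audit_log: list[dict] = field(default_factory=list)

    def decide(self, action: str, confidence: float) -> str:
        """Route an automated action and record the decision."""
        if action in self.high_risk_actions:
            outcome = "needs_human_approval"  # high-risk actions always need a person
        elif confidence < self.min_confidence:
            outcome = "escalate"              # the model is not sure enough to act alone
        else:
            outcome = "auto_execute"
        # Every decision is logged, including the model's confidence.
        self.audit_log.append(
            {"action": action, "confidence": confidence, "outcome": outcome}
        )
        return outcome


guardrail = Guardrail()
routine = guardrail.decide("restart_pod", confidence=0.95)
uncertain = guardrail.decide("restart_pod", confidence=0.50)
risky = guardrail.decide("delete_volume", confidence=0.99)
```

Note that the high-risk check runs before the confidence check, so even a very confident model cannot bypass human approval for a destructive action.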

When automation is governed thoughtfully, it becomes a powerful amplifier of human insight rather than a blind operator.

From Elastic to Predictive: The New Cloud Mandate

Elasticity defined the first phase of cloud computing. Agility defined the second. In 2025, foresight defines the third.

Modern infrastructure does not simply respond. It anticipates. It prevents failures before they happen, avoids inefficiencies before they cost money, and guides decisions before the business feels an impact.

Intelligence is not an overlay. It is the architecture itself.

Organizations that build for this future are not just faster. They are smarter, more adaptable, and more resilient. They embed AI in every layer. They transform operations into learning systems. And they prepare for a world where real-time optimization and continuous improvement are not advantages. They are expectations.

AI-native cloud architecture is more than a trend. It is the strategic foundation for modern enterprise.

About the Author

Sonali Suri is a software developer with experience in designing fault-tolerant, scalable, and distributed systems for high-throughput, real-time platforms. Her work spans customer registration pipelines, messaging integrations, and distributed pricing engines. Sonali is also a published researcher on Byzantine fault-tolerant systems and scalable IoT infrastructure.

The insights I share here reflect my personal perspective and do not represent the views of any company, employer, or affiliated organization.

References:

Nama, P., & Rani, A. (April, 2024). AI-driven innovations in cloud computing: Transforming scalability, resource management, and predictive analytics in distributed systems. ResearchGate. https://www.researchgate.net/publication/385215156_AI-DRIVEN_INNOVATIONS_IN_CLOUD_COMPUTING_TRANSFORMING_SCALABILITY_RESOURCE_MANAGEMENT_AND_PREDICTIVE_ANALYTICS_IN_DISTRIBUTED_SYSTEMS

Unalp, A., & Demir, M. (May, 2024). AI-driven predictive analytics: Shaping the future of strategic decision making. ResearchGate. https://www.researchgate.net/publication/387307075_AI-Driven_Predictive_Analytics_Shaping_the_Future_of_Strategic_Decision_Making

Paul, C. (June, 2024). AI-driven cybersecurity frameworks for cloud-native applications. ResearchGate. https://www.researchgate.net/publication/391495585_AI-Driven_Cybersecurity_Frameworks_for_Cloud-Native_Applications

Architecture & Governance. (March, 2023). AI-powered enterprise architecture: A strategic imperative. Architecture and Governance. https://www.architectureandgovernance.com/artificial-intelligence/ai-powered-enterprise-architecture-a-strategic-imperative

Architecture & Governance. (January, 2024). The augmented architect: Real-time enterprise architecture in the age of AI. Architecture and Governance. https://www.architectureandgovernance.com/applications-technology/the-augmented-architect-real-time-enterprise-architecture-in-the-age-of-ai

McKinsey & Company. (February, 2024). Charting a path to the data- and AI-driven enterprise of 2030. McKinsey Digital. https://www.mckinsey.com/capabilities/mckinsey-digital/our-insights/charting-a-path-to-the-data-and-ai-driven-enterprise-of-2030