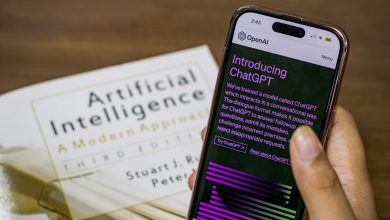

In today’s UK cybersecurity landscape, a complex and ever-expanding digital universe has many organisations looking for a magic bullet. Artificial Intelligence (AI) is often portrayed as the transformative force poised to solve modern cybersecurity challenges. Its speed, scale and ability to analyse patterns are unparalleled. But there’s one uncomfortable truth: AI on its own is not enough.

To build true cyber resilience, especially as threats become more sophisticated, the most effective approach appears to be a partnership between AI and human expertise.

AI is a force multiplier, not a replacement

We all know that security teams are inundated with data from a sprawling web of sources: endpoints, cloud systems, mobile devices, and third-party integrations. Burnout, stress and overwork are very real problems in the sector.

This is where AI really shines. It helps manage that deluge by automating basic threat detection, flagging anomalous activity, and speeding up the initial response. For instance, deep learning models can flag suspicious login patterns or unexpected system behaviour, while machine learning algorithms can distinguish between routine activity and potential compromise.
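To make the idea of flagging suspicious login patterns concrete, here is a minimal sketch. It uses a simple z-score check as a stand-in for the deep learning and machine learning models mentioned above, not an implementation of them. The function name, the sample data and the threshold of three standard deviations are all assumptions chosen for illustration.

```python
from statistics import mean, stdev


def flag_anomalous_logins(history, today, threshold=3.0):
    """Return True if today's login count deviates strongly from history.

    history:   past daily login counts for one account (hypothetical data)
    today:     the login count being checked
    threshold: number of standard deviations treated as anomalous (assumed)
    """
    if len(history) < 2:
        return False  # not enough data to estimate a spread
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # A perfectly flat history: any change at all is unusual.
        return today != mu
    return abs(today - mu) / sigma > threshold


baseline = [4, 5, 6, 5, 4, 6, 5]  # illustrative daily login counts
print(flag_anomalous_logins(baseline, 5))   # a typical day
print(flag_anomalous_logins(baseline, 40))  # a sudden burst of logins
```

A production detector would likely draw on many more signals, such as location, device and time of day, but the principle is the same: learn what routine looks like, then surface what departs from it.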

We’ve also seen that generative AI is finding a role in automating some of the more time-consuming aspects of incident documentation and reporting.

However, AI tools are not set-it-and-forget-it solutions. Their effectiveness depends heavily on how they are configured and maintained: AI systems need regular retraining and contextual tuning. Left unchecked, they may misinterpret data, act on outdated signals, or generate false alarms that add to alert fatigue rather than reducing it.

The missing link: human context

Where AI struggles is in understanding nuance. While an algorithm might identify an unusual spike in outbound data from a server, it takes human judgement to assess whether that’s a breach or a scheduled backup. Analysts bring a wealth of experience, cultural awareness, regulatory understanding, and the ability to cross-reference events with operational context.

This human oversight becomes critical in sectors such as finance, government and healthcare, where decisions carry legal and ethical weight and where missteps can have costly consequences. Analysts also play a central role in refining AI systems by providing feedback that sharpens detection models over time.
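The outbound-data example above can be sketched in code: operational context that analysts already hold, such as a backup schedule, is cross-referenced against an alert before anyone is paged. The server name, the backup window and the labels below are hypothetical. A matching window only makes a backup more plausible, since an attacker could time exfiltration to coincide with it, so this supports an analyst's judgement rather than replacing it.

```python
from datetime import datetime, time

# Hypothetical maintenance calendar: server name -> (start, end) of nightly backup.
BACKUP_WINDOWS = {
    "db-01": (time(1, 0), time(3, 0)),
}


def classify_outbound_spike(server, observed_at):
    """Label an outbound-data spike using known operational context.

    Returns "likely_backup" when the spike falls inside a recorded backup
    window for that server, otherwise "needs_analyst_review".
    """
    window = BACKUP_WINDOWS.get(server)
    if window is not None:
        start, end = window
        if start <= observed_at.time() <= end:
            return "likely_backup"
    # No recorded explanation: hand the decision to a human.
    return "needs_analyst_review"


print(classify_outbound_spike("db-01", datetime(2024, 1, 1, 2, 0)))
print(classify_outbound_spike("db-01", datetime(2024, 1, 1, 14, 0)))
```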

The power of synergy: why a hybrid approach makes sense

A hybrid cybersecurity model aims to get the best from both machines and people. AI handles routine analysis, filters low-priority alerts and accelerates triage. Human analysts step in to investigate complex scenarios, manage incident response and provide strategic input.

This collaboration offers several benefits:

- Less Noise, More Signal: By filtering out the background noise, analysts can focus their energy on the threats that genuinely matter.

- Faster, More Accurate Responses: Automation kicks off the process quickly, but human oversight ensures the decisions are sound. This keeps things moving without sacrificing quality.

- A Way to Scale: With so many security teams stretched thin, this model helps multiply the value of limited human resources, freeing them up for higher-impact work.

- Improved Team Morale: It lets analysts spend less time on repetitive tasks and more time on challenging investigative work that truly uses their skills and brain power.
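One way to picture this division of labour is a small triage routine: alerts that a model scores as low risk are logged automatically, and everything else goes to analysts with the highest scores first. This is a sketch under stated assumptions, not a reference design. The `Alert` type, the 0-to-1 score scale and the `low` cut-off of 0.2 are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Alert:
    alert_id: str
    score: float  # model-assigned risk score in [0, 1] (assumed scale)


def triage(alerts, low=0.2):
    """Split alerts into an auto-logged list and a prioritised analyst queue.

    Alerts scoring below `low` are logged without human attention; the rest
    are returned sorted by score, highest first, for analysts to investigate.
    """
    auto_logged = [a for a in alerts if a.score < low]
    analyst_queue = sorted(
        (a for a in alerts if a.score >= low),
        key=lambda a: a.score,
        reverse=True,
    )
    return auto_logged, analyst_queue


alerts = [Alert("a", 0.05), Alert("b", 0.9), Alert("c", 0.4)]
auto_logged, analyst_queue = triage(alerts)
print([a.alert_id for a in auto_logged])
print([a.alert_id for a in analyst_queue])
```

Where the cut-off sits is itself a human decision: set it too high and real threats are filtered out as noise, too low and analysts are back to wading through everything.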

Adapting to the risk landscape

The UK cyber landscape is shaped by a mix of industry-specific regulations, emerging legislation and evolving threat vectors. From supply chain risk to state-backed attacks and ransomware-as-a-service, the threat landscape continues to widen. Organisations are under growing pressure to show they’re not just capable, but also accountable.

Security strategies must reflect this complexity. A hybrid model provides flexibility: it can be tailored to organisational size, sector-specific needs and compliance requirements, while also responding to day-to-day operational realities.

Adopting a hybrid model also helps address the skills shortage facing the UK cybersecurity sector. Automating time-intensive processes reserves human capacity for where it’s most valuable: not just incident response, but also threat modelling, proactive hunting and internal security advocacy, to name a few.

Resilience needs speed and sense

AI will clearly continue to reshape cybersecurity, but rather than seeing it as a silver bullet, we should view it as one part of a wider defensive strategy. Algorithms can identify patterns and generate signals, but they don’t understand impact, intent or consequence. Only people can.

The future of cybersecurity isn’t about choosing between humans and machines. It’s about working in partnership, where each strengthens the other. In an era where threats are faster, more targeted, and increasingly powered by AI themselves, such a hybrid approach offers a realistic, achievable and pragmatic path to resilience.