Executive Summary

As artificial intelligence (AI) becomes a routine part of creative production—across music, visual design, software development, and branding—questions of ownership, attribution, and licensing have become increasingly complex. Existing copyright systems, built around human authorship, offer limited guidance when creative output involves machine-generated elements.

This paper investigates the legal, operational, and ethical implications of AI-human co-creation. It synthesizes insights from case law analysis, expert interviews, and licensing model reviews to evaluate how current frameworks are adapting to the realities of generative AI. Particular attention is given to challenges in fair use interpretation, attribution standards, and the enforceability of licensing agreements in AI-involved workflows.

Key findings include:

- The legal test for “transformative use” remains inconsistently applied across jurisdictions, creating uncertainty for global copyright compliance.

- Most current licensing agreements lack explicit clauses for AI involvement, leading to ambiguity in ownership and rights attribution.

- New licensing models—such as bespoke AI agreements and co-creation frameworks—demonstrate greater alignment with the technical and legal realities of hybrid authorship, especially when supported by blockchain and metadata tools.

The report concludes by recommending hybrid licensing systems that integrate conventional IP principles with AI-specific structures. These systems should prioritize transparent attribution, updated contract language, and shared royalty models that reflect the collaborative nature of modern content creation.

Abstract:

The integration of artificial intelligence (AI), particularly generative AI, into creative and commercial environments has introduced pressing questions about intellectual property (IP) rights, licensing practices, and the ownership of co-created content. AI systems now generate music, images, literature, and software with increasing proficiency, relying heavily on large-scale datasets that often include copyrighted materials (Vartiainen et al., 2023). This raises legal and ethical challenges concerning fair use, consent, data ownership, and attribution.

Simultaneously, as AI tools become collaborative partners in the creative process, the concept of authorship becomes blurred. Who owns content that is generated jointly by humans and machines? How should licensing frameworks evolve to address hybrid authorship and AI’s growing role in ideation and execution?

This article examines the legal, technological, and ethical challenges posed by AI-generated creativity to help shape the future of copyright governance. Drawing on the Utilitarian Theory of Copyright and the Fair Use Doctrine, it explores how to balance creative protection with access to knowledge. It also incorporates principles from Human-Computer Interaction (HCI) and Co-Creation Theory to analyze the evolving dynamic between human input and AI-generated output, raising critical questions around authorship, attribution, and ownership.

Hypothesis:

Current copyright frameworks are inadequate for AI-human co-created works. Implementing hybrid-licensing models that combine traditional copyright protections with AI-specific attribution mechanisms will better support fair ownership, legal certainty, and innovation in the creative industries.

Key Research Questions:

- To what extent does the use of copyrighted materials in AI training qualify as fair use or copyright infringement under international legal frameworks?

- How can licensing models be structured to attribute and compensate both original creators and AI collaborators fairly?

- What technological and policy mechanisms can ensure transparent attribution and legal use of copyrighted data in AI systems?

The complexity of these questions calls for an interdisciplinary approach. This study combines legal analysis, case law review, and expert insights to assess how copyright frameworks and licensing models are adapting, or failing to adapt, to the realities of AI-human co-creation.

Methodology:

This article applies a qualitative, interdisciplinary research approach:

Primary data was collected through expert interviews with IP lawyers, AI developers, licensing managers, and policymakers. Secondary sources included case law, licensing templates, and academic and policy literature. A comparative legal analysis was conducted to explore regional differences in copyright interpretation, particularly between the U.S. fair use doctrine and the EU’s more restrictive text and data mining (TDM) exceptions.

- Legal Analysis: Review of copyright statutes, recent high-profile litigation (e.g., OpenAI cases), and evolving international IP treaties

- Expert Interviews: Insights from professionals, including IP lawyers, AI developers, licensing managers, and policymakers, selected through purposive sampling

- Licensing Model Review: Examination of contract language in Creative Commons, open-source, and proprietary frameworks, focusing on attribution and rights allocation

- Textual Analysis: Coding of licensing clauses, contract language, and court documents to identify licensing gaps and attribution inconsistencies

Data-collection instruments include a semi-structured interview guide, a licensing framework analysis checklist, and document coding templates. All instruments were piloted with two industry professionals to ensure clarity and relevance.

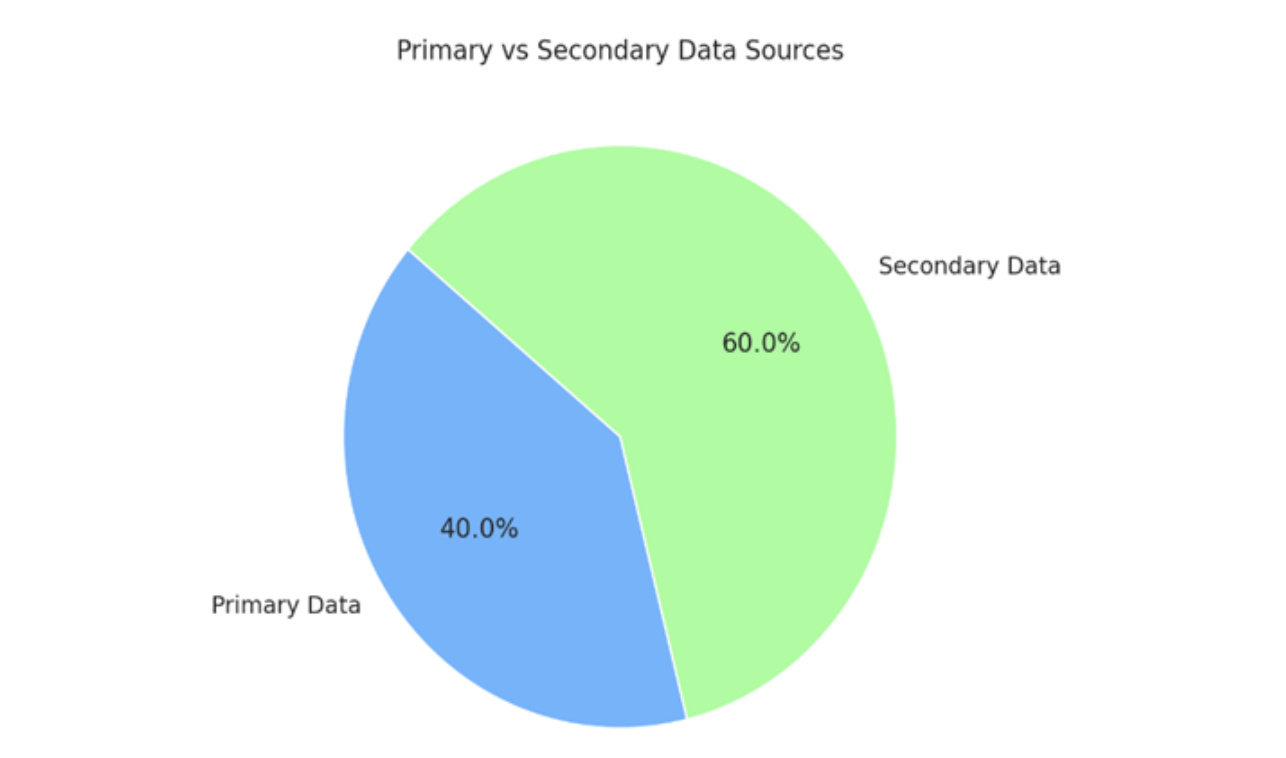

Figure 1. Primary vs Secondary Data Sources

This pie chart shows the composition of the study's data: 40% primary data (expert interviews) and 60% secondary data (case law, literature, and policy documents). The split follows the precedent of methodologies such as those used in Healthcare Bulletin (2023) and grounds the analysis of AI copyright and licensing frameworks in multiple types of evidence.

Limitations:

- Small, purposive samples of interviewees might introduce bias or limit generalizability.

- Legal interpretations may vary across jurisdictions, which can impact the consistency of analysis.

- Rapid technological change could render some findings less relevant over time.

- Limited access to proprietary licensing contracts may constrain the comprehensiveness of the review.

Literature Review:

Recent legal scholarship and regulatory publications offer critical guidance on the copyright challenges emerging in the AI era. Reports from the U.S. Copyright Office (2025) and WIPO (2024) address foundational legal questions about AI training data, authorship, and fair use, supporting both policy development and practical application.

Additionally, the Status of All Copyright Lawsuits v. AI report (2024) provides detailed updates on the cases that are shaping emerging legal standards, especially regarding authorship, consent, and hybrid licensing in generative AI.

Scholarly work has also contributed to understanding the role of AI in creativity. Wilkens, Lupp, and Urban (2024) examine agency in human–AI co-creation, focusing on ethics and value negotiation.

Zhang et al. (2021) and Kabir (2024) study how human input improves AI outputs through emotion, cognition, and context. These findings favor viewing AI as a tool and emphasize human attribution and authorship.

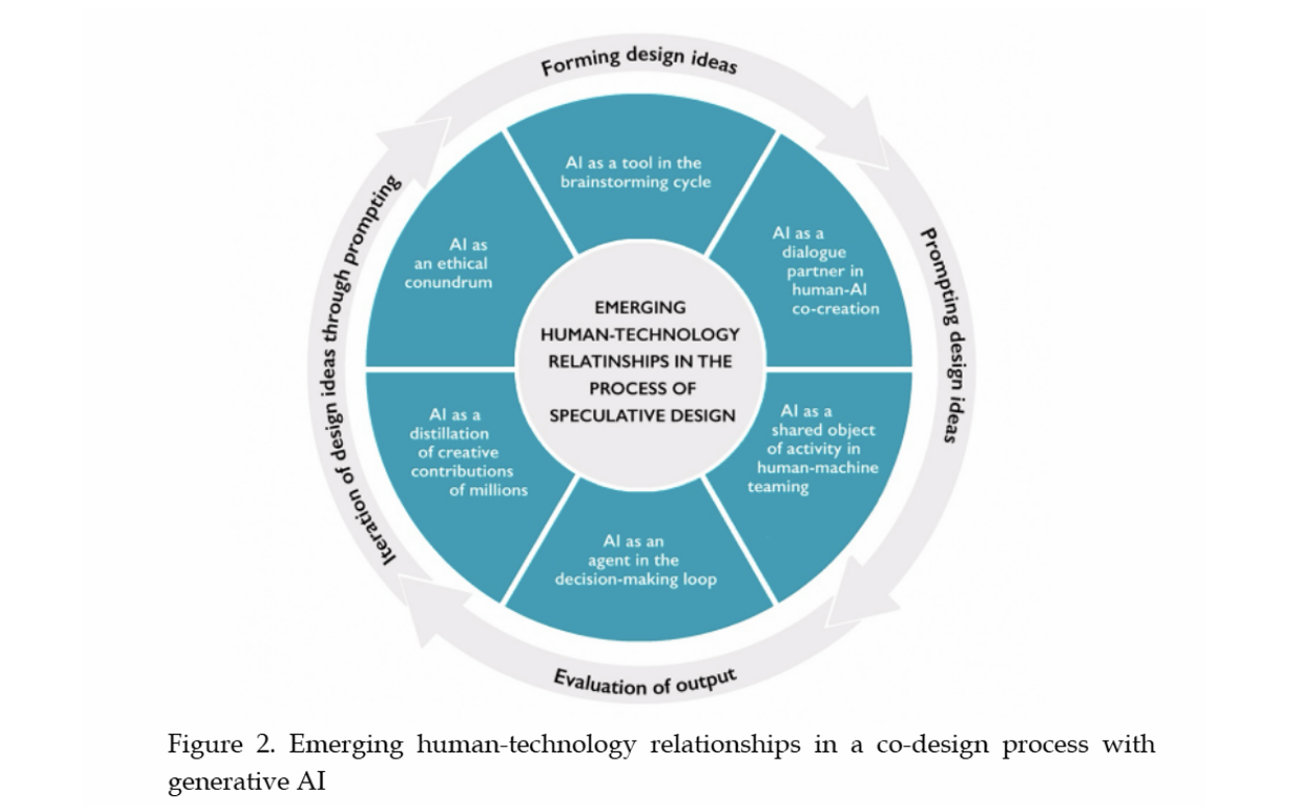

In the context of collaborative design, Vartiainen, Liukkonen, and Tedre (2023) introduce a “mosaic” perspective of co-creation, emphasizing the iterative role of generative AI over a deterministic one. At the same time, Zheng et al. (2025) explore embodied AI agents that can adapt to human emotions and personality traits, indicating a move toward more responsive and context-sensitive AI systems within creative environments.

Together, this body of literature underscores a growing consensus: while AI tools are reshaping creative industries, human authorship and transparent attribution must remain central to any legal or licensing framework. The convergence of legal, technical, and ethical scholarship points toward the urgent need for hybrid licensing models that reflect the shared nature of human–AI creativity.

Copyright and Generative AI: The Legal Battleground

Most modern generative AI models rely on datasets containing millions, or even billions, of copyrighted works scraped from the internet. This has triggered debate over whether such use qualifies as “fair use” (or “fair dealing” in the UK), or whether it requires permission and a license.

Recent legal disputes have brought these issues to the forefront. According to the U.S. Copyright Office’s 2025 report, courts are examining whether the unauthorized use of protected works for AI training reduces opportunities and value for creators. The “transformative use” standard remains central, but courts have yet to draw clear boundaries. Creators argue that unlicensed use destabilizes licensing markets, while developers argue that model training yields public benefits (U.S. Copyright Office, 2025).

Legal approaches vary internationally. Some regions are moving toward explicit licensing for training data, while others allow broad exceptions for text and data mining (TDM). For example, the UK’s statutory TDM exception is more narrowly defined than the flexible, case-by-case U.S. fair use doctrine.

The lack of consistency makes it difficult for rights holders and brands to rely on current contracts. Many agreements lack language covering the use of datasets and authorship attribution, even as relevant laws are rapidly changing. This calls for licensing models that can accommodate both human and machine involvement, establish more transparent practices for attribution and compensation, and provide guidance as the legal landscape develops.

Figure 2. Legal Complexity and Policy Impact of Key AI Copyright Cases

| Case Name | Legal Complexity | Policy Impact | Citation Frequency |
| --- | --- | --- | --- |
| New York Times v. OpenAI | High: Involves fair use, reproduction of protected content, and news licensing frameworks. | Very High: Could transform how journalistic content is licensed for AI training. | Very High: Widely cited across academic, legal, and media discussions. |
| Authors Guild v. OpenAI | High: Focuses on moral rights, derivative works, and author compensation. | High: May influence legislation on AI, author collaboration, and creative ownership. | High: Frequently referenced in publishing, legal scholarship, and policy papers. |
| Getty Images v. Stability AI | Medium: Centers on image rights, trademarks, and dataset integrity. | High: Has implications for visual media, synthetic image generation, and brand protection. | Moderate: Referenced in visual IP discourse, especially in UK/EU contexts. |

This table summarizes a legal-focus analysis of the Status of All Copyright Lawsuits v. AI report (2024), which indicates that cases involving AI training data have the greatest policy impact and are cited most frequently across academic and policy discourse. Notably, the two U.S. cases, New York Times v. OpenAI and Authors Guild v. OpenAI, rank highest in both legal complexity and policy impact, underscoring their pivotal role in shaping the evolving legal framework surrounding generative AI.

Business and IP Risks: Organizational Realities in the Age of AI

While much public discussion focuses on the legal status of AI-generated work, the commercial risks for organizations run deeper and touch daily operations. AI tools are built on datasets that mix public domain material with protected works and personal information. This raises concerns about confidential data, tracing data sources, and defining enforceable rights for AI-generated content.

The recent factsheet from the World Intellectual Property Organization (WIPO) breaks down these risks in detail, helping organizations understand and anticipate both legal and practical implications (WIPO, 2024):

| Risk Area | Challenge | Implication |
| --- | --- | --- |
| Use Cases | Defining and limiting AI’s business role | Unexpected or misused outputs |
| Contractual Terms | Lack of standardized agreements with AI vendors | Ownership gaps and liability issues |
| Training Data Issues | Unlicensed or unclear data sources | Exposure to intellectual property and privacy claims |
| Output Issues | Generation of infringing or inappropriate content | Risk of liability for downstream users |
| Regulatory Landscape | Rapidly changing rules | Increased compliance burdens |

The most pressing issues extend beyond the question of who “owns” AI-assisted work to the everyday uncertainty of operations. Misuse of trade secrets or confidential information, inconsistent vendor contracts, and disputes over ownership or royalties are increasingly common when human and AI contributions are not clearly documented.

From my experience leading licensing negotiations for global entertainment brands, the rise of AI in creative processes has added new layers to questions of attribution and royalty structure. As regulations shift and technology progresses, the organizations that respond most effectively will be those that maintain clear internal policies and consistently document AI’s involvement in their workflows. While such measures cannot eliminate risk entirely, they provide a solid basis for responsible and compliant adoption of generative AI.

To mitigate these challenges, this study recommends a multi-layered strategy:

- Digital watermarking, content hashing, and metadata tagging to trace the use of copyrighted materials

- Blockchain-based systems to ensure tamper-proof records of training data and attribution

- Standardized licensing frameworks and policy mandates requiring transparency in dataset documentation
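As a minimal illustration of the first two mechanisms, the sketch below fingerprints a work with SHA-256 and appends attribution events to a hash-chained log, so that altering or reordering any earlier record causes verification to fail. It assumes a single record-keeper and is not a blockchain: there is no distributed consensus, and the `AttributionLedger` class and the record fields (`work`, `event`, `license`) are hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def content_hash(data: bytes) -> str:
    """Return a SHA-256 fingerprint of a work's raw bytes."""
    return hashlib.sha256(data).hexdigest()


def _entry_hash(prev: str, record: dict) -> str:
    # Canonical JSON (sorted keys) so the same record always hashes the same way.
    payload = json.dumps({"prev": prev, "record": record}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


@dataclass
class AttributionLedger:
    """Hypothetical append-only log; each entry commits to the previous entry's hash."""
    entries: list[dict] = field(default_factory=list)

    def append(self, record: dict) -> str:
        prev = self.entries[-1]["entry_hash"] if self.entries else GENESIS
        entry_hash = _entry_hash(prev, record)
        self.entries.append({"prev": prev, "record": record, "entry_hash": entry_hash})
        return entry_hash

    def verify(self) -> bool:
        """Recompute the chain; any edited or reordered entry breaks it."""
        prev = GENESIS
        for entry in self.entries:
            if entry["prev"] != prev or entry["entry_hash"] != _entry_hash(prev, entry["record"]):
                return False
            prev = entry["entry_hash"]
        return True


if __name__ == "__main__":
    ledger = AttributionLedger()
    ledger.append({"work": content_hash(b"source lyric sheet"), "event": "licensed_for_training",
                   "license": "hypothetical-license-001"})
    ledger.append({"work": content_hash(b"generated draft"), "event": "output_generated"})
    print(ledger.verify())  # True
    ledger.entries[0]["record"]["license"] = "altered"
    print(ledger.verify())  # False
```

Exact hashing only matches byte-identical copies; a resized or re-encoded image produces a different hash, so in practice it would complement, not replace, digital watermarking.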

These mechanisms, combined with updated legal structures, can help align creative innovation with ethical and enforceable business practices.

Ethical Complexity: Shadowed Agency and Relational Accountability

Image: Summit Art Creations | Shutterstock

Legal risk is not the only challenge. As AI evolves from a passive tool to an active collaborator, it becomes increasingly complex to determine the extent of influence that belongs to the human contributor versus the algorithm.

The idea of “shadowed agency” helps explain this complexity. In many cases, both visible and unseen actors shape the final result: the data used to train AI models may originate from numerous sources, and the goals or preferences of software developers and organizations are often embedded deep within the system’s design (Wilkens et al., 2024).

This uncertainty prompts new questions for organizations:

- How can they reliably trace the origins of ideas or materials produced with AI assistance?

- What qualifies as meaningful human input deserving recognition or attribution?

- When disputes over credit or royalties occur, how should responsibility be determined?

Organizations are more likely to avoid disputes by establishing clear methods to record the involvement of both human and AI contributors at each stage of a project. Defined protocols for documentation and attribution help ensure transparency and can reduce confusion as AI tools become a regular part of business processes.


Commercial Impact: Who Profits from AI Co-Creation?

The labels “AI-made” and “human-made” now affect consumer trust, purchase decisions, and asset value. A field experiment with more than 128,000 consumers found that voluntary participation in AI-powered co-creation increased engagement by 6% and purchase conversion by 22%, particularly for complex, customizable products and experienced users (Zhang et al., 2021).

These findings indicate that the commercial value of AI in creative workflows is closely tied not just to technical capability, but to the level of transparency and structure within collaboration. Organizations that clearly disclose human and AI involvement and distinguish between them in licensing and attribution are better positioned to keep consumer trust and business value.

Human-AI Co-Creation Frameworks: Structuring Collaboration

Licensing frameworks must adapt to the realities of hybrid authorship. Research on augmenting co-creation with generative AI proposes a structured six-stage process, with each stage supporting different levels of control and attribution within an R&D context (Kabir, 2024):

- Preparation: Project scoping and tool selection aligned with both objectives and team skills.

- Exploration: Data collection and idea generation with generative AI.

- Collaboration: Iterative collaboration, where humans and AI refine concepts together.

- Development: Testing of prototypes by both parties.

- Implementation: Finalization and launch, optimizing for the intended context.

- Evaluation: Ongoing review and improvement based on feedback.

Throughout this process, the human-AI relationship remains dynamic. Field research on design workshops further shows that professionals often view AI as an active partner, capable of prompting new ideas and sharing responsibility for the final product (Vartiainen et al., 2023).

A comparative analysis evaluates licensing models across five key attributes: flexibility, attribution, legal certainty, ethics compliance, and AI compatibility. Bespoke AI licenses rank highest for legal and ethical alignment in hybrid authorship scenarios, while Creative Commons remains strong in flexibility but lacks AI-specific provisions. As organizations explore licensing strategies for AI-generated content, hybrid models that combine legal certainty, ethical safeguards, and flexible attribution are emerging as the most adaptable solution.

Maintaining transparent documentation throughout the co-creation process is essential for accurate attribution and effective rights management. As AI-human collaboration becomes more central to creative and commercial workflows, embedding attribution protocols into licensing practice can help organizations recognize contributions more fairly, reduce uncertainty in rights management, and adapt more readily to future changes in technology and regulation.
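To illustrate stage-by-stage documentation, the sketch below records each human or AI contribution against the six co-creation stages listed earlier (Kabir, 2024) and tallies them per stage. The `Contribution` fields and contributor names are hypothetical, and a simple count is not a measure of creative weight; deciding what counts as meaningful human input remains a legal and editorial judgment.

```python
from dataclasses import dataclass
from enum import Enum


class Stage(Enum):
    # Stage names follow the six-stage process described by Kabir (2024).
    PREPARATION = "preparation"
    EXPLORATION = "exploration"
    COLLABORATION = "collaboration"
    DEVELOPMENT = "development"
    IMPLEMENTATION = "implementation"
    EVALUATION = "evaluation"


@dataclass(frozen=True)
class Contribution:
    """One documented contribution; all field names are hypothetical."""
    stage: Stage
    contributor: str  # a person's name or an AI tool identifier
    is_ai: bool
    description: str


def summarize(log: list[Contribution]) -> dict[str, dict[str, int]]:
    """Count the human and AI contributions recorded at each stage."""
    summary = {stage.value: {"human": 0, "ai": 0} for stage in Stage}
    for entry in log:
        summary[entry.stage.value]["ai" if entry.is_ai else "human"] += 1
    return summary


if __name__ == "__main__":
    log = [
        Contribution(Stage.EXPLORATION, "image-model-x", True, "generated mood-board drafts"),
        Contribution(Stage.COLLABORATION, "lead designer", False, "selected and repainted three drafts"),
    ]
    print(summarize(log)["exploration"])  # {'human': 0, 'ai': 1}
```

Keeping the free-text `description` alongside the tally preserves the evidence a reviewer would need if credit or royalties were later disputed.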

Getting the IP Ecosystem Ready for What’s Next

As human-AI co-creation moves forward, the emergence of embodied AI agents capable of recognizing and adapting to human emotion and personality in real time is expected to shift creative authorship into new territory. These systems, which integrate emotion recognition and dynamic modeling of individual preferences, allow for more interactive and context-aware collaboration. This increased complexity raises important questions around attribution, authorship, and fair compensation (Zheng et al., 2025).

To address these developments, priorities should include:

- Attribution models that reflect the contributions of both humans and AI

- Standardized metadata to document authorship, tools, and intent

- Royalty-sharing mechanisms for multi-agent collaboration

- Transparent disclosure of generative processes
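One way to make the metadata and disclosure priorities concrete is a structured record attached to each work. The sketch below assumes a hypothetical `AuthorshipMetadata` schema that captures human authors, AI tools, intent, and a plain-language disclosure of the generative process, and flags records that list AI tools without describing how they were used. The field names are illustrative only; a production system would more likely adopt an established provenance specification than a bespoke schema.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class AuthorshipMetadata:
    """Illustrative metadata record; the field names are assumptions, not a standard."""
    title: str
    human_authors: list[str]
    ai_tools: list[str]        # names/versions of generative tools used
    intent: str                # stated purpose of the work
    generative_process: str    # plain-language disclosure of how AI was used
    license_id: str = "unspecified"

    def validate(self) -> list[str]:
        """Return a list of gaps; an empty list means the record looks complete."""
        problems = []
        if not self.human_authors and not self.ai_tools:
            problems.append("no contributors recorded")
        if self.ai_tools and not self.generative_process.strip():
            problems.append("AI tools listed without a disclosure of the generative process")
        return problems

    def to_json(self) -> str:
        """Serialize the record so it can be embedded in or attached to the work."""
        return json.dumps(asdict(self), indent=2, sort_keys=True)


if __name__ == "__main__":
    record = AuthorshipMetadata(
        title="Campaign key visual",
        human_authors=["Art director"],
        ai_tools=["image-model-x v2"],
        intent="seasonal brand campaign",
        generative_process="",
    )
    print(record.validate())  # ['AI tools listed without a disclosure of the generative process']
```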

Legal reforms around AI copyright should be developed through open, multi-stakeholder dialogue and move beyond binary debates over AI inventorship. By considering flexible attribution and modern licensing models, policymakers can build frameworks that are both effective and equitable for hybrid human-AI creativity.

Future Research Directions:

- Explore real-time, emotionally intelligent AI systems and their impact on co-authorship and agency

- Examine the effectiveness of cross-jurisdictional licensing standards, particularly in the Global South

- Investigate user-centered licensing interfaces powered by explainable AI

- Develop policy frameworks for embodied AI and generative agents in creative workflows

This research further validates the need for hybrid licensing systems that blend traditional copyright protections with AI-specific attribution and compensation mechanisms. It also recommends incorporating metadata standards and smart contract-based royalty systems into licensing templates to automate attribution and payment at scale.
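Smart contracts are typically written in on-chain languages; the Python sketch below models only the allocation rule such a contract might encode. It assumes the parties have already agreed on fractional shares (the party names are hypothetical) and splits each payment in whole cents using the largest-remainder method, so rounding never creates or loses money.

```python
import math
from fractions import Fraction


def split_royalties(total_cents: int, shares: dict[str, Fraction]) -> dict[str, int]:
    """Split a payment in whole cents according to agreed fractional shares.

    Uses the largest-remainder method, so the payouts always sum to total_cents.
    """
    if total_cents < 0 or any(share < 0 for share in shares.values()):
        raise ValueError("amounts and shares must be non-negative")
    if sum(shares.values()) != 1:
        raise ValueError("shares must sum to exactly 1")

    exact = {party: total_cents * share for party, share in shares.items()}
    payouts = {party: math.floor(amount) for party, amount in exact.items()}
    leftover = total_cents - sum(payouts.values())

    # Hand out the remaining cents to the largest fractional remainders;
    # sorted() is stable, so ties go to the parties listed first.
    by_remainder = sorted(exact, key=lambda p: exact[p] - payouts[p], reverse=True)
    for party in by_remainder[:leftover]:
        payouts[party] += 1
    return payouts
```

For example, `split_royalties(10_000, {"songwriter": Fraction(1, 2), "producer": Fraction(1, 3), "dataset_licensor": Fraction(1, 6)})` returns `{'songwriter': 5000, 'producer': 3333, 'dataset_licensor': 1667}`: the one leftover cent goes to the party with the largest fractional remainder. Exact fractions avoid the floating-point drift that could make payouts disagree with the agreed shares.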

Conclusion

The expanding role of AI in creative production is prompting a reconsideration of how authorship, rights, and compensation are defined. As human and machine contributions increasingly overlap, ownership questions are less about exclusive control and more about recognizing a range of inputs that form creative outcomes.

The research findings validate the hypothesis by demonstrating clear shortcomings in existing IP frameworks and widespread support for hybrid-licensing solutions. Legal analysis and expert interviews consistently point to the need for attribution models that acknowledge the shared nature of AI-human creativity.

This study contributes to the evolving discourse on AI copyright and licensing by proposing hybrid legal and technological models for attribution and compensation. As AI continues to shape the future of creativity, IP frameworks must evolve to reflect collaborative authorship, cross-border innovation, and the ethical use of training data.

Ultimately, the research reinforces that existing copyright systems are outdated and inadequate for governing AI-assisted creativity. By combining legal precedent, practitioner insight, and a review of licensing models, this study highlights the urgent need for adaptive, transparent, and ethically aligned solutions in the age of co-creation.

List of Abbreviations and Glossary

AI – Artificial Intelligence

A field of computer science focused on creating systems capable of performing tasks that normally require human intelligence, such as learning, reasoning, and generating content.

IP – Intellectual Property

Legal rights that protect creations of the mind, including inventions, literary and artistic works, designs, symbols, names, and images.

TDM – Text and Data Mining

A process of analyzing large datasets by extracting useful patterns, often used in AI training; subject to specific legal exceptions or licensing requirements in different jurisdictions.

HCI – Human-Computer Interaction

An interdisciplinary field studying how humans interact with computers and digital systems, relevant here in the context of human-AI collaboration.

WIPO – World Intellectual Property Organization

A United Nations agency responsible for promoting the protection of intellectual property worldwide.

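DMCA – Digital Millennium Copyright Act

A 1998 U.S. statute addressing copyright in digital environments, including anti-circumvention rules and safe harbors for online service providers. Referenced indirectly in discussions of licensing and copyright compliance in AI contexts.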

Co-Creation

A collaborative process in which humans and AI systems jointly contribute to the development of creative or functional outputs.

Fair Use Doctrine

A legal principle in U.S. copyright law that allows limited use of copyrighted material without permission for purposes such as criticism, commentary, or research, assessed case by case under four statutory factors.

Transformative Use

A key consideration in fair use analysis that asks whether the new work adds something substantially new or different from the original, altering its purpose or character.

Licensing Model

A legal framework that defines how content can be used, shared, or monetized—examples include Creative Commons, open-source licenses, and bespoke AI licenses.

Attribution

The act of acknowledging the original creator or source of content, critical in hybrid authorship scenarios involving AI-generated materials.

Shadowed Agency

A concept referring to the blurred or distributed influence of human and machine contributors in AI-generated work, making attribution and responsibility more complex.

Metadata Tagging

Embedding structured data within digital content to provide information about authorship, creation tools, licensing terms, etc.

Blockchain

A decentralized, tamper-proof digital ledger technology used to verify transactions or maintain transparent records, including for licensing and attribution in AI workflows.

References:

- United States Copyright Office. (2025, May). Copyright and Artificial Intelligence Part 3: Generative AI training [Report]. https://www.copyright.gov/ai/Copyright-and-Artificial-Intelligence-Part-3-Generative-AI-Training-Report-Pre-Publication-Version.pdf

- World Intellectual Property Organization. (2024). Generative AI: Navigating intellectual property. WIPO. https://doi.org/10.34667/tind.49065

- Wilkens, U., Lupp, D., & Urban, A. (2024, November 28). AI in Co-Creation – Shadowed Agency, Responsiveness, and Beneficiaries in Entangled Human-AI Value Systems. SSRN Electronic Journal. https://doi.org/10.2139/ssrn.5037487

- Zhang, M., Sun, T., Luo, L., & Golden, J. (2021, September 24). Consumer and AI co-creation: When and why human participation improves AI creation. SSRN Electronic Journal. https://doi.org/10.2139/ssrn.3929070

- Kabir, M. (2024, December 4). Unleashing Human Potential: A Framework for Augmenting Co-Creation with Generative AI. Proceedings of the International Conference on AI Research, 4(1), 183–193. https://doi.org/10.34190/icair.4.1.3074

- Vartiainen, H., Liukkonen, P., & Tedre, M. (2023, November 2). The Mosaic of Human-AI Co-Creation: Emerging human-technology relationships in a co-design process with generative AI. https://doi.org/10.35542/osf.io/g5fb8

- Zheng, J., Jia, F., Li, F., & Fu, Y. (2025, January 20). Embodied AI Agent for Co-creation Ecosystem: Elevating Human-AI Co-creation through Emotion Recognition and Dynamic Personality Adaptation. https://doi.org/10.31234/osf.io/krxp6

- Status of all copyright lawsuits v. AI. (2024, April 25). ChatGPT Is Eating the World. https://chatgptiseatingtheworld.com/2024/04/25/status-of-all-copyright-lawsuits-v-ai-april-25-2024