Cybersecurity has traditionally evolved through steady, deliberate steps. Organizations invested in firewalls, endpoint protection, Security Information and Event Management (SIEM), and compliance frameworks to safeguard digital assets. For years, the battle between attackers and defenders remained largely symmetrical: exploit and patch, breach and respond, adapt and counter. However, AI and machine learning (ML) are shifting that balance. Today, anomaly detection, behavioral analytics, and real-time threat scoring are essential tools, accelerating both response and resilience.

But with the rise of generative AI (Gen AI) and with the rapid advances in agentic AI, the playing field is permanently altered. The leading Large language models (LLMs) from both proprietary and open-source communities have brought contextual, human-like reasoning into the mainstream. In just over a year, millions—from developers and marketers to cybercriminals—have harnessed Gen AI. This democratization of AI has unleashed a dual-use dilemma: the same technology that drives productivity also arms cyber attackers.

Agentic AI compounds this risk. These AI agents are not just generating content; they are pursuing goals, making decisions, executing tasks across APIs, and interacting with systems and environments autonomously. This new breed of AI has the potential to automate attack chains—scanning for vulnerabilities, crafting phishing vectors, exfiltrating data, and even adapting defensive responses in real time.

This article explores strategies to redesign cyber defenses using AI, both to counter Gen AI misuse and prepare for the rise of Agentic AI.

The Evolving Threat: From Gen AI Misuse to Malicious Agents

Between 2022 and 2023, cybercriminals began using Gen AI to create polymorphic malware, a code that continuously alters to evade signature-based detection. Around the same time, a new malicious model, WormGPT, emerged on hacker forums. Trained on malware repositories and designed without the ethical safeguards present in LLMs, WormGPT enabled automated, context-aware phishing campaigns.

Threat actors even use Gen AI for impersonations. A notable incident took place in Hong Kong, where scammers used deepfake technology to replicate the likeness and voice of a company’s CFO during a video call. The deception was so convincing that it led a finance employee to transfer over $25 million to the fraudsters.

Compounding the risk is the internal use of Gen AI within enterprises. This has intensified the issue of “Shadow AI,” which often leads to data exposure due to the unsanctioned use of Gen AI tools by employees without the explicit knowledge, approval, or oversight of the organization’s IT or security teams. In 2023, a global electronics firm’s engineers inadvertently leaked confidential source code and meeting transcripts into a public Gen AI tool while debugging and summarizing notes. This incident prompted the company to ban such tools internally and invest in building secure, company-specific LLMs.

Such incidents are no longer rare. Attackers now use AI to build malicious payloads, develop adaptive ransomware, and exploit LLMs through jailbreaking, prompt injection, and prompt hijacking.

Attacks have progressed from prompt-level attacks to workflow injection. Agentic AI agents can now crawl systems, find vulnerabilities, trigger code execution, and adapt to access levels—turning a single prompt vulnerability into an orchestrated attack path.

Rethinking Cyber Defense: AI as a Force Multiplier

These challenges are forcing organizations to rethink not just their tools but their security philosophy. Traditional perimeter defenses and signature-based detection models are inadequate against dynamic, contextual, and increasingly automated attacks. In this environment, security teams must act on multiple fronts.

- Turning Gen AI and agentic AI into cyber defense allies: Defenders must embrace AI not just as a productivity tool, but as a force multiplier in cyber defense. Leading security platform vendors have integrated LLMs to automate log analysis, summarize alerts, reduce false positives, and prioritize incidents based on business risk.

For instance, AI copilots integrated in XDR can write Kusto Query Language (KQL), a query language designed for fast data exploration and analysis across large datasets, on behalf of analysts, generate incident reports, and recommend remediation steps. This was implemented by college students at the university in the state of Oregon to boost automation capabilities and quickly learn how to write more advanced KQL queries for threat hunting and build additional workbooks, accelerating both learning and operational impact.

Beyond LLMs, autonomous agents are entering defense architecture. A conversational AI assistant integrated with a security platform, for instance, enables analysts to investigate threats using natural language queries, cross-reference threat intelligence, and autonomously answer investigative questions. Through agent workflows, it can outline attack paths, suggest containment actions, and guide response within guardrails.

Startups offer AI agents that automatically triage alerts and generate investigation reports. These agents are pre-integrated with security tools and wired into collaboration platforms and enterprise productivity suites.

Others go further. Some AI agents not only identify software vulnerabilities but also automatically recommend remediation code, reducing time from detection to fix.

- Securing the AI life cycle: As AI systems become integral to enterprise workflows, security must be embedded from development to deployment and day-to-day operations. Enterprises developing internal copilots or customer-facing AI apps must treat these models as sensitive infrastructure. Threat modeling for AI systems—identifying risks such as data leakage, adversarial prompts, and model manipulation—must be standard practice. Red-teaming should include LLM-specific scenarios, while access controls must be fine-grained, with all model interactions logged and monitored.

For instance, a global financial institution has deployed an internal LLM suite for productivity and compliance while enforcing stringent internal controls with clear policies on AI use cases and tightly governed access based on business division, use-case risk profile, and policy requirements. The firm has also set up an AI governance committee to oversee AI model development and deployment.

As agent-based copilots emerge, firms will also need mechanisms to simulate agent behavior across scenarios, similar to penetration testing, but for AI logic chains.

- Extending governance from prompts to agents: Gen AI governance often focuses on prompt filtering and hallucination risks. Agentic AI governance adds another layer of intent and execution management. With LLMs often trained on enterprise data or connected to sensitive APIs, the risk of exposing proprietary information through unmonitored prompts is high.

Organizations are now beginning to tokenize or redact sensitive content in real time before it enters an LLM pipeline, and they are increasingly using governance and guardrail tools to impose content policies at inference time. These tools also enable action restriction, ensuring AI agents only operate within sanctioned workflows.

- Mitigating risk in the AI supply chain: The model supply chain itself is emerging as a new frontier of cyber risk. In early 2024, an open-source platform for sharing AI models, datasets, and applications became a focal point for such risks when over 100 malicious models were discovered on its platform. Some of these models contained backdoors capable of executing code on users’ machines, granting attackers persistent access. In contrast to traditional software bugs, model backdoors are behaviors introduced during training, fine-tuning, or via unverified dependencies, which remain dormant until activated by certain inputs.

With agent-based architecture increasingly relying on external APIs, plugins, and open tool chains, the attack surface is expanding. The equivalent of “dependency hell” now includes unverified agent tools or chainable scripts embedded in orchestration frameworks.

To address such challenges, CISOs must now track AI Bills of Materials (AIBOMs)—just like software BOMs—to document every component of their AI systems, not only for models and datasets, but also for agent tools, routing logic, memory stores, and execution permissions.

Policy Response Catches Up: Global AI Governance Accelerates

Reflecting the growing recognition of AI’s systemic impact, governments worldwide are moving from exploratory guidelines to enforceable mandates. As noted in Avasant’s Responsible AI Platforms 2025 Market Insights™, over 1,000 AI-related regulations have been enacted across 69 countries, with the US alone introducing 59 in 2024—marking a 2.5x increase over 2023. Figure 1 below presents the timeline of recent Gen AI governance policies introduced by various countries.

Figure 1: A timeline of recent national policy actions on Gen AI governance

These frameworks are becoming increasingly sector-specific and risk-tiered, emphasizing transparency, safety, and accountability. For instance, the EU AI Act, finalized in August 2024, introduces a tiered risk framework and designates general-purpose AI (GPAI) models, such as those used for image/speech recognition, audio/video generation, pattern detection, and generative applications, as posing systemic risk. These models are subject to stricter obligations, including robust documentation, incident reporting, risk and performance evaluations, adversarial testing, and detailed transparency on training data to ensure accountability and mitigate misuse.

In September 2024, China’s National Technical Committee 260 on Cybersecurity introduced an AI Safety Governance Framework, incorporating risks arising from model architecture, data misuse, and adversarial attacks, with a strong focus on national security and algorithmic transparency. Similarly, in October 2024, Japan’s METI updated its national AI strategy to enforce strict data privacy and algorithmic audit requirements for industrial AI applications.

Together, these developments—spanning both advanced and emerging economies—highlight a shared urgency to align AI innovation with societal safeguards, fairness, and responsible deployment, with many of these mandates taking effect through 2024 and 2025. Future regulations will likely require declarative transparency on what agents are allowed to do, how actions are gated, and how execution traces are logged and audited.

MSSPs Scaling Intelligent AI Across Security Functions

Managed Security Service Providers (MSSPs) are evolving rapidly to integrate intelligent automation into their offerings. Some providers have introduced a multi-agent AI system that orchestrates several specialized agents to simulate cyberattacks, detect anomalies, implement defenses, and even conduct business continuity testing.

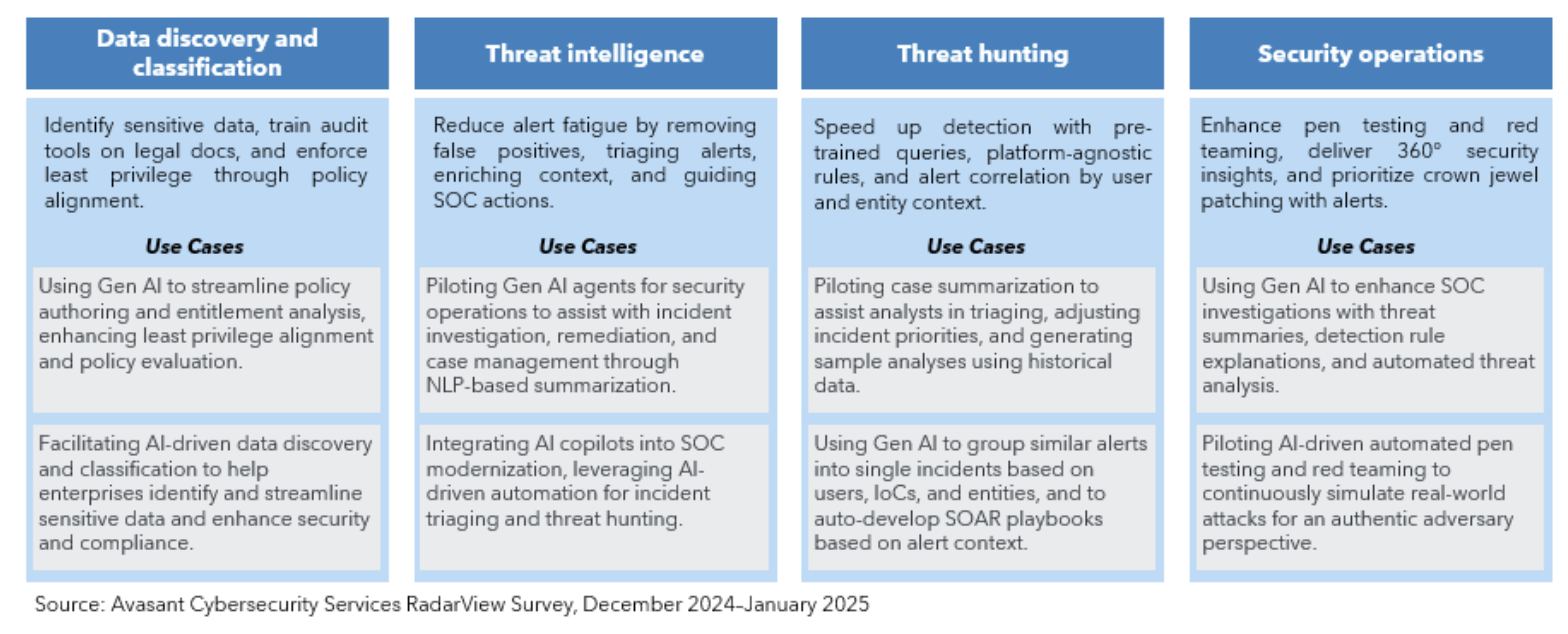

As highlighted in Avasant’s Cybersecurity Services 2025 RadarView™, leading MSSPs are leveraging Gen AI and Agentic AI across four key cybersecurity functions—data discovery and classification, threat intelligence, threat hunting, and security operations—each at varying levels of maturity, ranging from pilot initiatives to full-scale production deployments, as shown in Figure 2.

Figure 2: MSSPs advancing Gen AI and Agentic AI adoption across cybersecurity functions—from POC to production

For example, some firms are using Gen AI to streamline sensitive data classification and entitlement analysis, strengthening compliance and policy alignment. In threat intelligence, others are piloting Gen AI agents to automate case summaries and incident triage, reducing alert fatigue and accelerating SOC response. In threat hunting, AI-driven context generation and SOAR playbook automation are being adopted. On the operations side, providers are incorporating AI into penetration testing and red teaming to simulate real-world adversaries and bolster threat detection.

These use cases signal a transformative shift where Gen AI and agentic AI are no longer experimental but foundational to next-generation cybersecurity services.

Toward a Cyber-Aware, AI-Native Culture

Perhaps the most important transition is not just technological but cultural. Security teams must stop viewing AI as an external problem to defend against and start treating it as an internal capability to master. Whether it’s Gen AI shaping content or agentic AI executing tasks autonomously, the most cyber-resilient organizations will be those that build strong internal model governance, enable human-in-the-loop oversight, and train their workforce on prompt engineering, data-driven reasoning, and agent behavior validation.

AI won’t replace cybersecurity professionals. But those who harness it responsibly, at scale, and with ethical guardrails will replace those who don’t. Just as zero trust redefined network security, AI is redefining what it means to be cyber-aware, data-responsible, and digitally trusted.

We are entering a new era where defenses must be adaptive, AI-native, and human-aligned. The future of cybersecurity will not be shaped by hackers or agents but by the choices leaders make today.