AI is getting better, more visible, and more regulated, but it’s not about to take over the world.

What we’re seeing in 2025 is a mix of technical improvements, productization, real economic impact, meaningful regulatory action, and a messy social conversation about trust, safety, and jobs.

Businesses are rapidly embracing AI in workflows where they can; governments are racing to write rules; and every new model release sparks a debate about productivity gains and fair AI usage.

AI is not foolproof, but its effects are now widespread enough to touch ordinary people, companies, and democracies alike.

In this article, I’ll focus on the forces that are most likely to shape outcomes related to AI, including technology, business, and policy, and why all three of these are so important.

AI in 2025 & What It Means for You

Foundation models are the baseline. Companies released new foundation models in 2024–2025 that are better at reasoning, code, and domain tasks. OpenAI’s GPT-5 landed in summer 2025 and was rapidly integrated into consumer and enterprise products. Early reactions called it an important step, not a leap into the artificial intelligence of science fiction.

AI prototypes and specialized tools are the new way forward. Firms aren’t just experimenting. Enterprises are deploying AI in customer service, legal review, finance, and clinical workflows, often via retrieval-augmented systems that pair models with trusted data sources. This is why business forecasts point to huge productivity potential over the coming decade as these models diversify.

Job loss is a myth. Jensen Huang, in conversation with Fareed Zakaria on CNN’s GPS, has fittingly answered this modern dilemma.

“In a world of zero-sum games, if you have no more ideas and all you want to do is this, then productivity drives down, drives — you know, it results in job loss. Remember that A.I. is the greatest technology equalizer we have ever seen. It lifts the people who don’t understand technology.”

“And if you’re not sure how to use it, you ask ChatGPT, how do I use you? And how do I formulate this question? It’ll write the question nicely for you, and then you ask it to answer the question.

And so, A.I. empowers people. It lifts people. It closes the gap, the technology gap. And as a result, more and more people are going to be able to do more things. I’m certain, a hundred percent of everybody’s jobs will be changed. The work that we do in our jobs will be changed.”

Connectivity and the internet are becoming more important than ever. As AI moves from cloud prototypes to fast, responsive apps running on local devices, network performance becomes a critical factor. The right connectivity and edge strategy can make or break those use cases. Since many AI applications rely on low-latency connections, it’s worth checking how your current internet holds up. TVInternetUSA offers a practical comparison of network types and how they affect the performance of your preferred tools and applications.

Key Trends Shaping AI in the Next 2–5 Years

1) Productization (AI as an Everyday Assistant)

AI won’t replace whole professions overnight. Instead, it will be embedded into tasks like drafting, summarizing, coding, and handling support tickets.

Businesses that use AI to boost their team’s productivity (training staff & reworking processes) will see the most benefit.

2) Safety is Moving from Lab to Law

Governments are passing concrete rules. The EU’s AI Act is already in force, with staggered application dates that require governance for high-risk systems. Within the G7, Canada and the US are moving more cautiously and have so far held back from comprehensive regulatory frameworks, aiming to boost the development of AI technologies against intense competition from China.

Expect companies to chase compliance just as they chase features, and to design models that can be audited and assessed for risk before wide deployment.

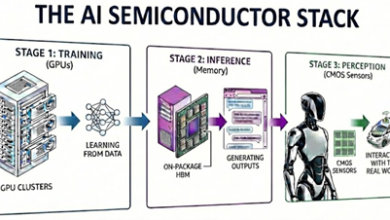

3) Specialized Models & Edge Computing

Not every application needs an enormous general model. We’ll see more specialized, smaller models optimized for tasks (legal research, radiology, supply-chain forecasting), sometimes running closer to the user on edge infrastructure.

This practice not only protects data and democratizes access but also fragments the AI ecosystem. Partnerships between compute providers, universities, and governments (e.g., public–private hubs) are crucial here.

4) Guardrails & Fact-Checking Tools Improve Slowly

Hallucinations and brittle reasoning persist. Models still invent facts and fail in predictable ways when asked about things outside their training distribution. The research community is advancing ways to reduce hallucinations and flag errors (retrieval augmentation, agent frameworks, verification layers).

Practically, we should expect incremental improvement rather than a sudden fix.
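To make the idea of a verification layer concrete, here is a minimal sketch in Python. It is a toy heuristic, not how any particular product works: it flags sentences in a model's answer that share few words with the retrieved source text. The function names, the 0.6 threshold, and the sample refund policy are all assumptions made up for illustration.

```python
import re

def tokens(text: str) -> set[str]:
    """Lowercase word tokens, skipping very short words (1-2 characters)."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if len(w) > 2}

def support_score(sentence: str, sources: list[str]) -> float:
    """Fraction of the sentence's tokens that appear in the best-matching source."""
    sent = tokens(sentence)
    if not sent:
        return 1.0  # nothing to verify
    return max((len(sent & tokens(src)) / len(sent) for src in sources), default=0.0)

def flag_unsupported(answer: str, sources: list[str], threshold: float = 0.6) -> list[str]:
    """Return the sentences whose word overlap with every source is below the threshold."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", answer) if s.strip()]
    return [s for s in sentences if support_score(s, sources) < threshold]

# Hypothetical retrieved passage and model answer
sources = ["Refunds are accepted within 30 days of delivery for unused items."]
answer = "Refunds are accepted within 30 days of delivery. Shipping is always free worldwide."

flagged = flag_unsupported(answer, sources)
print(flagged)  # ['Shipping is always free worldwide.']
```

Word overlap is crude: it misses paraphrases and can pass a sentence that reuses the right words to say the wrong thing. That is part of why progress here is incremental. Real systems tend to layer stronger checks on top, but the shape is the same: compare what the model said against what the trusted source says, and route anything unsupported to a human.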

5) Regulation Will Steer Business Behavior

Expect compliance costs, official approval processes for ‘high-risk’ systems (healthcare, hiring, critical infrastructure), and more disclosure requirements.

This will alter incentives: companies may prioritize compliance over marginal gains in raw performance. The FDA and other agencies are already issuing guidance for AI in medicine and devices.

Ethical & Societal Implications

Bias, Fairness & Access

AI systems inherit biases from data. That’s a moral issue and a practical liability because biased outputs can harm people and spark litigation or regulatory penalties.

The practical response is better data plus ongoing monitoring, but building such a framework is costly and resource-intensive.

Misinformation & Deepfakes

The tools that make convincing text, audio, and video are getting cheaper and easier to use. This heightens risks for elections, public health, and trust in media.

Countermeasures like authentication, provenance standards, watermarking, and legal liability are needed to protect the public from misinformation, propaganda, and distrust.

Concentration of Power

Powerful models remain resource-intensive to train and costly to run, so they are viable only for large companies and nations that can afford the infrastructure.

Because training and integration at scale require vast capital and infrastructure, a handful of organizations control much of the most capable AI today. This centralization of power shapes who builds, who benefits, and who sets norms within the AI race.

Jobs & Skills

AI will automate tasks, not entire jobs, but for many workers, that still means reskilling and disruption. Some sectors will see quick change, including customer support, content production, and basic coding, while others, like creative direction and complex clinical decisions, will remain human-led but augmented.

Policymakers must pair AI adoption with workforce transition programs to reduce the chances of mass unemployment.

Realistic Future Scenarios

Think of these as ‘what the next 3–7 years might look like,’ depending on choices by companies, regulators, and society:

1) The Productivity Lift

Companies embedding AI into workflows and day-to-day operations will see huge gains in productivity, particularly in knowledge gathering and simple routine operations.

McKinsey estimates that generative AI alone could add the equivalent of $2.6 trillion to $4.4 trillion in value annually to the global economy, with about 75% of that value concentrated in just four areas: customer operations, marketing and sales, software engineering, and R&D.

2) The Fragmented Safety Landscape

Regulation varies across regions: the EU has strict rules, the US is more flexible, and other countries are moving at their own pace. This creates a patchwork of compliance requirements and product differentiation problems. Safe, auditable models will come into play for regulated markets, and faster, looser models elsewhere.

It’s confusing for global companies and raises questions about controls and enforcement. So, what’s needed? Effective, centralized regulation that avoids problems on all fronts.

3) The Concentration Trap

If computing and data stay tightly concentrated and regulation lags, a few companies or states could dominate capabilities, setting norms to favor their commercial model. That risks reduced competition, privacy erosion, and governance by private actors rather than democracies.

This outcome is avoidable, but it is far from impossible.

What Business Leaders & Policymakers Should Do

If you’re building, buying, or regulating AI, here’s a smart, actionable plan that manages the risk while embracing the opportunity:

For business leaders:

- Start with problems, not models. Identify high-value tasks you can measurably improve.

- Invest in human + AI workflows. Restructure jobs so people supervise, validate, and own outcomes.

- Measure & monitor. Track accuracy, bias, and user harm as operational metrics.

- Plan for compliance. Expect rules that require documentation, testing, and sometimes third-party auditing.
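As a rough illustration of the "measure & monitor" point above, here is a minimal Python sketch that computes two operational metrics from logged predictions: overall accuracy, and the gap in positive-decision rates between groups (one simple signal of possible bias). The record format, field names, and sample data are hypothetical, and a real monitoring setup would need fairness metrics chosen for the specific use case.

```python
from collections import defaultdict

def accuracy(records):
    """Share of records where the model's prediction matched the true label."""
    return sum(r["pred"] == r["label"] for r in records) / len(records)

def selection_rate_gap(records):
    """Largest difference in positive-prediction rate between any two groups."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for r in records:
        totals[r["group"]] += 1
        positives[r["group"]] += r["pred"]  # pred is 1 (approve) or 0 (reject)
    rates = {g: positives[g] / totals[g] for g in totals}
    return max(rates.values()) - min(rates.values())

# Hypothetical log of model decisions, e.g. from a screening tool
records = [
    {"group": "A", "pred": 1, "label": 1},
    {"group": "A", "pred": 1, "label": 0},
    {"group": "A", "pred": 0, "label": 0},
    {"group": "A", "pred": 1, "label": 1},
    {"group": "B", "pred": 0, "label": 1},
    {"group": "B", "pred": 1, "label": 1},
    {"group": "B", "pred": 0, "label": 0},
    {"group": "B", "pred": 0, "label": 0},
]

print(accuracy(records))            # 0.75
print(selection_rate_gap(records))  # 0.5
```

In this made-up data the model is right 75% of the time overall, yet it approves group A three times as often as group B. Accuracy alone would hide that, which is why it helps to track both numbers side by side and review them on a regular schedule.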

For policymakers:

- Prioritize safety & transparency. Rules should require risk assessments, provenance, and human oversight for high-risk uses.

- Support public–private R&D. Shared research partnerships between governments, universities, and industry can reduce concentration. Recent initiatives are already moving in this direction.

- Fund reskilling. Pair AI growth with worker transition programs and predictable support.

What You (The Consumer) Should Do

- Always double-check important facts. AI can be extremely helpful, but it is not completely trustworthy. Use it like an assistant, not an oracle.

- Check source information. Prefer services that show sources or provide verifiable outputs, especially for health, finance, or legal advice.

- Expect more disclosure. Good companies will tell you when AI is used and how decisions are made.

Final Verdict: Tempered Optimism is the Key

AI is not a singular phenomenon to fear or worship. It’s a suite of technologies that is shaped by investment and infrastructure, and at the same time is constrained by policy and human judgment.

The most plausible near-term outcomes are meaningful productivity gains and convenience for many, with real and addressable risks in specific domains, and a shifting regulatory landscape that will increasingly shape how productivity, capability, and convenience are delivered.