We’ve been relying on AI in cybersecurity for a while now. It runs quietly in the background—scanning patterns, flagging anomalies, and helping us spot things faster than we ever could on our own. Most days, it just works. But every now and then, it doesn’t give us the full story.

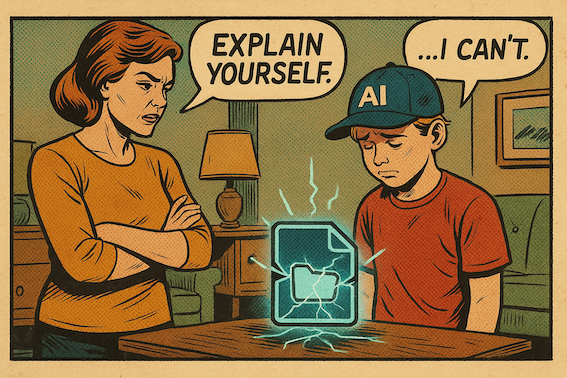

Recently, for instance, a file was flagged with no signature match and no known threat, just a system alert marking it as suspicious. When I asked why, no one could explain. “It looked off to the model,” someone replied. That was it. And the model was probably right. But the moment we can’t explain why something was flagged, we lose part of what makes AI genuinely useful. If I can’t explain it, I can’t trust it: not fully, not in front of a customer, and not in a crisis. This is where explainability comes in, not as a buzzword or a feature request, but as a way to keep us connected to the tools we already depend on.

What Changed When We Gave Up the Rules

Traditional security tools follow hard-coded logic. Modern AI models, by contrast, infer patterns from vast datasets, which makes their internal reasoning difficult to trace.

Classic rule-based systems were easy to follow. You could read the logic line by line and understand exactly why something was flagged. With modern machine learning, and deep learning in particular, the logic is buried in layers of learned statistical weights. It’s not just that we didn’t write the rules; it’s that no one did. The model learned them from data on its own.
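Here is a minimal Python sketch of the kind of hard-coded logic described above, to make the contrast concrete. The `FileEvent` fields, the rule names, and the placeholder hash are all hypothetical and invented for illustration. The point is only that every flag carries the name of the rule that produced it, so “why was this flagged?” always has an answer.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class FileEvent:
    # Hypothetical fields, chosen only to illustrate rule-based detection.
    sha256: str
    writes_to_protected_dir: bool
    has_valid_certificate: bool


# Placeholder "known bad" hash set; a real tool would load signature feeds.
KNOWN_BAD_HASHES = {"0" * 64}

# Each rule is a human-written (name, predicate) pair, so the logic is fully readable.
RULES: List[Tuple[str, Callable[[FileEvent], bool]]] = [
    ("known-malware-hash", lambda e: e.sha256 in KNOWN_BAD_HASHES),
    ("unsigned-write-to-protected-dir",
     lambda e: e.writes_to_protected_dir and not e.has_valid_certificate),
]


def evaluate(event: FileEvent) -> List[str]:
    """Return the name of every rule that fired; the list doubles as the explanation."""
    return [name for name, predicate in RULES if predicate(event)]


suspicious = FileEvent(sha256="ab" * 32, writes_to_protected_dir=True,
                       has_valid_certificate=False)
print(evaluate(suspicious))  # ['unsigned-write-to-protected-dir']
```

A learned model has no equivalent list of fired rules to read back. That is the gap post-hoc explanation tools try to fill.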

So, when we ask, “Why did the system flag this?” it often can’t tell us. The model may be statistically accurate, but the rationale behind its decision remains inaccessible without post-hoc tools.

Security teams have always needed to justify their actions, whether to customers, auditors, or even to themselves. Now, we might have a system that sees more than we do but offers no way to explain what it saw.

Building transparency isn’t about resisting automation; it’s about maintaining accountability for the systems we operate.

Why Explainable AI Was Never Optional

Explainable AI didn’t appear because ChatGPT made headlines. The term gained wide currency around 2016, when DARPA announced its Explainable AI (XAI) program, but its roots go back much further. In the 1980s, researchers in human-computer interaction emphasized that systems should reflect how humans think in order to foster understanding and trust.

In cybersecurity, that need surfaced early. Once AI systems began making real-time decisions, including flagging threats, blocking access, and triggering responses, analysts needed more than just output. They needed rationale.

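Some teams responded by using inherently interpretable models such as decision trees or rule-based engines. Others turned to post-hoc tools such as SHAP (SHapley Additive exPlanations) and LIME (Local Interpretable Model-agnostic Explanations) to explain predictions from complex models. SHAP uses Shapley values from cooperative game theory to assign each input feature a contribution score. LIME fits a simple, interpretable surrogate model that mimics the complex model in the neighborhood of one specific prediction.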
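The Shapley idea behind SHAP can be shown on a toy example. The sketch below computes exact Shapley values for a hypothetical four-feature scoring function. `toy_model` and its feature names are invented for illustration and do not reproduce any real detector. It is also a simplification: “missing” features are replaced with a single baseline value, whereas the SHAP library typically uses background data and faster, model-specific algorithms.

```python
from itertools import combinations
from math import factorial
from typing import Callable, Dict, Set

Features = Dict[str, float]


def toy_model(x: Features) -> float:
    """Hypothetical 'maliciousness' score with one interaction term."""
    return (0.1
            + 0.4 * x["rare_api_sequence"]
            + 0.3 * x["protected_write"]
            + 0.2 * x["rare_api_sequence"] * x["obfuscated"]
            - 0.05 * x["valid_cert"])


def shapley_values(model: Callable[[Features], float],
                   instance: Features, baseline: Features) -> Features:
    """Exact Shapley values by enumerating every coalition of the other features."""
    names = list(instance)
    n = len(names)

    def value(coalition: Set[str]) -> float:
        # Features in the coalition take the instance's values; the rest stay at baseline.
        mixed = {k: instance[k] if k in coalition else baseline[k] for k in names}
        return model(mixed)

    phi: Features = {}
    for i in names:
        others = [k for k in names if k != i]
        total = 0.0
        for size in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n! for coalitions of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                total += weight * (value(s | {i}) - value(s))
        phi[i] = total
    return phi


flagged = {"rare_api_sequence": 1.0, "protected_write": 1.0,
           "obfuscated": 1.0, "valid_cert": 1.0}
baseline = {k: 0.0 for k in flagged}
phi = shapley_values(toy_model, flagged, baseline)
print({k: round(v, 3) for k, v in phi.items()})
# {'rare_api_sequence': 0.5, 'protected_write': 0.3, 'obfuscated': 0.1, 'valid_cert': -0.05}
```

Two properties are worth noticing. First, the attributions sum to `toy_model(flagged) - toy_model(baseline)`, which is 0.85 here. Second, the interaction term’s 0.2 is split evenly between `rare_api_sequence` and `obfuscated`. Exact enumeration needs 2^(n-1) coalitions per feature, which is one reason practical tools approximate.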

These tools won’t reconstruct the model’s full logic, but they do highlight the factors that most influenced a given outcome, enabling faster triage and accountability.

And in cybersecurity, that’s often enough.

What AI Explanations Actually Look Like in Security Tools

Let’s go back to that flagged file that didn’t match any known malware signature. With explainability built in, the alert becomes more than just noise. It becomes a lead.

A tool like SHAP might show:

- API call pattern (+0.42): Rare sequence similar to ransomware.

- Unauthorized access (+0.31): Attempted write to protected directories.

- Binary structure (+0.18): Matches known obfuscation patterns.

- Valid certificate (–0.05): Slightly reduced model confidence.
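Before attribution scores reach an analyst, it helps to rank them and turn them into short statements. Below is a minimal sketch that assumes the scores arrive as a plain mapping from feature name to contribution. The `summarize` function and this input format are hypothetical and do not represent any specific product’s output. It uses the values from the example above.

```python
from typing import Dict, List


def summarize(attributions: Dict[str, float], top_k: int = 3) -> List[str]:
    """Rank features by absolute contribution and describe the direction of each."""
    ranked = sorted(attributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    lines = []
    for name, score in ranked[:top_k]:
        if score == 0:
            lines.append(f"{name} did not change the score")
        else:
            direction = "raised" if score > 0 else "lowered"
            lines.append(f"{name} {direction} the score by {abs(score):.2f}")
    return lines


# Scores taken from the example above.
example = {
    "API call pattern": 0.42,
    "Unauthorized access": 0.31,
    "Binary structure": 0.18,
    "Valid certificate": -0.05,
}
for line in summarize(example):
    print(line)
print(f"Net push toward 'malicious': {sum(example.values()):+.2f}")
```

In SHAP specifically, contributions are additive. They sum to the difference between this prediction and the model’s base value (its average output over the background data). The +0.86 is therefore a shift relative to that baseline, not a probability on its own.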

These aren’t rules anyone wrote. They’re patterns the model learned, surfaced just clearly enough to investigate, explain, or challenge. With this kind of context, you can make decisions faster and with more confidence. After all, you’re not trying to audit the full system; you’re trying to make a call.

While explainable AI doesn’t remove uncertainty, it does give your team a way to move forward instead of sitting in doubt.

What You Can (and Can’t) Trust in AI Models

Most people associate AI with generative tools like ChatGPT. These fast, fluent, and opaque models are built for output, not for clarity. Even their creators can’t fully trace how they arrive at a particular sentence or suggestion.

Explainable AI is different. It doesn’t generate content; rather, it helps clarify decisions made by systems that classify, detect, and predict. And in cybersecurity, that difference matters.

Because the question isn’t just “What did the system decide?” It’s “Why?”

If you aren’t building models yourself but are leading a security team, managing operations, or evaluating vendor tools, here’s what you can still do:

- Assume generative AI is a black box. Don’t rely on it for decisions where traceability is required.

- Ask your vendors real questions. Do their models expose confidence scores or explanation layers? If not, why not?

- Explore built-in explanations. Microsoft Sentinel, Splunk, and other platforms now include these features, though you might need to dig.

- Push for explainability when the stakes are high. This matters in compliance, customer-facing alerts, and post-mortems.

- Start small. Even basic access to feature attribution can accelerate investigations and justify response decisions under pressure.

You don’t need to become an AI expert to use it responsibly. But you do need to keep asking the right questions.

Case Study: Vastav AI — Enhancing Trust through Explainable AI

In early 2025, Zero Defend Security introduced Vastav AI, billed as India’s first deepfake detection platform. Vastav AI is designed to verify the authenticity of digital media and combines deep learning and forensic techniques to analyze image, audio, and video files.

Although it isn’t formally categorized as explainable AI (XAI) in the academic sense, Vastav illustrates what explainability looks like in practice. Its core strength lies in making its assessments understandable and actionable for human users. Its key features include:

- Heatmap Visualizations: Highlight manipulated regions in a file, providing visual cues that pinpoint where anomalies occurred.

- Confidence Scoring: Assigns a likelihood score to each file, quantifying the system’s assessment of authenticity.

- Metadata and Forensic Insights: Surfaces inconsistencies in timestamps, digital signatures, and file attributes to support traceable conclusions.

- Technical Reporting: Breaks down entropy analysis and manipulation probabilities so analysts can clearly see what influenced decisions.
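Entropy analysis is a common forensic signal in general. It is not specific to Vastav AI, and how Vastav computes it isn’t described here. As a generic sketch: the Shannon entropy of a file’s bytes (or of a window of them) ranges from 0 to 8 bits per byte. Unusually high values often point to compressed, encrypted, or obfuscated content. Computing it per window gives a crude one-dimensional “heatmap” of where a file looks unusual. The window size and threshold below are arbitrary illustrative choices.

```python
import math
from collections import Counter
from typing import List, Tuple


def shannon_entropy(data: bytes) -> float:
    """Bits per byte: 0.0 for constant data, up to 8.0 when all 256 values are equally common."""
    if not data:
        return 0.0
    n = len(data)
    # Sum p * log2(1/p) over the byte values that actually occur.
    return sum((c / n) * math.log2(n / c) for c in Counter(data).values())


def entropy_profile(data: bytes, window: int = 256) -> List[Tuple[int, float]]:
    """(offset, entropy) for each non-overlapping window of the input."""
    return [(off, shannon_entropy(data[off:off + window]))
            for off in range(0, len(data), window)]


def high_entropy_regions(data: bytes, window: int = 256,
                         threshold: float = 7.0) -> List[int]:
    """Offsets of windows whose entropy exceeds an illustrative threshold."""
    return [off for off, h in entropy_profile(data, window) if h > threshold]


# 512 zero bytes followed by 512 bytes in which every value appears equally often.
sample = b"\x00" * 512 + bytes(range(256)) * 2
print(entropy_profile(sample))       # [(0, 0.0), (256, 0.0), (512, 8.0), (768, 8.0)]
print(high_entropy_regions(sample))  # [512, 768]
```

On its own, high entropy also flags perfectly legitimate compressed archives and encrypted files. That is why a signal like this is usually combined with others rather than trusted alone.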

By delivering transparent outputs in a format that security teams can interpret and trust, Vastav AI shows that practical clarity doesn’t always require formal frameworks to be effective. It offers the kind of visibility and context that are essential in high-stakes scenarios, reinforcing the role of interpretability in real-world cybersecurity applications.

Where Explainable AI Falls Short—and Still Helps

On paper, explainable AI promises a trifecta: transparency, faster decision-making, and increased operational trust. But in practice, it comes with trade-offs.

SHAP and LIME approximate the model’s thinking; they don’t replicate it. The result is a simplified story, not the full internal logic. While this can be enough, it may also give a false sense of clarity.
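To see how an approximation can mislead, here is a deliberately tiny LIME-style sketch in Python. The one-feature `black_box` model, the sampling spread, and the kernel width are all hypothetical choices. Real LIME works on interpretable feature representations with regularized regression, so treat this only as an illustration of the core idea: perturb around one input, weight samples by proximity, and fit a linear model to the results.

```python
import math
import random
from typing import Callable, Tuple


def black_box(x: float) -> float:
    """Hypothetical nonlinear model: a logistic 'suspicion' score over one numeric feature."""
    return 1.0 / (1.0 + math.exp(-(x - 5.0)))


def local_surrogate(model: Callable[[float], float], x0: float,
                    n_samples: int = 500, spread: float = 1.0,
                    kernel_width: float = 0.75, seed: int = 0) -> Tuple[float, float]:
    """Fit y ~ a + b*x around x0 by weighted least squares (a 1-D LIME-style toy)."""
    rng = random.Random(seed)
    xs = [x0 + rng.gauss(0.0, spread) for _ in range(n_samples)]
    ys = [model(x) for x in xs]
    # Samples closer to x0 get more weight (Gaussian-style proximity kernel).
    ws = [math.exp(-((x - x0) ** 2) / kernel_width ** 2) for x in xs]
    sw = sum(ws)
    mx = sum(w * x for w, x in zip(ws, xs)) / sw
    my = sum(w * y for w, y in zip(ws, ys)) / sw
    cov = sum(w * (x - mx) * (y - my) for w, x, y in zip(ws, xs, ys))
    var = sum(w * (x - mx) ** 2 for w, x in zip(ws, xs))
    b = cov / var
    a = my - b * mx
    return a, b


a, b = local_surrogate(black_box, x0=4.0)
for x in (4.0, 4.5, 9.0):
    print(f"x={x}: model={black_box(x):.3f}  surrogate={a + b * x:.3f}")
```

Near `x0`, the straight line tracks the model closely, which is what makes the explanation useful. Far from it, the same line predicts a score above 1.0 for an output that can never exceed 1.0. This is a small-scale version of the false sense of clarity described above.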

The bigger risk is confidence without understanding. If users misread an explanation, or treat it as absolute truth, they can come to over-rely on it. There are technical costs as well. Computing explanations can slow down real-time systems, and exposing them can leak details about the model that adversaries might exploit.

Still, that doesn’t mean we should walk away from such transparency. It just means we should treat explainable AI like any other security control: with clear goals, known limits, and thoughtful design.

Because what we’re really building isn’t perfect understanding. It’s better questions, asked earlier.

A More Informed Way to Work With AI

AI isn’t going anywhere. In cybersecurity, it’s already an indispensable tool, sorting through noise, detecting patterns, and moving faster than we can.

But speed and power aren’t enough. We need systems we can understand. More importantly, we need to know when we can trust them—and when to stop and ask why.

That’s what explainability offers: not full transparency, not perfect reasoning. Just a way back into the loop.

It’s not about replacing AI. It’s about keeping humans in the room when it matters most. Because if we can’t explain what the system did, we can’t defend it. And in cybersecurity, that’s not a risk worth taking.