For a long time, artificial intelligence has been defined by its separate modalities. Text-based Large Language Models (LLMs) have made remarkable progress and can now write prose and code fluently. At the same time, computer vision models have become very good at recognizing objects, generating realistic images, and analyzing video, in some tasks approaching human-level accuracy. These single-mode systems have changed the world, but a new class of system is now emerging: multimodal AI.

This isn’t a small change; it’s a major shift in how AI perceives and interacts with the world. Multimodal models take in data from sensors, images, audio, and text, among other sources, and analyze it together, which brings them closer to how people make sense of their surroundings. The future of AI isn’t about excelling at one thing; it’s about understanding context and being aware of what is happening around it.

What Multimodal AI Can Do

Multimodal AI is powerful because it can combine different data streams into a fuller picture of a situation. Think of a person trying to make sense of something complicated. We don’t rely only on what we read or see; we also use spoken words and context to help us understand. Multimodal AI models are designed to do the same thing, and people are already using them in real life. A self-driving car can do more than see other cars and read road signs. It can also use data from LiDAR and radar sensors to estimate distance, use audio signals to detect sirens, and process GPS data in real time. This combination helps it make better and safer decisions.
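
To make the idea of combining data streams concrete, here is a minimal sketch of one simple approach, often called late fusion: each modality produces its own confidence score, and the scores are merged with a weighted average. The class name, the function name, the scores, and the weights are all hypothetical values chosen for illustration. Real driving systems are far more sophisticated than this.

```python
from dataclasses import dataclass


@dataclass
class ModalityReading:
    name: str      # which sensor produced the reading
    score: float   # estimated probability (0 to 1) that an emergency vehicle is nearby
    weight: float  # how much this modality is trusted (hypothetical value)


def fuse_scores(readings):
    """Combine per-modality scores into one score using a weighted average."""
    total_weight = sum(r.weight for r in readings)
    if total_weight == 0:
        raise ValueError("at least one reading needs a non-zero weight")
    return sum(r.score * r.weight for r in readings) / total_weight


# Illustrative numbers only: the microphone hears a siren clearly,
# while the camera and LiDAR are less sure.
readings = [
    ModalityReading("camera", 0.40, 1.0),
    ModalityReading("audio", 0.90, 2.0),
    ModalityReading("lidar", 0.50, 1.0),
]

fused = fuse_scores(readings)
print(f"fused confidence: {fused:.3f}")
```

In this sketch the audio signal pulls the combined score well above what the camera alone would report, which is the basic benefit the paragraph above describes: no single sensor has to be right on its own.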

In healthcare, a multimodal system can look at a patient’s medical history, X-rays or MRIs, and even how the patient sounds when talking to a doctor. By using these different pieces of information together instead of relying on just one, the AI can give a more detailed and better-informed diagnostic recommendation.

Customer service is improving too. A chatbot could not only answer text questions but also look at pictures or videos that a customer sends. A user could send a picture of a broken part and describe the problem at the same time. This would let the AI quickly work out what was wrong and offer a specific fix, which is much better than a text-only interface.

The Next-Generation Business Advantage

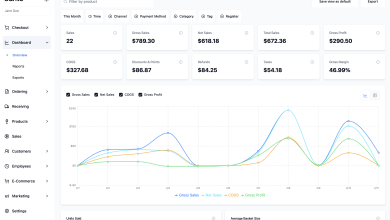

Business leaders can get ahead of the competition by using multimodal AI. Companies that use these systems can connect social media posts, consumer reviews, and product usage statistics to extract more information from unstructured data. This helps them understand market trends and public opinion, and it can also help operations run more smoothly. Businesses benefit most from AI tools that turn complicated information into a clear, actionable picture.

In manufacturing, AI can make predictive maintenance more accurate by combining a machine’s sensor data with video feeds of it in operation and its maintenance logs. And as AI becomes more common in everyday life, multimodal interfaces will make the user experience feel more natural.

The Challenges Ahead

There are still obstacles on the way to widespread use of multimodal AI. To combine different types of data properly, these systems need a lot of computing power and advanced data alignment methods. It also gets harder to make sure that AI is used ethically when every data source can introduce its own bias. Even with these problems, the path is clear. As researchers improve the underlying architectures and the cost of computing goes down, multimodal AI will go from being a specialized tool to a foundational technology. We are moving beyond the age of AI models that only use text or images. For AI, it will no longer be enough to know a few isolated facts; it will need to understand the whole web of information that makes up our world.
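
To give a sense of what data alignment means in practice, here is a minimal sketch of one basic technique: matching samples from two streams by nearest timestamp. It assumes both streams carry timestamps in milliseconds and that the second stream is sorted. The function name, the sample values, and the tolerance are hypothetical, and production systems typically rely on more advanced methods than this.

```python
from bisect import bisect_left


def align_nearest(reference_times, other_times, tolerance):
    """For each reference timestamp, return the index of the closest
    timestamp in other_times, or None if nothing is within tolerance.

    other_times must be sorted in ascending order.
    """
    matches = []
    for t in reference_times:
        pos = bisect_left(other_times, t)
        # The nearest value is either just before or just at the insertion point.
        candidates = [i for i in (pos - 1, pos) if 0 <= i < len(other_times)]
        best = min(candidates, key=lambda i: abs(other_times[i] - t), default=None)
        if best is not None and abs(other_times[best] - t) <= tolerance:
            matches.append(best)
        else:
            matches.append(None)
    return matches


# Illustrative timestamps in milliseconds.
video_frames = [0, 500, 1000, 2000]
sensor_samples = [20, 480, 970, 1450]

pairs = align_nearest(video_frames, sensor_samples, tolerance=100)
print(pairs)  # [0, 1, 2, None]
```

The last video frame gets no match because no sensor sample falls within 100 ms of it. Deciding what to do with gaps like that is part of what makes alignment hard at scale.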