AI is reshaping industries and placing data centre infrastructure under intense pressure to adapt. McKinsey projects that data centre capacity will nearly triple by 2030, with AI workloads accounting for approximately 70 percent of that growth. Meanwhile, Bain & Company predicts that the market for AI-related hardware and software could grow by 40 to 55 percent annually, reaching between $780 billion and $990 billion by 2027.

While these figures illustrate the scale of the transformation underway, not every promise of AI is rooted in operational practicality. To move past the hype, we must identify where AI is already delivering real benefits and understand which infrastructure upgrades are needed to support its continued, responsible adoption.

From Monitoring to Meaning

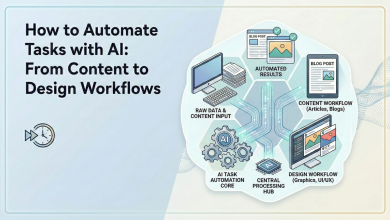

AI holds clear potential to improve how digital infrastructure is managed, especially when applied with intention. One of the most immediate benefits lies in how it simplifies access to complex information. By enabling natural language interactions within Data Centre Infrastructure Management (DCIM) platforms, operators can now query system status, retrieve metrics or model scenarios without navigating dense dashboards or relying on technical search commands. This not only improves usability but also accelerates decision-making in time-sensitive environments.

At the same time, data centres are experiencing a surge in instrumentation and data velocity, with more devices streaming telemetry more frequently than ever before. Machine learning models are well suited to sifting through this constant flow of information in near real time, while large language models (LLMs) and other generative AI tools can act as intuitive query layers on top, helping operators cut through the noise and surface the insights that matter most.

AI is also showing real value in anomaly detection and in incident and alert management. Machine learning models trained on sensor and historical event data help teams triage alerts more efficiently, reduce false positives and identify anomalies earlier. These capabilities support predictive maintenance strategies, which in turn reduce downtime, extend asset life and allow engineering teams to shift their focus from reactive troubleshooting to proactive optimisation.
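To make the idea of flagging readings against a learned baseline concrete, the sketch below applies a simple rolling z-score to a single telemetry stream, such as rack inlet temperature. It is a deliberately basic statistical stand-in, not one of the trained machine learning models described above, and the function name, window size, threshold and minimum-deviation floor are all illustrative assumptions.

```python
from collections import deque
from statistics import mean, stdev


def flag_anomalies(readings, window=12, threshold=3.0, min_sigma=0.1):
    """Return indices of readings that deviate sharply from the recent baseline.

    Illustrative sketch: the defaults for window, threshold and min_sigma are
    assumptions chosen for this example, not recommended settings.
    """
    baseline = deque(maxlen=window)  # most recent "normal" readings
    flagged = []
    for i, value in enumerate(readings):
        if len(baseline) == window:
            mu = mean(baseline)
            # Floor the deviation so a perfectly flat baseline does not make
            # every tiny fluctuation look anomalous (or divide by zero).
            sigma = max(stdev(baseline), min_sigma)
            if abs(value - mu) / sigma > threshold:
                flagged.append(i)
                continue  # keep the outlier out of the baseline
        baseline.append(value)
    return flagged


# Hypothetical rack inlet temperatures in °C, one reading per five minutes.
readings = [24.0, 24.2, 23.9, 24.1, 24.0, 24.3, 23.8, 24.1, 24.0, 24.2, 23.9, 24.1, 31.0, 24.0]
print(flag_anomalies(readings))  # → [12]
```

Here the 31 °C reading at index 12 stands out against a baseline of roughly 24 °C. Because flagged values are kept out of the baseline, a genuine sustained shift (for example, after a cooling change) would keep being flagged until the baseline is reset, so a real deployment would need a policy for that case.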

Laying the Groundwork for Predictive Planning

Despite recent advances, some of the more ambitious claims, such as autonomous workload orchestration or AI-led capacity planning, remain out of reach. These use cases require deep, real-time awareness of the physical environment, including how workloads affect power draw, cooling load and spatial constraints. Without accurate, up-to-date infrastructure data, AI-generated recommendations can become incomplete or misleading. This is particularly risky in high-density environments where rack power consumption can exceed 60 kilowatts and the margin for error is exceptionally narrow. In these scenarios, explainability becomes essential. Teams need to trace how AI reaches its conclusions and confirm that its outputs align with actual operating conditions. Until this level of transparency is delivered consistently, human oversight remains a necessary safeguard.

Data completeness is another challenge. AI may have access to only part of the relevant inputs, and legal restrictions on data processing can further narrow the range of recommendations it can make. Data inertia creates its own constraints: systems holding petabytes of information cannot easily relocate workloads without significant cost and complexity. Health and safety considerations impose further limits in mission-critical environments. For these reasons, AI is best applied as a tool that performs limited, well-tested actions, while larger interventions with safety, data or legal implications remain under human oversight. Workflows that keep people in the decision loop will remain essential.

Why Visibility Still Comes First

Before AI can deliver insights, it needs a strong foundation of accurate data. Clean inventories, precise environmental monitoring and up-to-date connectivity records are prerequisites for intelligent analysis. DCIM platforms that offer real-time visibility into infrastructure health allow AI to surface the right insights, highlight potential issues earlier and reduce the need for manual investigation.

This type of visibility also supports teams in increasingly hybrid and distributed environments. High staff turnover and varied experience levels make it difficult to rely on institutional knowledge alone. When DCIM tools incorporate intuitive workflows, in-platform assistance and context-sensitive guidance based on documented best practices and tailored standard operating procedures, they empower users to operate more independently and confidently.

The Energy Cost of AI

AI conversations often focus heavily on capability, with far less attention paid to cost, particularly environmental cost. The growth of generative models is driving a sharp increase in power and cooling requirements, with some next-generation racks drawing over 100 kilowatts. Many older facilities were not designed to handle these thermal and electrical loads, which makes upgrades both urgent and expensive.

What was once a technical problem has now become an environmental one. If the energy demands of AI are left unchecked, they risk putting sustainability targets out of reach at a time when regulatory pressure is increasing. Across the EU, UK and North America, operators are being asked not only to maintain uptime but also to demonstrate compliance with energy efficiency and emissions goals.

Using AI to Support Sustainability Goals

Despite these challenges, AI can also be a powerful ally in sustainability efforts. Intelligent monitoring can detect underused or “zombie” servers, airflow bottlenecks and inefficient cooling zones that consume power without adding value. Addressing any one of these may seem minor, but the savings become meaningful when applied across an entire facility.
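A first-pass zombie-server check can be as simple as flagging machines that stay near-idle on both CPU and network over an observation window. The sketch below assumes a hypothetical per-server summary record (`ServerSample`) and illustrative thresholds of 5 percent CPU and 10 kbps of network traffic; real criteria would depend on the workloads and monitoring data available.

```python
from dataclasses import dataclass


@dataclass
class ServerSample:
    """Hypothetical per-server averages over an observation window (e.g. 30 days)."""
    name: str
    avg_cpu_percent: float
    avg_network_kbps: float
    power_watts: float


def find_zombie_candidates(servers, cpu_max=5.0, net_max=10.0):
    """Return names and combined power draw of servers idle on both CPU and network.

    The thresholds are illustrative assumptions; a server is only a candidate,
    to be confirmed by a person before it is switched off.
    """
    candidates = [
        s for s in servers
        if s.avg_cpu_percent < cpu_max and s.avg_network_kbps < net_max
    ]
    return [s.name for s in candidates], sum(s.power_watts for s in candidates)


fleet = [
    ServerSample("app-01", 42.0, 850.0, 310.0),
    ServerSample("legacy-07", 1.2, 0.4, 180.0),
    ServerSample("batch-03", 3.0, 1200.0, 250.0),  # low CPU but still busy on the network
    ServerSample("test-11", 0.8, 2.1, 150.0),
]
print(find_zombie_candidates(fleet))  # → (['legacy-07', 'test-11'], 330.0)
```

In this made-up fleet, the two candidates draw 330 W between them, or about 2,890 kWh a year if left running. Requiring both signals to be low avoids flagging machines like `batch-03`, and treating the output as candidates for review rather than automatic shutdown keeps people in the decision loop.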

AI can also streamline compliance by automating reporting and benchmarking energy performance. As disclosure requirements grow more detailed, operators will need instant access to metrics like Power Usage Effectiveness (PUE), thermal performance and idle capacity. In this context, DCIM platforms are evolving into operational engines for sustainability governance, bridging the gap between technical insight and environmental accountability.
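PUE is conventionally defined as total facility energy divided by the energy delivered to IT equipment over the same period, so values closer to 1.0 mean less overhead from cooling, power conversion and similar loads. The sketch below computes it per month and for a whole reporting period; the function names and the `(label, total_kwh, it_kwh)` input shape are assumptions for illustration.

```python
from __future__ import annotations


def power_usage_effectiveness(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """PUE = total facility energy / IT equipment energy for the same period."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    if total_facility_kwh < it_equipment_kwh:
        raise ValueError("total facility energy cannot be less than IT energy")
    return total_facility_kwh / it_equipment_kwh


def pue_report(monthly: list[tuple[str, float, float]]) -> tuple[dict[str, float], float]:
    """Per-month PUE plus an energy-weighted PUE for the whole period.

    `monthly` holds hypothetical (label, total_kwh, it_kwh) meter readings.
    """
    per_month = {
        label: round(power_usage_effectiveness(total, it), 2)
        for label, total, it in monthly
    }
    # Divide summed energy rather than averaging the monthly ratios.
    period = power_usage_effectiveness(
        sum(total for _, total, _ in monthly),
        sum(it for _, _, it in monthly),
    )
    return per_month, round(period, 2)


monthly = [("Jan", 150_000, 100_000), ("Jul", 90_000, 50_000)]
print(pue_report(monthly))  # → ({'Jan': 1.5, 'Jul': 1.8}, 1.6)
```

The period figure divides summed energy rather than averaging the monthly ratios. A simple average would give 1.65 here, weighting the lighter July month as heavily as January.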

Moving Forward with Purpose

AI is not a silver bullet for the data centre’s most pressing problems, but it is a valuable tool for improving how existing systems are used. Its greatest impact today lies in refining day-to-day workflows, speeding up access to information and making planning decisions more precise.

Looking ahead, the biggest gains are likely to come not from fully autonomous facilities, but from hybrid approaches that pair intelligent systems with skilled human oversight. As AI tools become more embedded in infrastructure operations, success will depend on how clearly they support visibility, how effectively they reduce operational friction and how well they balance performance with sustainability.

Meeting rising demand will require smarter infrastructure, not just more of it. The path forward is not about chasing complexity but about implementing intelligence in ways that are explainable, trustworthy and grounded in real-world constraints. That is the kind of AI the data centre industry truly needs.