As large language models (LLMs) grow smarter and AI agents gain more tools and capabilities, the responsible development of these technologies becomes paramount. Having worked extensively in both industry and academia, I have observed a significant divide in how responsible AI is prioritized.

Introduction: Academia vs. Industry Perspectives on Responsible AI

Academia tends to approach responsible AI with an idealistic mindset. Students and researchers are taught to embed ethical, legal, and safety considerations into AI systems from day one and throughout the development process. This approach is valuable because it holds AI to the highest standard. However, it is often impractical in industry settings, where financial pressures and product deadlines demand rapid development and deployment.

In contrast, industry practitioners view responsible AI through a more pragmatic lens. They often see it as a checkbox or a compliance exercise—something to be addressed only when explicitly required by customers, investors, or partners. This can lead to the neglect of critical ethical and safety concerns until after a product is released, sometimes resulting in harm or loss of trust.

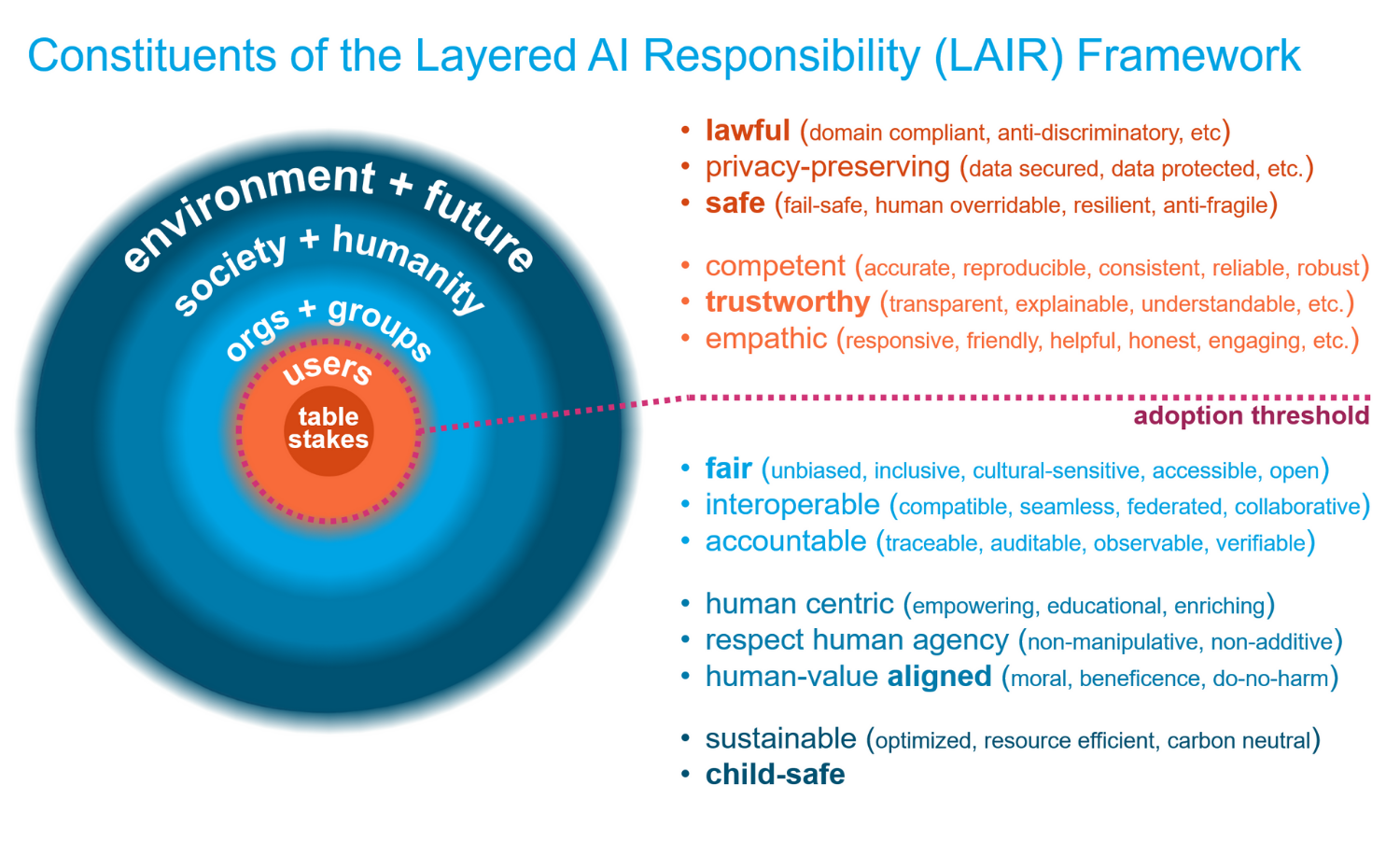

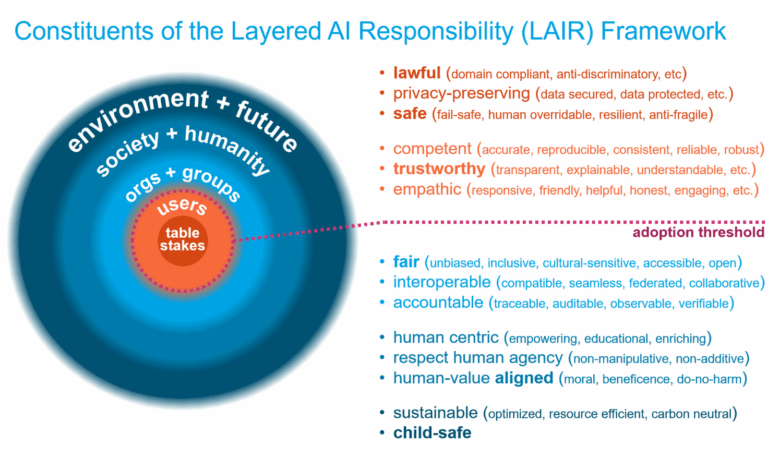

To bridge this gap, I developed the Layered AI Responsibility (LAIR) Framework, a practical guide designed to help AI vendors focus on the right responsibilities at the right time. Its layered, staged approach offers the best of both worlds: industry teams can allocate resources efficiently while still attaining academic-level standards of responsible AI for their products.

The Layers of the LAIR Onion

The LAIR Framework is structured like an onion, with layers representing different levels of responsibility that AI developers should address at different stages. This approach enables teams to focus on foundational requirements early on and progressively take on broader responsibilities as the AI matures and its scope expands.

The framework divides AI responsibility into five layers:

- Layer 1 (the core layer): The Table Stakes

- Layer 2: Responsible for the Users

- Layer 3: Responsible for Organizations and Groups

- Layer 4: Responsible for Society and Humanity

- Layer 5: Responsible for the Planet and Our Future

The inner layers (layers 1–2) consist of requirements that must be met before reaching the adoption threshold, that is, before an AI product is considered viable and adopted by users. Beyond that point, the outer layers (layers 3–5) address responsibilities that grow with the AI’s impact and scope.

Since most reputable AI companies have their own responsible AI frameworks, it is worth noting that the LAIR framework aligns well with these existing models. They may use slightly different terms to describe the components, but many of the core ideas are essentially the same. In the figure above, the words in parentheses are terms that other frameworks have used to describe similar requirements.

Layer 1: The Core Table Stakes

At the core of the LAIR framework are the table stakes. These are non-negotiable requirements that every AI product must satisfy:

- Lawful: Responsible AI must abide by the governing laws wherever they apply. Other frameworks might use terms like compliance, legality, or legitimacy. Some even explicitly highlight anti-discrimination, since most of the free world has laws that prohibit discrimination. These specifics are covered when we ensure our AI is lawful.

- Privacy Preserving: AI systems must protect user data and respect privacy. This involves data security, anonymization, and compliance with privacy standards. Other frameworks have described this requirement using terms like data security, data protection, anonymity, or more generally, privacy and security.

- Safe: Responsible AI must be safe. While safety can mean different things in different contexts, here it refers to the presence of guardrails that prevent catastrophic failure modes. This could mean having human overrides, robust backup systems for when AI fails, or designing AI to fail gracefully. Terms like fail-safe, resilience, and anti-fragility capture this idea.

AI products that fail to meet these core requirements cannot be considered minimally viable. No user or customer would trust or pay for AI that is illegal, invades privacy, or is unsafe. Practically no one will use it, so the rest of the framework doesn’t even matter.

Layer 2: Responsible for the Users

Once the core table stakes are met, AI developers must focus on responsibility toward the users. This layer includes three key requirements.

- Competent: To be responsible to the user, AI must perform well at its intended task. Whether predicting outcomes or recommending actions, the AI’s output must reach a performance threshold that delivers real value. While perfection is not required, the AI must be reliable enough to justify continued use.

Deploying an AI tool that is not competent at its intended task is irresponsible to the user. Many users may rely on these AI tools to make important decisions at work or in their personal lives. Some of these decisions may be high-stakes or even mission-critical. When the AI is not competent enough, the resulting suboptimal decisions could lead to job loss or a degraded livelihood.

- Trustworthy: Many factors contribute to trust in AI, and competency is one of them: if an AI performs poorly, users naturally won’t trust it. Since trust is crucial for adoption, without it none of the benefits stemming from the AI’s competency will ever materialize.

Beyond competency, most trust-building factors revolve around understanding how the AI produces its output. Since many cutting-edge AI systems leverage highly complex neural networks that are essentially black boxes, making these models more transparent or explainable goes a long way toward building trust.

- Empathic: The last requirement for an AI to be responsible to its user is empathy. This requirement primarily concerns user experience and is also intended to drive adoption. The same AI output can be presented in many different ways. An AI that is empathic will present the output in a way that is conducive to human adoption. There are many ways to accomplish this, including being friendly, helpful, honest, responsive, and even engaging.

Meeting these user-focused requirements marks the adoption threshold. This is the point at which users begin relying on the AI to augment or automate their decisions, eventually leading to mass adoption.

Layer 3: Responsible for Organizations and Groups

Beyond the adoption threshold, individual users will adopt AI for self-interest—seeking convenience, performance, or efficiency. However, individual users’ interests may not always align perfectly with those of the organizations or groups they belong to.

That’s why AI intended for organizational use (e.g. companies, universities, government departments) must meet three additional requirements to ensure it is responsible to the group. Layer 3 mandates that AI must serve the organization and provide value to the entire group:

- Fair: In an organizational setting, responsible AI must be fair. That means the AI must be unbiased, inclusive, culturally sensitive, accessible, and open. If the AI is biased in favor of a select group within the organization, it would be unfair to others. If it is only accessible to a few, it would not be fair to the rest, and so on.

- Interoperable: Since AI within an organization is potentially used by different teams and departments, such systems must also be interoperable. This means the AI must be well-integrated, mutually compatible, and operate seamlessly and collaboratively across different teams and departments. Such AI is typically designed and engineered to be modular and composable with federated operations.

- Accountable: Lastly, when multiple parties use one or more AI systems in conjunction, accountability becomes crucially important. Whether within a team, a department, or the entire organization, various AI systems may collaborate and culminate in a decision to act. If these decisions are suboptimal, the respective teams, departments, and organization must be able to audit and trace these decisions and hold constituents accountable.

By addressing these organizational responsibilities, AI tools can support group goals and maintain trust within institutions. This ensures organizational constituents are well-aligned and achieve a result greater than the sum of their parts.

Layer 4: Responsible for Society and Humanity

If the AI has a larger scope of applications and is intended to be used by large populations spanning societies around the globe, it must take on even greater responsibility. Beyond benefiting organizations, such AI must serve and benefit society and humanity as a whole. The three requirements in layer 4 of the LAIR framework help accomplish this.

- Human-Centric: To be responsible for society and humanity, AI should aim to empower humans rather than replace them. Every interaction with this AI should be designed to amplify human contributions rather than diminish human value. AI should be educational and enrich those who are using it. It should foster confidence and instill in its users a greater sense of control and self-assurance.

- Respect Human Agency: The second requirement of this layer is that AI respect human agency and give its users greater autonomy. AI should not manipulate humans or create addictive behavior. Sadly, many technologies do not respect human agency. For example, many social media platforms are highly manipulative, and many online games and gambling apps are highly addictive. These technologies not only polarize our society but are also strongly linked to mental, cognitive, and physical health issues. We must avoid making the same mistake again with AI.

- Human-Values Aligned: The last requirement of layer 4 is that AI align with human values. That means AI must be trained to understand the moral and ethical standards that matter to humans, and be able to act on these values to prevent harmful, malicious, or unintended outcomes. However, values differ across cultures and change over time. AI alignment must also involve continuous stakeholder engagement, human oversight, and independent audits that enable AI to learn and adapt to new values as they emerge. Together, these practices aim to keep AI behavior predictable and beneficial while averting reward hacking or other unintended consequences that could compromise human welfare.

Layer 4 of the LAIR framework emphasizes AI’s role as a beneficial societal force that respects diversity and human dignity.

Layer 5: Responsible for the Planet and Our Future

If an AI system achieves global adoption, we need to consider its impact at the planetary scale. Many powerful AI systems today are trained and deployed in massive data centers whose GPUs consume large amounts of energy and require water for cooling. The final layer (Layer 5) of the LAIR framework requires AI to be responsible for our planet, the environment, and our future. This layer imposes two additional requirements.

- Sustainable: To be responsible for the environment, AI must be architected and engineered to be sustainable. Computationally, it must be optimized to use its limited computing resources as efficiently as possible. AI should aim to be carbon neutral to minimize its negative impact on the climate and our planet. Using AI today in a way that leaves the environment less habitable for future generations is not being responsible to our planet or to those who come after us.

- Child Safe: To be responsible for our future, AI must also be child-safe. We have already seen that excessive use of calculators at a young age can erode fluency with basic arithmetic. Early access to social media has led to the underdevelopment of emotional regulation, impulse control, and real-world social skills, contributing to the loneliness epidemic we see today.

It’s easy to imagine that excessive AI usage could also significantly impair learning and cognitive development in young children. As AI is introduced in schools and becomes accessible at ever earlier ages, it is crucial to ensure that these tools are child-safe and do not interfere with normal child development. We must not forget that children are our future. Deploying an AI that is not child-safe today is irresponsible toward the generations to come.

By addressing these environmental and generational concerns, AI development can contribute to a sustainable and equitable future.

Conclusion: Embracing Responsibility at Every Stage

“With great power comes great responsibility.” This saying applies profoundly to AI. As these technologies grow in power and reach, they must take on increasing responsibilities. The LAIR Framework offers a practical guide for AI developers, helping them focus on the most relevant responsibilities at different stages of AI maturity.

At the very beginning, AI developers should focus only on the core layer (layer 1), which consists of the table stakes. Before reaching the adoption threshold, AI teams must focus on layer 2, being responsible for the users of the AI under development. Once the AI meets all the requirements of the inner layers (layers 1–2), it can be adopted within its intended scope of application.

Subsequently, AI builders need to focus on the outer layers (layers 3–5) of the LAIR framework and take on more responsibilities depending on the scope of the AI’s impact and reach. If the AI tool is intended to be used by groups of people within an organization, they need only address layer 3 and be responsible for the organization and the group. If the AI has a broader scope and is intended to be used by a larger population, it must also address layer 4 and be responsible for society and humanity. At the broadest, global scope, AI vendors must also examine layer 5 and be responsible for the planet and our future by considering the environmental and child-development impact of their AI.
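The staged logic described above amounts to a simple lookup from an AI's intended scope to the layers it must address. The Python sketch below is a hypothetical illustration, not part of the LAIR framework itself: the `Scope` categories and the `required_layers` and `checklist` helpers are assumed names, and the sketch assumes the layers accumulate like an onion, so each broader scope keeps every inner layer and adds the next outer one.

```python
from enum import IntEnum


class Scope(IntEnum):
    """Hypothetical deployment scopes, ordered from narrowest to broadest."""
    INDIVIDUAL = 0    # individual users: only the inner layers (1-2) apply
    ORGANIZATION = 1  # groups within an organization: adds layer 3
    SOCIETY = 2       # large populations across societies: adds layer 4
    GLOBAL = 3        # global adoption at planetary scale: adds layer 5


# Requirements per LAIR layer, as listed in this article.
LAIR_LAYERS = {
    1: ("Lawful", "Privacy Preserving", "Safe"),
    2: ("Competent", "Trustworthy", "Empathic"),
    3: ("Fair", "Interoperable", "Accountable"),
    4: ("Human-Centric", "Respect Human Agency", "Human-Values Aligned"),
    5: ("Sustainable", "Child Safe"),
}


def required_layers(scope: Scope) -> list[int]:
    """Return the LAIR layers to address for a given scope (assumed cumulative).

    Layers 1-2 are always required to reach the adoption threshold;
    each broader scope adds exactly one outer layer.
    """
    return list(range(1, 3 + int(scope)))


def checklist(scope: Scope) -> list[str]:
    """Flatten the required layers into an ordered list of requirements."""
    return [req for layer in required_layers(scope) for req in LAIR_LAYERS[layer]]


if __name__ == "__main__":
    # Example: an AI tool intended for use within a single organization.
    for requirement in checklist(Scope.ORGANIZATION):
        print(requirement)
```

For an organizational tool, this prints the nine requirements of layers 1 through 3, from Lawful to Accountable. Encoding the scope as an ordered `IntEnum` keeps the onion's cumulative structure explicit: moving to a broader scope can only add layers, never remove them.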

The LAIR Framework provides a strong foundation for building and deploying AI responsibly. This layered approach prevents developers from being overwhelmed by all the requirements at once. It helps engineers and scientists stay focused on a few responsibilities at a time, ensuring that each is addressed properly. By following the LAIR Framework, the AI community can build technologies that are not only powerful but also beneficial for all.