When I joined OPAQUE, I believed deeply in our mission: to drive human progress by helping organizations to adopt AI responsibly and safely unlock the value of their most sensitive data through confidential AI. But, to be honest, I wasn’t sure if the market was ready — I thought we might be too early.

I was concerned that most companies would spend years experimenting with low-risk pilots, such as basic chatbots, internal productivity tools, and models trained only on public data. Then they’d slowly inch into production for low-value use cases that didn’t involve proprietary or sensitive data. I expected it could take three or more years for companies to build meaningful, differentiated value with AI applications that use sensitive and confidential data.

I was wrong.

At the recent All Things AI conference, which drew around 1,800 attendees, we asked for a show of hands: who was experimenting with AI? Some hands went up. But when we asked how many had already moved AI into production, nearly every attendee raised a hand without hesitation. Wow. However, when we asked whether they were using AI with their intellectual property, sensitive customer information, and confidential data, only a handful of hands went up.

That’s when it became clear: companies are struggling to trust AI with the data that matters most. As a result, production AI deployments deliver limited business value. The companies that figure out how to move AI into production with sensitive and confidential data will create tremendous, differentiating value and disrupt their markets.

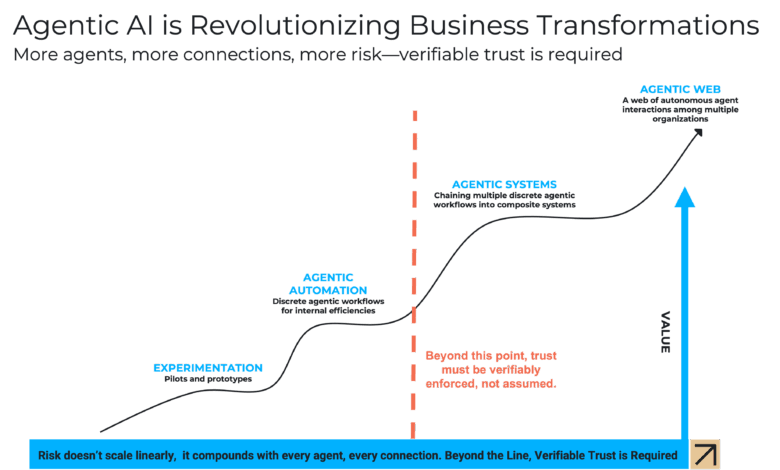

Enterprises today face an inflection point: adopt AI with confidence, or fall behind in markets being rewritten by it. Here’s what we’re seeing emerge as four distinct waves of AI adoption.

Wave 1: AI Sandbox

This is the testing and experimentation phase. Organizations run pilots to explore what generative AI and AI agents can do before rolling anything into production. Projects are small, low-risk, and often built in isolation from core systems.

Sometimes, companies use generic data like public HR policies, internal onboarding manuals, or IT troubleshooting guides. Other times, they test with sensitive data in a safe, controlled sandbox. Either way, the goal is learning what’s possible, not scaling.

These experiments are usually led by innovation teams working at the vanguard of AI’s potential. Early wins include productivity bots, simple chat tools, and basic automation that don’t touch critical workflows or production systems.

A CIO from a public financial services company shared:

“We ran a pilot with a GenAI vendor last quarter, but we had to strip out the actual customer data for compliance reasons. The result? A flashy demo — zero business value. We burned seven months and walked away with nothing we could scale.”

The result of this wave? A growing internal appetite for what’s possible, even when the pilots themselves never scale.

Wave 2: The AI Plateau

In this phase, AI pilots move into production, but the returns stall. Companies deploy AI into real workflows like customer support, employee onboarding, or knowledge search. But there’s a catch: it’s all surface-level. The data fueling these systems is public, sanitized, or non-sensitive, so the outcomes remain shallow and non-differentiated.

Think: HR chatbots pointing to policy documents. Sales assistants limited to public collateral. Dashboards pulling from basic CRM records. It’s AI, technically — but not transformational.

This is when the drag of compliance, risk, legal, and InfoSec kicks in. In this phase, enterprises are forced to confront the right, and hard, questions:

- What if the model leaks data?

- How do we ensure it doesn’t retain sensitive information?

- How do we know our data is being kept private and access controls are being enforced?

These aren’t hypotheticals. They’re enterprise blockers. To move forward, teams dilute the use case, strip out sensitive inputs, and hope for safe impact. The result? Vanilla AI — high effort, low ROI, and zero strategic edge.

Many companies get stuck here, especially in industries like finance, insurance, and enterprise SaaS. There’s appetite for more, but no clear path to trust AI with the data that actually matters.

As one CTO told us:

“We’re using AI — but only for things that wouldn’t hurt us if they failed. That’s the problem. Low-risk data is of little value.”

Wave 3: The AI Powerhouse

This is the inflection point — where AI starts delivering clear revenue impact and operational advantage.

In Wave 3, AI moves beyond experiments and becomes embedded in the business. Enterprises begin safely leveraging proprietary, sensitive data — customer records, financials, internal strategies — to power AI systems that create real, differentiated value.

AI isn’t just answering questions anymore. It’s transforming how the business runs: enhancing customer experiences, streamlining operations, and surfacing insights that guide executive decision-making. And it all happens without ever exposing sensitive inputs to the model provider.

At OPAQUE, one enterprise client built a secure sales commission agent using confidential RAG. Questions that used to take four days to resolve now take four seconds — with complete data privacy. Another use case involves a CFO assistant that analyzes sensitive financials and customer metrics — the kind of information that, if leaked, could move markets.

Another client, deeply integrated across global credit, lending, and collections systems, handles billions of consumer data records. With OPAQUE, they’ve deployed confidential agents that automate workflows involving hundreds of employees — improving margins and, critically, deepening trust with issuers and consumers.

There are endless use cases across industries. Banks, for instance, can create a financial forecasting agent using data from proprietary revenue models, confidential investment plans, and internal cost structures. High-tech companies can build customer-facing AI agents that provide personalized insights or automation — powered by proprietary usage data, behavioral analytics, and engagement history — all while ensuring that customer data remains private, protected, and provably unexposed.

This level of performance isn’t possible without the proper infrastructure. To keep data safe, companies need confidential computing, runtime policy enforcement, and cryptographic attestation.

The result? A durable competitive edge. These AI systems are built on your unique operations, customers, and history — and that makes them impossible to replicate, unless you leak your data.

As one product leader put it:

“Coordinating with legal, infosec, and data science has become standard. In Wave 3, building AI products means embedding policy compliance and data protection at the design layer. That’s the only way to scale AI with executive trust.”

Wave 4: Agentic Architecture at Scale

This is where we’re headed.

In this wave, AI agents evolve into the next generation of enterprise architecture. Built on the principles of microservices, these agents don’t just assist workflows: they are the logic that drives applications. They compose tasks, call APIs, and make real-time decisions.

This isn’t IT automation. It’s how the next era of applications will be built.

Today’s microservices are reactive. They wait for a request, follow hard-coded logic, and return a result. Agents offer much more functionality and operate autonomously. They can observe their environment, maintain internal memory, plan toward goals, optimize outcomes, and learn from experience.
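The contrast can be sketched in a few lines of Python. This is a toy illustration, not a description of any real agent framework: `reactive_service`, `ReconciliationAgent`, and the ledger layout are all hypothetical names chosen for the example, and the "planning" is a single hard-coded rule. Learning from experience is left out entirely.

```python
from dataclasses import dataclass, field


def reactive_service(request: dict) -> dict:
    """Reactive style: wait for a request, apply fixed logic, return a result."""
    return {"status": "ok", "total": request["quantity"] * request["unit_price"]}


@dataclass
class ReconciliationAgent:
    """Toy agent (hypothetical): observes a ledger, remembers what it saw,
    plans toward a target balance, and acts until the goal is met."""

    goal_balance: float
    memory: list = field(default_factory=list)  # internal memory of observations

    def observe(self, ledger: dict) -> float:
        balance = sum(ledger.values())
        self.memory.append(balance)
        return balance

    def plan(self, balance: float) -> list:
        gap = self.goal_balance - balance
        if abs(gap) < 0.01:
            return []  # goal reached, nothing left to do
        return [("adjust", gap)]

    def act(self, ledger: dict, steps: list) -> None:
        for action, amount in steps:
            if action == "adjust":
                ledger["adjustment"] = ledger.get("adjustment", 0.0) + amount

    def run(self, ledger: dict, max_iterations: int = 5) -> int:
        """Observe, plan, act in a loop; return how many acting rounds were needed."""
        for i in range(max_iterations):
            steps = self.plan(self.observe(ledger))
            if not steps:
                return i
            self.act(ledger, steps)
        return max_iterations
```

The reactive function does exactly one thing per call. The agent keeps looping on its own until its goal holds, and its `memory` records what it observed along the way.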

Imagine a world where agents can reconcile accounts, respond to support tickets, update databases, and communicate with vendors — all with minimal human oversight. That’s the promise of Wave 4.

Our head of engineering put it this way:

“These agents aren’t sidecars to our systems; they are the system. They plan, act, and learn, and they’re reshaping how we think about enterprise software.”

But with that power comes risk. Enterprises can’t just rely on traditional access controls or firewalls. They need confidential AI infrastructure that:

- Verifies agent identity and code before execution

- Keeps data encrypted while in use, inside trusted execution environments

- Enforces organizational policies in real time

- Produces a tamper-proof audit trail

That’s how enterprises can safely build and scale agent-based systems — without sacrificing security, sovereignty, or compliance.
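To make three of those four requirements concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not OPAQUE’s implementation: every name (`measure`, `AuditLog`, `run_agent`, the action strings) is hypothetical, a plain SHA-256 allowlist stands in for hardware-backed attestation, and the log is tamper-evident rather than tamper-proof, because a hash chain only detects edits. Runtime encryption is not shown, since it depends on hardware that ordinary code cannot emulate.

```python
import hashlib
import json


def measure(code: bytes) -> str:
    """Hash the agent's code; a simplified stand-in for an attested measurement."""
    return hashlib.sha256(code).hexdigest()


class AuditLog:
    """Hash-chained log: each entry commits to the one before it."""

    GENESIS = "0" * 64

    def __init__(self) -> None:
        self.entries = []

    @staticmethod
    def _digest(prev_hash: str, event: dict) -> str:
        payload = json.dumps(event, sort_keys=True)
        return hashlib.sha256((prev_hash + payload).encode()).hexdigest()

    def append(self, event: dict) -> None:
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        self.entries.append(
            {"event": event, "prev": prev_hash, "hash": self._digest(prev_hash, event)}
        )

    def verify(self) -> bool:
        """Recompute the chain; any edited or reordered entry breaks it."""
        prev_hash = self.GENESIS
        for entry in self.entries:
            if entry["prev"] != prev_hash:
                return False
            if entry["hash"] != self._digest(prev_hash, entry["event"]):
                return False
            prev_hash = entry["hash"]
        return True


def run_agent(code: bytes, action: str, approved: set, allowed: set, log: AuditLog) -> bool:
    """Gate an agent action: check code identity, then policy, and log the decision."""
    digest = measure(code)
    if digest not in approved:
        decision = "rejected: unknown code"
    elif action not in allowed:
        decision = "denied: policy"
    else:
        decision = "allowed"
    log.append({"agent": digest, "action": action, "decision": decision})
    return decision == "allowed"
```

In this sketch, an agent whose code hash is not on the approved list never runs, an approved agent is still limited to permitted actions, and every decision lands in a log whose `verify()` fails if any past entry is altered.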

Final Thoughts

Foundation models are table stakes. Your competitive edge, the kind that drives market disruption, deeper customer trust, and defensible revenue, will come from activating your proprietary data.

But here’s the problem: that data is now the biggest attack surface. It’s easier than ever to scrape, leak, or misuse, especially in multi-tenant, AI-augmented environments. And most enterprises aren’t holding back out of fear; they’re holding back because the infrastructure to trust AI with that data hasn’t existed.

Until now.

OPAQUE was built for this exact moment. We help high-tech and financial enterprises move beyond stalled pilots and low-risk bots and into production-grade confidential AI systems that offer verifiable guarantees at runtime, without sacrificing velocity or compliance.

If you’re ready to stop experimenting and start building the future, we’re here to help.