This article was co-authored by Clement Chenut, Senior Consultant, and Kary Bheemaiah, CTIO, at Capgemini Invent.

“At a time of rising population and climate change, we need both organic solutions that promote sustainability and the technological approaches that increase productivity—and there is no reason we can’t have them both.” — Bill Gates

Bill Gates made this statement in 2010, and it resonates more and more in the field of AI. Hype around artificial intelligence (AI) in business operations has grown exponentially over the past 10 years, driven by promising results in process automation, image recognition, rapid application development, and machine translation. Today, with AI spending growing at a CAGR of 20.1% over the 2019-2024 period (IDC, 2020), AI systems have become central to any company’s technology investment strategy.

However, one point gets less attention in AI conversations: the economic and environmental costs of creating, deploying, and maintaining AI applications. AI is not cheap. Its cost structures have to be considered and addressed, because ignoring them harms not just individual companies but the field as a whole, especially R&D activities. If AI applications become too expensive to engage in, the first actors affected are the R&D teams pushing this technology forward, which creates a self-inflicted harm loop. Hence, going forward, a perspective on the economics of AI will be as important as a technological perspective on its capabilities.

The economic costs of AI

Any value creation, especially in tech, has a price tag, and AI is no exception. In AI, value comes from the data a model is trained on and applied to, since a model’s value lies in its capacity to process that data.

Models and data are the first cost area in the economics of AI. It is exciting to hear about 175-billion-parameter models (GPT-3) and even trillion-parameter models, which are feasible today because we are awash with data. But while the cost of accessing data has gone down, training these multi-billion-parameter models has not become cheap.

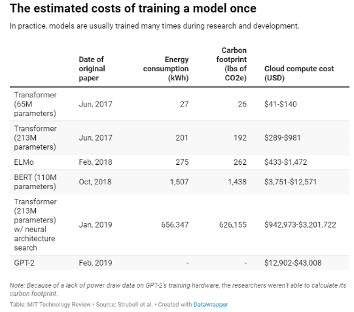

Training costs. According to the Allen Institute, training an AI model costs roughly $1 per 1,000 parameters on average. Actual costs vary widely with model complexity, training data availability, existing libraries, and running costs, as these ballpark figures show:

| Number of parameters | 110 million | 340 million | 1.5 billion |
| --- | --- | --- | --- |
| Training cost | $2.5K – $50K | $10K – $200K | $80K – $1.6M |
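To see how the flat $1-per-1,000-parameters figure compares with the ranges in the table, the sketch below converts each range into an implied cost per 1,000 parameters. It only redoes the arithmetic on the figures quoted above; the function names are illustrative.

```python
# Ballpark training-cost ranges from the table: parameters -> (low, high) in USD.
TRAINING_COST_RANGES = {
    110_000_000: (2_500, 50_000),
    340_000_000: (10_000, 200_000),
    1_500_000_000: (80_000, 1_600_000),
}


def cost_per_thousand_params(params: int, cost_usd: float) -> float:
    """Training cost in USD per 1,000 parameters."""
    return cost_usd / (params / 1_000)


def flat_rate_estimate(params: int, usd_per_thousand: float = 1.0) -> float:
    """Flat-rate estimate: (params / 1,000) * rate."""
    return params / 1_000 * usd_per_thousand


for params, (low, high) in TRAINING_COST_RANGES.items():
    print(
        f"{params:>13,} params: "
        f"${cost_per_thousand_params(params, low):.3f} to "
        f"${cost_per_thousand_params(params, high):.3f} per 1,000 params "
        f"(flat $1 rule: ${flat_rate_estimate(params):,.0f})"
    )
```

The implied rate runs from about $0.02 to about $1.07 per 1,000 parameters, so the flat $1 figure reads best as an upper-end ballpark rather than a precise average.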

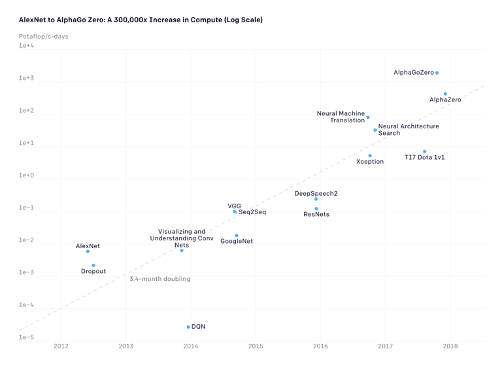

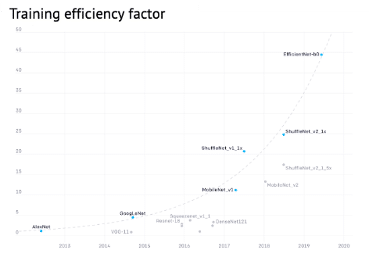

Their analyses revealed a proportional relationship between AI models’ computing capabilities and the time and energy they consume. As the charts show, the computing power used to train AI has increased 300,000-fold since 2012, with training costs doubling every few months.
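A 300,000-fold increase is easier to grasp as a number of doublings. The sketch below assumes steady exponential growth over roughly six years (2012 to 2018); that time span is an assumption, not a figure from the text.

```python
import math


def doublings(growth_factor: float) -> float:
    """Number of doublings needed to reach `growth_factor`."""
    return math.log2(growth_factor)


def doubling_time_months(growth_factor: float, period_months: float) -> float:
    """Average months per doubling, assuming steady exponential growth."""
    return period_months / doublings(growth_factor)


# Assumption: the 300,000x increase spans roughly six years (2012 to 2018).
GROWTH = 300_000
PERIOD_MONTHS = 6 * 12

print(f"{doublings(GROWTH):.1f} doublings")
print(f"about one doubling every {doubling_time_months(GROWTH, PERIOD_MONTHS):.1f} months")
```

About 18 doublings in six years works out to one doubling roughly every four months, which is what “every few months” means in practice.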

Running costs. This brings us to the second cost center: running these AI applications. First, these applications require dedicated computing units and cloud solutions with enormous storage capacity, especially if you aim to process your data through the 175 billion parameters that GPT-3 offers.

Second, once set up, these models don’t remain static. As the models are used in products, end users generate new data that the model was not trained on. Consumers also make varying demands of the application, which means the model must be retrained to perform tasks it was not originally trained to do.

This happens with any product: as user adoption grows, so do product evolution and version control. With AI, however, it means more development costs. New data causes “data drift”, so AI developers constantly tweak their models: while the original model may automatically handle 90% of user requests, the last 10% remains an elusive automation target.
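The text does not say how drift is detected. One common approach, shown here as an illustrative sketch rather than the authors’ method, is the population stability index (PSI), which compares how a feature is distributed in training data versus live data. The bin edges, the sample data, and the 0.25 alert threshold (a frequently used rule of thumb) are all assumptions.

```python
import bisect
import math
from typing import Sequence

# Bins are [edges[i], edges[i + 1]); open-ended outer edges catch every value.
EDGES = [float("-inf"), 2.5, 5.0, 7.5, float("inf")]
EPSILON = 1e-6  # floor for empty bins so the logarithm stays defined


def bin_shares(values: Sequence[float], edges: Sequence[float]) -> list[float]:
    """Fraction of values that fall into each bin."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        counts[bisect.bisect_right(edges, v) - 1] += 1
    return [max(c / len(values), EPSILON) for c in counts]


def population_stability_index(
    expected: Sequence[float], actual: Sequence[float], edges: Sequence[float]
) -> float:
    """PSI = sum over bins of (actual - expected) * ln(actual / expected)."""
    e = bin_shares(expected, edges)
    a = bin_shares(actual, edges)
    return sum((ai - ei) * math.log(ai / ei) for ai, ei in zip(a, e))


# Hypothetical feature values seen at training time vs. in production.
training = [i % 10 + 0.5 for i in range(100)]
production = [v + 3 for v in training]  # the same feature, shifted upward

score = population_stability_index(training, production, EDGES)
print(f"PSI = {score:.2f}", "(drift)" if score > 0.25 else "(stable)")
```

A PSI near zero means live data still looks like training data; a high score is a signal that the model may need retraining.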

As a result, models must be retrained continuously or at regular intervals, which makes an AI application’s running costs much higher than its initial training cost (80-90% of the overall cost, according to Nvidia). For this reason, AI firms can sometimes look like traditional service firms, directing a significant share of their revenue to product lifecycle management and customer helplines, because staff must step in to handle client requests that the AI cannot.
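Put another way, if running costs make up 80-90% of an application’s lifetime cost, they are several times its initial training cost. The sketch assumes, for simplicity, that the lifetime cost splits only into initial training and running.

```python
def running_to_training_ratio(running_share: float) -> float:
    """How many times the training cost the running cost is, if running costs
    make up `running_share` of the lifetime total and training makes up the rest."""
    if not 0.0 <= running_share < 1.0:
        raise ValueError("running_share must be in [0, 1)")
    return running_share / (1.0 - running_share)


for share in (0.80, 0.90):
    ratio = running_to_training_ratio(share)
    print(f"running = {share:.0%} of total -> {ratio:.0f}x the initial training cost")
```

At 80% the running cost is four times the training cost; at 90% it is nine times.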

Cloud computing costs. Finally, AI applications increasingly work with dense data such as videos and high-quality images. These data sources need more storage and compute, which adds further to running costs.

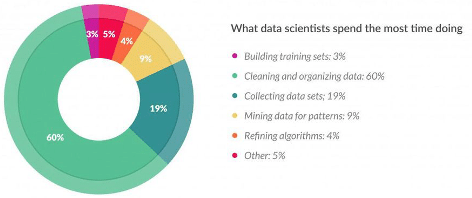

This drastically increases the cost of acquiring, storing, and processing data in the cloud to meet business objectives. New data must not only be acquired but also prepared, a time-consuming task that can take up to 80% of an AI team’s time.

Furthermore, model performance shows diminishing returns as model size grows. For example, ResNeXt, released in 2017 as an improved version of the computer vision model ResNet, required 35% more computational resources for only a 0.5% accuracy improvement. Larger models with more parameters may need less data than smaller ones to reach the same performance, but the actual gains they deliver are questionable from an economic standpoint, especially when weighed against training, retraining, and cloud costs.
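The ResNeXt example can be expressed as a marginal cost. The sketch reads “0.5%” as 0.5 percentage points of accuracy and, purely to illustrate diminishing returns, assumes that each further half-point would cost the same 35% step again; that compounding scenario is hypothetical.

```python
def compute_cost_per_point(extra_compute_pct: float, accuracy_gain_pts: float) -> float:
    """Extra compute (in %) paid for each percentage point of accuracy gained."""
    return extra_compute_pct / accuracy_gain_pts


def compounded_compute(steps: int, step_increase: float = 0.35) -> float:
    """Relative compute after `steps` successive increases of `step_increase`."""
    return (1.0 + step_increase) ** steps


# ResNeXt vs. ResNet, as quoted above: +35% compute for +0.5 points of accuracy.
print(compute_cost_per_point(35, 0.5))  # 70.0

# Hypothetical: if every further +0.5 points cost another 35% more compute,
# gaining 2 points (4 steps) would need about 3.3x the original compute.
print(round(compounded_compute(4), 2))
```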

The ecological costs of AI

As if these costs were not enough, deploying AI also carries environmental costs. Today, we cannot ignore the carbon footprint of the solutions we develop. Information and communications technology (ICT) is expected to account for 20% of global electricity consumption by 2030, which will increase the sector’s carbon footprint; ICT currently accounts for 2% of global emissions.

According to a 2019 study from the University of Massachusetts, training a single large NLP model (a Transformer tuned with neural architecture search) can emit as much CO2 as 5 American cars over their lifetimes, or roughly 300 passenger round-trip flights between San Francisco and New York. (Note: The emissions figure is calculated from the energy required to run the algorithms, assuming a blend of renewable and fossil sources.)
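These comparisons are simple ratios of emission totals. The sketch uses the figures reported in the UMass study (Strubell et al., 2019) as we understand them; treat the constants as assumptions to check against the paper.

```python
# Figures attributed to Strubell et al. (2019), in pounds of CO2 equivalent.
# Treat these constants as assumptions and verify them against the paper.
MODEL_TRAINING_LBS = 626_155   # large Transformer trained with neural architecture search
CAR_LIFETIME_LBS = 126_000     # average American car over its lifetime, fuel included
ROUND_TRIP_FLIGHT_LBS = 1_984  # one passenger, New York <-> San Francisco


def equivalents(emissions_lbs: float, reference_lbs: float) -> float:
    """Express an emission total as a multiple of a reference activity."""
    return emissions_lbs / reference_lbs


print(f"{equivalents(MODEL_TRAINING_LBS, CAR_LIFETIME_LBS):.1f} car lifetimes")
print(f"{equivalents(MODEL_TRAINING_LBS, ROUND_TRIP_FLIGHT_LBS):.0f} passenger round trips")
```

The ratios come out at about 5 car lifetimes and a little over 300 passenger round trips, matching the comparisons in the text.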

Why do AI models consume so much energy? Think again of AI as an ecosystem. AI is considered a high-energy-consumption technology because it depends on large data volumes, servers, and data centers that must be powered and cooled.

Data management. Companies’ appetite for using data to improve decision-making is growing. As a result, AI models are trained on ever-larger datasets, driving an exponential need for compute and energy. Moreover, more data does not mean better data: bad data wastes storage capacity and lengthens the model training cycle.

Model training and running. To build efficient models, practitioners need to experiment with multiple neural architectures and parameters. Creating different versions of the target model until an optimal design is reached is extremely time- and power-consuming. Once deployed, AI models consume even more energy as they continue to train and perform inference in real conditions.
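Why experimentation is so power-hungry becomes clearer with a grid search, one common way to explore architectures and parameters (the text does not say which search method practitioners use). Every combination is a separate training run, so the cost multiplies with each option added. The search space and the energy per run below are hypothetical.

```python
from itertools import product

# Hypothetical search space: every combination is one full training run.
SEARCH_SPACE = {
    "layers": [6, 12, 24],
    "hidden_size": [512, 768, 1024],
    "learning_rate": [1e-4, 3e-4, 1e-3],
    "dropout": [0.1, 0.2],
}
KWH_PER_RUN = 50.0  # hypothetical average energy per training run


def grid_search_cost(space: dict[str, list[float]], kwh_per_run: float) -> tuple[int, float]:
    """Return (number of runs, total kWh) for an exhaustive grid search."""
    runs = sum(1 for _ in product(*space.values()))
    return runs, runs * kwh_per_run


runs, kwh = grid_search_cost(SEARCH_SPACE, KWH_PER_RUN)
print(f"{runs} training runs, {kwh:,.0f} kWh")
```

Here 3 × 3 × 3 × 2 options give 54 runs; adding a single two-value option, such as batch size, would double that to 108.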

Data centers. Consuming 200 terawatt-hours each year, data centers account for 0.3% of overall carbon emissions (more than some countries). Room for improvement has been identified in their daily operations, notably in cooling systems that use water instead of air conditioning; such changes could save 100 billion liters of water each year in the US alone.

Thus, the financial and environmental bills keep growing as we train and deploy models, and immediate action is needed to avoid a dead end.

Is the AI development model sustainable?

If AI is expensive and highly energy-demanding, why does everyone keep investing in it?

The good news is that a favorable trade-off justifies the investment. AI brings tremendous opportunities for businesses to optimize their operations while actively benefiting the planet (e.g., error reduction, process enhancement, optimized raw material consumption).

However, this requires improving AI’s cost/benefit ratio. Analyzing its economic and ecological costs reveals overarching weaknesses that affect NLP models’ performance: long training and running cycles, poor data management, and weak strategic partnerships. These can be mitigated in the near future as the following three trends become reality.

Coherent technology advances. Since 2012, progress in computing has halved the training effort for AI systems every 16 months. These gains can be pushed further by improving data collection, processing, and management (e.g., Labelbox offers services to mitigate bad data). Finally, following a “train the trainer” logic, smarter use of AI on existing NLP models has the potential to double system efficiency, as illustrated in the table below. The compound effect is that the more efficient training becomes, the less energy it consumes, reducing both financial and electricity bills.
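Halving every 16 months compounds quickly. The sketch below extrapolates that rate as if it held steadily, which is an assumption; the horizons chosen are only for illustration.

```python
def training_compute_factor(months: float, halving_months: float = 16.0) -> float:
    """How many times less compute a fixed training target needs after `months`,
    if the requirement halves every `halving_months`."""
    return 2 ** (months / halving_months)


for years in (1, 3, 7):
    factor = training_compute_factor(years * 12)
    print(f"after {years} year(s): {factor:.1f}x less compute for the same result")
```

Over seven years, a steady 16-month halving adds up to roughly a 38-fold reduction in the compute needed for the same result.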

| Solutions | Description | Benefits |
| --- | --- | --- |
| Pattern-Exploiting Training | Deep learning training technique enabling Transformer NLP models (223M parameters) to outperform GPT-3 (175B parameters) | More effective NLP models with fewer parameters |
| TinyML | Machine learning performed directly on sensor devices and microcontrollers at low power | Lower energy consumption, reduced costs, and no internet connection required |
| AI for AI Devs | NLP solutions automating code translation from one programming language to another | Easier building and rebuilding of models |
| ML Emissions Calculator | Tool estimating the carbon footprint of training machine learning models from hardware, cloud provider, and geographic location | Better-informed choices to reduce software and hardware energy consumption |
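Carbon calculators of this kind generally multiply the energy a job consumes by the carbon intensity of the electricity that powers it. The sketch below shows that idea under stated assumptions: the job size, the PUE (power usage effectiveness, the ratio of total data center energy to IT energy), and the grid intensities are hypothetical, and this is not the ML Emissions Calculator’s actual implementation.

```python
from dataclasses import dataclass


@dataclass
class TrainingJob:
    gpu_count: int
    avg_power_watts: float  # average draw per GPU during training
    hours: float


def facility_energy_kwh(job: TrainingJob, pue: float) -> float:
    """IT energy of the GPUs, scaled by the data center's power usage effectiveness."""
    it_kwh = job.gpu_count * job.avg_power_watts * job.hours / 1000
    return it_kwh * pue


def emissions_kg(job: TrainingJob, pue: float, grid_kg_per_kwh: float) -> float:
    """Energy consumed multiplied by the carbon intensity of the local grid."""
    return facility_energy_kwh(job, pue) * grid_kg_per_kwh


# Hypothetical job, PUE, and grid intensities (kg CO2e per kWh).
job = TrainingJob(gpu_count=8, avg_power_watts=300, hours=100)
for region, intensity in {"low-carbon grid": 0.05, "fossil-heavy grid": 0.7}.items():
    print(f"{region}: {emissions_kg(job, pue=1.1, grid_kg_per_kwh=intensity):.0f} kg CO2e")
```

The same job emits more than ten times as much on the fossil-heavy grid, which is why location and cloud provider appear among the calculator’s inputs.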

Rationalized ecosystem management. Improving AI’s impact also depends on optimizing the performance of the internal and external actors in its ecosystem. First, equipping AI developers with advanced tooling lets them industrialize their workflow through leaner processes. Among existing solutions, cloud-based platforms like FloydHub provide AI developers with tools to boost workflow productivity. Kubeflow, a machine learning toolkit, lets developers who run Kubernetes build their AI models the same way they build applications. And AutoML, Google’s low-cost SaaS offering, helps developers with limited machine learning expertise train high-quality models tailored to their business needs.

A second actor to monitor closely is your cloud partner. Today, almost all providers are working to reduce their carbon footprint. For instance, Google lowered its data centers’ energy consumption by 35%, Microsoft aims to become carbon negative by 2030, and Amazon aims to power its global infrastructure (including AWS) entirely with renewable energy. Engaging a responsible partner is therefore an easy win.

Established economic model. As the technology matures and more users adopt it, demand for customized services is increasing. This produces a decentralization effect, as players from startups to multinationals emerge to meet that demand, increasingly turning AI into a commodity. As deep learning systems become more affordable, new AI applications can be created at lower cost (e.g., email phishing prevention, policy and compliance monitoring, scientific discovery).

Consequently, the most agile organizations institutionalize AI in their structure like any other department to foster innovation. For them, the ultimate competitive advantage is a shorter innovation cycle for new product development. And of course, the shorter the time-to-market, the lower the model training and energy costs.

The role of AI for the greater environmental good

Over the past 10 years, external pressure from society, government bodies, and financial institutions has forced companies to make their business models more sustainable (see BlackRock, Goldman Sachs, or even Apple). In particular, the record levels of CO2 reported in 2019 made climate issues a top priority. Now, any company investment must not only boost performance but, more importantly, meet environmental expectations. Hence, AI’s full value is legitimate only if the benefits of using it outweigh its gargantuan development requirements by a significant margin.

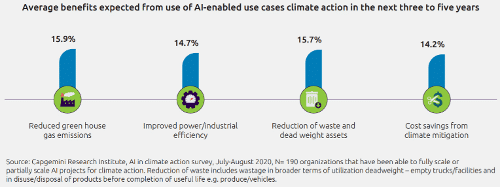

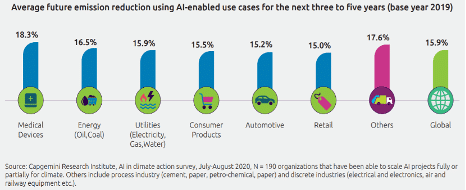

More sustainable AI can be achieved by (1) building green AI through low-carbon algorithms, and (2) developing AI-enabled use cases that reduce greenhouse gas emissions while improving productivity. As the previous section showed, the first option may not suffice on its own, so the second must also be pursued to achieve sustainable results at scale. Organizations that have developed AI-enabled use cases have already reduced their greenhouse gas emissions by 13% and their energy consumption by 11%, and larger climate-friendly outcomes are expected across industries as the technology evolves.

However, amid recent advances in NLP and AI, over-ambitious organizations that set unrealistic targets risk achieving nothing at all. That is why focusing on smaller AI-enabled applications in the short term, although less attractive, is more likely to deliver long-lasting outcomes.

| Sectors | Sustainable application examples | Companies |
| --- | --- | --- |
| Agriculture | Enhance monitoring and management of environmental conditions and crop yields; reduce fertilizer and water use | Blue River Technology, Harvest CROO Robotics, Trace Genomics |
| Energy & utilities | Manage renewable energy supply and demand; use accurate weather prediction to improve efficiency, cut costs, and reduce carbon pollution | Stem, ClimaCell, Foghorn Systems |
| Transportation | Help reduce traffic congestion; improve transport and supply chain logistics; enable autonomous driving | nuTonomy, Nauto, Sea Machines Robotics |
| Manufacturing | Reduce waste and energy use during production; improve precision with robotics; optimize system design | Drishti, Cognex Corp, SparkCognition |
| Facilities management | Maximize the efficiency of heating and cooling; optimize energy use (people detection in a room, renewable energy management) | Aegis AI, IC Realtime, IBM’s Tririga |

Since we are racing against the clock, how can AI accelerate industries’ sustainable transition?

To me, there are two groups to address: those who pay and those who build. The first means raising awareness among C-suite members, who control companies’ cash, and convincing them that their sustainability strategies can drive better financial results, measured as Return on Sustainability Investment (ROSI).

Remember, value creation has a price tag.

The second group to keep engaged is the R&D community that codes the algorithms of today and tomorrow. As AI disrupts traditional ways of working, it is companies’ role to set guidelines and ethics-by-design policies that help developers embrace that change.

AI can be a remarkable weapon for driving positive environmental change, while businesses continue to enjoy better results through frugality. However, a sustainable approach to designing and using this technology requires all stakeholders to embark on the same journey: institutions, companies, and people. To close, as Geoffrey Hinton, the godfather of AI, said:

“The future depends on some graduate student who is deeply suspicious of everything I have said … My view is throw it all away and start again”.

Perhaps it is time to apply more human sense, rather than mechanical power, to steer this change… with purpose.

Kary Bheemaiah is the CTIO of Capgemini Invent, where he helps define the business and sectoral implications of emerging technologies that are of strategic importance to the Capgemini group and its clients. With a global mandate across all of Capgemini Invent, his role is to define and connect the world of emerging technology to all Invent practices, clients, and sectors, along with the Group’s CTIO network.