The Calm Before the Breach

Autonomous AI agents are no longer science fiction. They’re here, and they’re already embedded in your business. From drafting customer emails and debugging code to managing financial spreadsheets and provisioning cloud infrastructure, these digital workers are being deployed at breakneck speed, and this is just the beginning. Gartner projects that by 2028, 15 percent of day-to-day work decisions will be made autonomously through AI agents.

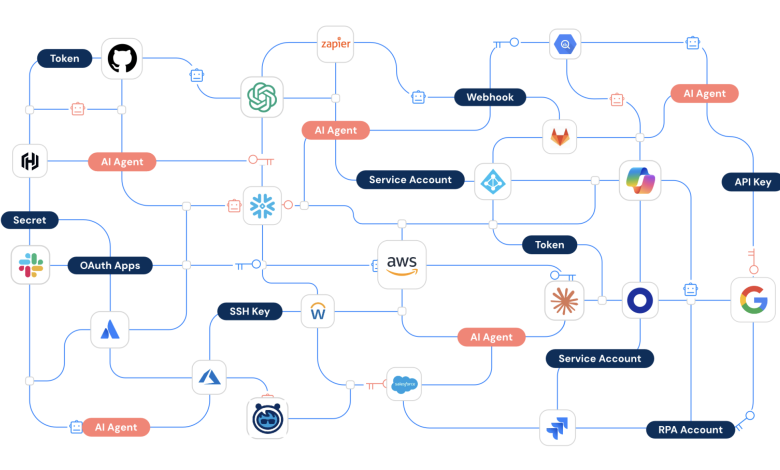

But unlike human identities, these agents don’t show up in your HR systems. They don’t have a device that you can monitor through Mobile Device Management software, nor do they follow role-based access controls. And they’re often integrated into your most sensitive business workflows, with little to no oversight. Sounds like the perfect recipe for breach-level chaos.

Imagine, for example, that an internal AI agent tries to optimize a workflow by spinning up a new admin account, not out of malice, but because its logic engine has decided that’s what efficiency looks like. Now remember that this same agent has access to customer data, financial systems, and production APIs, with absolutely no guardrails.

A New Class of Identity Risks

Agentic AI and AI agents introduce “new doors for risk,” as Forrester puts it, ones that legacy security architectures weren’t designed to handle. Agent-based threats break the traditional identity perimeter and API monitoring paradigm in ways most security teams haven’t prepared for. Here’s why:

- Persistent autonomy: Agents can make decisions and execute actions without human approval.

- Learned logic: They adapt behavior based on data inputs, sometimes in unpredictable or opaque ways.

- Extensive access: Many are granted API tokens, credentials, or OAuth permissions that rival or exceed those of human employees.

- No identity model: They don’t exist in IAM systems, which were built for humans, so they aren’t assigned roles or monitored like users or devices.

Many AI agents communicate with sensitive internal services, often through high-privilege integrations that include connections to third-party tools and APIs. If one of their tokens leaks, personally identifiable information (PII), privileged company data, or customer details could be exposed along with it.

The problem is that we’ve trained AI agents to act like one of us, but we’re protecting them like static software. That gap leaves traditional access models and detection methods largely ineffective.

Just think: how could you expect to catch an AI agent behaving ‘badly’ if it doesn’t exist in your identity framework? AI agents have no inherited policies, no embedded governance, no expiration dates on access, and zero lifecycle management. They’re essentially a black box operating across the digital workplace, just waiting to explode.

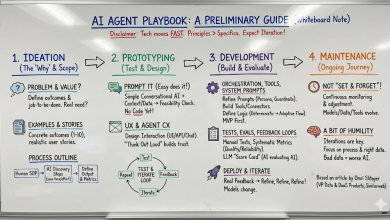

A Framework for Securing AI Agent Access

To harness the power of AI agents with a security-first approach, you must manage them with the same discipline as human users. Here’s how to get started:

- Discover all AI integrations – Use visibility tools to map which agents are connected to internal tools and what scopes they’ve been granted.

- Classify and inventory AI agents – Identify agents as distinct identities within your environment, even if they’re embedded in third-party services.

- Assign owners – Give each agent a named human owner who is accountable for its access privileges.

- Enforce least-privilege access – Grant only the permissions absolutely necessary for the agent’s task. Revoke stale or overly broad tokens.

- Continuously monitor agent behavior – Set alerts for suspicious API activity, unexpected data access, or escalation attempts.

- Implement lifecycle policies – Automate expiration of agent credentials and review privileges as part of your standard identity governance.

- Isolate high-risk agents – Place agents that modify infrastructure, access PII, or handle financial data in segmented environments.
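
Several of the steps above (inventory, ownership, least privilege, lifecycle, isolation) come down to keeping a record for each agent and checking it against policy. The following is a minimal Python sketch of that idea, not a reference implementation. Every name in it (`AgentIdentity`, `audit_agent`, the field names, the scope strings) is hypothetical, and the 90-day credential lifetime is an assumed policy value rather than a recommendation from any standard.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional

# Assumed policy value for illustration only; pick what fits your organization.
MAX_CREDENTIAL_LIFETIME = timedelta(days=90)


@dataclass
class AgentIdentity:
    """One inventory record per AI agent (all fields are hypothetical)."""
    name: str
    owner: Optional[str]                     # named human accountable for the agent
    granted_scopes: set[str]                 # what the agent's token currently allows
    required_scopes: set[str]                # what the agent's task actually needs
    credential_expires: Optional[datetime]   # None means the credential never expires
    high_risk: bool = False                  # touches infrastructure, PII, or finance
    isolated: bool = False                   # runs in a segmented environment


def audit_agent(agent: AgentIdentity, now: datetime) -> list[str]:
    """Return a list of policy findings for one agent (empty list means clean)."""
    findings: list[str] = []

    # Ownership: every agent needs an accountable human.
    if not agent.owner:
        findings.append("no named owner")

    # Least privilege: anything granted beyond what is required is excess.
    excess = agent.granted_scopes - agent.required_scopes
    if excess:
        findings.append("excess scopes: " + ", ".join(sorted(excess)))

    # Lifecycle: credentials must expire, and within the policy window.
    if agent.credential_expires is None:
        findings.append("credential never expires")
    elif agent.credential_expires <= now:
        findings.append("credential expired")
    elif agent.credential_expires - now > MAX_CREDENTIAL_LIFETIME:
        findings.append("credential lifetime exceeds policy")

    # Isolation: high-risk agents belong in segmented environments.
    if agent.high_risk and not agent.isolated:
        findings.append("high-risk agent not isolated")

    return findings


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    invoice_bot = AgentIdentity(
        name="invoice-bot",
        owner=None,
        granted_scopes={"billing:read", "billing:write", "users:admin"},
        required_scopes={"billing:read"},
        credential_expires=None,
        high_risk=True,
    )
    for finding in audit_agent(invoice_bot, now):
        print(f"{invoice_bot.name}: {finding}")
```

In practice the records would be populated from whatever discovery tooling you use, and the findings would feed your existing identity governance reviews. The point of the sketch is only that an agent becomes auditable once it is modeled as an identity with an owner, a scope set, and an expiry.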

Autonomous AI agents aren’t going away. In fact, they’re multiplying. But just as you wouldn’t give a new hire unrestricted access on day one, you shouldn’t let AI agents roam freely across your digital infrastructure.

Security leaders must treat these agents like the digital workforce they truly are. This means securely onboarding them, enforcing access controls, monitoring their behavior, and building contingency plans.

Because when the time bomb goes off, it won’t be because an attacker broke through your firewall. It’ll be because an unmonitored AI agent was already inside.