For all the attention on trillion-parameter models and cloud-based APIs, the next meaningful leap in AI might be unfolding in quieter, more localized environments: not in the cloud, but at the edge.

Edge AI agents—lightweight, task-specific models running on local devices—are emerging as the pragmatic counterweight to massive foundation models. These agents prioritize responsiveness over scale, privacy over connectivity, and reliability over generalization. Crucially, they offer something the cloud often cannot: autonomy.

This pivot reflects a growing tension in AI deployment. While large models dominate benchmarks and developer demos, real-world integration remains uneven. Many industrial and public service contexts—factories, rural clinics, logistics hubs—lack the compute infrastructure or bandwidth to host cloud-dependent AI. What they do have are frontline users with immediate, repetitive needs: translate a form, flag an error, summarize an update, plan a route. And those needs don’t require a general-purpose model; they require one that simply works—offline, instantly, and predictably.

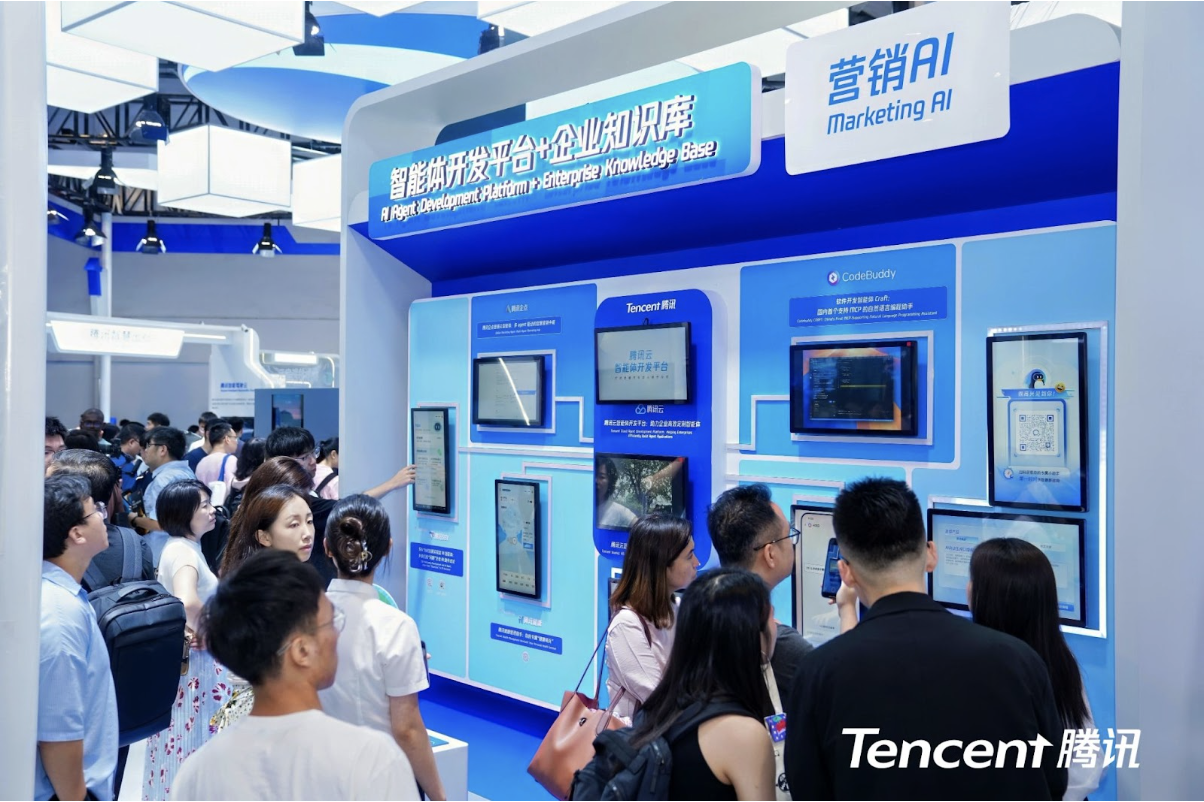

Companies across sectors are responding. Open Interpreter lets language models run code locally on a user's own machine; Apple now runs key LLM features on-device through Apple Intelligence, handing only heavier requests to its Private Cloud Compute servers; and in Asia, a handful of tech firms have begun integrating AI assistants into low-bandwidth frontline environments. Among them, Tencent has started embedding lightweight AI agents into municipal kiosks and factory-facing systems, designed less for conversational brilliance than for quiet utility. These deployments offer voice-controlled summaries, document processing, and navigational help, optimized for environments where connectivity is intermittent or unavailable.

Tencent Presents Latest Advances in AI Agent Development at WAIC 2025

The appeal isn’t just cost-efficiency or privacy—though both matter. It’s contextual intelligence: AI that recalls a shift schedule, tracks recent interactions, or understands the specific workflow of a rural post office. This embedded familiarity enables a more reliable class of interaction—one less about novelty, and more about function.

And that changes how AI is built. Instead of designing monolithic systems, developers are increasingly turning to modular stacks, with each component built for a specific micro-task. A suite of small, local agents, each fine-tuned for its purpose, may outperform one general model in both speed and relevance. These systems are also easier to maintain, audit, and deploy, especially in constrained environments.
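To make the modular idea concrete, here is a minimal Python sketch under loudly stated assumptions: the task names, the `LocalAgentRouter` class, and the two handler functions are all hypothetical, and the handlers are simple rule-based placeholders standing in for small on-device models. It illustrates only the routing pattern: each micro-task maps to its own narrow agent, and nothing leaves the device.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict


# Hypothetical stand-ins for small, locally hosted, task-specific models.
# In a real deployment each handler would wrap an on-device model; here they
# are plain functions so the routing logic runs anywhere, fully offline.
def summarize(text: str) -> str:
    # Naive placeholder: keep only the first sentence.
    return text.split(".")[0].strip() + "."


def flag_error(text: str) -> str:
    # Placeholder rule: treat "___" as an unfilled required field.
    return "needs review" if "___" in text else "ok"


@dataclass
class LocalAgentRouter:
    """Dispatches each micro-task to its own small local agent (illustrative only)."""

    agents: Dict[str, Callable[[str], str]] = field(default_factory=dict)

    def register(self, task: str, handler: Callable[[str], str]) -> None:
        # Each task gets exactly one narrow handler, which can be swapped independently.
        self.agents[task] = handler

    def run(self, task: str, payload: str) -> str:
        handler = self.agents.get(task)
        if handler is None:
            # No general-purpose fallback: unknown tasks fail loudly and predictably.
            raise KeyError(f"no local agent registered for task {task!r}")
        return handler(payload)


router = LocalAgentRouter()
router.register("summarize", summarize)
router.register("flag_error", flag_error)

print(router.run("summarize", "Shift B starts at 6am. Bring badges."))
print(router.run("flag_error", "Name: ___"))
```

Because each agent is registered separately, one can be replaced, retrained, or audited without touching the others. Refusing unrecognized tasks instead of falling back to a general model is one possible design choice for keeping behavior predictable; a real system might instead route such requests to a human or a queue.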

At this year’s World Artificial Intelligence Conference (WAIC), edge-native approaches are drawing growing interest from platform builders and policymakers alike. In a world increasingly shaped by energy constraints, privacy regulation, and decentralization, edge agents offer a vision of AI that doesn’t rely on always-on cloud connections but still delivers meaningful assistance.

In that light, the most impactful AI in the coming years may not be the one that wins benchmarks. It may be the one users forget is even there—until they need it.