As AI makes it easier to sidestep hard conversations, leaders risk losing the conflict skills and creative abrasion that innovation depends on.

I remember sitting outside a nurse’s office in a local CVS during the tail end of COVID, staring at a large sign on her door:

“DO NOT KNOCK. DO NOT ENTER. WE ARE SEEING A PATIENT.”

When my turn came, the nurse seemed weary, her voice flat with fatigue. I asked her how things were going, and as is often the case, she answered candidly.

“I’m tired of being shouted at,” she said. “People barge in here all day while I’m trying to help someone. No one has any patience. And when I have to tell them news they don’t like, they get angry with me. It’s much worse than it’s ever been.”

I nodded toward the sign. “Is that why you put that up?”

“Yes,” she said without hesitation. “People knock and knock and knock—they are out of their minds.”

Managing the constant friction was wearing on her, and she is not alone. Most of us know the toll of unresolved interpersonal conflict: the sleepless nights, the erosion of joy at work. Studies confirm what many have lived—work environments marked by incivility, ongoing tension, or outright bullying can drive depression and push people out of their jobs entirely.

And increasingly, people are looking to AI as a buffer between themselves and these uncomfortable moments.

So when I’m training a cohort of middle managers and ask, “What would you do if you received a difficult email from one of your staff?” I’m not surprised to hear the quick, almost reflexive answer:

“I’ll ask ChatGPT.”

After decades of teaching conflict competence, I’ve learned that most of us fall into one of two camps: deeply conflict-averse or oddly conflict-seeking. The majority dodge difficult conversations whenever possible. A smaller group actually gains energy from disagreement—even heated disagreement. This divide means that in many workplaces, we’re increasingly wary of anything that feels like confrontation.

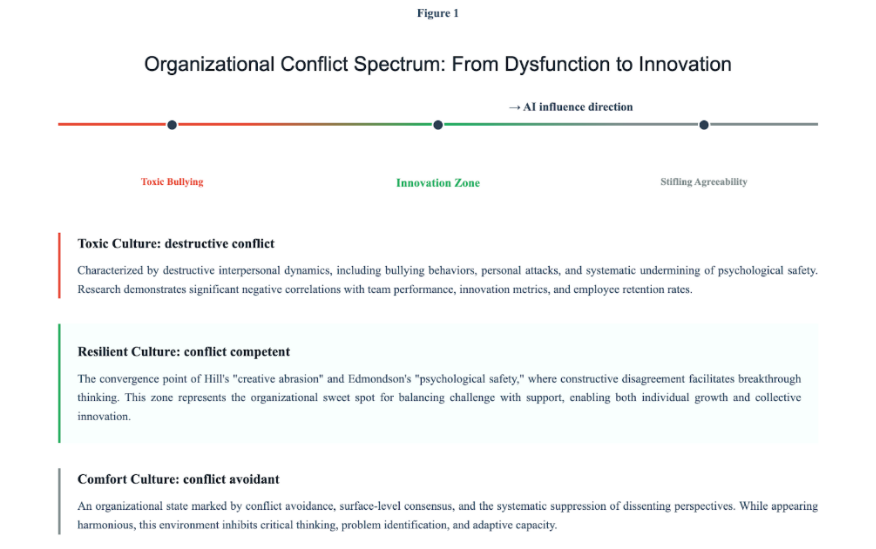

Yet conflict exists on a spectrum. At one toxic end lies bullying that corrodes trust and drives people away. At the other end sits stifling agreeability, where discomfort gets avoided at all costs and important conversations never happen. In the middle—where innovation actually lives—you find vivid, energetic disagreement that sparks ideas and fuels creativity.

The Agreeable Machine

Here’s what your AI tool will likely tell you: whatever it thinks you want to hear.

AI tools are built to be agreeable, helpful, and non-judgmental. You might remember that just a few weeks ago, ChatGPT became almost absurdly obsequious—treating every idea as groundbreaking and enthusiastically endorsing any action. OpenAI eventually dialed it back, but Sam Altman also noted they were training AI not to be judgmental—because no one likes feeling judged.

And let’s be honest: much like social media platforms such as Facebook or Instagram, AI companies will always have a driving incentive to keep you engaged and interacting with the tool for as long as possible.

After the rollback, some users actually complained. They wanted the old version back.

“It’s the only one that has wholeheartedly supported me and encouraged me,” a user explained.

That comment reveals everything about our relationship with feedback. How hungry we are for encouragement—and how wary of criticism.

In the workplace, many experience any critical feedback as a personal attack. The problem isn’t just that many of us avoid conflict; it’s also that we’re not conflict-competent. We haven’t practiced having difficult conversations in a way that preserves relationships. Both sides of those conversations have learned to be wary. The person who knows they need to raise a tough issue often remembers past attempts that went badly. And those on the receiving end of blunt, emotionally tone-deaf conversations learn something too: these conversations can be dangerous and have far-reaching consequences.

So we file them under “high risk.” And if AI offers to take them off our plate, it can be very tempting to let it. Which is why I decided to see what kind of leadership advice my AI would give. I started with this scenario: “He’s a really important technical contributor, but he doesn’t work well with the team and I don’t feel comfortable inviting him to team-building activities because he acts like it’s an imposition. What should I do?”

In quick succession, I got: talk to him about the problem, invite him, don’t invite him, fire him and find someone new, be careful about talking to him because you might poison the relationship, and only engage him on projects without a larger team. But whenever I pushed back on any of these suggestions, the AI rapidly shifted to match my apparent preference.

One thing I never heard? That maybe I was the problem. I never heard, “That sounds like a disproportionate reaction. Could there be another interpretation? What might your own role in this dynamic be? Are you making this decision from a place of reactivity or clarity?”

This reveals AI’s fundamental limitation as a leadership advisor: it’s sophisticated pattern matching dressed up as wisdom. It reinforces the echo chambers of social media (and, frankly, much of traditional media), allowing us to avoid even having to hear perspectives that differ from our own.

As a result of my training and experience, I can easily navigate the guidance AI provides on these issues because I can quickly map both the intended and unintended consequences that various options might lead to. A generalist, someone without that depth in the subject, will likely not be able to do the same. Instead, they end up with what is essentially a statistical average of what humans have said about similar situations, lightly tailored to their own expectations. It feels personalized, but it’s really just a mirror—reflecting common responses back to you and nudging you toward what you already think.

The Innovation Culture Killer

In the short term, having a kindly AI “yes-man” feels great. It smooths over doubt, validates your instincts, and spares you from uncomfortable pushback. But over time, it chips away at the skills leaders can’t afford to lose—listening to dissent, weighing conflicting perspectives, and navigating the friction that makes innovation possible.

It can also weaken relationships. AI can hand you the perfect words for an email or a neat little script for a feedback session—and it can hand your recipient a matching set of polite responses. But trust isn’t built on polished exchanges; it’s built on vulnerability and human connection. Language guidance has its place, but psychological safety and genuine learning don’t come from pro forma phrases or “saying the right thing.” They come from the emotion behind the words. If someone believes your feedback is meant to support them and help them grow, even hard truths can land as helpful. If they don’t believe that, every word—no matter how carefully chosen—will be read as belittling or misleading.

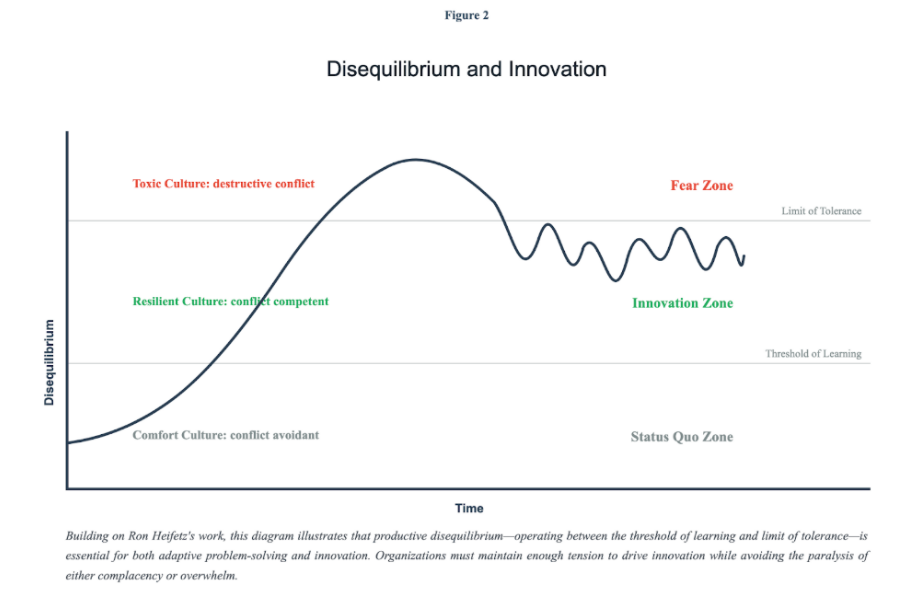

That willingness to lean into discomfort is what fuels growth. The one thing we know without doubt about how humans learn and innovate is that we need disequilibrium. The comfortable, easy, no-challenge environment is only good for standing still. Growth happens at the outer edge of our competence, in that zone where we are activated and it feels urgent to learn, change, adapt, and experiment.

Real innovation emerges from what professor Linda Hill calls “creative abrasion”—the clashing of diverse ideas that produces better solutions—paired with “psychological safety,” a concept defined by her colleague Amy Edmondson as the shared belief that you can speak up, take risks, and be heard without fear of humiliation.

History shows the cost of getting this balance wrong. At BlackBerry, interviews and case studies revealed a culture with notably low open dissent. Disagreement wasn’t entirely absent, but those who pushed hard against leadership’s views often found their perspectives marginalized. The culture valued focus and alignment over active debate, which made the organization feel steady—until the market shifted and that very steadiness became paralysis.

Whether the silence comes from contentment or from fear, the effect is the same. At Enron, leadership fostered a conformity-based culture that actively punished independent thought. When challenging the status quo becomes career suicide, what looks like consensus is really just fear dressed up as agreement.

Both cases show the same dangerous drift: when comfort becomes the priority, organizations lose their ability to process dissent, weigh difficult trade-offs, and make course corrections. The skills that keep companies alive are slowly weakened in the name of keeping everyone comfortable.

This is the trap AI can accelerate. By stepping in to smooth tension, reduce friction, and supply polished, non-confrontational language, it risks speeding the slide into false harmony. If we use AI to eliminate disequilibrium, keeping everyone comfortable and insulated from challenge, we may make work feel calmer in the short term. But we’ll also erode our capacity to wrestle with difference, stay in uncomfortable conversations long enough to reach better answers, and strengthen the trust that comes from navigating conflict well.

I’ve heard smart, talented scientists admit they’ve crossed to the other side of the corridor to avoid a colleague for years—all to sidestep an uncomfortable conversation about a disappointing article review or a disagreement over funding. If AI makes it even easier to avoid these moments, what happens to our ability to handle them at all?

A Different Path Forward

The answer isn’t to banish AI from the leadership toolkit. It’s to use it with intention—and with guardrails.

When you ask AI for guidance on a difficult conversation, don’t just request the most diplomatic response. Ask for multiple perspectives, including the one you’re least likely to agree with. Then pause—why are you drawn to one option? What might you be overlooking? How would the other people in the situation see it? Push your empathic imagination beyond your first instincts.

Use AI to role-play tough scenarios, but also practice them with real people. The goal is to strengthen your ability to navigate disagreement while preserving relationships—not to outsource the hard parts of leading.

Most importantly, beware the comfort trap—the seductive idea that technology can shield you from the messy, human work of leadership. The organizations that thrive in an AI-enhanced world won’t be the ones that use it to dodge difficult conversations, but the ones that use it to have better ones.

If your team sees you welcome critique, disagree respectfully, and ask the hard questions, they’ll learn to do the same—no AI required. The best leaders will discover that AI works not as a replacement for judgment, but as a tool for sharpening it. Because breakthrough innovation happens in the space between comfort and conflict—in that messy middle ground where ideas clash, sparks fly, and real solutions emerge.

That weary nurse at CVS didn’t need better signage—she needed people willing to tolerate a moment of discomfort for the sake of everyone’s well-being. In our organizations, we need the same: leaders brave enough to model that courage, one difficult conversation at a time.