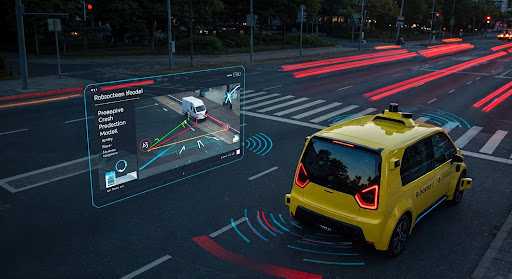

How can you teach a robot car to fear a crash before it happens? Would you trust a driverless car with the most precious cargo you have, your child, even if today’s software still misses a life‑threatening scenario once every hundred thousand miles? That uneasy question hangs over the entire autonomous‑vehicle (AV) industry, especially in a nation that lost an estimated 40,990 people on its roads last year, according to the latest figures from the National Highway Traffic Safety Administration (NHTSA). Closing that safety gap means proving, beyond statistical doubt, that a machine can anticipate and avert danger faster and more consistently than a human driver. Yet producing that proof has long run into a paradox: companies generate billions of simulated miles but still rely on humans to sift through the replays, identify true conflict events, and label them for machine‑learning retraining. That manual bottleneck slows progress precisely where speed could save lives.

Chinmay Kulkarni, a data scientist who worked inside a California‑based autonomous‑vehicle developer, confronted the logjam every night his simulation dashboards lit up red with unresolved “disengagements.” Each flag represented a potential collision that needed expert review; each review consumed scarce human hours. “We were walking on a treadmill that kept getting faster,” he recalls. “Every extra mile of simulation produced more triage work than our team could clear.” It was hardly a niche difficulty: the company’s virtual fleet now drives more than 20 billion miles inside its cloud‑scale simulator, an unprecedented trove of edge‑case scenarios. Without an automated referee, the sheer volume threatened to drown the safety mission it was meant to serve.

Kulkarni’s answer was deceptively simple: treat safety like fuel economy and measure it per mile. His Mile‑Per‑Conflict (MPC) framework scores every simulated mile according to whether it triggers a potential crash, risky disengagement, or other safety‑critical anomaly. Instead of asking overworked engineers to view every second of every replay, the algorithm classifies and ranks conflicts, surfacing only those that truly matter. Under the hood, the system blends supervised classifiers trained on historical crash data with a heuristics layer that looks for tell‑tale patterns in sensor fusion logs. The result is a live “danger index” that can be scanned like a stock ticker—and a feedback loop that reroutes engineers’ attention toward the rarest, scariest failures first.
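The article describes MPC's scoring only at a high level: supervised classifiers trained on historical crash data, a heuristics layer over sensor‑fusion logs, and a ranked "danger index." The sketch below illustrates that general hybrid pattern under stated assumptions; it is not Kulkarni's implementation. The event fields (`min_time_to_collision_s`, `max_decel_mps2`, `disengaged`), the hand‑set logistic weights standing in for a trained classifier, the rule thresholds, and the blend factor `alpha` are all hypothetical.

```python
import math
from dataclasses import dataclass

@dataclass
class Event:
    """One flagged moment from a simulated replay (fields are hypothetical)."""
    event_id: str
    min_time_to_collision_s: float  # smallest time-to-collision seen in the window
    max_decel_mps2: float           # hardest braking the planner demanded
    disengaged: bool                # whether the replay ended in a disengagement

# Hypothetical hand-set weights standing in for a classifier trained on crash data.
WEIGHTS = {"bias": -2.0, "inv_ttc": 3.0, "decel": 0.4, "disengaged": 1.5}

def classifier_prob(e: Event) -> float:
    """Logistic model: estimated probability that the event is a true conflict."""
    z = (WEIGHTS["bias"]
         + WEIGHTS["inv_ttc"] / max(e.min_time_to_collision_s, 0.1)
         + WEIGHTS["decel"] * e.max_decel_mps2
         + WEIGHTS["disengaged"] * float(e.disengaged))
    return 1.0 / (1.0 + math.exp(-z))

def heuristic_score(e: Event) -> float:
    """Rule layer: fixed bumps for tell-tale patterns, capped at 1.0."""
    score = 0.0
    if e.min_time_to_collision_s < 1.5:  # near-miss timing (illustrative threshold)
        score += 0.5
    if e.max_decel_mps2 > 6.0:           # emergency-level braking (illustrative)
        score += 0.3
    return min(score, 1.0)

def danger_index(e: Event, alpha: float = 0.7) -> float:
    """Weighted blend of classifier and heuristics; within [0, 1] for alpha in [0, 1]."""
    return alpha * classifier_prob(e) + (1.0 - alpha) * heuristic_score(e)

def triage(events, threshold: float = 0.5):
    """Keep events at or above the threshold, most dangerous first."""
    scored = [(danger_index(e), e.event_id) for e in events]
    flagged = [(eid, round(s, 3)) for s, eid in scored if s >= threshold]
    return sorted(flagged, key=lambda pair: pair[1], reverse=True)

SAMPLE_EVENTS = [
    Event("a", min_time_to_collision_s=0.8, max_decel_mps2=7.5, disengaged=True),
    Event("b", min_time_to_collision_s=4.0, max_decel_mps2=1.0, disengaged=False),
    Event("c", min_time_to_collision_s=1.2, max_decel_mps2=3.0, disengaged=False),
]
print(triage(SAMPLE_EVENTS))  # → [('a', 0.939), ('c', 0.742)]
```

Blending a learned probability with explicit rules is one common way to keep a triage score both data‑driven and auditable: the rules catch obvious near misses even where training data is thin, while the classifier handles subtler combinations. Only events above the threshold reach a human reviewer, in ranked order.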

Over the past year the tool has processed more than one million simulated trips and cut manual reviews by forty‑five percent, lifting analyst throughput from roughly twenty replays per hour to thirty while improving collision‑catch precision by fifteen percent and recall by ten percent. Those gains translate directly into saved labour (about 9,800 engineer‑hours in 2024 alone) and, more importantly, into faster iteration cycles for the driving stack. “Safety can’t wait for a human eyes‑on check,” Kulkarni tells AI Journal. “We had to teach the system to fear a crash before it ever gets near the road.”
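Precision and recall are standard classification metrics: precision is the share of flagged replays that really were conflicts, and recall is the share of real conflicts that got flagged. The article does not say whether its fifteen and ten percent gains are relative changes or percentage points, so the minimal sketch below shows both readings. The confusion counts and the 0.80 baseline are hypothetical; only the twenty‑to‑thirty throughput figures come from the article.

```python
def precision(tp: int, fp: int) -> float:
    """Share of flagged replays that really were conflicts."""
    return tp / (tp + fp) if tp + fp else 0.0

def recall(tp: int, fn: int) -> float:
    """Share of real conflicts that the triage step flagged."""
    return tp / (tp + fn) if tp + fn else 0.0

def pct_change(before: float, after: float) -> float:
    """Relative change, in percent."""
    return 100.0 * (after - before) / before

# Throughput figures quoted in the article: about 20 -> 30 replays per analyst-hour.
throughput_gain = pct_change(20, 30)

# Hypothetical confusion counts for one review batch (not from the article).
tp, fp, fn = 90, 10, 30
print(f"precision={precision(tp, fp):.2f} recall={recall(tp, fn):.2f}")
print(f"throughput gain={throughput_gain:.0f}%")

# "Up 15 percent" can mean two different things for a hypothetical 0.80 baseline:
baseline = 0.80
print(f"relative: {baseline * 1.15:.2f}  percentage points: {baseline + 0.15:.2f}")
```

Going from twenty to thirty replays per hour is a 50 percent throughput gain, which is consistent with fewer replays needing a human look at all.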

The academic foundations for MPC pre‑date its industrial rollout. In February 2021 Kulkarni and a collaborator published “Evaluating Autonomous Vehicle Performance: Integrating Data Analytics in Simulated Environments” in the International Journal of Science and Research. The peer‑reviewed paper laid out a taxonomy of safety, efficiency, and adaptability metrics, singling out conflict‑normalized scoring as a way to compare heterogeneous test runs. At the time, mainstream simulators such as SUMO and CARLA offered scenario generation but lacked end‑to‑end conflict triage. By disclosing the core ideas, Kulkarni effectively seeded the field with a blueprint that anyone, from startups to research groups, could adapt.

That openness drew outside attention. SiliconIndia highlighted the framework earlier this year, noting that deployment at one of the sector’s flagship driverless programs slashed manual triage workloads and tightened quality operations across more than a million simulations (“Enhancing Driverless Cars”). The coverage matters because it moves the story out of insider engineering circles and into public view: software originally conceived to protect a single fleet now speaks to societal stakes that dwarf any one company’s business plan.

Those stakes are enormous. Every minute saved during virtual validation accelerates the timetable for safe public launches and functional expansions: robotaxis on city streets, freight carriers on interstates, shuttles in retirement communities. Early studies of fully driverless operations already hint at dramatic injury reductions compared with human drivers. Scale that advantage across millions of vehicles and the payoff is measured in lives, not merely efficiency charts. Conversely, delay the rollout because validation lags, and human‑error crashes will continue to extract their gruesome toll.

Crucially, MPC is not a magic‑number KPI tucked away in an internal spreadsheet. Because the algorithm scores conflicts per mile, it can compare apples to apples across disparate operating domains—sunny desert boulevards, fog‑laden ports, dense urban grids. Regulators who often struggle to interpret raw disengagement counts could, in theory, adopt a standardized MPC threshold for performance reporting. “We wanted a unit that made sense outside the lab,” Kulkarni says. “It’s intuitive: fewer conflicts per mile is good, zero is the goal.”
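The apples‑to‑apples argument rests on normalizing by exposure. The minimal sketch below shows the two equivalent views the article uses: miles per conflict (the fuel‑economy framing, higher is better) and its reciprocal, conflicts per mile (scaled here to 1,000 miles, lower is better). The domain names, mileages, conflict counts, and the reporting threshold are all hypothetical; no published or regulatory MPC threshold is implied.

```python
from dataclasses import dataclass

@dataclass
class DomainRun:
    domain: str
    miles: float      # simulated miles driven in this operating domain
    conflicts: int    # conflicts confirmed by triage

def miles_per_conflict(run: DomainRun) -> float:
    """Fuel-economy style view: higher is better, infinite with zero conflicts."""
    return float("inf") if run.conflicts == 0 else run.miles / run.conflicts

def conflicts_per_1k_miles(run: DomainRun) -> float:
    """Reciprocal view scaled to 1,000 miles: lower is better, zero is the goal."""
    if run.miles <= 0:
        raise ValueError("a run needs positive mileage to be normalized")
    return 1000.0 * run.conflicts / run.miles

# Hypothetical reporting threshold; not a published or regulatory value.
MAX_CONFLICTS_PER_1K = 0.5

def status(run: DomainRun) -> str:
    """Compare a run's normalized rate against the hypothetical threshold."""
    return "ok" if conflicts_per_1k_miles(run) <= MAX_CONFLICTS_PER_1K else "review"

RUNS = [
    DomainRun("desert_boulevard", miles=40_000, conflicts=8),
    DomainRun("foggy_port", miles=5_000, conflicts=4),
    DomainRun("urban_grid", miles=12_000, conflicts=6),
]
for r in RUNS:
    print(f"{r.domain:17s} {miles_per_conflict(r):6.0f} mi/conflict "
          f"{conflicts_per_1k_miles(r):.2f}/1k mi  {status(r)}")
```

In this made‑up data the foggy‑port run logs the fewest raw conflicts yet is the only one flagged for review, because it also covered the fewest miles. Raw counts alone would have ranked it safest.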

Industry peers are taking note. Independent researchers at several U.S. universities now cite the 2021 paper as a reference when benchmarking their own simulation pipelines, and at least one simulation‑software vendor has incorporated conflict‑normalization widgets inspired by the framework. Such ripple effects align with the National Highway Traffic Safety Administration’s ongoing push for data‑driven AV safety cases; automated triage gives the agency richer evidence without inflating test budgets.

None of that public benefit materializes, however, unless the tool stays honest about its blind spots. Kulkarni is the first to acknowledge the limits. MPC presently excels at vehicle‑to‑vehicle and vehicle‑to‑infrastructure conflicts but needs refinement for pedestrian and cyclist nuances. His next milestone is to extend the classifier to so‑called vulnerable road‑user scenarios, a move that will require retraining on entirely new data cohorts as well as recalibrating the risk heuristics. The work is under way, aided by the speed boost the current pipeline already unlocked. “We bought ourselves a runway,” he says. “Now we can invest it back into catching the hardest edge cases.”

The ambition does not stop at single‑company deployments. Because the metric has been published in a peer‑reviewed journal, transportation departments or insurance consortia could adopt MPC as a common yardstick for like‑for‑like risk comparisons across different AV platforms. That, in turn, could inform zoning for driverless freight corridors, urban geo‑fencing maps, or even dynamic speed‑limit adjustments based on real‑time conflict density. For communities wrestling with congestion, emissions, and safety all at once, the prospect of a reliable, shareable danger index is more than an engineering curiosity; it is a planning tool.
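The article does not specify how conflict density would be computed for planning purposes. One hypothetical approach is conflicts per vehicle‑mile on each road segment, with segments above a chosen threshold flagged as candidates for geo‑fencing or speed review. The segment ids, lengths, traversal counts, conflict log, and threshold below are all invented for illustration.

```python
from collections import Counter

def conflict_density(conflict_segments, segment_length_mi, traversals):
    """Conflicts per vehicle-mile for each road segment.

    conflict_segments: one segment id per logged conflict
    segment_length_mi: segment id -> length in miles
    traversals: segment id -> number of vehicle passes over the segment
    Segments with no recorded exposure are skipped rather than scored as safe.
    """
    counts = Counter(conflict_segments)
    density = {}
    for seg, length in segment_length_mi.items():
        vehicle_miles = length * traversals.get(seg, 0)
        if vehicle_miles > 0:
            density[seg] = counts[seg] / vehicle_miles
    return density

def hotspots(density, threshold):
    """Segment ids whose density exceeds the threshold, worst first."""
    return sorted((s for s, d in density.items() if d > threshold),
                  key=lambda s: density[s], reverse=True)

# Hypothetical road network and conflict log.
LENGTHS = {"A": 2.0, "B": 0.5, "C": 1.0}
PASSES = {"A": 1000, "B": 1000, "C": 200}
CONFLICTS = ["A", "A", "B", "B", "B", "C"]

density = conflict_density(CONFLICTS, LENGTHS, PASSES)
print(hotspots(density, threshold=0.002))  # → ['B', 'C']
```

Dividing by vehicle‑miles rather than segment length matters here: segment C sees only one conflict, but so little traffic that its rate is nearly as high as B's.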

Kulkarni’s own motivation keeps circling back to those national fatality charts. “If automation is going to prove it deserves the wheel, we have to spend less time finding the crash in the haystack and more time preventing it,” he says. In a field famous for moon‑shot promises, MPC’s success rests on a more prosaic premise: that the mundane work of labelling, triaging, and iterating can be automated to a point where breakthroughs compound instead of bottleneck. By showing that scale‑ready safety validation is within reach, Kulkarni stakes out a practical path toward the public’s ultimate benchmark—roads where the question “Would you trust your child inside?” no longer needs to be asked.

Reporting by AI Journal newsroom, with additional information from the International Journal of Science and Research and SiliconIndia.