As we head into the second quarter of 2023, macroeconomic conditions are getting tougher. Although the recent Spring Budget announcement included a freeze on energy prices, those prices remain at an all-time high in the UK and continental Europe. Supply chain disruption has shifted from an anomaly to business as usual, and inflation remains a constant barrier for consumers and organizations alike. To meet this disruption head-on, business leaders will need to dig deeper and search for new strategies that allow them to do more with less – arming employees with the ability to act on, and react to, market disruption with the dwindling resources at their disposal.

Given the economic uncertainty, we’ll see more companies accelerate their digital evolution and integrate AI and automation technology as the solution to this efficiency challenge. But with many still wrestling with the foundational data challenges that must be resolved to onboard those tools effectively, a stronger focus on data quality strategies and analytic maturity will be required to succeed.

Our own research, commissioned with IDC, highlighted that nearly 80% of organizations in the UK do not trust their own analytic insights enough to use them for decision-making. This, in many cases, is a hallmark of the relatively low level of analytic maturity seen in businesses across the globe. According to the International Institute for Analytics (IIA) Analytics Maturity Scale, the majority of organizations sit at just a 2.2 out of 5 – usually defined as the ‘spreadsheet stage’. If most of the business intelligence available to business leaders is delivered through manual spreadsheets, then the reason for low trust levels in those insights becomes more apparent.

While AI capabilities and applications have certainly advanced in recent years, a wider market understanding of what AI actually is has not. AI is a tool like any other – it lets us do more with less. It allows us to make more intelligent decisions. Without human expertise and knowledge guiding AI development and usage, the end result is an increased risk of AI models trained with inaccurate, biased, or misunderstood data sets.

The battle between legacy strategy and future focus

At its heart, the global business landscape is caught in a battle between the legacy strategies of yesteryear and the impactful strategies of today. The newer strategies may work as intended if the right ingredients are secured – namely a strong foundation of data defined by flexibility, accessibility, and effective governance – but they are also entirely dependent on the upskilled knowledge workers expected to actually use and interact with AI and automation tools.

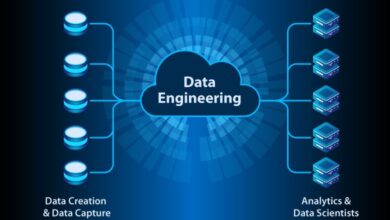

Data has become more and more available in business today. While the ability to perform analytics on that data has also gotten easier over time, the number of companies striving to do so has not kept pace with the demand for analytics-generated intelligence. A world where data is readily available and analytics is easy to perform is the future that ‘digital native’ start-ups already operate in. It’s also the future that legacy businesses strive towards.

Business leaders know that AI and automation are the solution to doing more with less. Despite this, their roadmaps from Point A to Point B are missing a number of the foundational steps needed to ensure that good-quality data feeds these AI models. Look at the most successful companies in the world. Their unique selling proposition (USP) is not cash reserves or real estate – it’s their data products and foundational ability to collect, analyze and act on data at scale, and use it to make better, more intelligent decisions.

AI: a human-centered tool with wide-reaching potential

Whether the trigger is a pandemic, a natural disaster, ransomware, or a financial investment, the ability to anticipate where the next crisis may hit, and what effect it may have, always depends on one thing – and it’s not a crystal ball. It requires the capability to leverage data with swiftness and skill. Good information, good data, and the capacity to derive good decisions with analytics are more critical than ever to predicting risk and implementing forward-thinking strategies to mitigate its impact.

In delivering this AI future, one thing is clear. Layering on technology after technology alone will not deliver on the need for faster insights at scale. Effective use of any technology is always dependent on the human factor. In many cases, that can be a net benefit – allowing human ingenuity to rise to the forefront of decision-making. In other cases, as we see with data science teams today, the human factor can also act as a bottleneck to business value. Without the ability to scale teams effectively to match the growing business needs, data science teams find themselves overwhelmed and burned out due to their excessive workloads.

With an increased requirement to analyze the data held by businesses, and the inability to scale data science teams across businesses to actually deliver on those insights, the shift towards efficiency tools like AI models becomes clearer from a leadership perspective.

Mitigating AI bias to deliver the business strategy

Data science and AI ethics are inextricably intertwined, yet data ethics has not historically secured the attention it warrants within businesses. Rather than relying on a small team of data experts, businesses must instead ensure people with a diverse range of perspectives and lived experiences are included in any AI project – delivering quality assurance at the source of said data.

By bringing diverse and upskilled knowledge workers on board at that foundational data level, businesses can ensure that the people closest to the problem – and closest to the dataset – are best positioned to highlight any errors, anomalies, or misunderstandings within that data. This results in a more robust strategy for flagging potentially biased datasets before they are fed into an AI model, where that bias would become amplified.

The democratization of AI-driven decision intelligence must be underpinned by a strong foundation of data literacy, as well as proper training and upskilling to ensure knowledge workers can get the most value out of these tools. Humans and the ethical use of data must be at the center of any AI innovation. Otherwise, organizations risk wasting resources by creating flawed AI products which generate biased, inaccurate results. Data scientists may lack the direct domain knowledge or personal experience to recognize inconsistencies or biases within datasets, so organizations must focus on creating a workforce that is data literate and aware of data ethics.