Many people, including OpenText CEO Mark Barrenechea, now consider data to be the lifeblood of modern business. Without data, enterprises risk missing out on many of the major competitive advantages of using AI, which has the capacity to turn what might seem a mundane dataset into a goldmine of invaluable strategic insights.

For this reason, there is a big incentive for companies to store all the data they can get their hands on, even if they have not yet identified a use-case for it. But this approach can be costly in terms of both money and energy consumption.

This article examines the pros and cons of limitless data storage. In addition to this, I consider the challenges faced by some industries in their acquisition of data, the growing demands on data centres/storage systems throughout the world, and the benefits of creating a ‘data ecosystem’.

Reasons to delete data

But before we find out whether it could be a good idea to store data forever, we first need to understand the reasons why people delete data in the first place.

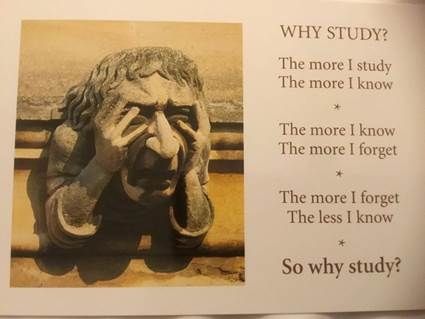

The most obvious reason is to free up space by getting rid of information that is no longer relevant, interesting, or accurate. We do this all the time within our own brains, which are constantly consuming content. Indeed, the function of memory relies just as much on our ability to forget as on our ability to remember. If we didn’t forget things, our brains would be jammed so full of irrelevant and conflicting pieces of information that they would be incredibly slow processors, and that’s a best-case scenario.

This limitation of human cognition is comically expressed by an epigram found on a set of Oxford University postcards:

Of course, the existential despair expressed here seems to overlook the fact that studying usually involves a lot more than just storing new facts in your brain. Nevertheless, it raises the key point that as humans, we can only store a certain amount of information in our brains, and so we should think carefully about which information that should be.

But this is not the case for artificial intelligence, thanks to the evolving capabilities of machine learning. The memory capacity and GPU power available to data intelligence systems are growing exponentially, and these systems can already ingest and process a huge amount of data in a short amount of time. OpenText, for example, promises that it can have up to 1 million documents fully processed and cloud-ready for each of its customers in as little as 2 weeks with its ‘earn your wings’ programme.

As data storage capabilities continue to grow, we face the question: just because we can store huge new amounts of data artificially, does that mean we should? In a world that is already crammed with information, is there much value to be gained by storing more and more of it? Would it not serve us better to focus technology on optimizing the lifecycle of data, ensuring that the right type of data is procured via quality collection systems, transmitted to its most relevant applications, and then deleted (or at least condensed) once it has served its purpose?

The second big reason to delete data is to get rid of information that we don’t like, that incriminates us, or that puts us at some disadvantage. For example, a common cognitive response to trauma is to forget, i.e. to delete the information that is causing us harm. But while this can be temporarily beneficial as a survival mechanism, it can have negative consequences further down the line, such as dissociation and/or identity crises.

Therapies such as EMDR (eye movement desensitization and reprocessing) aim to help individuals gain a more unified and cohesive sense of self by processing their memories of traumatic events in a safe and beneficial way. This evidences the importance within the human brain of being able to access hidden information which nevertheless still affects our behaviour and experiences.

Similarly, it is important that we are able to access the data that is affecting the behaviour and outputs of an AI system. This is especially the case given growing perceptions of AI as a ‘black box’ which we are required to trust blindly, since we don’t have access to the data upon which its outputs are based.

Open-source models, such as Meta’s Llama and those from French start-up Mistral, aim to counter this lack of transparency by making their source code publicly available and customizable. But even in these cases, the data used to train the model typically isn’t publicly released. This is due to competition in the field and the ongoing struggle that developers face in acquiring data, especially with the added complication of now-ubiquitous copyright infringement claims against developers.

Indeed, in recognition of data as one of the biggest assets that gives AI models their real value, we are seeing the emerging trend of enterprises creating customized versions of LLMs with their own proprietary, company-specific data. This trend is also recognised by many tech industry experts, including Mark Barrenechea, who forecast in his OpenText Europe keynote speech that we will see thousands of LLMs emerging in the near future, each one customized for specific use-cases. Thus, the acquisition and retention of company-specific data is only going to become more important for enterprises.

Struggles in acquiring data that has real value

Another reason that companies might feel a big inclination to hold on to all the data they can is the difficulty of acquiring data in the first place. Given the centrality of data to modern business, this can be a significant barrier to successful adoption of AI technology. Without data, companies are left unable to reap any real benefits from software solutions. Just a brief glance through the websites of any of the major software vendors will show you how integral data is to digital operations; it’s the key to unlocking the potential of AI in essentially any context. Salesforce express this concisely in the marketing for their latest offering, Einstein 1:

CRM + AI + Data + Trust. Explore the genius of Einstein 1.

But acquiring data is no easy feat, despite what the marketing suggests. A recent AI Journal poll found that a majority of business people (86%) had encountered difficulties in acquiring data that could be used for strategic business insights.

Acquiring the right form of data is challenging for some industries in particular. In a round table discussion at Fortune’s Brainstorm AI conference in London on 16th April, National Grid Partners President Steve Smith highlighted the struggles that the utilities sector faces in acquiring data that can be utilized for predictive insights.

“We work in a conservative industry, we’re targeted against 99.999% reliability. One of the key challenges we have is… actually the absence of data. For example, one of the holy grails of utilities is predictive maintenance. At the moment, the maintenance on our assets is largely driven by ageing condition [of equipment], and we want to be able to do clever stuff in this area. We’ve got this great company that can do predictive maintenance, and they said, “just give us your data and we can do amazing things”. But there’s the problem, because the sort of data they need is not the sort of data that typically we’ve got. So then when you get into discussions around ROI, you’re having to get over two hurdles. One, whether the algorithm is going to work. And two, you’ve got to spend some serious money to actually get the data.”

Steve Smith, President of National Grid Partners

But Smith points out that this is not the case in all industries. In retail banking, which he used to work in, he says “we would have data coming out of our ears”. This points to a current imbalance in the data market, which will have a big impact on where AI is actually able to provide real business gains. Indeed, while it is recognised that more conservative and/or heavily regulated industries such as utilities and the public sector are slower and more cautious in their adoption of new technologies, their limited access to and use of the required forms of data could prove to be a key barrier preventing them from gaining significant ROI from their AI investments.

On the one hand, these industries have more reason to hang onto all the data they have, given the expense and difficulty of acquiring data. But on the other hand, storing data is in itself an expense, and there seems little point in storing data which cannot be utilized for any clear gain.

The cost of unlimited data storage

Another aspect of limitless data storage that merits consideration is its energy consumption. Given the virtual nature of cloud storage and the remote locations of most data centres, it is easy to forget the amount of maintenance that storing data requires. Cloud or colocation data centres require high-security protection, state-of-the-art cooling/ventilation systems, uninterruptible power supplies, and backup generators.

Currently, data centres account for approximately 1.5% of global energy consumption. Despite progress in energy efficiency, their net energy consumption is expected to grow due to the emerging technologies of blockchain, cloud gaming, VR/AR applications, and ML. So, while storing data is in itself not one of the most energy-consuming applications of virtual technology, it remains a contributing factor to growing energy consumption from data centres. And if the data is redundant, why add to this demand?

The approach of deleting redundant systems/information has already been taken with other technologies. For example, fixed mobile networks and legacy 2G and 3G networks have now been decommissioned so as to reduce mobile network energy consumption. Decommissioning these networks is neither fast nor simple. Factors such as competition regulation, consumer protection, and the maintenance of critical services must be addressed in order to ensure that decommissioning does not negatively impact the service quality of a network provider or compromise any consumer data.

The case is similar for data. Just like decommissioning mobile networks, the process of deleting data will require careful consideration to ensure that consumer data is protected, that the quality of LLMs is maintained, and that sufficient records of training data are kept in order to provide transparency and accountability for an AI system. But our ability to delete data that no longer has value is an important step in streamlining our progression into the future and ensuring that the world is not burdened with the unnecessary demands of storing redundant information.

Creating a functional data ecosystem

Before the digital age, the cost of storing information was even higher. Trees had to be felled for paper, ink had to be procured from plants and animal fat, and scribes/printing press operatives had to spend hours copying out page after page of text. These time-consuming and costly processes acted as a natural quality control mechanism, restricting the amount of information that would be stored to just the crème de la crème of content.

As the inheritors of all the surviving information, we can appreciate the benefit of this funnelling of information because it means that we spend less time sifting through useless, mundane, and repetitive content, and more time consuming works that were considered to make an important contribution to culture and progress.

With the endless data storage capabilities we now have through virtual technology and cloud infrastructure, we have lost this inherent and natural quality control mechanism for content. But perhaps we should take a lesson from our ancestors here, and preserve only the data that we think has value for current or future applications.

The key question for many businesses here, though, is how to decide whether data has value or not (particularly given the uncertainty over what the future applications of data could be). This is where a graded approach comes in handy, utilising AI to analyse the accuracy, relevance, and potential applications of data at various points of its lifecycle.

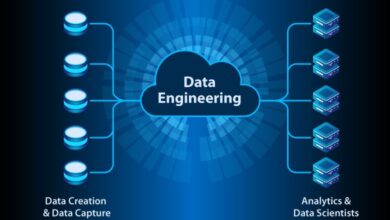
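To make the idea of a graded approach more concrete, here is a minimal sketch in Python. Everything in it is an assumption made for illustration: the `DatasetRecord` fields, the 0 to 1 accuracy and relevance scores (which in practice might come from an AI-driven analysis), the thresholds, and the three grades "keep", "condense", and "delete". It is not a description of any vendor's product.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class DatasetRecord:
    """Hypothetical summary of one dataset at a point in its lifecycle."""
    name: str
    last_accessed: date
    accuracy: float   # assumed score between 0 and 1
    relevance: float  # assumed score between 0 and 1


def grade(record, today, stale_after_days=365,
          keep_threshold=0.7, delete_threshold=0.4):
    """Return 'keep', 'condense', or 'delete' for a dataset.

    The thresholds are illustrative defaults, not recommendations.
    """
    score = (record.accuracy + record.relevance) / 2
    stale = (today - record.last_accessed).days > stale_after_days

    if score >= keep_threshold and not stale:
        return "keep"      # valuable and still in use
    if score < delete_threshold and stale:
        return "delete"    # low value and untouched for a long time
    return "condense"      # everything in between is summarised or archived


today = date(2024, 6, 1)
fresh = DatasetRecord("sensor_readings", date(2024, 5, 1), 0.9, 0.8)
old = DatasetRecord("legacy_logs", date(2021, 1, 1), 0.2, 0.3)
print(grade(fresh, today), grade(old, today))
```

The middle grade matters here: data that is neither clearly valuable nor clearly redundant is condensed rather than deleted outright, which leaves room for uncertainty about future applications.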

AI offers a variety of automated services that can help us decide if data still has value and is worth keeping around. Automated data profiling and lineage tracking, for example, can provide key insights into the quality and use-cases for a dataset. They also provide visibility into how raw data has been transformed into different forms via data pipelines. These services give data engineers and analysts a better idea of the average amount of value provided by different types of datasets, and the best use-cases for various forms of data. This fosters the creation of a more functional data management ecosystem, based on automated processes which transform, recycle, and ultimately delete data.
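As a rough illustration of what automated data profiling involves, the sketch below computes two simple quality signals for a small table: how complete each column is, and what share of rows are exact duplicates. The `profile` function and the sample rows are hypothetical, and real profiling tools report far more than this. Lineage tracking is not covered here.

```python
def profile(rows):
    """Profile a list of dict rows.

    Returns (per-column report, duplicate-row ratio). Values must be
    hashable; None and empty strings count as missing.
    """
    total = len(rows)
    columns = sorted({key for row in rows for key in row})

    report = {}
    for col in columns:
        present = [row.get(col) for row in rows
                   if row.get(col) not in (None, "")]
        report[col] = {
            "completeness": len(present) / total if total else 0.0,
            "distinct": len(set(present)),
        }

    # Rows are duplicates when every key/value pair matches exactly.
    unique_rows = {tuple(sorted(row.items())) for row in rows}
    duplicate_ratio = 1 - len(unique_rows) / total if total else 0.0
    return report, duplicate_ratio


rows = [
    {"id": 1, "temp": 20.5},
    {"id": 2, "temp": None},
    {"id": 1, "temp": 20.5},  # exact duplicate of the first row
    {"id": 3, "temp": 21.0},
]
report, duplicate_ratio = profile(rows)
print(report["temp"]["completeness"], duplicate_ratio)
```

A dataset with low completeness and a high duplicate ratio is a natural candidate for condensing or deletion, whereas a complete and unique one is more likely to justify its storage cost.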

Furthermore, AI can help businesses source data, reducing the incentive for struggling industries to retain redundant forms of data. Generative AI in particular is useful in this aspect, enabling businesses to create synthetic data from just a small amount of actual data, or simulate realistic environments from which theoretical/hypothetical data can then be collected.
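The principle of creating synthetic data from a small amount of actual data can be shown with a deliberately simple stand-in. The sketch below is not generative AI: it fits a normal distribution to a handful of real measurements and samples new values from it. The `synthesize` function, the sample readings, and the assumption that the data is roughly normally distributed are all illustrative.

```python
import random
import statistics


def synthesize(sample, n, seed=0):
    """Draw n synthetic values from a normal distribution fitted to sample.

    Assumes the real data is roughly normal; a generative model would
    learn a much richer distribution than a mean and standard deviation.
    """
    rng = random.Random(seed)          # seeded for reproducibility
    mu = statistics.mean(sample)
    sigma = statistics.stdev(sample)   # needs at least two data points
    return [rng.gauss(mu, sigma) for _ in range(n)]


real_readings = [20.1, 19.8, 20.5, 21.0, 20.3]  # hypothetical measurements
synthetic = synthesize(real_readings, 1000)
print(len(synthetic), round(statistics.mean(synthetic), 1))
```

Synthetic values produced this way can only reflect the patterns present in the original sample, so the quality of the small real dataset still matters.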

Overall, our information-overloaded world is set to benefit from information management strategies which optimize the natural lifecycle of data, rather than those which prioritize storing all data regardless of its relevance and diminishing applications. The opposing yet interdependent functions of remembering and forgetting in human memory remind us that optimal cognition and intelligence do not mean just storing ever-increasing amounts of information. Instead, they come from an optimal balance of acquiring, storing, and transforming valuable information, and deleting redundant and stagnant data.