AI systems are only as good as the data that trains them. Models from chatbots to image recognition tools depend on high-quality, diverse datasets, many of them gathered from across the web. But in practice, accessing that data is far from simple.

Researchers often face region locks, IP bans, throttled requests, and inconsistent availability across markets. Public datasets alone rarely reflect the diversity of the real world — and without global data, AI models risk learning only part of the story.

In a world where information is fragmented across borders, platforms, and policies, residential proxies have quietly become the unseen infrastructure powering ethical, reliable, and scalable AI research.

They don’t replace algorithms or training pipelines; they make them possible.

The Data Access Challenge in AI Research

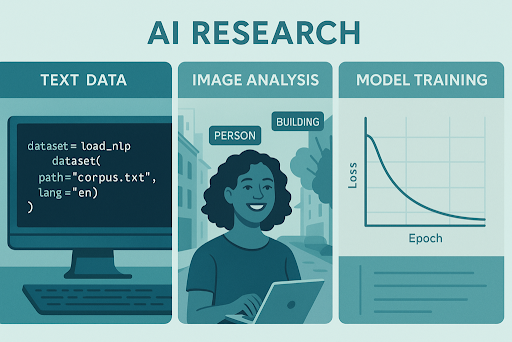

Behind every AI breakthrough lies an enormous amount of data — reviews, news articles, product listings, social posts, images, and metadata collected from the web. These datasets fuel model training, validation, and continuous improvement.

But obtaining this data isn’t as straightforward as it sounds.

Many websites limit request frequency or block automated crawlers altogether. Region-based restrictions add another layer of complexity — what’s accessible in one country may be hidden behind firewalls or legal filters in another. E-commerce platforms, media archives, and financial portals are particularly strict about inbound traffic and repetitive queries.

Even when data is technically public, it’s not always reachable. Unstable connections, high latency, or inconsistent API responses can make large-scale data gathering nearly impossible without the right infrastructure.
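Unstable connections are usually absorbed with retries rather than treated as fatal. The sketch below shows exponential backoff with random jitter in plain Python. `fetch_with_backoff` and the caller-supplied `fetch` callable are hypothetical names, and the default limits (five attempts, a 30-second cap) are assumptions to tune per site.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def fetch_with_backoff(
    fetch: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 1.0,
    max_delay: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fetch(), retrying network errors with exponential backoff."""
    for attempt in range(max_attempts):
        try:
            return fetch()
        except OSError:
            # urllib's URLError, ConnectionError and TimeoutError all subclass OSError.
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the last error
            # The delay ceiling doubles each attempt (base * 2^attempt), capped at
            # max_delay; sleeping a random fraction of it keeps many clients from
            # retrying in lockstep.
            delay = min(max_delay, base_delay * 2 ** attempt)
            sleep(random.uniform(0, delay))
    # Only reached when max_attempts < 1, because the loop never ran.
    raise RuntimeError("max_attempts must be at least 1")
```

Capping both the attempt count and the delay keeps a struggling site from being hammered by retries, and injecting `sleep` lets the logic be tested without actually waiting.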

For AI researchers, data access is not just a technical hurdle — it’s an ethical and infrastructural challenge. It raises questions about fairness, transparency, and the hidden biases introduced when only certain regions or sources are represented in a dataset.

Why Residential Proxies Solve These Problems

When AI researchers need to collect or refresh large datasets, reliability matters as much as accuracy. That’s where residential proxies come in.

A residential proxy routes data requests through IP addresses that internet service providers have assigned to real household devices, instead of through datacenter servers. To websites and APIs, these connections look like ordinary traffic from everyday devices. This simple shift makes a big difference: fewer blocks, fewer CAPTCHAs, and more consistent access to public information.

For AI workflows that depend on continuous data retrieval or regional sampling, this distinction is critical. Residential proxies enable researchers to:

- Simulate real-world traffic, using ISP-assigned residential IPs that resemble ordinary browsing.

- Filter connections by country, city, or ISP, allowing region-specific dataset collection.

- Distribute requests across global endpoints, improving speed and reducing load concentration.

- Reduce geographic sampling bias, collecting comparable data from each target region for more balanced coverage.
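Country filtering and request distribution, as described in the list above, can be sketched with Python's standard `urllib`. Providers differ in how they expose geo-targeting; a common pattern is to encode it in the proxy username, so this sketch assumes a hypothetical `<user>-country-<cc>` convention and a placeholder gateway `gateway.example.com:8000`. Neither is Gonzo Proxy's documented format, and `proxy_url`, `round_robin_plan`, and `opener_for` are illustrative helpers.

```python
import itertools
import urllib.request
from typing import List, Sequence, Tuple
from urllib.parse import quote


def proxy_url(user: str, password: str, country: str,
              host: str = "gateway.example.com", port: int = 8000) -> str:
    """Build a proxy URL with the target country encoded in the username.

    HYPOTHETICAL format: the "<user>-country-<cc>" username and the gateway
    host/port are placeholders; use your provider's documented scheme.
    """
    username = f"{user}-country-{country.lower()}"
    # Percent-encode credentials so characters like '@' or ':' cannot break the URL.
    return f"http://{quote(username, safe='')}:{quote(password, safe='')}@{host}:{port}"


def round_robin_plan(urls: Sequence[str],
                     countries: Sequence[str]) -> List[Tuple[str, str]]:
    """Assign URLs to countries in rotation so each region gets an even share."""
    return list(zip(urls, itertools.cycle(countries)))


def opener_for(proxy: str) -> urllib.request.OpenerDirector:
    """Return a urllib opener that sends both HTTP and HTTPS through `proxy`."""
    handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
    return urllib.request.build_opener(handler)
```

Under these assumptions, `opener_for(proxy_url("alice", "s3cret", "de")).open(url, timeout=30)` would fetch `url` through a German exit. A fixed rotation is the simplest way to keep per-region sample sizes equal; weighting it is a small extension if some regions should be over- or under-represented.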

In essence, proxies don’t just hide requests — they make data collection smoother, fairer, and more accurate.

Learn more in Gonzo Proxy’s detailed guide on how residential proxies work.

Use Cases: How AI Teams Leverage Proxies

Residential proxies have quietly become part of the data backbone for many AI teams — from academic labs to enterprise-scale R&D departments. Here’s how they’re used in real workflows across different domains.

1. Dataset Collection for NLP and LLMs

Large language models depend on text corpora that represent different cultures, dialects, and regions. Residential proxies allow researchers to gather content from multiple countries without hitting regional restrictions or creating duplicate records.

They help maintain linguistic diversity in datasets, so models can learn how language actually varies across the world.
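Merging crawls from several countries tends to produce repeated text, such as syndicated news or mirrored listings, so text corpora are usually deduplicated before training. Below is a minimal sketch of exact-match deduplication after Unicode and whitespace normalization. The record layout (dicts with `text` and `region` keys) and the function names are assumptions for illustration.

```python
import hashlib
import re
import unicodedata
from typing import Dict, List


def content_key(text: str) -> str:
    """Hash a normalized form of the text so trivially different copies collide."""
    normalized = unicodedata.normalize("NFKC", text).casefold()
    normalized = re.sub(r"\s+", " ", normalized).strip()  # collapse whitespace runs
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def deduplicate(records: List[Dict[str, str]]) -> List[Dict[str, str]]:
    """Keep the first record for each distinct normalized text, preserving order."""
    seen = set()
    unique = []
    for record in records:
        key = content_key(record["text"])
        if key not in seen:
            seen.add(key)
            unique.append(record)
    return unique
```

This only catches copies that are identical after normalization; lightly edited reposts need fuzzier near-duplicate methods such as MinHash, which are beyond this sketch.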

2. Computer Vision Training

Image-based AI models need diverse visual inputs — from retail catalogs to public archives. Collecting those images at scale can quickly trigger anti-bot filters or IP bans.

Routing through residential IPs allows teams to collect metadata and imagery smoothly, improving the diversity and realism of training sets while reducing collection downtime.

3. Sentiment and Market Analysis

AI tools analyzing product reviews, news tone, or social sentiment rely on live, localized data. Proxies make it possible to pull information from multiple countries and languages without missing key regional perspectives — a critical advantage for global brands and researchers tracking real-time trends.

4. Academic and Corporate Research

Universities, AI startups, and innovation labs often need to access open web data for non-commercial studies. Used responsibly, residential proxies provide stable, non-invasive access to those datasets, minimizing blocks while staying within public data usage norms.

In short, proxies don’t just mask connections — they make large-scale AI data operations possible.

Why Gonzo Proxy Fits the AI Research Workflow

When AI teams operate at scale, connectivity becomes more than a convenience — it’s a form of infrastructure. That’s where Gonzo Proxy aligns naturally with the demands of research environments.

The platform provides access to over 20 million real residential IPs across 195 countries, each filtered by latency, bandwidth, and reliability. Its adaptive routing system continuously learns from previous connection histories, selecting the fastest and most stable path for every new request — a key advantage for workflows that depend on uninterrupted data streams.
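Routing by past connection history can be illustrated with an exponentially weighted moving average (EWMA) of observed latency per endpoint. This is a generic technique swapped in for illustration, not Gonzo Proxy's actual routing algorithm, which the article does not describe; `LatencyRouter`, the smoothing factor, and the failure penalty are all assumptions.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class LatencyRouter:
    """Pick the endpoint with the lowest smoothed latency.

    Illustrative only: an EWMA of observed latencies, not any provider's
    actual routing algorithm.
    """
    alpha: float = 0.3             # weight of the newest sample (0 < alpha <= 1)
    failure_penalty: float = 10.0  # seconds recorded when a request fails
    ewma: Dict[str, float] = field(default_factory=dict)

    def record(self, endpoint: str, latency: Optional[float]) -> None:
        """Fold one observation into the endpoint's average; None means failure."""
        sample = self.failure_penalty if latency is None else latency
        prev = self.ewma.get(endpoint)
        if prev is None:
            self.ewma[endpoint] = sample  # first observation seeds the average
        else:
            self.ewma[endpoint] = self.alpha * sample + (1 - self.alpha) * prev

    def choose(self, endpoints: List[str]) -> str:
        """Try unmeasured endpoints first, then the one with the lowest average."""
        untried = [e for e in endpoints if e not in self.ewma]
        if untried:
            return untried[0]
        return min(endpoints, key=lambda e: self.ewma[e])
```

The smoothing factor `alpha` trades responsiveness against stability. A production router would also re-probe slow or penalized endpoints occasionally, since this sketch never sends new traffic to an endpoint once others look faster.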

From a technical perspective, Gonzo Proxy integrates seamlessly with standard research toolkits:

- Compatible with Python, R, Node.js, and any REST-based API framework.

- Auto-scaling capabilities adjust bandwidth dynamically during heavy training or scraping phases.

- A built-in analytics dashboard lets teams monitor usage patterns, latency, and traffic costs in real time.

For research teams, this means one less thing to worry about: reliable connectivity that scales with their projects and stays out of the way while they focus on everything else.

Ethics and Compliance: Responsible Data Gathering

Collecting data for AI research is not just about access — it’s about responsibility. Every dataset has an origin, and every connection leaves a footprint. As AI systems become more powerful, the methods used to obtain their training data face growing scrutiny from regulators, institutions, and the public.

Using residential proxies responsibly helps researchers balance accessibility with accountability. Combined with moderate request rates and respect for each site’s rules, they support data gathering from open sources without overloading servers or compromising site integrity. The goal isn’t to hide identity; it’s to maintain stability, fairness, and compliance while building global datasets.
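A proxy does not change what a site permits, so basic courtesy checks still apply. This minimal sketch uses Python's standard `urllib.robotparser` to skip paths that robots.txt disallows and to honor its `Crawl-delay`, falling back to an assumed one-second default. `polite_crawl` and the injected `fetch` callable are hypothetical names. In practice `RobotFileParser.set_url()` and `read()` can download the file; here the lines are passed in directly so the logic stays testable.

```python
import time
import urllib.robotparser
from typing import Callable, Dict, Iterable, Sequence


def polite_crawl(robots_lines: Iterable[str], user_agent: str,
                 urls: Sequence[str], fetch: Callable[[str], str],
                 default_delay: float = 1.0,
                 sleep: Callable[[float], None] = time.sleep) -> Dict[str, str]:
    """Fetch only robots.txt-allowed URLs, pausing between requests."""
    parser = urllib.robotparser.RobotFileParser()
    parser.parse(robots_lines)  # parse already-downloaded robots.txt lines
    delay = parser.crawl_delay(user_agent)
    if delay is None:
        delay = default_delay  # assumed fallback when no Crawl-delay is given
    results: Dict[str, str] = {}
    for url in urls:
        if not parser.can_fetch(user_agent, url):
            continue  # disallowed: skip it rather than retry from another IP
        if results:
            sleep(float(delay))  # wait between consecutive requests
        results[url] = fetch(url)
    return results
```

Skipping disallowed paths, rather than routing around them through different IPs, is what keeps proxy use on the responsible side. robots.txt is one signal among several; a site's terms of service still apply.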

Gonzo Proxy adheres to international information security and data protection frameworks, including ISO/IEC 27001:2017, the standard for information security management, which covers the security controls and risk management of its infrastructure. Combined with GDPR-aligned policies, this gives research teams confidence that their data workflows are both effective and compliant.

Ethical AI begins long before model training — it starts with how the data is collected, stored, and transmitted.

The Future: Data Infrastructure for the AI Age

As AI becomes more dependent on real-world data, the networks that deliver that data will define the limits of innovation. Algorithms can only go as far as the information they’re trained on — and that information must be gathered, filtered, and transmitted through reliable channels.

Residential proxies are quietly evolving from niche utilities into a cornerstone of modern AI infrastructure. They don’t just support model training or global analysis — they make these processes sustainable, secure, and scalable. In a sense, they’ve become the connective tissue between human knowledge and machine understanding.

In the end, smarter data access leads to smarter AI — and the infrastructure that enables it will shape not only how machines learn, but how the world continues to innovate.

Key Takeaways

- AI systems depend on consistent, unbiased data sources.

Without reliable access to diverse datasets, even the most advanced models risk learning from incomplete or skewed information.

- Residential proxies help researchers access that data safely and reliably.

They provide stability and geographic flexibility and, when used responsibly, support compliance, all key factors for scalable, ethical AI development.

- Infrastructure matters as much as algorithms.

Tools like Gonzo Proxy make high-quality data access possible, empowering AI teams to build smarter systems with confidence.