The Changing Landscape of AI Training

AI model training keeps changing, and reinforcement learning (RL) has become one of its most active and consequential areas. In simple terms, RL teaches a system through trial and error, much as a person learns a game by trying different moves and seeing whether they win or lose. Instead of passively absorbing data, RL systems make decisions, observe the outcomes, and improve over time. This approach does not come cheap, however. Leading frontier labs such as OpenAI, Anthropic, and DeepMind spend hundreds of millions of dollars each year on RL-based training to improve LLM performance, a cost driven by enormous amounts of compute, complex engineering, and the human-curated data needed to make these models more reliable.

A Mission to Democratise RL

To unpack what this means for the broader AI community, we spoke with Vignesh Ramesh, an applied AI engineer at a leading AI startup and an independent researcher. Vignesh has made it his mission to democratise reinforcement learning—making it simpler, more accessible, and open to anyone with basic coding knowledge who wants to learn, replicate, and build upon it.

From Complex Ideas to Approachable Insights

Through his writing on rldiary.com and his talks at leading events in London, Vignesh has become recognised as one of the foremost voices in RL training, distilling complex ideas into approachable insights. He explains RL through a simple analogy: “Imagine animals in a lab setting, where good behaviour earns rewards and mistakes bring penalties—except here, the ‘animal’ is an AI model learning how to make better decisions with every attempt.”

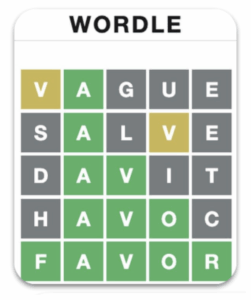

Wordle-GRPO: A Blueprint for Accessible RL

Vignesh’s open-source reinforcement learning pipeline, Wordle-GRPO, exemplifies his philosophy of lowering the barriers to RL experimentation. The project builds a reinforcement learning loop around the popular word game Wordle, creating a training setup where models learn language reasoning through task-oriented rewards and iterative feedback cycles. Remarkably, even with modest compute—just a few hours of training on a single GPU—the Wordle-GRPO system delivers significant improvements in performance.
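To make the idea of task-oriented rewards concrete, here is a minimal sketch of how a Wordle environment could score a single guess. It is an illustration only and is not taken from the Wordle-GRPO code: the function names `wordle_feedback` and `turn_reward`, and the reward weights, are assumptions chosen for this example.

```python
from collections import Counter


def wordle_feedback(guess: str, target: str) -> str:
    """Return per-letter feedback: G = right spot, Y = wrong spot, - = absent."""
    feedback = ["-"] * len(guess)
    unmatched = Counter()

    # First pass: mark exact matches and count target letters left unmatched.
    for i, (g, t) in enumerate(zip(guess, target)):
        if g == t:
            feedback[i] = "G"
        else:
            unmatched[t] += 1

    # Second pass: mark misplaced letters, consuming the unmatched counts
    # so that repeated letters are not over-credited.
    for i, g in enumerate(guess):
        if feedback[i] == "-" and unmatched[g] > 0:
            feedback[i] = "Y"
            unmatched[g] -= 1

    return "".join(feedback)


def turn_reward(guess: str, target: str) -> float:
    """Hypothetical shaped reward: full credit for solving, partial otherwise."""
    if guess == target:
        return 1.0
    fb = wordle_feedback(guess, target)
    # Assumed weights: greens count more than yellows.
    return 0.1 * fb.count("G") + 0.05 * fb.count("Y")


print(wordle_feedback("speed", "abide"))
print(turn_reward("speed", "abide"))
```

In a training loop, the model's guess would be parsed from its output, scored with a function like `turn_reward`, and the feedback string appended to the prompt for the next turn. A real pipeline would likely also penalise invalid or malformed guesses.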

What makes this work stand out is not just the technical results, but the accessibility: all the code, methodology, and benchmarks are open source, enabling learners, researchers, and hobbyists to replicate the process at very low cost. The training pipeline of Wordle-GRPO is directly transferable to agentic environments where agents take actions, observe outcomes, incorporate feedback, and iterate until completion.

The $100 Agents Project

The Wordle-GRPO training pipeline is part of a wider initiative that Vignesh leads called the $100 Agents project. In this project, Vignesh and a small group of RL researchers fine-tune task-specific agents with supervised learning, reward modelling, and reinforcement learning, all within a compute budget of just $100. The pipeline runs on a distributed training framework across multiple GPUs and combines synthetic data generation, preference modelling for automated data curation, and GRPO reinforcement learning, techniques normally reserved for deep-pocketed labs. The aim is to prove that cutting-edge results can be achieved on a shoestring budget.
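For readers unfamiliar with GRPO (Group Relative Policy Optimization), its central step is to score each sampled completion relative to the other completions sampled for the same prompt, rather than against a learned value function. The sketch below shows only that advantage calculation. The function name `group_relative_advantages` and the choice of population standard deviation are assumptions made for illustration; it is not the project's code.

```python
from statistics import mean, pstdev
from typing import List, Sequence


def group_relative_advantages(
    rewards: Sequence[float], eps: float = 1e-6
) -> List[float]:
    """Normalise rewards within one group of completions for the same prompt.

    Each advantage is (reward - group mean) / (group std + eps).
    Population std is an assumption here; implementations differ.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


# Four completions for one prompt: two solved the task, two did not.
advantages = group_relative_advantages([1.0, 0.0, 0.0, 1.0])
print(advantages)
```

Completions that beat the group average receive a positive advantage and are reinforced, while those below average are discouraged. When every completion in a group earns the same reward, all advantages are zero and that prompt contributes no learning signal.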

The project has already delivered significant improvements in task completion rates on niche domains such as TauBench Retail. The initiative also sparked wide interest when Vignesh presented it at Stanford’s AI Professional Programme Show and Tell series, where discussions centred on creating more blueprints like these for making RL pipelines more efficient, replicable, and transparent.

Tackling One of RL’s Hardest Problems

Beyond his open-source contributions, Vignesh has also pioneered a proprietary algorithm that automatically annotates multi-turn conversations at scale for reinforcement learning. One of the hardest problems in training multi-turn conversational agents is that while success or failure can be observed at the end of a task, it is often unclear where exactly the agent went wrong in its long chain of decisions. Identifying these failure points is critical to teaching AI models how to improve, but doing so has traditionally required intensive manual annotation.

Vignesh’s breakthrough came from applying Monte Carlo Tree Search (MCTS) to conversational traces. His algorithm performs automated rollouts, branching, and pruning across possible conversational paths, enabling it to pinpoint the exact step where an agent’s reasoning failed. This approach transforms what was once a labour-intensive manual process into an automated one, reducing manual annotation costs to near zero. In doing so, the algorithm unlocks a new paradigm for scaling reinforcement learning pipelines, one where data annotation is no longer the bottleneck and models can be trained more efficiently with richer and more precise feedback loops.
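The algorithm itself is proprietary, so the following is not a reproduction of it. It is a deliberately simplified sketch of the underlying idea, using plain Monte Carlo rollouts rather than a full tree search with branching and pruning: estimate the chance of eventual success after each step of a trace, then flag the step where that estimate drops the most. The names `locate_failure_step`, `estimate_value`, and `toy_rollout`, along with the toy success probabilities, are all hypothetical.

```python
import random
from typing import Callable, List, Sequence, Tuple

# A rollout continues the conversation from a prefix and returns 1.0 on
# task success or 0.0 on failure. In practice this would call a model.
Rollout = Callable[[Sequence[str], random.Random], float]


def estimate_value(
    prefix: Sequence[str], rollout: Rollout, n_rollouts: int, rng: random.Random
) -> float:
    """Average outcome of repeated rollouts started from this prefix."""
    return sum(rollout(prefix, rng) for _ in range(n_rollouts)) / n_rollouts


def locate_failure_step(
    trace: Sequence[str], rollout: Rollout, n_rollouts: int = 200, seed: int = 0
) -> Tuple[int, List[float]]:
    """Return the index of the step with the largest drop in estimated value."""
    if not trace:
        raise ValueError("trace must contain at least one step")
    rng = random.Random(seed)
    # values[k] estimates success probability after the first k steps.
    values = [
        estimate_value(trace[:k], rollout, n_rollouts, rng)
        for k in range(len(trace) + 1)
    ]
    drops = [values[k] - values[k + 1] for k in range(len(trace))]
    worst = max(range(len(drops)), key=drops.__getitem__)
    return worst, values


def toy_rollout(prefix: Sequence[str], rng: random.Random) -> float:
    """Toy stand-in for a model: one bad step makes success unlikely."""
    p_success = 0.1 if "bad" in prefix else 0.9
    return 1.0 if rng.random() < p_success else 0.0


step, values = locate_failure_step(["ok", "ok", "bad", "ok"], toy_rollout)
print(step, [round(v, 2) for v in values])
```

The per-step value estimates double as step-level labels: a sharp drop marks the turn to penalise, which is the kind of signal that would otherwise require a human annotator to read the whole conversation. Running the rollouts still costs compute, so the number of rollouts per step is a trade-off between label quality and budget.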

Looking Ahead

The future of reinforcement learning is open to those who can rethink the fundamentals with creativity, efficiency, and openness. Low-cost training pipelines, replicable open-source projects, and novel methods such as MCTS-based annotation can make cutting-edge RL not only more powerful but also more accessible.