Abstract: Retailers face an ongoing battle against automated bots that manipulate prices, hoard inventory, and degrade the customer experience. While machine learning models have helped detect some of this traffic, they often fall short against more sophisticated bots that mimic human behavior. This article proposes a new direction for defense: autonomous, collaborative agents powered by large language models (LLMs). These agents can analyze traffic patterns, debate suspicious behavior, and escalate threats with transparency and context. In this article, I explore how this multi-agent approach can complement existing security tools, reduce false positives, and adapt to the rapidly evolving tactics of modern bots—all while preserving customer experience and operational trust.

1. Introduction

Retail website owners face constant pressure from bot traffic, including scalper bots that grab limited-edition products and scraper bots that collect real-time pricing data. Bots account for roughly 40% of e-commerce traffic, according to Cloudflare’s 2023 report. What’s more concerning is that many of these bots are becoming harder to detect. They click like humans, wait like humans, and even generate mouse movement patterns that fool traditional systems.

Over the years, retailers have leaned on rule-based filters, IP blocklists, and machine learning models to defend their platforms. While these tools have had some success, they often operate in isolation and struggle to keep up with new bot behaviors. False positives—mistakenly blocking real customers—remain a costly side effect.

But what if detection didn’t have to rely on a single system or static model? What if it could be handled by a team of intelligent AI agents—each trained to look at traffic in different ways, to reason about intent, and to collaborate on tough decisions?

This article introduces the idea of a collaborative, multi-agent system powered by large language models (LLMs). Instead of a monolithic “bot detector,” imagine a group of specialized agents (a pattern analyst, a behavioral profiler, a consensus builder) all working together to decide whether a session is human or not. Each agent has its own tools, perspective, and reasoning, and the agents communicate with each other to reach a final decision.

This approach could transform how retailers handle bot detection, making it more context-aware, more explainable, and ultimately more adaptable to the evolving threat landscape.

2. Vision and Design

2.1 Collaborative AI Agents

Traditional bot detection systems operate like a security camera—they observe and flag suspicious behavior based on pre-set rules or learned patterns. But bots today are getting smarter. They can rotate IPs, mimic session timing, and even learn how to bypass CAPTCHAs. Static defenses are no longer enough.

I envision a new kind of system, one that thinks, adapts, and collaborates. A multi-agent system built on LLMs offers this possibility. Rather than relying on a single decision-making engine, this system is built around multiple AI agents, each with a distinct perspective and purpose. Like a panel of experts, these agents examine web traffic, debate, and come to a shared decision.

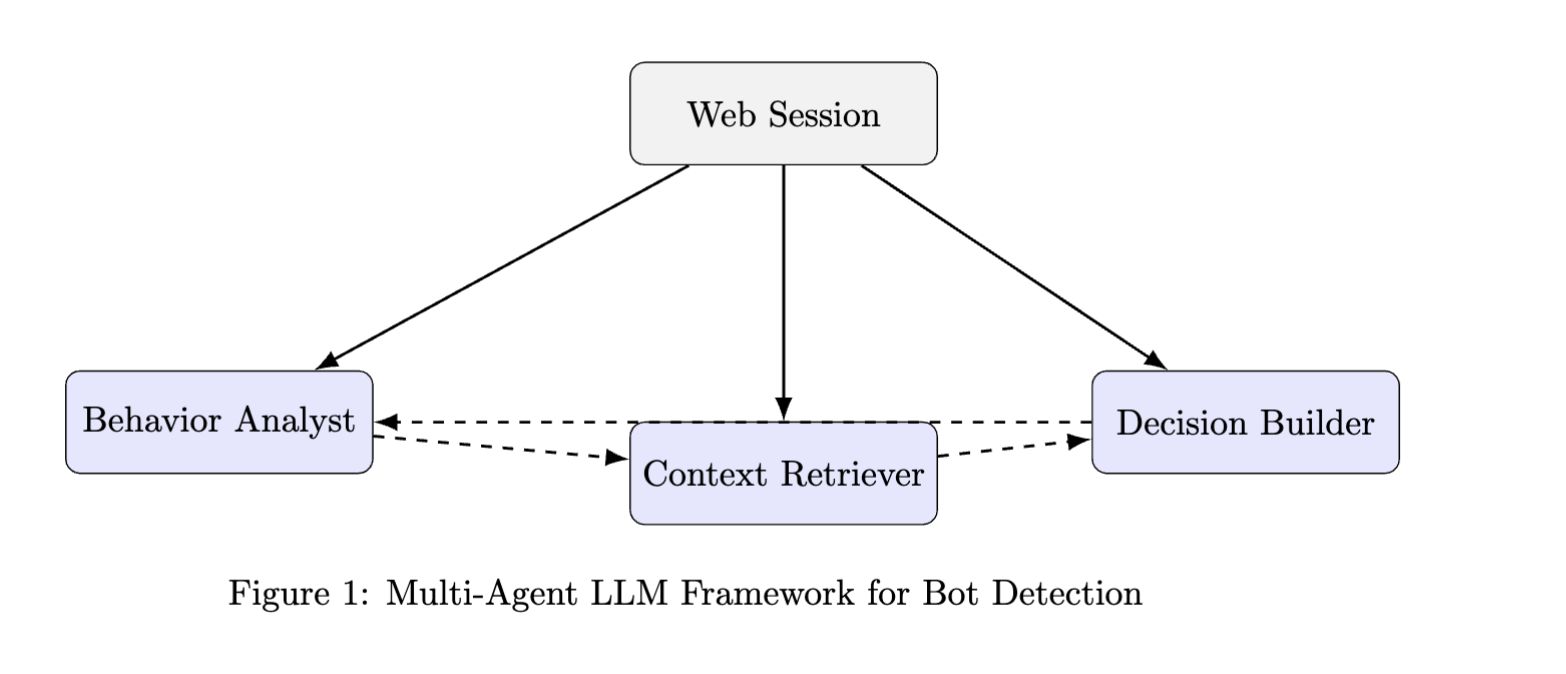

2.2 Core Design

- Specialization

Each agent is designed to focus on a specific role:

- The Behavior Analyst examines patterns such as click cadence, navigation depth, or the time between requests.

- The Context Retriever fetches related threat history, reputation scores, or prior interactions.

- The Decision Builder synthesizes agent input and suggests a verdict—bot or human.
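A minimal sketch of how these three roles could be separated in code follows. To stay self-contained, it replaces each LLM call with a simple rule; in a real deployment each function would wrap a prompt to an LLM. The `Session` fields, the 0.5-second gap threshold, the `TRUST_SCORES` store, and the score averaging in `decision_builder` are all hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Session:
    # Hypothetical session summary; field names are illustrative.
    session_id: str
    request_times: list[float]  # request timestamps, in seconds
    pages_visited: int
    account_id: str | None = None


@dataclass
class Finding:
    agent: str
    bot_score: float  # 0.0 = looks human, 1.0 = looks like a bot
    rationale: str


def behavior_analyst(s: Session) -> Finding:
    # Rule-based stand-in for an LLM call that reasons about request timing.
    gaps = [b - a for a, b in zip(s.request_times, s.request_times[1:])]
    mean_gap = sum(gaps) / len(gaps) if gaps else float("inf")
    score = 0.9 if mean_gap < 0.5 else 0.2  # hypothetical 0.5 s threshold
    return Finding("BehaviorAnalyst", score,
                   f"mean gap between requests is {mean_gap:.2f}s over {s.pages_visited} pages")


# Hypothetical reputation store that the Context Retriever would query.
TRUST_SCORES = {"acct-42": 0.95}


def context_retriever(s: Session) -> Finding:
    # Unknown or anonymous accounts get no trust credit.
    trust = TRUST_SCORES.get(s.account_id or "", 0.0)
    return Finding("ContextRetriever", 1.0 - trust, f"historical trust score is {trust:.2f}")


def decision_builder(findings: list[Finding]) -> tuple[str, list[str]]:
    # Averages scores; an LLM-based Decision Builder would weigh the rationales instead.
    avg = sum(f.bot_score for f in findings) / len(findings)
    verdict = "bot" if avg >= 0.5 else "human"
    return verdict, [f"{f.agent}: {f.rationale}" for f in findings]
```

For example, a session with four requests 0.1 s apart and no account history is labeled `"bot"`, and the returned list carries one line of rationale per agent.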

- Collaboration

Instead of making decisions in isolation, the agents actively communicate with one another. For instance, if one agent raises a flag about suspicious behavior, others can step in to offer additional context, perhaps explaining that similar behavior occurred in past legitimate sessions. This kind of reasoning can help reduce biases and enable the system to make more balanced, informed decisions. It mirrors how human teams discuss and debate cases before coming to a consensus.
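The flag-and-rebut exchange described above can be sketched with a shared message board. This is an illustration under assumptions: `Blackboard` is an in-memory stand-in for a shared channel such as a Redis list, and the message kinds, the `LEGIT_PATTERNS` history, and the rule that any rebuttal sends a session to human review are hypothetical choices, not a prescribed protocol.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Message:
    sender: str
    kind: str  # "flag", "rebuttal", or "support" (hypothetical message types)
    text: str


class Blackboard:
    """In-memory stand-in for a shared channel such as a Redis list."""

    def __init__(self) -> None:
        self.messages: list[Message] = []

    def post(self, msg: Message) -> None:
        self.messages.append(msg)

    def by_kind(self, kind: str) -> list[Message]:
        return [m for m in self.messages if m.kind == kind]


# Hypothetical history: behavior patterns previously seen in legitimate sessions.
LEGIT_PATTERNS = {"fast_checkout"}


def context_retriever_respond(board: Blackboard, pattern: str) -> None:
    # Answer every open flag with either a rebuttal or a supporting note.
    for flag in board.by_kind("flag"):
        if pattern in LEGIT_PATTERNS:
            board.post(Message("ContextRetriever", "rebuttal",
                               f"re '{flag.text}': '{pattern}' occurred in past legitimate sessions"))
        else:
            board.post(Message("ContextRetriever", "support",
                               f"re '{flag.text}': no legitimate precedent for '{pattern}'"))


def consensus(board: Blackboard) -> str:
    # No flags -> human; a rebutted flag -> human review; an unchallenged flag -> bot.
    if not board.by_kind("flag"):
        return "human"
    return "needs_review" if board.by_kind("rebuttal") else "bot"
```

A Behavior Analyst flag such as "checkout completed in 4s", met with a rebuttal from the Context Retriever, ends as `"needs_review"` rather than an automatic block.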

- Interpretability by Design

Each agent doesn’t just output a verdict; it explains why. If an agent flags a session as likely bot activity, it cites clear reasons, such as high-speed click patterns or repeated access to sensitive endpoints. Conversely, if it argues that the traffic looks legitimate, it explains that reasoning too. These explanations are written in everyday language, making them easy for analysts to review.

- Real-Time Awareness

Bot detection decisions are typically expected within milliseconds, whereas multi-agent LLM reasoning adds noticeable latency. Optimizing prompts and caching results can reduce this overhead, though not eliminate it.
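One way to apply the caching idea is to memoize verdicts by a fingerprint of the session's features, so that repeated, identical patterns (common during a bot burst) skip the slow deliberation step. The sketch below assumes features arrive as a JSON-serializable dict; `VerdictCache`, the 60-second TTL, and the `deliberate` callback are hypothetical names and values.

```python
from __future__ import annotations

import hashlib
import json
import time
from typing import Callable


class VerdictCache:
    """Caches verdicts keyed by a hash of session features for ttl_s seconds."""

    def __init__(self, ttl_s: float = 60.0, clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl_s = ttl_s
        self.clock = clock
        self._store: dict[str, tuple[float, str]] = {}

    @staticmethod
    def fingerprint(features: dict) -> str:
        # sort_keys makes the key independent of dict insertion order.
        blob = json.dumps(features, sort_keys=True).encode()
        return hashlib.sha256(blob).hexdigest()

    def get_or_compute(self, features: dict, deliberate: Callable[[dict], str]) -> str:
        key = self.fingerprint(features)
        now = self.clock()
        hit = self._store.get(key)
        if hit is not None and now - hit[0] < self.ttl_s:
            return hit[1]  # cache hit: skip the expensive multi-agent call
        verdict = deliberate(features)  # cache miss: run the slow deliberation
        self._store[key] = (now, verdict)
        return verdict
```

Whether a cached verdict is safe to reuse depends on how coarse the fingerprint is: a key built from too few features could let a bot inherit a human's verdict.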

2.3 Technologies for Multi-Agent Bot Detection

- LLM Platforms: OpenAI GPT-4, Anthropic Claude, Meta Llama, Mistral

- Agent Orchestration: LangChain Agents, CrewAI

- Communication Layer: Shared memory (Redis), message passing, vector stores (FAISS, Weaviate)

- Prompt Engineering: Chain-of-Thought, ReAct, Debate-style prompts

- Integration: Real-time inference APIs via FastAPI or LangServe, with Streamlit for analyst-facing dashboards
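To illustrate how Chain-of-Thought and debate-style prompting might be combined, here is a hypothetical prompt builder for the Decision Builder. The template wording and the JSON answer format are assumptions, and the model call itself is omitted because each platform's SDK differs.

```python
from __future__ import annotations

# Hypothetical debate-style template; doubled braces survive str.format as literal braces.
DEBATE_TEMPLATE = """You are the Decision Builder in a bot-detection panel.
Session summary:
{summary}

Positions from other agents:
{positions}

Reason step by step about each argument, note where the agents disagree,
then answer with JSON: {{"verdict": "bot" | "human" | "needs_review", "rationale": "..."}}"""


def build_debate_prompt(summary: str, positions: dict[str, str]) -> str:
    # One line per agent so the model (and a reviewer) can see who argued what.
    lines = "\n".join(f"- {agent}: {claim}" for agent, claim in positions.items())
    return DEBATE_TEMPLATE.format(summary=summary, positions=lines)
```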

3. Use Cases and Scenarios

3.1 Hype Item Drop Defense

A spike in sessions during a product launch triggers an investigation. The Behavior Analyst sees rapid transitions; the Context Retriever references past bot raids; the Decision Builder flags these as coordinated scalper bots.
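A cheap statistical pre-check could decide which launch-time sessions are worth sending to the agents at all. The sketch below is a hypothetical helper, not part of the proposed design: it treats a burst as coordinated when enough sessions show near-identical gaps between requests, measured by the coefficient of variation of those gaps. `min_sessions`, `max_cv`, and `min_share` are illustrative thresholds.

```python
from __future__ import annotations

import statistics


def gap_cv(request_times: list[float]) -> float:
    """Coefficient of variation of inter-request gaps; near 0 means machine-like regularity."""
    gaps = [b - a for a, b in zip(request_times, request_times[1:])]
    if len(gaps) < 2:
        return float("inf")  # too few requests to judge regularity
    mean = statistics.mean(gaps)
    return statistics.pstdev(gaps) / mean if mean > 0 else 0.0


def looks_coordinated(sessions: dict[str, list[float]], min_sessions: int = 50,
                      max_cv: float = 0.1, min_share: float = 0.8) -> bool:
    # Hypothetical rule: a large burst in which most sessions share near-identical timing.
    if len(sessions) < max(min_sessions, 1):
        return False
    regular = sum(1 for times in sessions.values() if gap_cv(times) < max_cv)
    return regular / len(sessions) >= min_share
```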

3.2 Dynamic Price Scraping

An unknown IP block requests more than 1,000 product pages per minute. The Behavior Analyst detects abnormal navigation speed; the Context Retriever matches the block’s ASN to past scraping activity; the system throttles the scraping attempts.
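The throttling step could rest on a sliding-window counter per network block. This sketch assumes IPv4 traffic grouped into /24 networks and a limit of 1,000 requests per minute taken from the scenario; `BlockRateMonitor` is a hypothetical name, and the ASN lookup the Context Retriever would perform needs external routing data, so it is not shown.

```python
from __future__ import annotations

import ipaddress
from collections import defaultdict, deque


class BlockRateMonitor:
    """Sliding-window request counter per IPv4 /24 network."""

    def __init__(self, window_s: float = 60.0, limit: int = 1000) -> None:
        self.window_s = window_s
        self.limit = limit
        self._hits: dict[str, deque[float]] = defaultdict(deque)

    @staticmethod
    def block_of(ip: str) -> str:
        # strict=False masks the host bits, e.g. 203.0.113.7 -> 203.0.113.0/24.
        return str(ipaddress.ip_network(f"{ip}/24", strict=False))

    def record(self, ip: str, ts: float) -> bool:
        """Record one request at time ts; return True if its block should be throttled."""
        q = self._hits[self.block_of(ip)]
        q.append(ts)
        while q and ts - q[0] >= self.window_s:
            q.popleft()  # forget requests that fell out of the window
        return len(q) > self.limit
```

Here `record()` first returns `True` on the 1,001st request from one /24 within 60 seconds, which the orchestration layer could translate into a throttle action.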

3.3 False Positive Recovery

A high-spending customer is mistakenly flagged. The Context Retriever finds a strong historical trust score, and the Decision Builder overrules the block with a stated justification, preserving the customer relationship.
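The override in this scenario amounts to a rule the Decision Builder applies after hearing from the other agents. A minimal sketch, assuming both scores are normalized to [0, 1]; the `decide` function and its 0.8 and 0.9 thresholds are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Decision:
    action: str  # "block" or "allow" (hypothetical action set)
    justification: str


def decide(bot_score: float, trust_score: float,
           block_at: float = 0.8, trusted_at: float = 0.9) -> Decision:
    """Combine a session's bot score with the customer's historical trust score."""
    if bot_score >= block_at and trust_score >= trusted_at:
        # Override: a strong history outweighs one suspicious session.
        return Decision("allow", f"bot score {bot_score:.2f} overridden by "
                                 f"trust score {trust_score:.2f}")
    if bot_score >= block_at:
        return Decision("block", f"bot score {bot_score:.2f} with no offsetting history")
    return Decision("allow", f"bot score {bot_score:.2f} is below the {block_at:.2f} threshold")
```

Returning the justification alongside the action keeps every override auditable; a stricter variant might substitute a step-up verification for an outright allow.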

4. Benefits and Challenges

Benefits:

- Context-Aware: Combines multiple dimensions of reasoning

- Explainable: Natural language rationale for every decision

- Modular: New agent roles can be added or tuned independently

- Reduced False Positives: Minimizes customer impact while improving detection

Challenges:

- Latency & Cost: LLM inference is not yet fast or cheap enough for real-time decisions at scale

- Adversarial Resistance: Bots may soon evolve to confuse the agents or to imitate the behaviors the agents treat as human

- Coordination Complexity: Multi-agent systems require alignment mechanisms and guardrails

A Closer Look at the Benefits

- Smarter Detection Through Context: This system doesn’t just look at one piece of the puzzle. By combining insights from different agents—like one analyzing click speed and another checking a user’s past behavior—it builds a fuller picture of whether traffic is human or bot. For example, rapid clicks might seem suspicious, but if the user has a history of legitimate purchases, the system can avoid jumping to conclusions.

- Clear, Understandable Decisions: Every agent explains its reasoning in plain language. If it flags a session as a bot, it might say, “This user accessed 50 product pages in 10 seconds, which is unusual for humans.” If it thinks the traffic is legitimate, it could note, “This matches the behavior of a known high-spending customer.” These explanations make it easy for security teams to review and trust the system’s calls.

- Flexible and Adaptable Design: The system’s modular setup means you can add new agents—like one focused on detecting fake accounts—without starting from scratch. This lets retailers tweak the system for specific problems, like scalping during a sneaker drop or scraping during a flash sale.

- Fewer Mistakes with Customers: By having agents cross-check each other, the system reduces the chance of blocking real shoppers. For instance, if one agent flags a fast-clicking session, another might point out the user’s strong trust score, saving a loyal customer from being locked out.
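Plain-language rationales are easier to trust when they arrive in a predictable shape. One option, sketched here under assumptions, is to require each agent to reply in JSON with a verdict and a list of evidence strings, reject replies that omit evidence, and render the rest as a one-line note for the security team. The field names and the verdict set are hypothetical.

```python
from __future__ import annotations

import json

REQUIRED_FIELDS = {"agent": str, "verdict": str, "evidence": list}
VERDICTS = {"bot", "human", "needs_review"}


def parse_agent_reply(raw: str) -> dict:
    """Validate an agent's JSON reply so that every verdict arrives with its evidence."""
    reply = json.loads(raw)  # json.JSONDecodeError is a subclass of ValueError
    if not isinstance(reply, dict):
        raise ValueError("reply must be a JSON object")
    for name, typ in REQUIRED_FIELDS.items():
        if not isinstance(reply.get(name), typ):
            raise ValueError(f"missing or malformed field: {name}")
    if reply["verdict"] not in VERDICTS:
        raise ValueError(f"unknown verdict: {reply['verdict']}")
    if not reply["evidence"] or not all(isinstance(e, str) for e in reply["evidence"]):
        raise ValueError("a verdict needs at least one evidence string")
    return reply


def to_analyst_note(reply: dict) -> str:
    # One plain-language line per agent for the security team's review queue.
    return f"{reply['agent']} says {reply['verdict']}: " + "; ".join(reply["evidence"])
```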

5. Future Work

Multi-agent LLM systems offer a promising shift in how bot detection is approached—not just as a classification task, but as a form of collaborative reasoning. Now is the time for security and AI teams to experiment with agent-based frameworks, test modular deployments, and begin building internal playbooks for explainable, adaptive bot defenses.

Future work includes:

- Prototyping open-source multi-agent LLM systems for bot detection

- Benchmarking agent collaboration vs. traditional classifiers

- Exploring hybrid systems where agents complement rule-based or ML backends

- Studying adversarial scenarios where bots attempt to deceive or outvote agents
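For the benchmarking item, the two metrics this article cares about most are the false positive rate (real customers treated as bots) and recall (bots actually caught). A minimal helper, assuming each system's predictions and the ground-truth labels are aligned lists of `"bot"`/`"human"` strings; the function name `rates` is hypothetical.

```python
from __future__ import annotations


def rates(labels: list[str], preds: list[str]) -> dict[str, float]:
    """False positive rate and recall, treating "bot" as the positive class."""
    if len(labels) != len(preds):
        raise ValueError("labels and predictions must align")
    pairs = list(zip(labels, preds))
    tp = sum(y == "bot" and yhat == "bot" for y, yhat in pairs)
    fn = sum(y == "bot" and yhat != "bot" for y, yhat in pairs)
    fp = sum(y == "human" and yhat == "bot" for y, yhat in pairs)
    tn = sum(y == "human" and yhat != "bot" for y, yhat in pairs)
    return {
        "false_positive_rate": fp / (fp + tn) if fp + tn else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }
```

Running an agent panel and an existing classifier over the same labeled sessions and comparing these two numbers would give a first like-for-like comparison.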

6. Conclusion

Bots have evolved—and our defenses must evolve with them. While traditional detection systems offer speed and structure, they struggle with context, explainability, and adaptability. Multi-agent AI systems, built on large language models, introduce a new paradigm: one where detection is not just automatic, but intelligent.

By assigning different roles to collaborative agents—such as pattern analyzers, context retrievers, and decision makers—we can begin to reason about bot behavior with the depth and flexibility that static models lack. These systems are not only more adaptable; they are also more transparent, more auditable, and potentially more human-aligned in their decision-making.

Although challenges remain – particularly around cost, latency, and coordination – this article invites a shift in perspective, from rule-based systems and traditional machine learning to collaborative agent ecosystems.

References

- Cloudflare. (2023). “Residential Proxy Bot Detection Using Machine Learning.” Cloudflare Blog. Retrieved from https://blog.cloudflare.com/residential-proxy-bot-detection-using-machine-learning

- Sardine. (2023). “Real-Time Bot Detection: Protecting Digital Platforms.” Sardine Blog. Retrieved from https://sardine.ai/

- Celebrus. (2024). “Bot Detection in Real-Time: Enhancing Digital Experiences.” Celebrus Insights. Retrieved from https://celebrus.com/

- Smith, J., & Patel, R. (2024). “Audit-LLM: AI Agent Teams for Insider Threat Detection.” arXiv preprint arXiv:2408.08902. Retrieved from https://arxiv.org/abs/2408.08902

- Lee, S., et al. (2024). “LLM-Consensus: Multi-Agent Debates for Visual Misinformation Detection.” arXiv preprint arXiv:2410.20140. Retrieved from https://arxiv.org/abs/2410.20140

- Zhang, H., et al. (2024). “CAMON: Collaborative LLMs for Navigation.” arXiv preprint arXiv:2407.00632. Retrieved from https://arxiv.org/abs/2407.00632

- Chen, L., & Wang, Y. (2024). “Multi-Agent Systems for Cybersecurity: A Survey.” IEEE Transactions on Artificial Intelligence, 5(3), 123–135. doi:10.1109/TAI.2024.1234567

About the author:

Avinash Chandra Vootkuri is a Data Scientist with more than eight years of experience specializing in cybersecurity, fraud detection, and risk modeling. His work focuses on developing and deploying machine learning solutions for real-time anomaly detection, bot identification, and behavioral risk scoring in high-volume environments. He has contributed to several industry-scale projects that bridge advanced data science with practical security applications. With a strong foundation in both theoretical modeling and scalable production systems, his research interests include explainable AI, adversarial behavioral analysis, and the application of autonomous agents in threat detection. He holds a master’s degree in computer science and actively contributes to data science and AI communities through research and mentorship.