As artificial intelligence becomes increasingly embedded in our personal lives — from chatbots to dating apps to workplace communication tools — developers are confronting a new ethical question: Should AI be able to recognize toxic human behavior?

More specifically, should we train AI models to detect traits associated with narcissistic abuse?

At first glance, the idea might sound either intrusive or overly ambitious. After all, even human beings struggle to identify manipulative behavior in real time, especially when it’s cloaked in charm or plausible deniability. But with emotional abuse and narcissistic dynamics becoming more widely discussed in therapy circles and social media, the question isn’t entirely abstract anymore.

If people are increasingly using AI to navigate relationships, mental health, and professional boundaries, shouldn’t the technology be equipped to spot patterns that can cause real psychological harm?

The Case for Digital Pattern Recognition

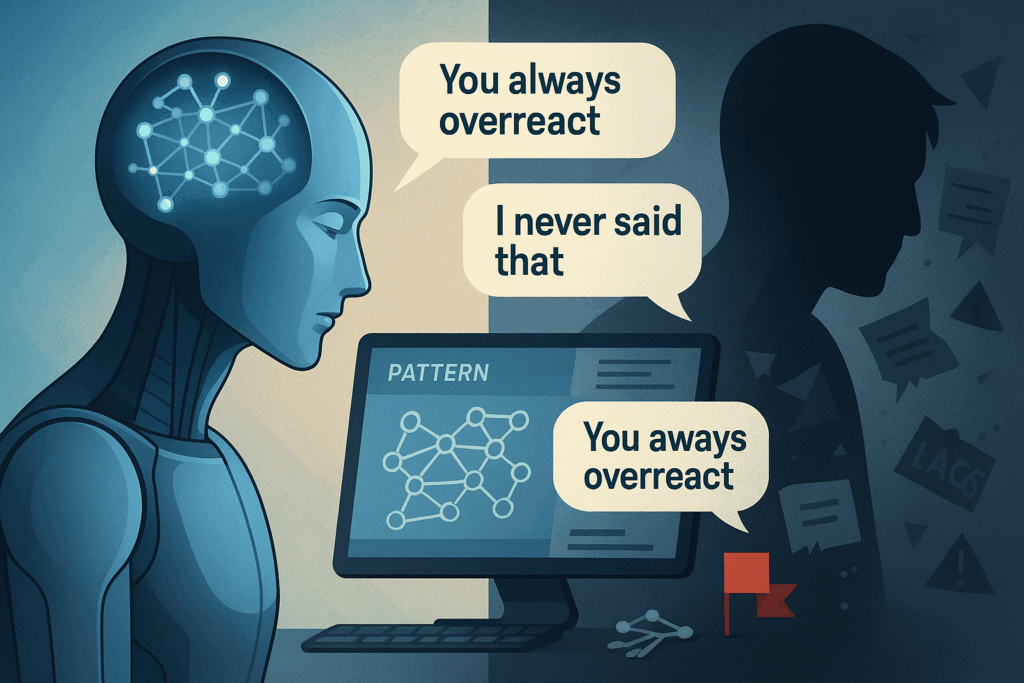

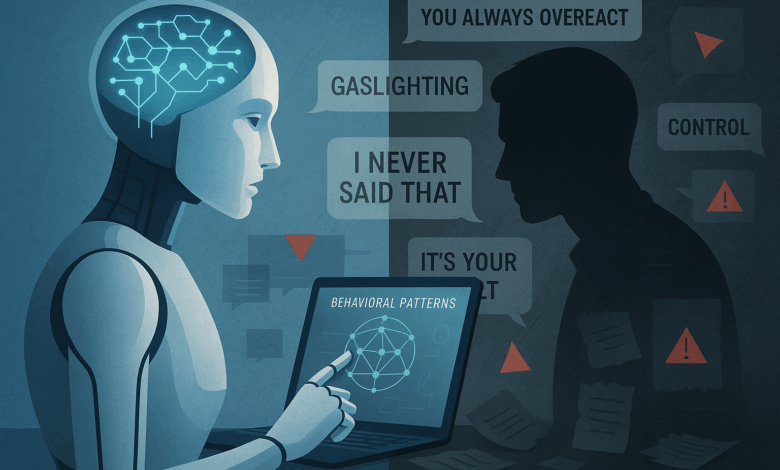

Modern AI models excel at pattern recognition, from flagging financial fraud to spotting cancer cells in medical imaging. So why not extend that logic to emotional abuse?

Narcissistic behavior, especially in its more covert forms, tends to follow recognizable patterns. These include:

- Love-bombing followed by devaluation
- Gaslighting and reality distortion
- Blame-shifting and refusal to take accountability
- Excessive need for admiration and hypersensitivity to criticism

These aren’t just vague personality quirks — they are behavioral loops that leave tangible psychological impacts. Many therapists now use structured tools to help clients spot these dynamics early. One such tool is a narcissist behavior checklist designed to help individuals recognize common red flags in relationships.

If a human therapist can work with this kind of structured input, could a machine learning model do the same — not to diagnose, but to assist users in identifying emotional risk?
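To make the idea of "structured input" concrete, here is a minimal sketch of how checklist categories might be turned into something a program can count. Everything in it is an assumption for illustration: the category names loosely follow the patterns listed earlier, and the cue phrases in `CHECKLIST_CUES` are hypothetical, not clinically validated and not taken from any real checklist. A production system would likely rely on a trained classifier rather than keyword matching.

```python
import re
from collections import Counter

# Hypothetical cue phrases per checklist category. Illustrative only:
# not clinically validated and not drawn from any published checklist.
CHECKLIST_CUES = {
    "gaslighting": [
        r"\bthat never happened\b",
        r"\byou'?re imagining\b",
        r"\byou'?re too sensitive\b",
    ],
    "blame_shifting": [
        r"\byou made me\b",
        r"\bit'?s your fault\b",
    ],
    "love_bombing": [
        r"\bno one will ever love you like\b",
        r"\bwe'?re soulmates\b",
    ],
}


def flag_patterns(messages):
    """Count how many messages match at least one cue in each category."""
    counts = Counter()
    for text in messages:
        lowered = text.lower()
        for category, patterns in CHECKLIST_CUES.items():
            if any(re.search(p, lowered) for p in patterns):
                counts[category] += 1
    return dict(counts)


if __name__ == "__main__":
    sample = [
        "That never happened, you're imagining things.",
        "It's your fault I got angry.",
        "See you at 6?",
    ]
    # Surfaces counts for the user to review; it does not label anyone.
    print(flag_patterns(sample))
```

The function only reports how often each category appears, which keeps it in the "assist, not diagnose" role described above: the output is a prompt for reflection, not a verdict about a person.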

Potential Applications (and Pitfalls)

There are several promising — and controversial — use cases for AI-powered toxic behavior detection:

- Dating Apps: Flagging patterns in messages that indicate coercion, manipulation, or classic “narcissistic cycling.”
- Workplace Platforms: Alerting HR or managers to communication patterns that resemble gaslighting or bullying.
- Therapy Bots & Journaling Tools: Offering gentle nudges when a user describes experiences that align with known abuse frameworks.

But deploying such tools would come with serious caveats. First, there’s the risk of false positives — no one wants to be incorrectly labeled as toxic by an algorithm. Second, even accurate identification raises privacy and autonomy concerns. Would people feel comfortable knowing their conversations are being analyzed for psychological flags?
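The false-positive risk can be made concrete with a little arithmetic. The sketch below computes what share of flagged conversations would actually be problematic, given how rare the behavior is and how accurate the detector is. The function name `flag_precision` and all three input numbers are hypothetical, chosen only to illustrate the effect; they are not measurements from any real system.

```python
def flag_precision(prevalence, sensitivity, specificity):
    """Share of flagged conversations that are truly problematic
    (the positive predictive value of the detector)."""
    true_pos = sensitivity * prevalence
    false_pos = (1.0 - specificity) * (1.0 - prevalence)
    return true_pos / (true_pos + false_pos)


# Hypothetical inputs: 2% of conversations contain the pattern,
# the detector catches 90% of them, and wrongly flags 5% of the rest.
precision = flag_precision(prevalence=0.02, sensitivity=0.90, specificity=0.95)
print(f"{precision:.1%} of flags would be correct")
```

Under these assumed numbers, only about 27% of flags would point at a real problem, so roughly three out of four flagged people would be mislabeled. When the behavior being detected is rare, even a detector that sounds accurate produces mostly false alarms.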

Then there’s the question of intent. Should AI act as a silent observer, simply surfacing insights to users, or should it actively intervene?

Ethically, any system that attempts to model complex human behavior — especially one as nuanced as narcissism — must prioritize consent, transparency, and user agency. This isn’t about creating an AI therapist or judge. It’s about surfacing patterns that people can choose to explore further if they want to.

Emerging moderation tools already use NLP to detect hate speech and harassment, so extending that approach to interpersonal toxicity is a plausible next step. Imagine Slack bots that flag manipulative manager language, or mental health apps that warn users about emotional coercion patterns in journal entries.

Why Narcissistic Pattern Recognition Should Matter in AI Design

AI is not just a utility anymore; it’s a mirror and sometimes even a mediator of human relationships. Whether you’re chatting with a support bot, filtering messages on Hinge, or documenting thoughts in an AI-assisted journal, your digital world is increasingly reactive to your emotional state.

If we’re serious about building emotionally intelligent systems, it’s worth considering how those systems handle manipulation — not just empathy.

Just as AI is learning to detect hate speech, fraud, and misinformation, it may one day be able to flag emotional dynamics that undermine a user’s well-being. The key is building with care — and grounding everything in frameworks already familiar to mental health professionals.

Final Thoughts

Teaching AI to recognize narcissistic behavior isn’t about diagnosing people. It’s about giving users tools to protect their mental space and make informed choices in complex human environments. It’s about pattern recognition — something machines do well, and something people recovering from emotional harm are learning to do better.

And maybe, just maybe, the more we train our tech to recognize manipulation, the better we’ll become at spotting it in ourselves and each other.