Modern AI excels at analyzing short video clips, but after just 1–3 hours of footage, it “forgets” everything it has seen. That blind spot forces security teams to sift manually through days of surveillance, media producers to hunt for specific scenes in vast archives, and marketers to piece together insights from scattered social videos. Collectively, this manual review workload costs businesses billions of dollars in lost productivity and leaves critical context buried under layers of clips.

Today, former Meta Reality Labs researchers Dr. Shawn Shen and Ben Zhou announced Memories.ai, a new research lab pioneering what it calls the world’s first Large Visual Memory Model (LVMM). The company is emerging with an $8 million seed round led by Susa Ventures, with participation from Samsung Next, Fusion Fund, Crane Ventures, Seedcamp, and Creator Ventures. The funding will accelerate its mission to give AI systems a true visual memory, enabling them to remember, search, and learn from video over arbitrary timeframes.

The World’s First Large Visual Memory Model (LVMM)

At the heart of Memories.ai is the Large Visual Memory Model (LVMM), the industry’s first AI architecture built to capture, store, and recall visual experiences continuously. Instead of processing each clip in isolation, LVMM:

- Ingests raw video into a structured memory layer

- Indexes key visual elements—people, objects, scenes, and events—over time

- Creates a searchable, contextual database that AI agents can query instantly
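
The three steps above can be illustrated with a toy sketch. This is not Memories.ai's implementation or API, which the announcement does not describe. The `Observation` and `ToyVisualMemory` names, the label-based schema, and the in-memory dictionary are all assumptions made purely for illustration. A real system would derive observations from video with vision models and use far richer indexing.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Observation:
    """One detected element in a video (hypothetical schema)."""
    video_id: str
    timestamp_s: float  # seconds from the start of the video
    label: str          # e.g. "person", "red truck"


class ToyVisualMemory:
    """In-memory stand-in for a structured, searchable memory layer."""

    def __init__(self):
        # Maps a lowercased label to every observation carrying that label.
        self._by_label = defaultdict(list)

    def ingest(self, observations):
        """Store observations, indexed by their lowercased label."""
        for obs in observations:
            self._by_label[obs.label.lower()].append(obs)

    def query(self, label, start_s=0.0, end_s=float("inf")):
        """Return observations with this label inside the time window."""
        hits = [
            obs for obs in self._by_label.get(label.lower(), [])
            if start_s <= obs.timestamp_s <= end_s
        ]
        # Sort by video, then by time, so results read chronologically.
        return sorted(hits, key=lambda o: (o.video_id, o.timestamp_s))


memory = ToyVisualMemory()
memory.ingest([
    Observation("cam1", 12.0, "person"),
    Observation("cam1", 3600.5, "red truck"),
    Observation("cam2", 45.0, "Person"),
])
hits = memory.query("person")
```

Here `hits` holds the two "person" observations, one from each camera. The point of the sketch is only that a query consults a persistent index rather than reprocessing each clip.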

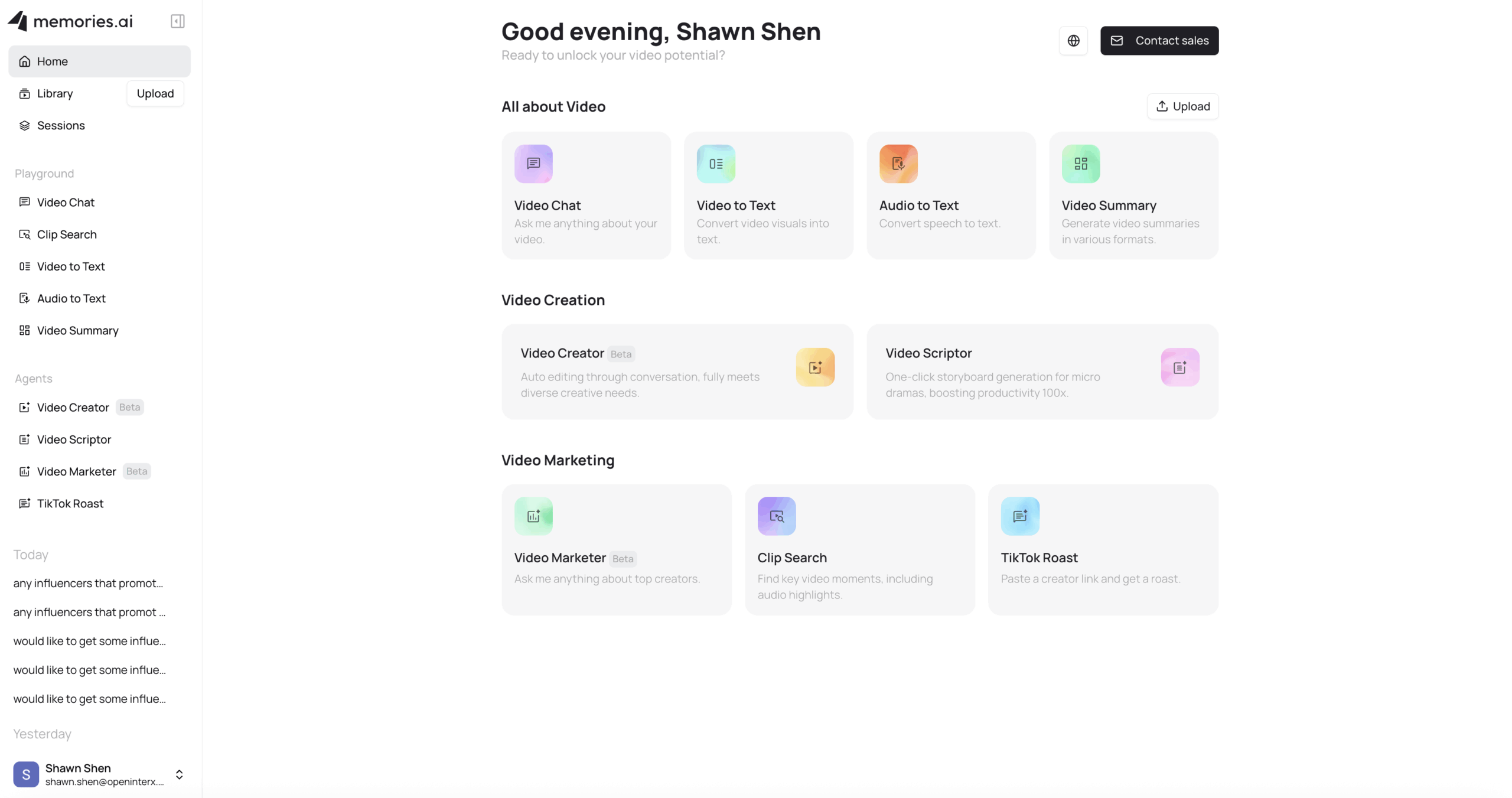

To date, Memories.ai has indexed over 1 million hours of video—providing clients with 100× more video memory capacity than was previously possible. The platform is accessible via a developer API and a chatbot app, where users can upload footage or connect existing libraries to start querying their visual memories immediately.

Real-World Impact Across Industries

The company shared several early customers and use cases where LVMM technology can make a difference:

- Security & Safety: Jump from an alert to relevant footage from months ago in seconds, eliminating manual scrub sessions.

- Media & Entertainment: Locate specific scenes, props, or characters across decades of content, slashing editing and licensing timelines.

- Marketing Analytics: Analyze sentiment and trending topics across millions of social video clips to inform campaigns.

- Consumer Devices: Samsung is exploring integrating LVMM into mobile experiences, letting users search and recall personal video memories.

- Robotics: As humanoid and other robots become more integrated into society and workplaces, machines will need rich, long-term visual memories like those humans have.

Here’s what early Memories.ai customer Jaden Xie, co-founder of PixVerse, says about the product: “Large-scale video understanding has become essential in today’s fast-evolving social media landscape. Memories.ai’s technology has provided us with valuable insights – from surfacing emerging trends and identifying key topics, to analyzing long-tail conversations across TikTok and other video platforms. The precision and depth of their video analysis capabilities offer important support for maintaining our competitive edge.”

Founders, Funding, and the Path Ahead

Dr. Shen and Ben Zhou bring deep expertise from their work at Meta Reality Labs, where they researched immersive visual experiences. Their vision: endow AI with an interconnected web of visual memories that drives smarter, more context-aware decision-making.

“Human intelligence isn’t just about processing information, it’s about building a rich, interconnected web of visual memories,” explains Dr. Shen. “Our mission is to bring that level of contextual awareness to AI to help build a safer and smarter world.”

With backing from Susa Ventures, Samsung Next, and other strategic investors, Memories.ai will expand its engineering team, deepen R&D into privacy and compliance frameworks, and onboard more enterprise customers.

“The market opportunity for temporal video intelligence is massive, touching everything from robotics and enterprise software to consumer applications, self-driving cars, and eventually AGI,” said Chad Byers, General Partner at Susa Ventures. “As AI systems move from static analysis to dynamic decision-making, understanding video over time becomes foundational. Memories.ai is building the critical infrastructure to power that future.”

As AI transitions from static analysis to dynamic, memory-driven reasoning, powering everything from robotics to autonomous vehicles, Memories.ai is building the critical infrastructure for truly intelligent video understanding.

The company says its technology is currently available via an API and a chatbot web app, where users can upload videos or connect their own libraries. Customers can learn more at https://memories.ai/.