Did you know that, by some estimates, AI chatbots can handle up to 80% of routine tasks and customer inquiries, freeing human agents to deal with more complex and creative work? Automation is key for customer service teams that routinely face repetitive, labor-intensive tasks such as resetting passwords, answering common questions, and routing queries to the right department.

Large language models (LLMs) are particularly well suited to this work, thanks to their ability to understand context and generate human-like responses. In this article, we discuss five key use cases of LLMs in customer service and explore how they help streamline support operations and reduce response times. We’ll also help you assess whether LLMs are the right fit for your customer support strategy and how retrieval-augmented generation (RAG) can strengthen an LLM integration in customer support.

Key use cases of LLMs in customer support

Alt tag: Key use cases of LLMs in customer support

Before delving into specific use cases, let’s clarify what LLMs actually are. An LLM is an advanced AI system trained on extensive text datasets to understand context, recognize patterns in language, and generate human-like responses.

LLMs suit customer support because they are versatile and can take on many tasks traditionally handled by human agents. They also differ from rule-based chatbots, which follow predefined scripts: LLMs can adapt their tone, rephrase answers based on user intent, and respond to questions that a script did not anticipate.

Here are five key applications that illustrate how LLMs can be useful in customer service:

1. Chatbots and virtual assistants

LLM-powered chatbots are designed to manage routine queries and simple tasks quickly and accurately. They deliver consistent, human-like responses around the clock, so human agents can focus on more complex cases.

Use cases:

- Answering FAQs (e.g., shipping, pricing, return policies)

- Guiding users through account setup or troubleshooting

- Collecting basic information before routing to a human agent

- Providing order status updates or tracking information

- Assisting with password resets or account access issues

Examples:

- A banking chatbot answering questions about loan eligibility or interest rates

- A SaaS support bot guiding users through common onboarding issues

- An AI assistant helping learners get past the initial learning curve of cybersecurity courses

2. Sentiment analysis

LLMs can analyze customer messages and feedback to detect sentiment, emotion, and intent in real time. This helps businesses make their communication more empathetic and efficient. In particular, support teams can prioritize urgent cases or provide more tailored responses.

Use cases:

- Detecting frustration in customer messages and transferring the ticket to a human agent

- Adjusting tone and messaging to match the customer’s communication style

- Identifying sentiment trends for service improvements

Examples:

- A travel company detecting an angry customer and routing them to a senior support agent

- A retail chatbot softening its tone when a user expresses disappointment about a delayed order
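As a rough illustration of the escalation logic described above, here is a minimal Python sketch. It assumes a hypothetical `llm` callable that takes a prompt string and returns the model’s text reply; the prompt wording, the JSON fields `sentiment` and `frustration`, and the 0.7 escalation threshold are illustrative assumptions, not any specific vendor’s API.

```python
import json
from typing import Callable

# Hypothetical prompt: the wording and the JSON schema are illustrative assumptions.
SENTIMENT_PROMPT = (
    "Classify the customer's message. Reply with JSON only, for example "
    '{{"sentiment": "negative", "frustration": 0.8}}, '
    "where sentiment is positive, neutral, or negative and frustration is 0 to 1.\n"
    "Message: {message}"
)


def route_by_sentiment(message: str, llm: Callable[[str], str],
                       threshold: float = 0.7) -> dict:
    """Ask an LLM to score a message, then decide who should handle it.

    `llm` is any function that takes a prompt and returns the model's text reply.
    """
    raw = llm(SENTIMENT_PROMPT.format(message=message))
    try:
        result = json.loads(raw)
        sentiment = str(result.get("sentiment", "neutral")).lower()
        frustration = float(result.get("frustration", 0.0))
    except (ValueError, TypeError, AttributeError):
        # Unparseable model output: fail safe by handing the chat to a person.
        return {"route": "human_agent", "tone": "neutral", "reason": "unparseable"}

    if frustration >= threshold:
        # Clearly frustrated customer: transfer to a human agent.
        return {"route": "human_agent", "tone": "empathetic", "reason": "frustration"}
    # Mildly negative: the bot keeps the conversation but softens its tone.
    tone = "empathetic" if sentiment == "negative" else "neutral"
    return {"route": "bot", "tone": tone, "reason": "ok"}


if __name__ == "__main__":
    # Stub standing in for a real LLM call, so the example runs offline.
    def fake_llm(prompt: str) -> str:
        return '{"sentiment": "negative", "frustration": 0.9}'

    print(route_by_sentiment("My order is late AGAIN!", fake_llm))
```

Passing the model in as a plain function keeps the routing rules testable without a live LLM, and falling back to a human agent when the reply can’t be parsed avoids leaving an upset customer stuck with the bot.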

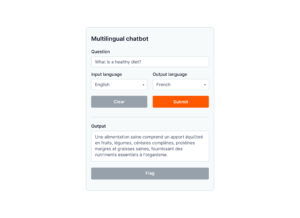

3. Multilingual support

Would you like to make your customer service more inclusive and accessible? LLMs allow your “agents” to communicate in many languages. As a result, your business can build a global presence with less spending on human translators or multilingual staff.

Alt tag: Multilingual chatbot example

Use cases:

- Providing real-time customer support in a customer’s preferred language

- Translating support documentation and FAQs

- Supporting regional language variations and slang

Examples:

- Offering 24/7 support in English, Spanish, and German through the same chatbot

- Translating help center articles for different markets

4. Automated ticketing

Ticket management can become much easier with the help of LLMs. The technology can automatically classify, prioritize, and route new customer requests based on their content, urgency, and customer history. This reduces the manual workload for support teams and helps critical issues get addressed faster.

Use cases:

- Categorizing support tickets by topic (e.g., billing, technical issues, product feedback)

- Prioritizing tickets based on sentiment or urgency signals

- Assigning tickets to the right department or agent

- Tagging tickets with relevant metadata for faster processing

Examples:

- Flagging and escalating tickets from premium users

- Routing high-priority bug reports directly to the engineering team for a software company

- Automatically tagging inquiries about lost shipments for quick resolution in logistics
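The classify, prioritize, and route flow above can be sketched in Python as follows. This is a minimal sketch under stated assumptions: `llm` is a hypothetical callable wrapping whatever model you use, and the category names, queue names, and premium-user rule are placeholders modeled on the examples in this section.

```python
import json
from dataclasses import dataclass
from typing import Callable

# Hypothetical category-to-queue mapping; replace with your own departments.
QUEUES = {
    "billing": "finance_team",
    "technical": "engineering_team",
    "shipping": "logistics_team",
    "feedback": "product_team",
}
PRIORITIES = ("low", "medium", "high")

TRIAGE_PROMPT = (
    "Classify this support ticket. Reply with JSON only, using the keys "
    '"category" (billing, technical, shipping, or feedback) and '
    '"priority" (low, medium, or high).\n'
    "Ticket: {text}"
)


@dataclass
class Triage:
    category: str
    priority: str
    queue: str


def triage_ticket(text: str, is_premium: bool, llm: Callable[[str], str]) -> Triage:
    """Classify a ticket with an LLM, validate the answer, and pick a queue."""
    try:
        data = json.loads(llm(TRIAGE_PROMPT.format(text=text)))
        category = str(data.get("category", "")).lower()
        priority = str(data.get("priority", "")).lower()
    except (ValueError, AttributeError):
        category, priority = "", ""

    # Never trust free-form model output: replace invalid values with safe defaults.
    if priority not in PRIORITIES:
        priority = "medium"
    if is_premium:
        # Example business rule: tickets from premium users are always escalated.
        priority = "high"
    if category not in QUEUES:
        # Unknown category: send to a person for manual triage.
        return Triage("unknown", priority, "manual_triage")
    return Triage(category, priority, QUEUES[category])


if __name__ == "__main__":
    # Stub standing in for a real LLM call.
    def fake_llm(prompt: str) -> str:
        return '{"category": "technical", "priority": "medium"}'

    print(triage_ticket("The app crashes on login", is_premium=True, llm=fake_llm))
```

Validating the model’s answer against a fixed list of categories and priorities means an unexpected reply lands in a manual queue instead of being misrouted.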

5. Proactive issue resolution

By analyzing patterns in customer interactions, LLMs can help you take a proactive approach to customer service and solve potential issues before they escalate. The technology is good at spotting early signs of problems, which can improve customer satisfaction and, over time, reduce the volume of incoming support tickets.

Use cases:

- Identifying potential churn risks based on sentiment and behavior patterns

- Notifying support teams about common product complaints

- Suggesting fixes or updates based on usage trends or FAQs

Examples:

- A B2B SaaS company identifying clients that are likely to churn and prompting account managers to step in

- Detecting errors based on user messages and alerting the tech team
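One simple way to turn per-message sentiment into a churn signal is sketched below in Python. It assumes each customer message has already been scored from -1 (very negative) to 1 (very positive), for example by an LLM sentiment classifier; the function name and the window, threshold, and drop values are hypothetical and illustrative, not recommended settings.

```python
from statistics import mean


def churn_risk_accounts(history: dict[str, list[float]], window: int = 5,
                        threshold: float = -0.3, drop: float = 0.5,
                        min_messages: int = 3) -> list[str]:
    """Return account IDs whose recent sentiment is low or falling sharply.

    `history` maps an account ID to per-message sentiment scores in [-1, 1],
    oldest first (assumed to come from an LLM sentiment classifier).
    """
    flagged = []
    for account, scores in history.items():
        recent = scores[-window:]
        if len(recent) < min_messages:
            continue  # Too few messages to judge this account.
        earlier = scores[:-window]
        low = mean(recent) < threshold
        # Compare recent messages with the account's earlier baseline, if any.
        declining = bool(earlier) and mean(earlier) - mean(recent) > drop
        if low or declining:
            flagged.append(account)
    return sorted(flagged)


if __name__ == "__main__":
    # Hypothetical sentiment histories for three accounts.
    history = {
        "acme": [0.6, 0.7, 0.5, -0.2, -0.4, -0.5, -0.6, -0.3],
        "globex": [0.4, 0.5, 0.6, 0.5],
        "initech": [-0.1],
    }
    print(churn_risk_accounts(history))
```

Checking both the absolute level and the change against an account’s own baseline catches customers who are still polite but noticeably less satisfied than before; the flagged list could then be handed to account managers.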

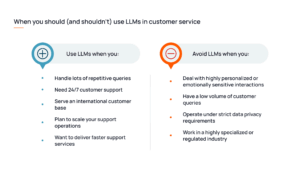

When should you integrate LLMs into your customer support strategy?

While the hype around LLMs in customer service is real, it’s essential to understand when the technology truly fits your business needs. Studies suggest that LLMs can reduce the time agents spend on customer interactions by about 10% on average. Across a whole support team, that can add up to meaningful annual savings and higher customer satisfaction.

Alt tag: When you should (and shouldn’t) use LLMs in customer service

So, when is it worth investing in LLMs? Here are the key scenarios when adoption makes sense:

- Lots of repetitive queries

If your support team spends most of its time on repetitive tasks like FAQs, order status, and password resets, it makes sense to shift much of this work to LLMs. For example, a SaaS provider can integrate an LLM-powered chatbot to reduce manual effort and free up agents for other tasks.

- 24/7 support

LLMs can provide round-the-clock customer service without the need to hire overnight or global teams. This is particularly valuable for companies with a global reach, including online services and travel booking platforms. Their customers expect to reach out at any time of day, and with LLMs, you don’t need to worry about time zones.

- International customer base

If you serve international audiences, you probably need support in multiple languages or dialects. LLMs can reduce your need for native-speaking agents or translators because they can understand and respond in many languages.

- Plans to scale support operations

If your business is growing quickly, LLMs can help you scale customer service with relatively low investment. This makes them a good fit for startups and small and mid-size businesses expanding into new markets, since LLMs can handle a growing volume of queries at a lower cost.

- Faster support services

If you work in a fast-paced environment, LLMs can be a strong choice. They’re capable of understanding context, summarizing past interactions, and generating accurate responses within seconds. For example, LLMs can help a telecom company resolve a billing problem by summarizing the customer’s history and offering step-by-step guidance. The result is shorter wait times and better customer service.

When LLMs might not be the right fit for customer service

In the scenarios above, LLMs can be a win-win for customers, agents, and your company. But in some scenarios, you’d be better off considering alternative solutions:

- Highly personalized or emotionally sensitive interactions: LLMs are still machines, and people do much better in work that regularly demands empathy, reading subtle cues, and complex human judgment. For example, a mental health support line benefits more from human-to-human interaction, where a soothing tone and genuine emotion matter.

- Low query volume: Here, an LLM deployment is unlikely to pay for itself. Wait until you have a steady queue of customer requests before investing in LLMs for customer service. Some businesses, such as a B2B consultancy with a small client base, may not need one at all.

- Strict data privacy requirements: If you handle sensitive data, such as confidential contracts or medical records, it can be risky to feed this data to models. A company risks data breaches or compliance violations, which can lead to reputational damage and penalties under regulations like GDPR or HIPAA.

- Highly specialized or regulated industry: LLM solutions may struggle to fully grasp niche domain knowledge or legal nuances. Financial advisory firms or healthcare providers that must keep pace with regulations such as HIPAA risk inaccuracies or compliance issues. The picture may change if the model is fine-tuned and kept up to date for that specific domain.

While LLMs aren’t a universal fix, they can be a great solution in many customer service scenarios. Still, they have limitations, such as outdated knowledge or a lack of domain-specific understanding. This is where RAG can make a difference.

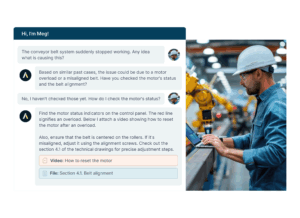

RAG as a boost to LLMs

RAG is a framework that improves the performance of LLMs, including in customer service. Whereas a standalone LLM generates responses only from its internal training data, a RAG system first retrieves relevant information from external sources, such as your help center or product documentation, and passes it to the LLM to ground its answer.

Caption: Example of RAG-driven chatbot

Alt tag: Example of RAG-driven chatbot

Here’s how RAG strengthens LLMs:

- Accurate and current information: Since RAG retrieves up-to-date information from verified sources at query time, the model delivers more current and reliable answers. Research suggests RAG-based outputs can be about 43% more accurate than those from traditionally fine-tuned LLMs.

- Transparency and trust: RAG can generate responses with citations, so users can trace the sources of information. This builds trust because users can verify the facts behind the AI’s suggestions.

- Domain-specific relevance: By feeding retrieved data directly into the model’s prompt, RAG makes responses more tailored to your business’s unique context. This keeps your answers domain-specific, be it insurance, fintech, or e-mobility.

- Cost and resource efficiency: Since RAG doesn’t require constant retraining, it’s a cost-effective solution for businesses. When you update your knowledge base, your LLM solution picks up the new information and stays up to date.
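To make the retrieve-then-generate flow of RAG more concrete, here is a minimal Python sketch of the retrieval and prompt-assembly steps. It ranks help-center passages by simple word overlap, a stand-in for the embedding-based vector search most production RAG systems use, and numbers each passage so the model can cite it. The `Doc` type, the sample knowledge base, and the prompt wording are hypothetical; the final prompt would be sent to whichever LLM you use.

```python
import re
from dataclasses import dataclass


@dataclass
class Doc:
    doc_id: str
    text: str


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, docs: list[Doc], k: int = 2) -> list[Doc]:
    """Return up to k docs sharing the most words with the query.

    Word overlap is a simple stand-in for embedding-based vector search.
    """
    q = tokenize(query)
    scored = [(len(q & tokenize(d.text)), d) for d in docs]
    scored = [pair for pair in scored if pair[0] > 0]  # drop docs with no overlap
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [d for _, d in scored[:k]]


def build_rag_prompt(query: str, docs: list[Doc], k: int = 2) -> str:
    """Insert the retrieved passages into the prompt, numbered for citation."""
    hits = retrieve(query, docs, k)
    context = "\n".join(f"[{i}] ({d.doc_id}) {d.text}" for i, d in enumerate(hits, 1))
    return (
        "Answer the customer using only the sources below and cite them as [n]. "
        "If the sources don't contain the answer, say so and offer a human agent.\n"
        f"Sources:\n{context}\n"
        f"Question: {query}"
    )


if __name__ == "__main__":
    # Hypothetical help-center snippets.
    kb = [
        Doc("returns", "You can return items within 30 days of delivery."),
        Doc("shipping", "Standard shipping takes 3 to 5 business days."),
        Doc("passwords", "Reset your password from the login page."),
    ]
    print(build_rag_prompt("How do I reset my password?", kb, k=1))
```

Because the knowledge lives in `docs` rather than in the model’s weights, editing a help-center article changes future answers without any retraining, and the numbered sources give the model something concrete to cite.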

Making the most of LLMs in customer support

LLMs are changing customer service as we know it. They automate routine tasks, provide instant multilingual responses, and contribute to more efficient 24/7 operations. They might not be a perfect fit for every business, especially those with sensitive data or highly personalized services. Still, they offer immense value when applied thoughtfully and can become a win-win solution for your team, your customers, and your bottom line.

If you’re curious to see what else LLMs can do beyond customer support, we recommend reading this white paper on LLM and RAG use cases in business. It covers a much wider range of applications than we discussed here, backed by real-life case studies.