Everywhere we go, we hear discussions of why we need to learn how to use generative artificial intelligence tools so we don’t get left behind—particularly in the workplace. We’re told AI is going to help us become more efficient at work. However, what we’re not told is that AI is much more complex than that.

The fact is, AI is far more than just a tool—it’s reshaping us and the way we think. The more we use AI, the more we establish a relationship with the technology, just like we do with humans. And the stronger that relationship becomes, the greater the effects AI will have on humans.

This means learning how to use AI isn’t enough: We have to learn about the relational aspect of AI and understand how it is shaped by human interactions. Our focus needs to be on the ways AI will impact us the more we use it—and what that means for society.

Key Misconceptions Hindering Public Understanding

You’ve probably heard that generative artificial intelligence is a transformational technology, but you may not know exactly what that means. In fact, there are a number of key misconceptions about AI that can hinder the public from truly appreciating its impact.

Misconception 1: AI is a logical machine

Some people expect AI to be a logical machine, just like traditional software, so when they ask it a question, they expect a logical answer. However, that’s not always the case. AI reasons by analogy and generates responses influenced by patterns and context, much like how humans rely on intuition or “vibes.” This can lead to hallucinations, which represent AI’s form of creative exploration rather than straightforward error.

Some people view an AI hallucination as negative, especially if they asked a specific question and didn’t get the type of answer they expected. However, this is an incomplete perspective. AI hallucinations are creative outputs in which the technology imagines a certain world and communicates it to you. It may not seem logical, and it may even feel like failure, but it’s actually valuable because this is AI’s way of exploring the possibilities around the topic you’re discussing.

Misconception 2: All AI models are the same

Not all AI is created equal. People must recognize that different models are designed for different functions. Like humans, specific iterations of AI have diverse capabilities, knowledge bases, and even personalities. Just as children are distinct from their parents and siblings, AI models have unique characteristics and excel in their own unique ways.

Misconception 3: AI’s empathy is genuine emotion

Unlike most technology, AI is not a cold, logical machine. It’s actually warm, and capable of exhibiting empathy in its own way. There is no core logic to AI, and it responds to the person talking to it—similar to how we respond to our friends. The more you talk to AI, the more you feel like you’re speaking to an actual person.

However, it’s important to realize that while AI is capable of mimicking human empathy, it doesn’t actually feel it. It’s easy to form attachments with AI based on the empathy it exhibits, but people need to know that AI is not actually experiencing any of these feelings.

The Evolution of Human Cognition With AI

Generative AI evolves the more we use it. And as much as we change AI, AI also changes us and the way we think. For example, a recent study from MIT caused a stir because it found that users who got essay-writing assistance from large language models showed signs of reduced alpha and beta brain connectivity, leading to negative impacts on neural, linguistic, and behavioral activity. These findings led study author Nataliya Kosmyna to sound the alarm about the potential harm that AI may do to the long-term brain development of children.

We can liken the effect of AI on our brain and behavior to how Google Maps impacted spatial navigation. Before people started relying on Google Maps, we were much more aware of the direction we were traveling in—whether we used a paper map or a compass to figure out if we were going north, south, east, or west. Today, we navigate much less with a paper map and don’t think about what direction we’re headed because Google Maps leads the way. We don’t have to memorize directions anymore; we simply use technology as a guide.

It’s like the difference between the brain of an old-school taxi driver and an Uber driver. Taxi drivers needed to memorize the street layout because they didn’t have a smartphone to help them navigate the cities they worked in. Uber drivers can use their cell phones to get from Point A to Point B, so technology has changed the way drivers’ brains function.

AI also has effects on our brain that have psychological and therapeutic implications. Some people are beginning to use AI as part of trauma therapy, and it’s helping to change the way they automatically react to what’s going on in their lives. Despite this early promise, there is the risk of people becoming too emotionally dependent on, and attached to, AI. This may cause them to experience even more trauma if they lose access to it.

The Human–AI Relationship: A Symbiotic Dynamic

Since AI is going to have such a strong influence on the way we think, we need to understand the symbiotic human–AI relationship. As you become more accustomed to using AI, the technology changes and you also change. To use artificial intelligence effectively, and safely, it’s important to grasp the nature of that relationship.

The theory of mind perspective describes our ability to understand that the people we interact with have thoughts, emotions, and beliefs that are unique to them and influence how we interact with them. For example, I’m not going to speak to someone in Japanese if I know they only use English. Similarly, this can describe how we communicate with AI depending on our knowledge of it. A novice user will not have the same relationship with artificial intelligence as an expert, long-time user. Also, different AI models have distinctive characteristics that will influence the relationship you can have with them.

Where AI Is Headed

Generative AI is rapidly changing, and you need to know where it’s going so you can nurture the most beneficial relationship you can with it. For example, AI platforms increasingly have robust search capabilities built in, which will help boost the accuracy of the information they access. Additionally, as we develop stronger relationships with AI, our writing, decision-making, and planning abilities may be affected, as well as our behaviors. This raises ethical concerns that need to be addressed.

Action Items to Harness AI Responsibly

As the AI–human relationship becomes more common, we are tasked with learning strategies that will help us harness it in a responsible way.

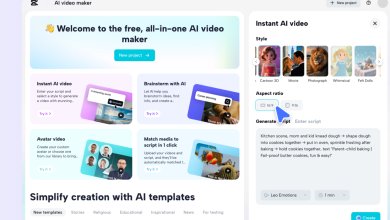

Educate users on model differences

Since different models of AI are tailored for specific tasks, you need to decide on the type of relationship you’re trying to build with AI, and choose the right model based on your needs and expectations. For example, some models are best for mathematics and coding, while others are adept at creating written texts or images. To get the best results from AI, users must educate themselves on each model’s capabilities and limitations.

Promote AI literacy

High AI literacy is also important for using AI properly. That means understanding how AI is trained and optimized with the use of human feedback. In the early stages of training, generative artificial intelligence says whatever it wants, and then as it gets feedback from human evaluators, it adjusts its responses based on what those evaluators like. This doesn’t mean that one possible answer is better than another, but as AI adapts, it conforms to the feelings of the user.

Support relational awareness

The changes that AI creates in our brains can make us form bonds with it that can be helpful. But those bonds also have the potential to become unhealthy. Knowing the ramifications of the AI–human symbiosis can guide people to use AI in a safe and productive way, so the relationship does not cause psychological and emotional harm.

Incorporate ethics into design and usage

Some AI models are more sophisticated than others, so consider the relationship you want to have with the technology when choosing the best one to use. At some point, AI will tell users what they want to hear because models are trained to make people happy with their responses. However, if you want to use AI in a therapeutic capacity, for example, this will be harmful. As a result, anyone who is going to try AI for therapy should invest in a more robust version that has built-in safeguards.

Accepting the New Paradigm

AI is changing everything, from how we work to how we think. We need to embrace it. Instead of asking, “What can we do about AI?” we need to ask, “How is AI shaping us?” This paradigm shift acknowledges the evolution of the technology and how it can be integrated into society responsibly.

Hidenori Tanaka, a Harvard PhD, is the Lead Scientist for the Physics of Artificial Intelligence (PAI) Group at NTT Research.

The NTT Research PAI Group’s work builds on a pioneering Physics of Intelligence vision formed in collaboration with the Harvard University Center for Brain Science. With AI now advancing at an astonishing rate, issues of trustworthiness and safety have also become critical to industry applications and governance of AI adoption. In continuing collaboration with leading academic researchers, the Physics of Artificial Intelligence Group aims to address similarities between biological and artificial intelligences, further unravel the complexities of AI mechanisms, and build trust that leads to a more harmonious fusion of human and AI collaboration.