At 2:17 a.m., the IT department is deserted and all is quiet—at least, to human ears. But beneath the silence, something is stirring. A sudden spike in memory usage starts to ripple across a Kubernetes cluster, subtle enough that it wouldn’t trip any static alert thresholds. Yet it does not go unnoticed.

Before the on-call engineer’s phone can buzz, a host of AI agents embedded in Grafana’s observability stack are already at work. They correlate the memory spike with a configuration drift detected hours earlier and cross-check similar incidents in historical logs. Then they run a simulated fix, identify a likely resolution, and execute a safe rollback, logging each step in natural language, complete with reasoning and confidence scores.

This isn’t the work of a script or a rule engine. The whole detection and analysis process is carried out by something closer to a team of collaborators: a set of autonomous software agents capable of perceiving, reasoning, and acting within complex systems.

Welcome to the age of Agentic AI, where threat resolution, amongst myriad other software functions, is on the cusp of a major revolution.

Introducing Grafana Assistant

At the heart of this revolution is Grafana Labs, a leading observability provider. At ObservabilityCON 2025 in London, the company announced the general availability of Grafana Assistant across its open observability platform, Grafana Cloud.

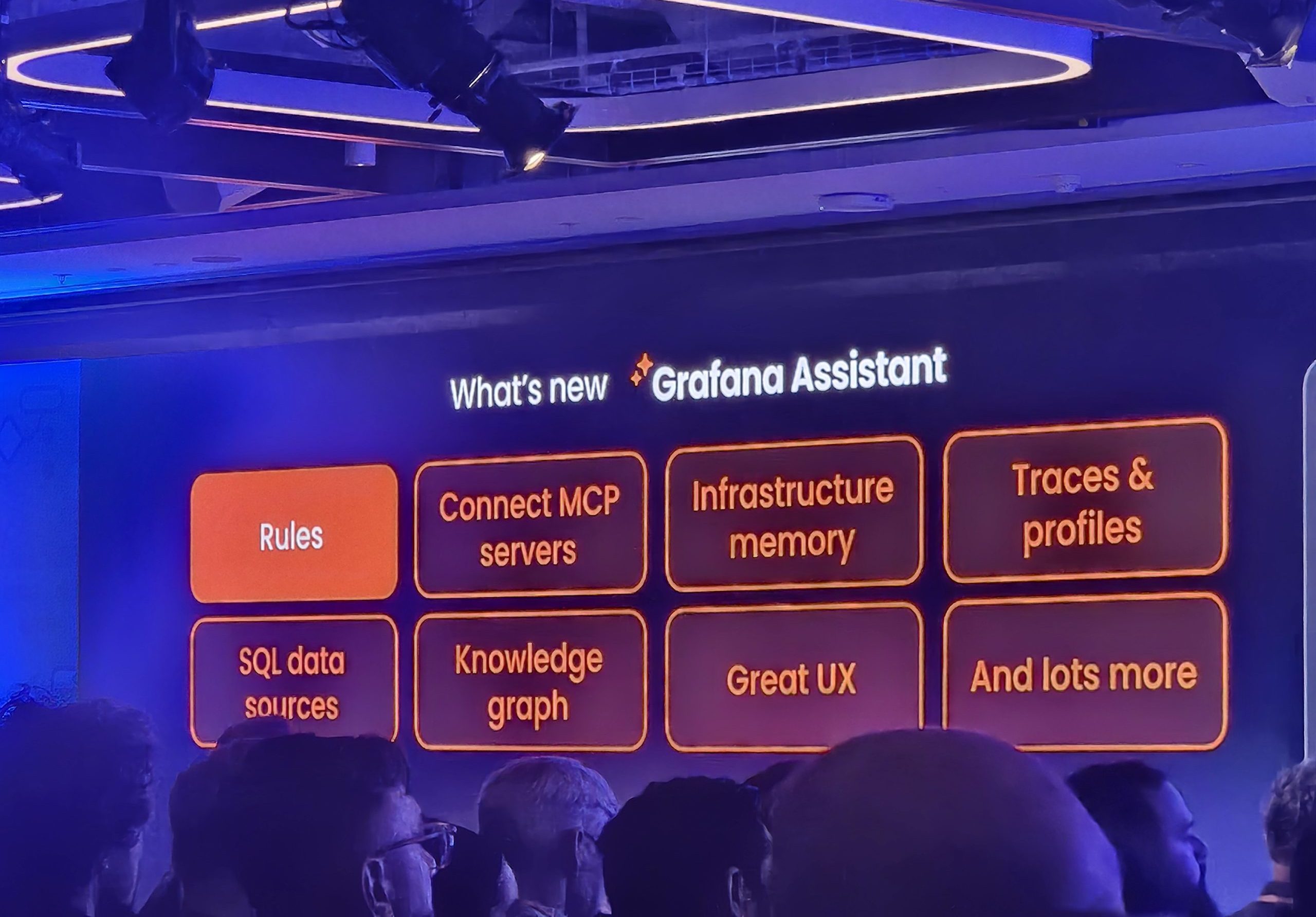

Grafana Assistant is a context-aware AI agent designed specifically for Grafana Cloud, and it is now embedded across Grafana Cloud’s full observability stack. Its key capabilities include:

- Query generation without deep syntax knowledge: Automatically generate and refine PromQL, LogQL, and TraceQL queries from natural-language prompts, and work with Pyroscope profiling data.

- Organizational value unlock: Self-service access for non-observability teams reduces dependency on stretched SREs.

- In-context onboarding and learning support: Access contextual help, explanations, and ‘what to try next’ guidance directly within Grafana.

- Enterprise-ready access controls: Building on existing RBAC in Grafana Cloud, Grafana Assistant now offers Assistant-specific roles and permissions. Admins can configure granular access to features such as rules, Assistant Investigations, and MCP server management, so that teams can align observability workflows with enterprise security and compliance requirements.
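Assistant-specific roles and permissions can be pictured as a mapping from roles to the features they unlock. The sketch below is purely illustrative. The `Permission` values, the role names, and the `is_allowed` helper are all hypothetical and do not reflect Grafana Cloud’s actual RBAC identifiers or API.

```python
from enum import Enum
from typing import Dict, Iterable, Set


class Permission(Enum):
    # Hypothetical permission identifiers, one per Assistant feature
    USE_ASSISTANT = "assistant:use"
    MANAGE_RULES = "assistant.rules:write"
    RUN_INVESTIGATIONS = "assistant.investigations:run"
    MANAGE_MCP_SERVERS = "assistant.mcp:manage"


# Hypothetical roles an admin might define at a granular level
ROLES: Dict[str, Set[Permission]] = {
    "viewer": {Permission.USE_ASSISTANT},
    "responder": {Permission.USE_ASSISTANT, Permission.RUN_INVESTIGATIONS},
    "admin": set(Permission),  # every Assistant permission
}


def is_allowed(user_roles: Iterable[str], permission: Permission) -> bool:
    """A user may use a feature if any of their roles grants the permission."""
    return any(permission in ROLES.get(role, set()) for role in user_roles)


print(is_allowed(["responder"], Permission.RUN_INVESTIGATIONS))
print(is_allowed(["viewer"], Permission.MANAGE_MCP_SERVERS))
```

Unknown roles grant nothing, which is the safe default for an access check.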

Alongside this, the company publicly previewed an additional feature that will soon enhance the agent’s capabilities: Assistant Investigations.

This feature has been designed specifically for incident response, and works through a network of agents which proactively analyse data, generate findings, and provide actionable recommendations for remediation.

Assistant Investigations is designed to work alongside Grafana Assistant to provide a seamless workflow for resolving complex incidents, and will be rolled out automatically to customers who have access to Grafana Assistant.

This article looks at the current capabilities of AI agents to uncover all that they can (but also what they can’t) do. Crucially, we explore how the technology behind their capabilities really works by speaking to a couple of the masterminds involved in the design and deployment of Grafana Assistant and Assistant Investigations: Principal Software Engineers at Grafana Labs, Dmitry Filimonov and Cyril Tovena.

How autonomous are Grafana’s AI agents?

Grafana Assistant operates across structured analytics and visualisation platforms that contain organisation-specific data. This enables the Assistant to analyse that data and to perform translation and aggregation tasks that would otherwise have to be written by hand in domain-specific query languages such as PromQL, LogQL, or TraceQL.
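To make that translation and aggregation work concrete, the function below builds the kind of PromQL an engineer might otherwise write by hand to answer “what share of checkout requests are failing?”. It is a hypothetical, template-based stand-in; the Assistant itself generates queries with an LLM. The metric name `http_requests_total` and the `service` and `status` labels are assumptions that depend on how a given service is instrumented.

```python
def error_ratio_query(service: str, window: str = "5m",
                      metric: str = "http_requests_total") -> str:
    """Build a PromQL expression for the fraction of a service's requests returning 5xx."""
    # Per-second rate of 5xx responses, summed across all series for the service
    errors = f'sum(rate({metric}{{service="{service}", status=~"5.."}}[{window}]))'
    # Per-second rate of all responses for the same service
    total = f'sum(rate({metric}{{service="{service}"}}[{window}]))'
    return f"{errors} / {total}"


print(error_ratio_query("checkout"))
```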

“When you’re using the Assistant, it’s just an API call, and then it’s coming back to you with a response.” ~ Cyril Tovena, Principal Engineer at Grafana Labs

“Assistant is single threaded, and you guide it, so it’s more of an interactive human-AI interface” ~ Dmitry Filimonov, Principal Software Engineer at Grafana Labs

That said, while it is built on an LLM and performs some of the same functions as a chatbot, Grafana Assistant can also take actions out of the box, such as building dashboards, navigating the user through Grafana, and writing queries.

Meanwhile, the agents which act under Assistant Investigations are capable of greater autonomy, especially when acting as a team. In fact, their collective level of autonomy means that the discovery of infrastructure issues can be fully automated, essentially reducing the role of the engineers to that of a reviewer or overseer.

“Assistant Investigations is autonomous, and there are multiple assistants all working together and collaborating. They message each other, but also do things on their own.” ~ Dmitry Filimonov, Principal Software Engineer at Grafana Labs

Human input is still needed at the setup stage of Assistant Investigations, where users must configure alerts (either manually or with help from Grafana Assistant).

“With investigations, it’s more autonomous, so it could be triggered by an alert, but you still have to set up the alert, though the assistant can also help you set up alerts. Then once you have the set up done, it’s gonna trigger automatically, so it’s pretty simple to use.” ~ Cyril Tovena, Principal Engineer at Grafana Labs

Overall, Grafana’s Assistant Investigations model demonstrates the growing autonomy of Agentic AI, whereby agents take cues from their environment (e.g. a firing alert) and use them as context to take action. This represents a step away from other types of Generative AI, such as chatbots, which remain dependent on explicit user prompts to act.
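The difference between the two trigger models can be sketched in a few lines. Once an alert exists, the environment supplies the prompt instead of a user. The `Alert` type, `on_evaluation`, and `start_investigation` below are hypothetical names, not Grafana APIs, and alert evaluation is reduced to a simple threshold comparison.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Alert:
    name: str
    service: str
    value: float
    threshold: float

    @property
    def firing(self) -> bool:
        return self.value > self.threshold


def start_investigation(alert: Alert) -> str:
    """Hypothetical hook: turn the alert into the investigation's opening context."""
    return (f"Alert '{alert.name}' is firing for {alert.service}: "
            f"{alert.value} > {alert.threshold}. What is going on?")


def on_evaluation(alerts: List[Alert],
                  start: Callable[[Alert], str] = start_investigation) -> List[str]:
    """Called after each alert evaluation; no human prompt is involved."""
    return [start(alert) for alert in alerts if alert.firing]


alerts = [
    Alert("HighMemory", "checkout", value=0.93, threshold=0.85),
    Alert("HighLatency", "search", value=0.20, threshold=0.50),
]
for context in on_evaluation(alerts):
    print(context)
```

The human work in this sketch is defining the `Alert`. Everything after that runs without a prompt.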

How do the agents work together in Assistant Investigations?

In Assistant Investigations, there are two staple agents: a lead agent and a reviewer agent. As their names suggest, the lead orchestrates the overall operation by delegating tasks to other agents, while the reviewer checks the outputs, or ‘findings’, of those agents.

“There’s one lead agent whose job is to start new agents when they are needed. So in the beginning it just starts with very basic ones. For example, if you ask what’s going on with the service, and there’s no extra context, it’s just gonna start looking at the very basic metrics like the request rate, the presence of any errors. So the lead essentially plans the operations and starts the other agents.” ~ Dmitry Filimonov, Principal Software Engineer at Grafana Labs

“We have this interesting dance between the lead agent and the reviewer agent, where the reviewer agent will look at the evidence that the other agents collected and will recommend to the lead agent what to do next.” ~ Dmitry Filimonov, Principal Software Engineer at Grafana Labs
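The division of labour described above can be sketched as a simple loop. The lead starts the basic agents. After each batch, the reviewer inspects the evidence and recommends the next specialist, or none.

Everything here is a hypothetical simplification:

- There are no LLM calls.
- Specialists are plain functions returning canned findings.
- The agent names and the `max_agents` budget are assumptions.

Each specialist sees only the question, never other agents’ findings. Only the loop sees everything.

```python
from typing import Callable, Dict, List, Optional

Finding = Dict[str, object]


def investigate(question: str,
                specialists: Dict[str, Callable[[str], Finding]],
                reviewer: Callable[[List[Finding]], Optional[str]],
                max_agents: int = 6) -> List[Finding]:
    """Lead-agent loop: run pending specialists, then ask the reviewer what next."""
    # With no extra context, the lead starts with the most basic signals.
    pending = ["request_rate", "errors"]
    evidence: List[Finding] = []
    while pending and len(evidence) < max_agents:
        name = pending.pop(0)
        # Specialists receive only the question, not each other's findings.
        evidence.append(specialists[name](question))
        if not pending:
            # Batch finished: the reviewer recommends the next agent (or None).
            suggestion = reviewer(evidence)
            if suggestion is not None:
                pending.append(suggestion)
    return evidence


specialists = {
    "request_rate": lambda q: {"agent": "request_rate", "anomaly": False},
    "errors": lambda q: {"agent": "errors", "anomaly": True},
    "logs": lambda q: {"agent": "logs", "anomaly": True},
}


def reviewer(evidence: List[Finding]) -> Optional[str]:
    """Ask for logs once any anomaly shows up; otherwise stop."""
    ran = {f["agent"] for f in evidence}
    if any(f["anomaly"] for f in evidence) and "logs" not in ran:
        return "logs"
    return None


result = investigate("What's going on with checkout?", specialists, reviewer)
print([f["agent"] for f in result])  # ['request_rate', 'errors', 'logs']
```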

These other agents are context dependent, and are initiated into the investigation by the lead agent. They take on the role of a ‘specialist’, performing a deep dive into one type of data such as logs, metrics, or profiles.

“These agents are responsible for figuring out what’s going on. Their role is to form hypotheses.” ~ Dmitry Filimonov, Principal Software Engineer at Grafana Labs

“You can have different specialists for Loki, Prometheus, you even have one for MCPs if you want to go to external services, there are also ones for tracing and profiling data. So they’re pretty different: different systems, different prompts, different behaviour.” ~ Cyril Tovena, Principal Engineer at Grafana Labs

Architecturally, however, there is no real difference between them.

“The code itself running the agents is the same, but the prompt and the tools each one is gonna use are different. So that means in the future that if there is a new signal, or new way of checking something, creating a new agent just means setting a new system prompt and a new set of tools.” ~ Cyril Tovena, Principal Engineer at Grafana Labs
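One way to picture “same code, different prompt and tools” is a single agent class parameterised by a spec. This is a minimal sketch under stated assumptions. `AgentSpec`, `Agent`, and the two example specialists are hypothetical. The tools return canned strings instead of querying Loki or Prometheus. The LLM call that would consume `system_prompt` is omitted.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict


@dataclass(frozen=True)
class AgentSpec:
    """Everything that distinguishes one specialist from another."""
    name: str
    system_prompt: str
    tools: Dict[str, Callable[[str], str]] = field(default_factory=dict)


class Agent:
    """One shared runtime; behaviour comes entirely from the spec."""

    def __init__(self, spec: AgentSpec):
        self.spec = spec

    def call_tool(self, tool: str, arg: str) -> str:
        # A specialist can only use the tools its spec grants it.
        if tool not in self.spec.tools:
            raise PermissionError(f"{self.spec.name} has no tool '{tool}'")
        return self.spec.tools[tool](arg)


# Hypothetical specialists: same class, different prompt and tools.
LOKI = AgentSpec("loki", "You investigate logs using LogQL.",
                 {"query_logs": lambda q: f"ran LogQL: {q}"})
PROMETHEUS = AgentSpec("prometheus", "You investigate metrics using PromQL.",
                       {"query_metrics": lambda q: f"ran PromQL: {q}"})

print(Agent(LOKI).call_tool("query_logs", '{app="checkout"} |= "error"'))
```

Under this design, supporting a new signal means defining one more `AgentSpec` rather than writing a new agent runtime.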

Most communication in Assistant Investigations flows top-down, from the lead or reviewer agents at the top of the command chain to the specialist agents at the bottom.

“The lead really oversees everything, which is necessary because the specialists don’t know each other, they don’t know about the lead and they don’t know about the other specialists.” ~ Cyril Tovena, Principal Engineer at Grafana Labs

Thus, the closest thing to reciprocal communication is the exchange between the lead and reviewer agents, in which each one’s output shapes the other’s behaviour in a continuous loop.

How is the risk of AI hallucination managed, and what happens when there is conflicting data from different sources?

As with all LLM-based systems, there is some, arguably inevitable, risk of hallucination. Grafana Labs manages this risk by having the agents show all their working, which human analysts can then use to trace where and how a hallucination arose.
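“Showing the working” can be modelled as an audit trail attached to every finding, recording each tool call that contributed to a claim. The sketch below is hypothetical. The `Finding` and `Step` types are illustrative, and the query and result strings are example values, not real output. The `is_unsupported` heuristic (a claim with no recorded tool output behind it) is an assumption about where a reviewer might look first.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Step:
    tool: str
    query: str
    result: str


@dataclass
class Finding:
    claim: str
    steps: List[Step] = field(default_factory=list)

    def record(self, tool: str, query: str, result: str) -> None:
        """Log each tool call that contributed to the claim."""
        self.steps.append(Step(tool, query, result))

    def is_unsupported(self) -> bool:
        # Assumed heuristic: a claim with no tool output behind it is the
        # first place to look for a hallucination.
        return not self.steps

    def explain(self) -> str:
        lines = [f"Claim: {self.claim}"]
        for i, step in enumerate(self.steps, start=1):
            lines.append(f"  {i}. {step.tool}({step.query!r}) -> {step.result}")
        return "\n".join(lines)


finding = Finding("checkout pods are near their memory limit")
finding.record("query_metrics",
               'container_memory_working_set_bytes{pod=~"checkout-.*"}',
               "example value: 97% of limit")
print(finding.explain())
```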

Conflicting data is also handled automatically in Assistant Investigations, essentially by escalating the investigation: the reviewer agent starts specialist agents whose job is to dig deeper into the data in question and find the source of the conflict.

“So this is another area where the reviewer agent comes into play. For example, maybe the agents will find one metric that says the pod is down, or the application isn’t running, and then another metric that says the application actually is running. What the reviewer will do in that case is try to get more corroborating evidence if it’s not already available, so it will start more agents, and perhaps even look at another metric, or memory, for example, to either validate the theory or disprove it.” ~ Dmitry Filimonov, Principal Software Engineer at Grafana Labs
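The escalation in the quote above can be sketched as follows: while the signals disagree, keep starting agents for additional corroborating checks. This is a simplified, hypothetical model. Each signal is reduced to a boolean (“does this say the application is running?”), and `run_check` stands in for starting an agent. A real reviewer would reason about the evidence rather than merely collect more of it.

```python
from typing import Callable, Dict, List


def corroborate(evidence: Dict[str, bool],
                extra_checks: List[str],
                run_check: Callable[[str], bool]) -> Dict[str, bool]:
    """Start one more agent per extra check while the evidence still disagrees.

    Each value records whether a signal says the application is running.
    """
    evidence = dict(evidence)  # don't mutate the caller's copy
    for check in extra_checks:
        if len(set(evidence.values())) <= 1:
            break  # all signals agree: no conflict left to investigate
        evidence[check] = run_check(check)
    return evidence


# One metric says the pod is down, another says the app is serving traffic.
conflicting = {"up": False, "http_requests": True}
# Hypothetical corroborating agents, e.g. memory and CPU usage checks.
result = corroborate(conflicting, ["memory_usage", "cpu_usage"], lambda check: True)
print(result)
```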

For the benefit of the users, and in order to maintain observability into the findings of each agent within the system, the complexity of the investigation (as measured by the amount of conflicting data) is continually updated as new evidence is found. This is then shown on the user interface through a ‘confidence’ tab. The higher the level of confidence, the more conclusive the evidence is that there is a real security issue. More often than not, when the confidence level is low, it indicates that the security alert may be a false one.
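The article does not say how the confidence value is computed. As a hedged illustration only, the sketch assumes confidence is the share of findings that support a real issue, recomputed after every new finding. It also assumes hypothetical display thresholds, with a low score flagged as a possible false alarm.

```python
class ConfidenceTracker:
    """Recompute confidence each time an agent reports a new finding."""

    def __init__(self) -> None:
        self.supporting = 0      # findings consistent with a real issue
        self.contradicting = 0   # findings suggesting a false alarm

    def add(self, supports_issue: bool) -> float:
        if supports_issue:
            self.supporting += 1
        else:
            self.contradicting += 1
        return self.confidence

    @property
    def confidence(self) -> float:
        total = self.supporting + self.contradicting
        return self.supporting / total if total else 0.0

    def label(self, low: float = 0.4, high: float = 0.75) -> str:
        # Hypothetical thresholds for what a UI might display
        if self.confidence >= high:
            return "high"
        if self.confidence < low:
            return "low (possible false alarm)"
        return "medium"


tracker = ConfidenceTracker()
for supports in [True, True, False, True]:
    print(round(tracker.add(supports), 2), tracker.label())
```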

How are the different types of data aggregated by AI agents, and how are they weighted?

AI agents can provide a holistic view of what is going on within an organisation only because Grafana Cloud aggregates multiple types of telemetry data. But you might be wondering how this data comes together in an investigation, and whether agents are programmed to prioritise one type of data over another.

Most of the time, the relative importance of a certain type of data depends on the context of the investigation. This importance determines which agents are deployed in an investigation, as well as the sequence of their deployment.

“We provide instructions of which agents to use in a given situation, which is basically weighting in some way.” ~ Dmitry Filimonov, Principal Software Engineer at Grafana Labs

“With latency issues, for example, you will go more to traces and metrics rather than logs, because you have more information on latency in those systems.” ~ Cyril Tovena, Principal Engineer at Grafana Labs

Profiling data, meanwhile, is a particularly useful type of data to use when there are performance issues, because it directs the agent to the exact line of code that reveals where the issue originates.

“If it’s clearly a performance issue, we will start a profiling agent if you have any profiling data on it” ~ Dmitry Filimonov, Principal Software Engineer at Grafana Labs

Nevertheless, most investigations will start out by validating the alert, and this is done through metrics (i.e. the measurements of a service captured during its runtime). While this doesn’t necessarily give metrics a heavier weighting overall, it demonstrates that there is an order to the relevance of different data types to different stages of the investigation.

This order fundamentally enables the agents to triage the different data types relevant to an investigation by prioritising certain tasks over others.
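The weighting and ordering described in this section can be sketched as a small planner. It first validates the alert against metrics. It then runs specialists in a per-issue preference order, skipping signals the service does not emit. The two playbook entries follow the examples quoted above: latency favours traces and metrics over logs, and performance issues use profiling when profiles exist. The `PLAYBOOK` structure, the default order, and the stage names are hypothetical.

```python
from typing import Dict, List, Set

# Hypothetical playbook: preferred specialist order per issue type.
PLAYBOOK: Dict[str, List[str]] = {
    "latency": ["traces", "metrics", "logs"],
    "performance": ["profiles", "traces", "metrics"],
}
DEFAULT_ORDER = ["metrics", "logs", "traces"]


def plan_investigation(issue_type: str, available: Set[str]) -> List[str]:
    """Return the stages to run, in order."""
    stages: List[str] = []
    # Stage 1: validate the alert against metrics, if metrics exist.
    if "metrics" in available:
        stages.append("validate_alert:metrics")
    # Stage 2: specialists in the playbook's preferred order, skipping
    # signals this service does not emit (e.g. no profiling data).
    for signal in PLAYBOOK.get(issue_type, DEFAULT_ORDER):
        if signal in available:
            stages.append(f"specialist:{signal}")
    return stages


print(plan_investigation("performance", {"metrics", "traces"}))
```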

Final thoughts: recognising both the capabilities and limitations of AI Agents

AI agents’ current capabilities remain closely tied to human direction and initiative, but Grafana’s Assistant Investigations demonstrates how coordinating multiple agents across an integrated data platform can increase the autonomy of an Agentic AI system.

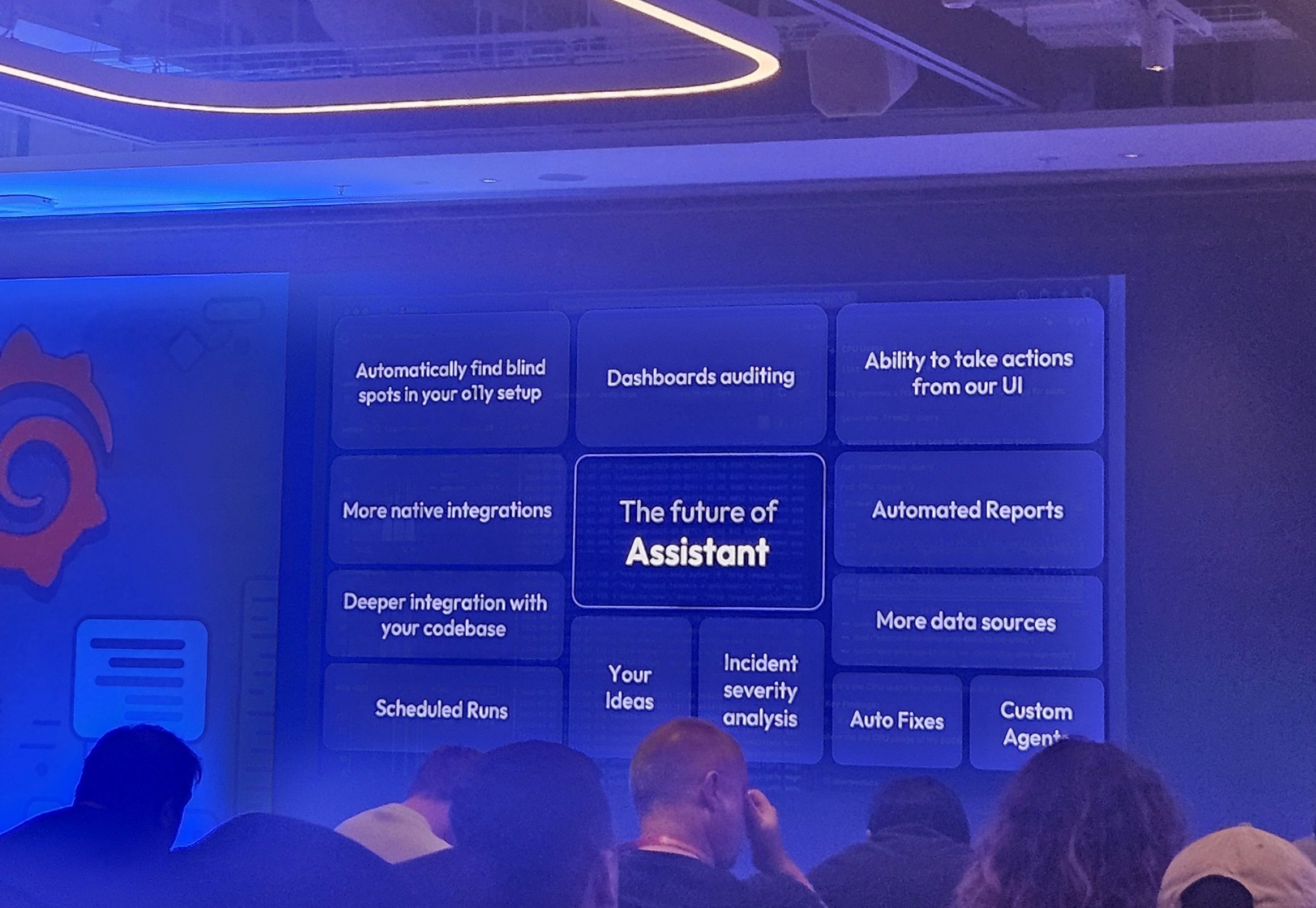

Moreover, because Agentic AI is still very much in development, it is difficult to predict the future capabilities of AI agents, or exactly how much autonomy they could demonstrate in different use cases.

For now, the use cases for the technology are clear enough for initial adoption, allowing it to keep improving at a more measured pace driven by user feedback.

“We’re still developing it, it’s very much a project with a lot of changes and we’re still figuring a lot of things out. At this point we have something we’re very comfortable showing to a larger audience, and that’s why we’re launching it in public preview.” ~ Dmitry Filimonov, Principal Software Engineer at Grafana Labs

Indeed, as one of the more recent forms of autonomous technology, Agentic AI is still at the stage in its development where it is being constantly enhanced thanks to the close monitoring of its applications in different industries, as well as troubleshooting from real-life use cases.

“We try to get a first version that seems to be ok, show it to the customers, and then act fast on the feedback.” ~ Cyril Tovena, Principal Engineer at Grafana Labs

Crucially, the efficiency of AI agents in incident response depends on two things: 1) how the data used in the investigation is organised beforehand, and 2) the specificity of the prompt given to the agents (i.e. the context scope).

“It’s not really a game of launching a lot of agents. That’s what we do right now, but ultimately we would rather have just one agent find the signal and solve the problem. So you want to reduce the amount of leads you have, essentially.” ~ Cyril Tovena, Principal Engineer at Grafana Labs

“If you have a more relevant context, that also really helps. Typically, a prompt will contain quite a lot of information, such as which specific metrics are broken, how long they have been broken for, etc. Because it has more context, the agents won’t need to do as many things. But I think the great thing about our system is that it’s so adaptable; it can do an investigation from the ground up, but it can also do an investigation very quickly and efficiently without digging deep and without starting many agents.” ~ Dmitry Filimonov, Principal Software Engineer at Grafana Labs
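The trade-off in the quote above can be sketched as a planner. Richer context means fewer agents, and it falls back to a broad, ground-up survey only when the prompt names no specific symptom. The `InvestigationContext` fields, the agent labels, and the 60-minute default look-back window are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class InvestigationContext:
    service: str
    broken_metrics: List[str] = field(default_factory=list)
    broken_for_minutes: Optional[int] = None


def agents_to_start(ctx: InvestigationContext) -> List[str]:
    """Pick an initial set of agents based on how much the prompt already says."""
    # Default look-back window (an assumption) when the prompt gives none.
    window = ctx.broken_for_minutes or 60
    if ctx.broken_metrics:
        # Targeted: the prompt names the symptom, so skip broad discovery.
        return [f"metrics:{m}@{window}m" for m in ctx.broken_metrics]
    # Ground-up: no specifics, so survey the basic signals first.
    basics = ("metrics:request_rate", "metrics:errors", "logs", "traces")
    return [f"{signal}@{window}m" for signal in basics]


print(agents_to_start(InvestigationContext("checkout")))
print(agents_to_start(InvestigationContext("checkout", ["p99_latency"], 15)))
```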

Grafana Labs continues to work on these areas through its toolkit in Grafana Cloud, aiming to improve its agents by filtering out noise from irrelevant or inaccurate data so that they can focus on the important signals. A major incentive comes from the high financial and environmental costs of running agents over large volumes of data.

Ultimately, this underscores how dependent AI agents are on the functionality of the data platforms they operate across. In this sense, Grafana Labs and its customers are well placed to benefit significantly from Agentic AI, thanks to the platform’s strong data-management foundations.