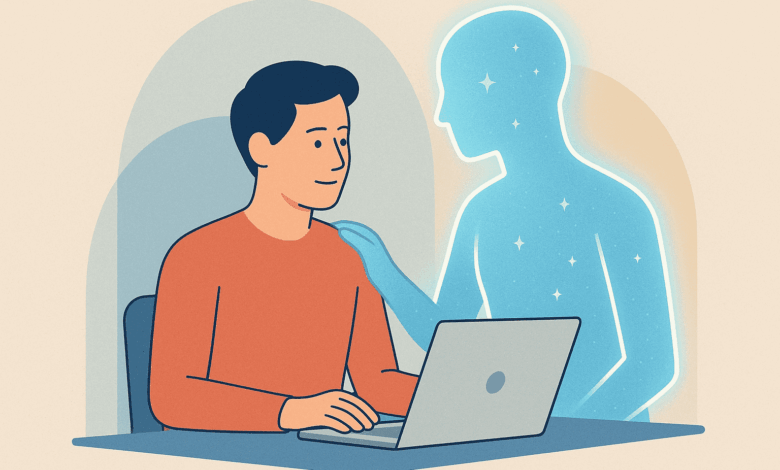

For most of the history of computing, software has organized information and automated repetitive tasks. It has been extremely useful in doing so, but it has remained distant from the human experience itself. AI’s emergence marks the first internet-scale paradigm of relational technology: a form of computing that people relate to much as they relate to other people. The “chat” interface is the hallmark, but video and audio are developing quickly, and together they form a new category known as affective computing.

Software can now notice something about a person’s immediate context and respond with help that feels personal, offering timely support that helps people do their work, learn more effectively, and feel better as they do it. In some ways, this revives an older vision from cybernetics: technology as a feedback partner that senses state and adapts support, rather than a one-way tool for automation.

This moment places us at a fork in the road: we can build human-centric AI that augments our capabilities and helps us realize our full potential, or we can allow AI to surveil us and extract our knowledge, expertise, and intuition in the service of replacing us.

The promise here is straightforward: give more people the kind of attention that once required a small classroom, a close-knit team, a tutor, or a coach. Those settings work because humans notice when someone is drifting, anxious, or stuck, and they can adjust the next interaction accordingly, even drawing on what they know about the individual (how that person likes to be approached, how they respond to different types of feedback, and so on).

These services, however, have almost always been expensive and scarce. With capable AI now present on devices we already use daily, it’s possible to deliver a version of that attentiveness broadly and continuously. The economics shift from rare and costly to common and affordable.

This matters because the prevailing narrative about AI still over-indexes on substitution: machines that replace humans in writing, coding, analysis, and other verticals yet to be defined. The “replacement” narrative, though provocative, obscures a quieter dynamic that will prove just as consequential, if not more so: augmentation.

Affective computing systems meet people where they are, at whatever level of skill or confidence, and help them proceed with more focus, composure, and momentum. In practice, that means fewer abandoned starts, less compounding error under stress, smoother transitions back into focus and flow, and a more sustainable cadence over the course of a day.

A helpful way to think about this is state-to-task matching. People aren’t static; attention ebbs, stress spikes and recedes, and cognitive load varies with context. Output-oriented tools ignore this variability; they assume the user is always ready to push forward. Affective systems, by contrast, can sense a mismatch between a person’s current state and the immediate demands of a task, and then make correspondingly appropriate suggestions or responses. Sometimes the right move is a brief breathing exercise before a presentation, a gentle prompt to outline the first step of a daunting project, or a reminder to ignore a low-value distraction.

This is essentially a cybernetic loop: the system senses a user’s state, compares it to the task, and offers adaptive feedback. Where earlier cybernetics focused on stabilizing machines, affective computing applies the same principle to stabilizing human attention and confidence.
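To make the loop concrete, here is a minimal sketch in Python of the sense, compare, respond cycle described above. Everything in it is an assumption made for illustration: the `UserState` and `Task` types, the 0.0 to 1.0 scales, the thresholds, and the `suggest` function are hypothetical, and a real system would need a far more careful way of estimating a person's state.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class UserState:
    # Hypothetical signals on a 0.0-1.0 scale. How they would be
    # estimated in practice is outside the scope of this sketch.
    stress: float
    focus: float


@dataclass
class Task:
    name: str
    demand: float  # hypothetical 0.0-1.0 estimate of the focus the task needs


def suggest(state: UserState, task: Task, tolerance: float = 0.2) -> Optional[str]:
    """Compare the user's state to the task and return a suggestion, or None."""
    # Illustrative threshold: high stress is addressed before anything else.
    if state.stress > 0.7:
        return "Try a brief breathing exercise before starting."
    # Mismatch: the task demands more focus than the user currently has.
    if task.demand - state.focus > tolerance:
        return f"Outline just the first step of '{task.name}'."
    # No meaningful mismatch: the restrained choice is to stay out of the way.
    return None


if __name__ == "__main__":
    print(suggest(UserState(stress=0.8, focus=0.5), Task("presentation", 0.6)))
    print(suggest(UserState(stress=0.2, focus=0.3), Task("project plan", 0.9)))
    print(suggest(UserState(stress=0.2, focus=0.8), Task("email", 0.5)))
```

The detail worth noticing is the final `return None`: when state and task already match, the sketch deliberately offers nothing, which is the restraint a supporting role implies.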

Designing this kind of help requires restraint, because with intimate interactions comes responsibility. These systems sit close to attention, stress, and confidence: the levers that shape how people feel about themselves and their abilities. The risks are real: over-intervention that nudges people into dependency, product choices that turn support into covert advertising, and miscalibrated prompts that amplify anxiety rather than easing it. Recent conversations about “AI psychosis” underscore how quickly guidance can become intrusive, even abusive, when design is mismanaged.

The remedy here is humanistic design. People are skilled agents who sometimes want to be helped, and applications leveraging AI should treat them as such. In general, students want to learn, professionals want to improve, and humans across the board want to make progress. Equipping them with a powerful new tool that helps them achieve their objectives is, so far, one of AI’s highest callings.

Importantly, this augmentation scales across the skill curve. Beginners benefit from structure that reduces intimidation, shame, and judgment. Intermediates build and sustain momentum as responsibilities grow. Experts enter flow and avoid small errors that would otherwise compound under time pressure. In each case, the objective is the same: preserve judgment and agency while removing some of the friction that makes important work harder than it needs to be. When done well, the floor rises and the ceiling extends.

This approach changes how success should be measured. Model benchmarks and infrastructure metrics are not the central focus here. The question is whether people are better equipped to do what matters to them.

If software can consistently offer this kind of help, the implications are wide-reaching. Schools can extend the benefits of individualized attention to complement teacher expertise, so students learn more effectively. Professionals can reduce burnout and improve performance, boosting productivity. Individuals can develop better, more durable habits in pursuit of improving their wellbeing and their lives. Ultimately, AI can help create and maintain conditions in which the human experience improves, rather than fomenting fear and anxiety about people being replaced.

There is a cultural benefit as well. Much of the anxiety around automation flows from a zero-sum view of capability: either the machine does the job or the person does. Affective systems offer a different template. They put the machine in a supporting role, close enough to be useful, unobtrusive enough to be welcome. That arrangement is legible to people because it mirrors the best of human help: an attentive teacher, a thoughtful colleague, or a coach who knows when not to intervene.

We are, of course, still early. AI will continue to evolve (and improve), the craft of designing for human flourishing will evolve, and not every product will earn a place in an individual’s daily routine. But the direction is clear: one of the meaningful frontiers for AI is the quiet turn toward systems that understand something about human state and offer trustworthy help.

Humanistic artificial intelligence has the potential to be a computing paradigm that expands individual human capacity at scale, for everyone. Therein lies a noble pursuit.

Author

Sameer Yami, Founder of Augment Me