In 2025, “responsible AI” is everywhere, but its meaning is often vague. For sectors like healthcare, where decisions impact lives, relationships, and legacies, responsibility must be more than a buzzword. It must be operational.

At London Automation, we build AI tools that support healthcare fundraising teams, some of the most human-centered professionals in the nonprofit world. Our work offers a close-up view of what it means to design AI that collaborates with people, earns their trust, and amplifies their impact.

AI in Healthcare Fundraising: A High-Stakes Human Context

Hospitals and health systems rely on philanthropy for far more than people realize. From pediatric cancer centers to mobile health units in rural communities, donor dollars often fund the difference between basic care and exceptional care.

These teams are small and stretched thin. They manage grateful patient interactions, estate planning conversations, and major gift cultivation, all while juggling compliance, CRM upkeep, and community engagement.

In this deeply personal environment, the very idea of AI can feel uncomfortable.

That discomfort is valid.

Healthcare fundraising is grounded in trust, vulnerability, timing, and relationships. The idea that an algorithm could support this work feels counterintuitive. That’s where responsible AI comes in.

When Collaboration Begins with Listening

In our experience, the most successful AI implementations don’t start with a pitch deck. They start with empathy.

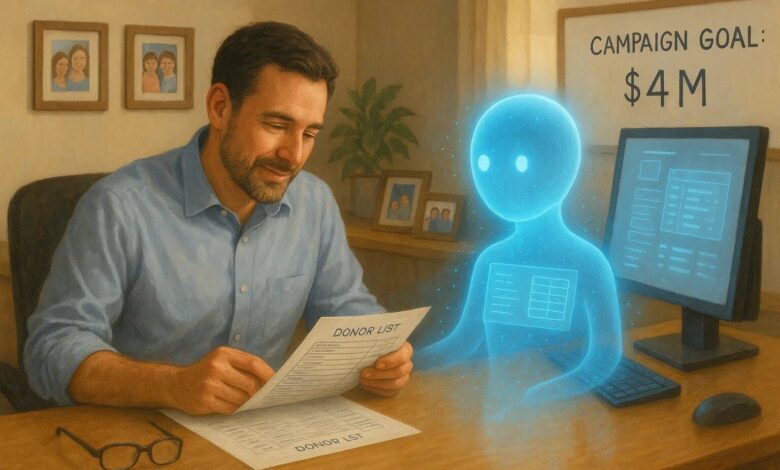

Healthcare fundraisers aren’t looking for sci-fi solutions. They’re asking for time – time to write personalized letters instead of pulling spreadsheets. Time to meet a donor face-to-face instead of chasing down mailing lists. Time to steward, connect, and think.

The most appreciated features aren’t flashy. They’re quiet forms of relief:

- Automated obituary scanning

- Address verification and donor record cleanup

- Nightly updates to patient encounter data

- Wealth segmentation and prioritization based on dozens of data points

AI handles these time-consuming tasks quietly and accurately, freeing fundraisers to focus on what they do best: building relationships.

In short, responsible AI collaboration does not start with adding functionality, but with subtracting friction.

GenAI Beyond Marketing: Operational Use Cases That Matter

Most GenAI conversations still focus on marketing: blog copy, social posts, chatbots. But some of the most impactful applications are happening far from the marketing team.

In healthcare fundraising, we’ve seen major impact from GenAI-powered tools that:

- Generate donor briefings by summarizing financial, clinical, and engagement history into actionable insights

- Draft call notes and proposal follow-ups for review

- Segment mailing lists based on custom logic and gift potential

- Recommend engagement tactics based on predicted donor affinity

These tools connect fundraising strategy to execution. They reduce the mental load and close the loop between data and decisions.

These GenAI tools aren’t here to replace human instincts. They’re decision aids: thoughtful, contextual, and overrideable. For fundraisers asked to do more with less, that kind of support is essential.

Redefining Responsible AI in Sensitive Industries

In high-trust industries like healthcare, responsible AI must go further. It must meet the emotional, cultural, and operational needs of the people it serves.

That means:

- Transparency: Fundraisers want to know not just what an AI system is recommending, but why. “Black box” suggestions are unacceptable.

- Human-in-the-loop design: AI should flag a prospect for outreach instead of initiating it. Final judgment belongs to the human.

- Emotional intelligence: Context matters. A patient’s recent experience with the healthcare system should influence how and when they’re contacted.

- Data dignity: Donor information is more than metadata; it represents relationships. Responsible AI must handle that data with care, nuance, and compliance.

In our work, we focus on clear, auditable scoring frameworks. Fundraisers see the specific signals driving each recommendation, such as clinical encounters, giving history, and wealth patterns, along with the rationale behind it.
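
To make the idea of an auditable score concrete, here is a minimal Python sketch. It is an illustration only, not our production implementation: the `Signal` and `Recommendation` types, the signal names, the weights, and the `needs_human_review` status are all hypothetical and chosen for this example. It shows a score that is always returned alongside the reasons behind it, and a recommendation that is flagged for a person rather than acted on automatically.

```python
from dataclasses import dataclass


@dataclass
class Signal:
    name: str      # hypothetical signal name, e.g. "giving_history"
    value: float   # assumed to be normalized to the range 0..1
    weight: float  # illustrative weight, not a real model parameter
    reason: str    # plain-language rationale shown to the fundraiser


@dataclass
class Recommendation:
    prospect_id: str
    score: float
    rationale: list[str]
    # Assumption: the system only flags; a human decides on any outreach.
    status: str = "needs_human_review"


def score_prospect(prospect_id: str, signals: list[Signal]) -> Recommendation:
    """Weighted average of the signals, returned with its rationale."""
    total_weight = sum(s.weight for s in signals)
    if total_weight <= 0:
        raise ValueError("at least one signal with a positive weight is required")

    score = sum(s.value * s.weight for s in signals) / total_weight

    # List the strongest drivers first so the "why" is easy to read.
    ranked = sorted(signals, key=lambda s: s.value * s.weight, reverse=True)
    rationale = [
        f"{s.name}: {s.reason} "
        f"(contribution {s.value * s.weight / total_weight:.2f})"
        for s in ranked
    ]
    return Recommendation(prospect_id, round(score, 2), rationale)


if __name__ == "__main__":
    # Made-up example data for a single prospect.
    rec = score_prospect(
        "prospect-001",
        [
            Signal("clinical_encounters", 0.5, 2.0, "two visits in the past year"),
            Signal("giving_history", 1.0, 2.0, "gave in each of the last three years"),
            Signal("wealth_patterns", 0.5, 1.0, "moderate estimated capacity"),
        ],
    )
    print(rec.score, rec.status)
    for line in rec.rationale:
        print(" -", line)
```

A plain weighted average is used here because every contribution can be read off directly. A more sophisticated model could take its place, provided each recommendation still carries its reasons and still ends in a human decision.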

The goal isn’t just to be accurate. It’s to be trusted.

The Limits of Metrics and the Power of Trust

AI can optimize for response rates, giving likelihood, and donor lifetime value. In professions built on trust, however, no metric matters more than confidence.

Confidence decides whether someone uses a recommendation. Whether they’ll trust it in a moment of uncertainty. Whether they’ll advocate for it to their peers.

Confidence isn’t earned through accuracy alone. It takes clarity. It takes consistency. It takes care. That’s why trust-building is a product discipline. It shapes design, training, and documentation.

In AI systems deployed in frontline environments, whether healthcare, education, or government, trust is not a ‘nice to have.’ It’s the product.

Human-Centered Design Is Not Optional

As AI becomes more integrated into knowledge work, its biggest challenge won’t be technological. It will be relational.

In healthcare philanthropy, even the best models fail if they disrupt workflows, confuse users, or ignore context. When AI respects workflows, aligns with systems, and comes with good training, it becomes a force multiplier.

Fundraisers prioritize smarter. Outreach hits home. Time goes where it matters most.

And critically, users feel supported, not replaced.

Designing AI That Deserves to Be There

In one recent customer conversation, a hospital director of philanthropy said:

“We’re a small hospital, but we’re a top 100 hospital… We’re great on the healthcare front, but not so great on the AI front. It’s almost taboo to be using AI. My current hospital president is open to technology, which is huge, because that’s where we’re going.”

The tension between tradition and transformation is where responsible AI has the most to offer.

In our experience, the highest compliment a user can give is not “This AI is impressive.” It’s “This AI belongs here.”

Responsible AI, Revisited

Responsible AI isn’t just about compliance. It’s about building systems that make someone’s work more meaningful, their time more protected, and their outcomes more human.

If a system can do that, and it’s built with rigor, empathy, and humility, then AI isn’t just accepted. It’s welcomed.