A new, autonomous era of AI is gaining traction. Agentic AI is set to transform how people interact with technology, marking another major step forward for the industry. Unlike generative AI, agentic systems are proactive, meaning they can work through complex problems and make decisions independently. While this has the potential to be hugely beneficial for organisations, as always, there is nothing to stop threat actors from exploiting the same capabilities.

Take ransomware groups, for example. Some are structured so that, after gaining initial access to a network, they study how the infrastructure works, where its most significant vulnerabilities lie, and which assets are most valuable. This is where agentic AI could be especially useful: an agent able to navigate networks autonomously, reliably, and quietly would massively increase a group’s effectiveness. Accelerating repetitive tasks such as reconnaissance, analysis, and target identification, both before and after an attack, could have catastrophic cyber security implications.

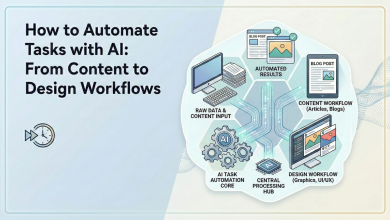

To say that agentic AI is a step up from the generative tools in use today would be a major understatement. Think of it this way: if GenAI is a business consultant that provides insights and guidance, agentic AI is an implementation expert that takes action and drives outcomes. Rather than simply producing outputs, AI agents can follow instructions, adapt to real-time information, and make their own context-based decisions. This includes determining the best route to a goal, selecting the most effective method, and executing the task, often with minimal human involvement.

Filling the resource and capability gap

Applied to the delivery and execution of ransomware, agentic AI has the potential to transform how threat actors operate. At the moment, ransomware attacks work more like a supply chain. The first step is usually outsourced to an access broker, whose role is to breach systems and sell the resulting credentials on the black market. From there, the ransomware group steps in to move laterally through the network, locate valuable data, and deploy the payload.

This process is complicated by the fact that every network environment is different. Some have strong endpoint protection in place, others don’t. Some are well segmented, many are not. In many cases, overcoming these hurdles (bypassing antivirus tools, identifying critical systems, and avoiding detection) takes a huge amount of manual effort, skill, and experience. So when an organisation’s security is effective, threat actors are more likely to give up and move on to a target they hope will take less time and effort to compromise.

This is the capability and resource gap that agentic AI is likely to fill. By taking over the repetitive, manual steps involved in lateral movement and target identification, AI agents can offer a faster, cheaper, and always-on alternative to deploying human operators at every stage of the process. Whether scanning for open ports and known vulnerabilities or evaluating which systems hold the most valuable data, an AI agent can methodically work its way through an environment with little or no human involvement.

For ransomware-as-a-service (RaaS) operators, the appeal is obvious. Many of their customers lack the technical know-how to conduct complex attacks. By embedding AI agents into their toolkits, operators could offer a far more streamlined experience, one that increases reliability while lowering the barrier to entry. Ironically, this mirrors the mindset of organisations looking to use agentic AI legitimately: it’s all about transformational efficiency.

How immediate are the risks?

One of the big questions this raises is when security teams will face this scenario for real. While there’s growing evidence of generative AI being used to create and refine malware, making it more effective and its authors more productive, agentic AI is still emerging.

Over the next few years, however, organisations should expect steady growth in AI-enhanced intrusion workflows. Enumeration, privilege escalation, and lateral movement are all areas where agentic tools could give attackers a performance advantage. The most likely short-term impact is a reduction in the time it takes attackers to go from initial access to ransomware deployment, which in turn shortens the window defenders have to identify and contain the threat.

Looking further ahead, agentic AI could become a powerful resource for attackers, enabling them to automate decision-making and shorten the path from initial access to impact, among other capabilities, while lowering the technical barrier to entry. There’s no need to panic, but organisations should keep a close eye on how agentic AI develops. The same capabilities being explored by attackers will soon be leveraged by defenders, particularly in areas such as Managed Detection and Response, threat hunting, and automated response. Today, it’s a race to see who gets there first, and those who move early will be best placed to stay ahead.