By late 2025, more than a thousand new AI tools were launched in a single month—each claiming to be the fastest, smartest, or most creative yet.

For many professionals, that endless innovation feels less like progress and more like paralysis.

We’ve automated content creation, yet decision-making has never been slower.

You click another “Top 50 AI Tools” list hoping to make sense of it all.

Ten browser tabs later, you’ve learned little beyond the fact that everyone seems to recommend the same products.

What you gather isn’t knowledge but noise.

We aren’t drowning in AI innovation; we’re drowning in AI opinions.

The problem isn’t too much information—it’s not knowing what to trust.

Ironically, the very thing designed to help—AI reviews—often deepens the confusion.

This article is for those caught in that cycle: creators, analysts, marketers, and founders who’ve bookmarked more tools than they’ve ever used.

You’ll see why many reviews mislead, how to recognize credible ones, and how to turn those insights into clear, confident choices.

For a deeper perspective, explore this detailed guide on fighting information overload.

Why We Keep Falling for Shallow AI Reviews

If we already know most AI reviews are shallow or biased, why do we keep clicking them?

Because they look credible.

A clean design, a five-star chart, a confident tone—those cues fool our brains into assuming authority.

When information floods our feeds, we rely on surface polish instead of substance.

It isn’t laziness; it’s survival.

Affiliate sites know this.

They rarely show test data or failed results, yet they rank high because search algorithms reward quantity over quality.

A 2024 SEMrush study found that over 80 percent of AI review sites used affiliate links without disclosure.

The system is optimized for traffic, not truth.

We end up confusing visibility with credibility.

A tool mentioned everywhere feels “best,” even when that repetition is just an echo.

Professionals outsource judgment to “trusted reviewers,” and the cycle repeats—creators referencing creators, lists quoting lists, each recycling the same half-truths.

It’s the social-media trap all over again: most-liked does not mean most reliable.

The first step to escaping that loop is understanding what genuine credibility looks like.

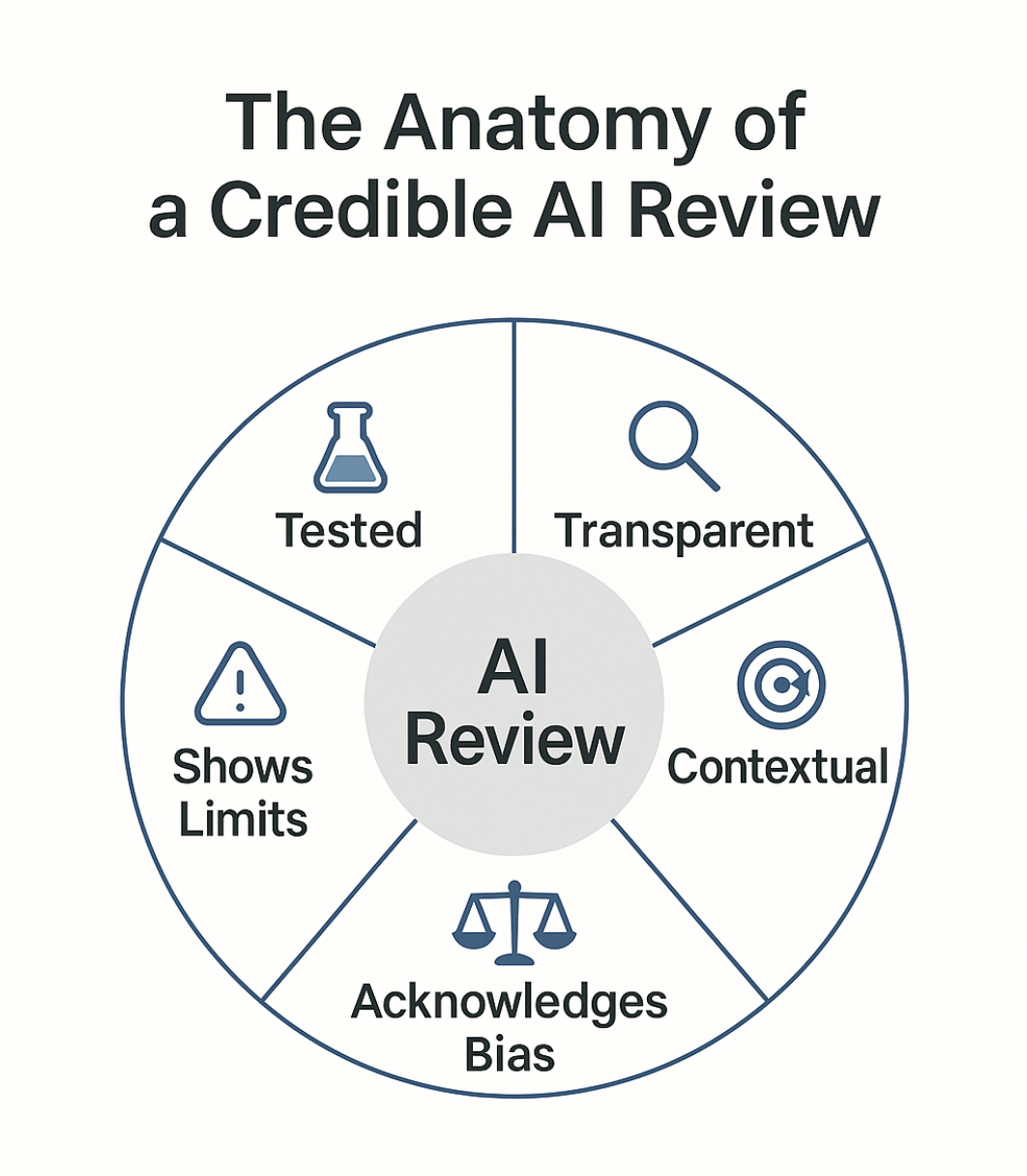

How to Identify In-Depth Reviews of AI Products You Can Trust

A credible review doesn’t shout; it shows.

It trades hype for evidence and replaces adjectives with transparency.

You can find examples of truly in-depth reviews of AI products that demonstrate these principles in action.

1. The Author Tests, Not Just Asserts

Real reviewers use the tool in genuine workflows, not one-click demos.

If you don’t see prompts, sample outputs, or comparisons, you’re reading marketing, not analysis.

Notice mentions of friction—what failed, what surprised, what couldn’t be replicated.

Honesty is the clearest signal of truth.

2. Context Is Clear

No tool is “the best” in isolation.

A review that defines its use case—for long-form writing, for small teams, under $20/month—instantly gains credibility.

Vague universality usually means the writer hasn’t tested deeply.

3. Methodology Is Transparent

Even a brief explanation of how tests were run shows respect for the reader.

Was it one trial or many? What metrics mattered?

It’s scientific thinking for everyday readers—simple, structured, repeatable.

4. Bias Is Acknowledged

Every writer has bias.

Good reviewers admit it, disclose partnerships, and separate preference from proof.

Pretending to be neutral while chasing clicks kills trust.

5. Depth Includes Limits

Strong reviews tell you not only what works but where the tool falls short—token limits, weak integrations, slow exports.

You leave informed, not sold.

Truly in-depth reviews don’t promise universality; they deliver understanding.

Shallow reviews blur details; deep ones reveal structure.

That clarity is the real productivity boost no app can automate.
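If you like to keep notes in code, the five signals above can be written down as a small checklist. What follows is only a sketch: the field names, the `ReviewCheck` class, and the idea of counting signals are illustrative assumptions, not an established scoring standard.

```python
from dataclasses import dataclass

# Hypothetical signal names, one per criterion in the list above.
SIGNALS = (
    "shows_tests",      # 1. The author tests, not just asserts
    "states_context",   # 2. Context is clear
    "explains_method",  # 3. Methodology is transparent
    "discloses_bias",   # 4. Bias is acknowledged
    "names_limits",     # 5. Depth includes limits
)


@dataclass
class ReviewCheck:
    """Your own yes/no notes on one review (an illustrative structure)."""
    title: str
    shows_tests: bool = False
    states_context: bool = False
    explains_method: bool = False
    discloses_bias: bool = False
    names_limits: bool = False


def credibility_score(check: ReviewCheck) -> int:
    """Count how many of the five signals the review shows."""
    return sum(getattr(check, name) for name in SIGNALS)


def missing_signals(check: ReviewCheck) -> list[str]:
    """List the signals the review does not show, in checklist order."""
    return [name for name in SIGNALS if not getattr(check, name)]


# Example with made-up notes on a made-up review.
review = ReviewCheck("Top 50 AI Tools", states_context=True, discloses_bias=True)
print(credibility_score(review))  # 2
print(missing_signals(review))    # ['shows_tests', 'explains_method', 'names_limits']
```

The count is a prompt for your own judgment, not a verdict: a review that shows all five signals can still be wrong, and the list of missing signals is usually more useful than the number.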

A Four-Step Method to Filter the Noise

You don’t need another massive checklist.

You need a habit—a simple system for reading smarter.

Step 1 — Define What You Actually Need

Before opening another “Best AI Tools” article, pause.

Ask yourself: What problem am I solving? Productivity? Research? Design?

Clear goals remove most of the irrelevant noise instantly.

Step 2 — Check the Source, Not the Summary

Who wrote it? Are they a practitioner or a copywriter?

Do they describe testing conditions or simply “recommend”?

Common trap: mistaking posting frequency for expertise.

Step 3 — Scan for Evidence, Not Enthusiasm

Ignore adjectives; hunt for artifacts—screenshots, metrics, benchmarks.

Whenever you feel convinced, stop and ask: “Where’s the proof?”

Step 4 — Cross-Reference, Then Test Once Yourself

Compare two or three solid reviews, note where they overlap, then run your own five-minute test.

Insight without validation is just opinion in a lab coat.

Following this method won’t silence the internet, but it will quiet your mind.

Reviews start working for you instead of against you.
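The cross-referencing in Step 4 can also be sketched in a few lines. This assumes you jot down each review's main claims as short phrases; the function name, the review names, and the claims below are all hypothetical.

```python
from collections import Counter


def shared_claims(reviews: dict[str, set[str]], min_sources: int = 2) -> list[str]:
    """Return claims that appear in at least `min_sources` reviews, sorted by name."""
    counts = Counter(claim for claims in reviews.values() for claim in claims)
    return sorted(claim for claim, n in counts.items() if n >= min_sources)


# Made-up notes from three made-up reviews of the same tool.
notes = {
    "review_a": {"fast drafts", "weak on long documents", "slow exports"},
    "review_b": {"fast drafts", "weak on long documents"},
    "review_c": {"fast drafts", "good team features"},
}

print(shared_claims(notes))
# ['fast drafts', 'weak on long documents']
```

Overlap only tells you what to check in your own five-minute test. If the reviews are echoing one another, agreement proves little, so the claims they share are where your test should start, not where it ends.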

From Reviews to Real Decisions

A framework only matters if it saves you time in reality.

Example 1 — The Marketing Team That Couldn’t Choose

Lena’s content team tested six AI writing tools over two weeks.

Every review called its pick “best for marketers,” yet the outputs all sounded alike.

By focusing only on reviewers who shared prompts, data, and context, they narrowed to two strong candidates.

After an hour of testing, one tool fit their tone perfectly.

They turned two weeks of confusion into a single afternoon of clarity.

Example 2 — The Researcher Who Trusted Process, Not Hype

Arun, a graduate researcher, needed an AI summarizer for academic papers.

He followed the four-step filter and found one review that documented real tests and limitations.

When the app failed on PDFs over 10 MB, he wasn’t frustrated—the reviewer had warned him.

That small honesty restored his trust in his own judgment.

Good reviews don’t just save time; they teach you how to think.

Once you see reviews as learning tools, you move from chasing opinions to cultivating discernment.

The Future of Research-Driven AI Platforms

Manual filtering works—until it doesn’t.

With thousands of tools launching every quarter, credibility must scale.

Tomorrow’s AI platforms will compete not on speed but on research transparency.

Imagine if every output came with citations, testing logs, and reasoning trails.

That’s not utopian—it’s responsible design.

Some ecosystems already test this idea through DeepResearch frameworks, letting users trace how each answer forms.

Transparency doesn’t just reduce errors; it rebuilds trust.

For professionals drowning in content, an AI that explains why it answered the way it did is as valuable as one that answers faster.

When you can see how information was built, you stop consuming it passively and start collaborating with it.

The promise of AI was never just automation—it was augmented understanding.

That’s what credible, research-driven platforms are finally delivering.

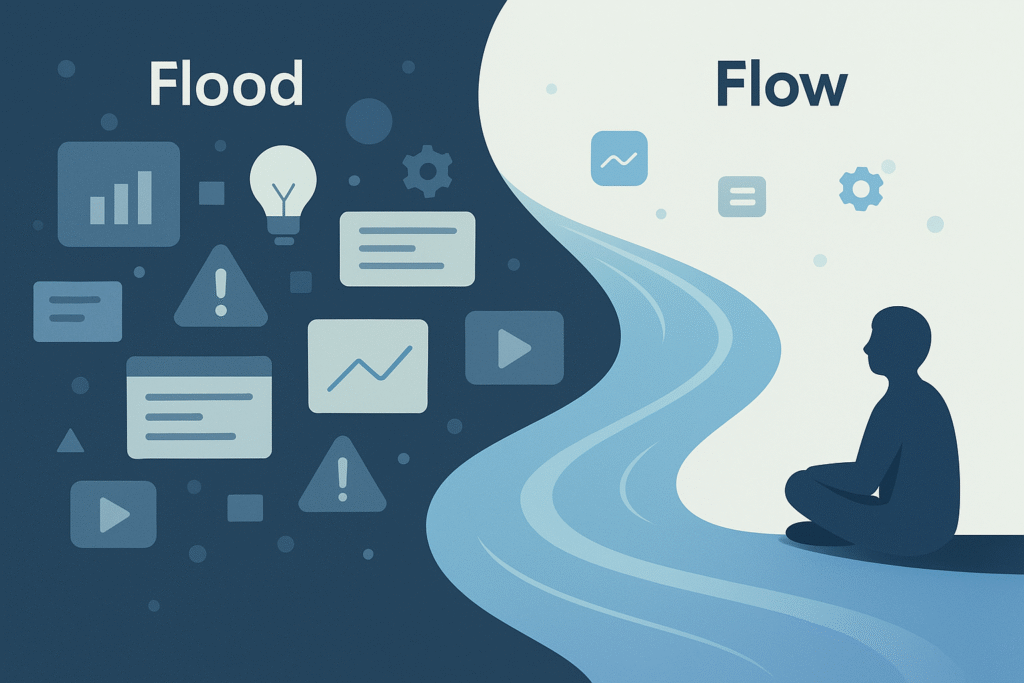

The Mindset Shift: From Consuming to Curating

Many think the way out of information overload is to read less.

In truth, it’s about reading with intention.

You can’t control tomorrow’s flood of new tools or reviews, but you can control what earns your attention.

The best content feels slower—it explains instead of persuading and admits what it doesn’t know.

That’s the shift: from chasing the newest to understanding the truest, from being impressed by confidence to being convinced by evidence.

AI will only get louder.

But clarity is a choice, not a feature—the quiet discipline of choosing your inputs as carefully as your outputs.

So next time you see another “Top 50 AI Tools” post, don’t just scroll through it.

Pause and ask:

Who wrote this?

What did they test?

Can I verify it myself?

That’s how information stops being a flood and becomes a flow: a current you can navigate, not drown in.