AI is having its breakthrough decade. The generative AI boom has driven investment, innovation, and a surge of new use cases across industries, from logistics to healthcare to creative tools. But beneath the excitement is a less-discussed challenge: the physical infrastructure needed to support AI’s exponential growth in both scale and speed.

AI is accelerating a shift in computing that demands advances across the hardware stack. Executives must now focus on reengineering chips, servers, interconnects, and power systems to support scalable, energy-efficient infrastructure.

The Growth of AI Was Always Inevitable

In just a few years, AI has moved from experimentation to integration. It now powers applications across industries, including search, diagnostics, robotics, and logistics. The number of AI-enabled devices, from smart assistants and wearables to autonomous systems and industrial equipment, is projected to grow rapidly through the end of the decade.

This expansion is driven by ever-larger and more complex models. Training large language models (LLMs), in particular, requires compute resources that are growing exponentially. Some projections estimate that future models may require up to a million times more compute than today’s most advanced systems.

AI Is Driving a Surge in Energy Demand

As AI models grow larger and more complex, their energy requirements are increasing rapidly. Nowhere is this more visible than in data centers, which power the majority of AI workloads. Electricity usage tied to AI workloads in cloud infrastructure is projected to increase by at least a factor of seven in the coming years, and data center power demand is projected to increase 165% by 2030. This growth raises concerns about cost, sustainability and grid capacity. Can our current infrastructure support such a rapid ramp-up?
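To put the 165% figure in perspective, it helps to convert it into a multiplier and an implied yearly growth rate. The short Python sketch below does that arithmetic. The six-year window is an assumption made purely for illustration, since the baseline year behind the projection is not stated here, and the function names are hypothetical.

```python
def growth_multiplier(percent_increase: float) -> float:
    """Convert a percentage increase into a multiplier on the baseline."""
    return 1.0 + percent_increase / 100.0


def implied_annual_growth(multiplier: float, years: int) -> float:
    """Compound annual growth rate that yields `multiplier` after `years`."""
    return multiplier ** (1.0 / years) - 1.0


# A 165% increase means demand ends at 2.65 times the baseline, not 1.65 times.
multiplier = growth_multiplier(165.0)

# Assumption: a six-year window. The baseline year is not given in the text.
ASSUMED_YEARS = 6
rate = implied_annual_growth(multiplier, ASSUMED_YEARS)

print(f"Demand multiplier: {multiplier:.2f}x")
print(f"Implied growth over {ASSUMED_YEARS} years: {rate:.1%} per year")
```

A shorter window would imply a steeper annual rate, which is why the baseline year matters when comparing projections.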

Interconnects, the physical and electrical pathways that transfer data between chips, components or systems, are one of the bottlenecks at the heart of this energy problem. Moving vast amounts of data between chips inside servers and across racks consumes enormous amounts of energy. Traditional copper interconnects, which rely on electrical signals and are limited by factors like heat generation and signal degradation over distance, are fast becoming inadequate in terms of speed and energy efficiency.
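The link between data movement and power can be made concrete with one relationship: the power an interconnect draws is its energy per bit multiplied by the number of bits it moves each second. The sketch below illustrates this in Python. The function name and both input values are hypothetical placeholders chosen for round numbers, not measurements of any copper or optical product.

```python
def interconnect_power_watts(energy_pj_per_bit: float, bandwidth_tbps: float) -> float:
    """Power drawn by a link: energy per bit times bits moved per second."""
    joules_per_bit = energy_pj_per_bit * 1e-12   # picojoules to joules
    bits_per_second = bandwidth_tbps * 1e12      # terabits/s to bits/s
    return joules_per_bit * bits_per_second


# Placeholder inputs for illustration only (not vendor or measured figures).
EXAMPLE_ENERGY_PJ_PER_BIT = 10.0
EXAMPLE_BANDWIDTH_TBPS = 50.0

power = interconnect_power_watts(EXAMPLE_ENERGY_PJ_PER_BIT, EXAMPLE_BANDWIDTH_TBPS)
print(f"Link power: {power:.0f} W")

# Halving the energy per bit halves the power at the same bandwidth.
halved = interconnect_power_watts(EXAMPLE_ENERGY_PJ_PER_BIT / 2, EXAMPLE_BANDWIDTH_TBPS)
print(f"With half the energy per bit: {halved:.0f} W")
```

Because bandwidth needs keep rising with model size, energy per bit is the lever that keeps interconnect power from rising in step.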

A Holistic Look at AI Infrastructure

To address the energy and performance challenges of AI, it’s important to step back and consider the full stack of AI infrastructure.

At the top layer are AI applications: the smart interfaces and assistants that people interact with daily. Just beneath are the models that power those experiences: large language models, domain-specific models, and other sophisticated architectures.

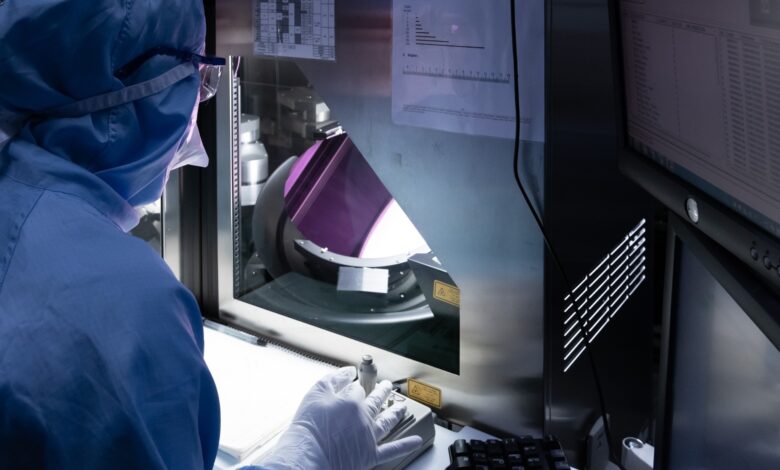

But it’s the hardware, that bottom layer of our infrastructure, that is under the most pressure to evolve. This includes not only compute cores like GPUs and ASICs, but also the substrates, packaging and interconnect technologies that bind them together. As model size and user demand increase, so does the need for innovations that can deliver high bandwidth, low latency and energy-efficient data movement.

In the Cloud… Optical Interconnects

Cloud data centers are where many of today’s AI models are trained and deployed. These facilities require ultra-fast interconnects to manage massive data transfers between processors, memory and storage. In response, the industry is rapidly moving toward photonic solutions, specifically silicon photonics, that can transmit data with higher efficiency and lower power than legacy options.

One emerging standard in this space is co-packaged optics (CPO). Unlike traditional pluggable optics, CPO integrates optical components directly alongside compute and switch chips, shortening the distance data must travel and reducing energy loss. With major players announcing CPO adoption in 2024, this shift signals a new phase in how AI infrastructure will be built.

New Architectures and New Solutions Emerge

Silicon photonics and CPO represent a rethink of traditional interconnect architecture. These systems can handle enormous amounts of data (imagine the equivalent of streaming hundreds of ultra-HD movies at once) and are expected to double that capacity in the near future. As AI workloads scale, we need these kinds of innovations to support the demand without a corresponding spike in power consumption.

But integrating optics with compute isn’t simple. It requires specially designed materials, such as engineered substrates, and precise co-design among experts in chip design, optics and systems engineering who have traditionally operated in silos. The result is a convergence of semiconductor, optical and systems engineering, all aimed at achieving the next leap in performance-per-watt.

AI Inference Inches Closer to the Edge

While cloud infrastructure remains crucial, AI inference is increasingly moving to the edge, closer to the user and the data source. Whether it is a wearable health tracker analyzing patterns in real time or a factory floor system making split-second decisions, edge AI devices need to be smart, but they also need to be small and energy efficient.

Here the challenge flips: instead of powering and cooling energy-hungry servers in massive buildings, designers must deliver compute performance in devices that often run on battery power. This requires highly specialized, low-power substrates and hardware optimized for performance-per-watt.

Edge AI is not replacing the cloud, but complementing it—offloading inference to reduce latency and bandwidth demands on central data centers. As AI becomes pervasive, the infrastructure must become distributed.

Addressing AI’s Energy Appetite Is Key

Whether in the cloud or at the edge, AI’s energy appetite is unsustainable without breakthroughs in hardware design. That’s why reducing energy consumption is critical, not just for operational cost but also for environmental impact and long-term scalability.

Innovation in engineered substrates, silicon photonics and co-packaged optics is enabling a new generation of AI infrastructure that balances performance with energy responsibility. These innovations represent a future where we don’t have to choose between the two. And while these technologies are often invisible to the end user, they truly are fundamental to the AI experience.

Engineering a Hidden Hero

When we talk about AI, the conversation often focuses on algorithms and applications. But a real breakthrough is happening far beneath the surface of your ChatGPT interface, within the substrates, photonics and interconnects powering the entire ecosystem.

To build a future where AI is both powerful and sustainable, we must look beyond the software and into the silicon. It’s here, in the hidden layers of infrastructure, that the foundations of truly scalable AI are being quietly engineered.