AI is one of the most transformative technologies shaping the world around us, including how enterprises operate, compete, and innovate.

Enterprises Are No Longer Sitting on the Sidelines

Last year was marked by experimentation and proofs of concept, but organizations are now moving toward real-world implementation. Most are actively exploring how AI can drive productivity, enhance operational efficiency, and unlock new products and services.

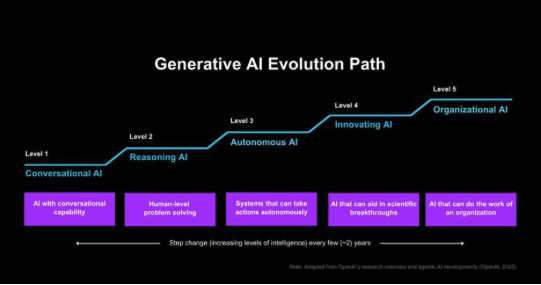

AI is moving faster than most enterprises can absorb. Yet, despite the headlines, we’re still in the early stages of AI maturity. As the chart illustrates, we’ve moved past the Conversational AI phase, when interacting with tools like ChatGPT became mainstream, and are now entering the Reasoning phase, in which AI demonstrates cognitive capabilities and logical reasoning. With the rise of AI agents, we’re also beginning to see the early emergence of Autonomous AI, where agents can execute complex, long-running workflows with minimal human input.

Every year or two brings a paradigm shift, and this trend shows no signs of slowing. In the near future, AI could extend into creative thinking and even contribute meaningfully to R&D. The chart does not depict a final destination; it captures an ongoing evolution that will continue to unfold in the coming years.

AI will become more capable, more autonomous, and more integrated into the fabric of our daily lives, including the enterprise. Here are the key trends shaping AI’s evolution from experimentation to enterprise-scale deployment.

AI Models Are Getting Smarter

AI models are the “brains” behind the AI systems we use today, and these brains are getting significantly smarter, faster, and multi-modal.

Reasoning Models

Reasoning capability has been the biggest innovation in models in recent months, and five of the top 10 models are now reasoning models. A reasoning model is a type of AI model designed to perform multi-step logical thinking, inference, or problem-solving that goes beyond simple pattern matching or recall.

Just a couple of years ago, we had to provide AI systems with carefully structured questions and lots of context to get decent results. Today, models are reasoning on their own, generating structured outputs, and performing increasingly complex tasks with very little input.

Note, however, that enterprise inference costs will rise with reasoning models, because they need more compute, memory, and time to work through multi-step logical processes and longer context windows.
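To make the cost point concrete, here is a back-of-the-envelope estimator. It is a minimal sketch under stated assumptions: pricing is per token, and a reasoning model’s internal “thinking” tokens are billed at the output-token rate (a common billing model, but treat it as an assumption). All prices and token counts are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Usage:
    input_tokens: int
    output_tokens: int
    reasoning_tokens: int = 0  # internal "thinking" tokens; 0 for non-reasoning models


def request_cost(usage: Usage, usd_per_1k_input: float, usd_per_1k_output: float) -> float:
    """Estimated USD cost of one request.

    Assumption: reasoning tokens are billed at the output-token rate.
    """
    billed_output = usage.output_tokens + usage.reasoning_tokens
    return (usage.input_tokens / 1000) * usd_per_1k_input + (billed_output / 1000) * usd_per_1k_output


# Hypothetical prices and token counts, for illustration only.
PRICE_IN, PRICE_OUT = 0.001, 0.004
standard = request_cost(Usage(input_tokens=2000, output_tokens=500), PRICE_IN, PRICE_OUT)
reasoning = request_cost(
    Usage(input_tokens=2000, output_tokens=500, reasoning_tokens=4000), PRICE_IN, PRICE_OUT
)
print(f"standard: ${standard:.4f}, reasoning: ${reasoning:.4f}")
```

Under these made-up numbers, the same visible answer costs five times as much once 4,000 reasoning tokens are added ($0.004 versus $0.020), before accounting for the longer context windows reasoning workloads often use.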

Regardless, the scope of how and where we’ll use models will continue to expand.

Multi-modal Models

LLMs are increasingly multi-modal with the ability to process and generate not just text, but also images, audio, and video. This can unlock an entirely new set of enterprise use cases, from document analysis to video summarization to voice-based interfaces.

Open-Source Models

While commercial models still lead in performance, open-source models are catching up quickly.

The release of DeepSeek’s open-source model was a pivotal moment: it showed that models are becoming a commodity and that differentiation will come from the application layer and the data you own.

Open-source and commercial models are likely to become comparable in performance. Today, the major hyperscalers offer most of the leading commercial and open-source models to any enterprise. The real differentiation therefore lies in your data and the applications you build on top of these models.

Multiple, Small Models

DeepSeek also brought attention to techniques like model distillation, which lets anyone take an existing large model and use it to train a smaller, specialized model. In the future, rather than relying on one massive model to do everything, enterprises will build smaller, fit-for-purpose models. Imagine a specialized LLM that deeply understands your domain (e.g., a Telecom Network Operations Model or a Payments Transaction Model) without requiring exhaustive context.
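Model distillation trains a smaller “student” model to reproduce the output distribution of a larger “teacher” model. The sketch below shows only the classic soft-target loss term: the KL divergence between temperature-softened teacher and student distributions, scaled by T². It is one common formulation, chosen here for illustration; it is not necessarily how DeepSeek built its distilled models, and a real training loop would also compute gradients and usually add a loss on ground-truth labels. The logits are hypothetical.

```python
import math
from typing import List


def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Temperature-scaled softmax; a higher temperature gives a softer distribution."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits: List[float], student_logits: List[float],
                      temperature: float = 2.0) -> float:
    """Soft-target loss: T^2 * KL(teacher || student) on softened distributions."""
    p = softmax(teacher_logits, temperature)  # teacher's soft targets
    q = softmax(student_logits, temperature)  # student's predictions
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return temperature ** 2 * kl


teacher = [4.0, 1.0, 0.5]        # hypothetical logits over three classes
close_student = [3.5, 1.2, 0.4]  # roughly mimics the teacher: low loss
far_student = [0.5, 1.0, 4.0]    # disagrees with the teacher: high loss
print(distillation_loss(teacher, close_student), distillation_loss(teacher, far_student))
```

Minimizing this term pushes the student toward the teacher’s full probability distribution, including how it ranks the “wrong” answers, which carries more information than hard labels alone.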

Multiple models will coexist in the enterprise to serve different sets of use cases. Enterprises will need an LLM router that sends each request to the appropriate model based on the complexity and requirements of the use case.
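A router can start as simple rules before graduating to a learned classifier. This is a minimal sketch, assuming keyword heuristics as the routing signal; the model names, keyword sets, and word-count threshold are all hypothetical stand-ins for whatever your platform exposes.

```python
import re
from dataclasses import dataclass
from typing import Dict, Set


@dataclass(frozen=True)
class Route:
    model: str
    reason: str


# Hypothetical model names; substitute the model IDs your platform exposes.
DOMAIN_MODELS: Dict[str, Set[str]] = {
    "payments-small": {"transaction", "chargeback", "settlement", "refund"},
    "telecom-netops-small": {"outage", "latency", "handover", "backhaul"},
}
REASONING_MODEL = "reasoning-large"
GENERAL_MODEL = "general-medium"
REASONING_CUES = {"why", "plan", "compare", "analyze", "diagnose"}
LONG_PROMPT_WORDS = 150  # hypothetical threshold for "complex" requests


def route(prompt: str) -> Route:
    """Pick a model with keyword heuristics (a stand-in for a learned classifier)."""
    words = re.findall(r"[a-z]+", prompt.lower())
    vocab = set(words)
    # 1. Complex or open-ended requests go to the (more expensive) reasoning model.
    if vocab & REASONING_CUES or len(words) > LONG_PROMPT_WORDS:
        return Route(REASONING_MODEL, "complex request")
    # 2. Narrow, domain-specific requests go to a small specialized model.
    for model, terms in DOMAIN_MODELS.items():
        if vocab & terms:
            return Route(model, "domain match")
    # 3. Everything else goes to a general-purpose model.
    return Route(GENERAL_MODEL, "default")


print(route("Check the chargeback status for transaction 8812").model)  # payments-small
```

Checking complexity first is a design choice: it sends hard questions to the strongest model even when they mention a domain term, while routine domain lookups go to the cheaper specialized model.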

AI Agents: From Copilot to Autopilot

The next big leap in AI is the rise of AI agents, autonomous systems that don’t just respond to prompts, but actively work on your behalf.

Think of an agent as your personal assistant. Unlike a human assistant, though, an AI agent can work 24/7, interface with digital systems instantly, and scale to help millions of people at once, all while learning and improving.

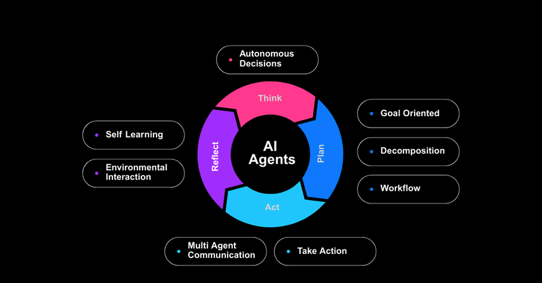

An AI agent can think, plan, act, and reflect. It can break goals into tasks, take action by calling APIs or browsing websites, and reflect and improve over time to achieve your goal.
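The plan, act, and reflect cycle can be sketched as a small control loop. This is a simplified illustration under stated assumptions: the planner is a fixed stub and reflection is a simple success check with retries, whereas a real agent would typically use LLM calls for both and feed observations back into the plan. The tool names and order data are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

Tool = Callable[[str], str]
Plan = List[Tuple[str, str]]  # ordered (tool_name, argument) steps


@dataclass
class Agent:
    tools: Dict[str, Tool]
    planner: Callable[[str], Plan]  # in a real agent, typically an LLM call
    max_attempts: int = 2
    log: List[str] = field(default_factory=list)

    def reflect(self, output: str) -> bool:
        """Judge whether a step succeeded (a real agent might ask an LLM to critique it)."""
        return bool(output) and not output.startswith("ERROR")

    def run(self, goal: str) -> List[str]:
        results: List[str] = []
        for tool_name, arg in self.planner(goal):          # plan: goal -> tasks
            for attempt in range(1, self.max_attempts + 1):
                output = self.tools[tool_name](arg)        # act: call a tool/API
                ok = self.reflect(output)                  # reflect: check the result
                self.log.append(f"{tool_name} attempt {attempt}: {'ok' if ok else 'retry'}")
                if ok:
                    results.append(output)
                    break
        return results


# Hypothetical tools and plan for illustration.
_calls = {"lookup": 0}


def lookup_order(order_id: str) -> str:
    _calls["lookup"] += 1
    # Simulate a transient failure on the first call.
    return "ERROR: timeout" if _calls["lookup"] == 1 else f"order {order_id}: shipped"


def draft_email(body: str) -> str:
    return f"Dear customer, {body}"


def fixed_planner(goal: str) -> Plan:
    return [("lookup", "A-42"), ("email", "your order has shipped.")]


agent = Agent(tools={"lookup": lookup_order, "email": draft_email}, planner=fixed_planner)
results = agent.run("Tell the customer where order A-42 is")
print(results)    # ['order A-42: shipped', 'Dear customer, your order has shipped.']
print(agent.log)  # ['lookup attempt 1: retry', 'lookup attempt 2: ok', 'email attempt 1: ok']
```

The log records every attempt, which is also the raw material for the observability and audit requirements discussed later under governance.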

Enterprises have started building and deploying AI agents to automate workflows, monitor systems, and even collaborate with other agents.

Enterprises have used workflow and robotic process automation (RPA) tools for years. These tools follow fixed rules and operate on concrete values, producing deterministic outcomes. AI agents, by contrast, can apply fuzzy rules and operate on text and images, which makes their outcomes non-deterministic.

AI agents can handle anything from simple to complex tasks. Some keep humans in the loop, while more advanced agents do more and more autonomously. Deployments range from a single agent running dynamic workflows to multiple agents collaborating with each other.

With the advent of new standards like the Agent2Agent (A2A) protocol, we will increasingly see a multi-agent ecosystem in which hundreds of agents collaborate across a complex network to achieve a common goal.

Building the Data Core

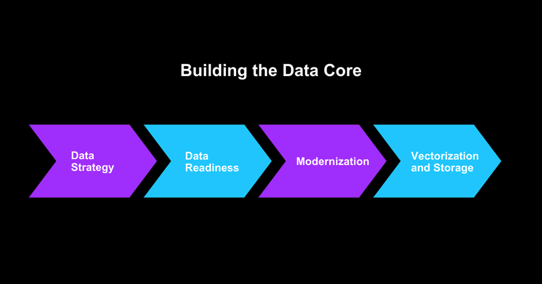

AI is only as powerful as the data behind it. To move beyond experiments, enterprises must modernize their data foundations. A strong data core is not just about storage or access; it’s about structuring data for AI readiness, governance, and scale. That means revisiting the overall data strategy.

Data Strategy

Until recently, enterprise data strategies focused heavily on structured data, yet according to Gartner, upward of 80% of enterprise data is unstructured. With GenAI, unstructured information such as emails, documents, PDFs, meeting notes, scratch pads, call transcripts, and images becomes usable and valuable.

AI is forcing enterprises to rethink their data strategy. They are redefining data governance, revisiting data architecture, and evaluating data quality.

Data Readiness

Organizations are embarking on initiatives to get their unstructured data ready for generative AI. They are creating an inventory of their unstructured data, adding metadata and labels, and ensuring data lineage and quality. Tools for unstructured data management are still maturing, which adds complexity and time.

Modernization

Typically, enterprises are building AI using cloud-based AI services and cloud-hosted models. To take advantage of this, enterprise data needs to move to the cloud. This is driving data platform modernization initiatives like migrating on-premises or legacy data platforms to modern platforms like Microsoft Fabric, Databricks, Snowflake, etc.

These modern data platforms let you aggregate data, sometimes virtually, in a single data lake: a centralized repository that stores large volumes of raw structured, semi-structured, and unstructured data in its native format for future analysis. Most of these platforms now also support a data lakehouse architecture, which combines the scalability of a data lake with the data management and performance features of a data warehouse.

Vector Stores

Generative AI also calls for vector stores: specialized databases that index embeddings (numeric representations of content) so AI systems can search and reason over unstructured content by semantic similarity. While general-purpose solutions exist (e.g., from the hyperscalers), more advanced or high-performance use cases may require custom vector storage infrastructure.
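At its core, a vector store answers one question: which stored vectors are closest to this query vector? The toy store below does exact, brute-force cosine-similarity search in memory. It is a minimal sketch, assuming hand-made 3-dimensional vectors in place of real embeddings; production vector stores use embedding models with hundreds of dimensions and approximate nearest-neighbor indexes (such as HNSW) to stay fast at scale. The document names are hypothetical.

```python
import math
from typing import Dict, List, Tuple

Vector = List[float]


def cosine_similarity(a: Vector, b: Vector) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class TinyVectorStore:
    """Brute-force, in-memory store: exact search over every stored vector."""

    def __init__(self) -> None:
        self._vectors: Dict[str, Vector] = {}

    def add(self, doc_id: str, vector: Vector) -> None:
        self._vectors[doc_id] = vector

    def search(self, query: Vector, k: int = 3) -> List[Tuple[str, float]]:
        scored = [(doc_id, cosine_similarity(query, v)) for doc_id, v in self._vectors.items()]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:k]


# Hand-made 3-dimensional "embeddings"; a real system would get these from an embedding model.
store = TinyVectorStore()
store.add("invoice.pdf", [0.9, 0.1, 0.0])
store.add("call_transcript.txt", [0.1, 0.8, 0.3])
store.add("network_runbook.md", [0.0, 0.2, 0.9])

query = [0.85, 0.2, 0.05]  # pretend embedding of "What did the vendor bill us?"
print([doc for doc, _ in store.search(query, k=2)])  # ['invoice.pdf', 'call_transcript.txt']
```

Brute force is fine for a few thousand vectors; the specialized infrastructure the paragraph mentions matters once collections grow into the millions and latency budgets tighten.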

Software Engineering in the Age of AI

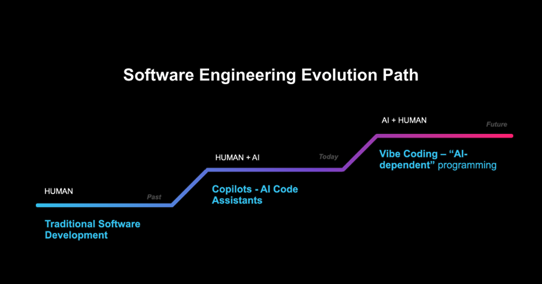

For decades, software development was largely a manual process: developers writing code line by line, hands on keyboard. In recent years, we’ve entered the “Copilot era,” in which AI tools like GitHub Copilot, Windsurf, and Cursor assist developers by generating code snippets, offering suggestions, generating test cases, and generally speeding up routine tasks. This shift has already produced a noticeable productivity boost, with some estimates putting it at around 20%.

The next level of transformation lets even non-programmers produce software. We’re now seeing early signs of a new approach that some are calling “vibe coding.”

With vibe coding, developers simply provide a prompt or an idea, and the LLM generates much of the code. The developer accepts the code without fully understanding it and goes with the model’s “vibe.” While this paradigm shift will take time to mature and enter the enterprise, it signals a future of software development in which we move from AI assisting human developers to humans assisting AI.

As Andrej Karpathy put it, the hottest new programming language is English!

AI Governance, Risk & Ethics

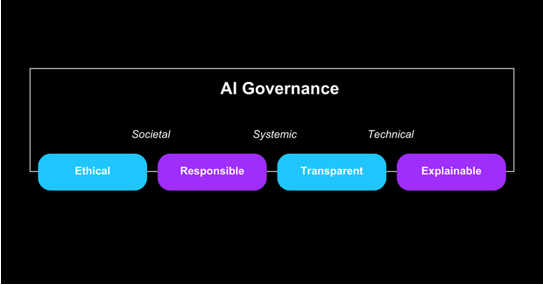

As enterprises scale their AI initiatives, governance can no longer be an afterthought. While many organizations have started implementing AI use cases, few have invested in the guardrails required to ensure AI is deployed safely, fairly, and legally. AI systems must be ethical, responsible, transparent, governed, and explainable. These dimensions span societal expectations, systemic risks, and technical safeguards.

Yet most enterprises face a fragmented landscape: while frameworks like the NIST AI Risk Management Framework, the OECD AI Principles, and the EU AI Act offer high-level principles and compliance mandates, no single tool operationalizes every aspect of governance.

Enterprises must build a governance stack that combines policies, tooling, custom solutions, and dashboards. This includes observability and monitoring, red-teaming models for bias, implementing audit trails for decisions, using explainability toolkits, and putting human-in-the-loop checks in place for critical decisions.
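Two of the practices listed above, audit trails for decisions and human-in-the-loop checks, can be combined in a few lines. This is a minimal sketch under stated assumptions: the risk labels, record fields, and reviewer callback are hypothetical, and the in-memory list stands in for a durable, append-only audit store.

```python
import json
from dataclasses import dataclass, asdict
from datetime import datetime, timezone
from typing import Callable, List, Optional, Tuple

Reviewer = Callable[[str], Tuple[str, bool]]  # decision -> (reviewer_id, approved)


@dataclass
class AuditRecord:
    timestamp: str
    use_case: str
    model: str
    decision: str
    risk: str
    reviewer: Optional[str]
    approved: bool


AUDIT_LOG: List[str] = []  # stand-in for an append-only audit store


def record_decision(use_case: str, model: str, decision: str, risk: str,
                    review: Reviewer) -> bool:
    """Require human sign-off for high-risk decisions and log every outcome."""
    reviewer: Optional[str] = None
    approved = True
    if risk == "high":
        reviewer, approved = review(decision)  # a person signs off before it takes effect
    record = AuditRecord(
        timestamp=datetime.now(timezone.utc).isoformat(),
        use_case=use_case, model=model, decision=decision,
        risk=risk, reviewer=reviewer, approved=approved,
    )
    AUDIT_LOG.append(json.dumps(asdict(record)))
    return approved


def ops_lead(decision: str) -> Tuple[str, bool]:
    # Hypothetical reviewer policy: reject any automated refund.
    return ("ops-lead", "refund" not in decision)


low = record_decision("ticket-summary", "general-medium", "summarize ticket #311", "low", ops_lead)
high = record_decision("refunds", "payments-small", "issue refund of $5,000", "high", ops_lead)
print(low, high)  # True False
```

Logging rejected decisions as well as approved ones is deliberate: an audit trail is most useful when it shows what the AI proposed, who intervened, and why.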

Navigating this complexity is not just about compliance—it’s about trust, brand integrity, and risk management.

Staying Ahead in the AI Race

As these trends unfold, one thing is clear: AI is no longer just a shiny tool—it’s becoming foundational to how modern enterprises build, operate, and compete. Every application being developed will increasingly have AI woven into its fabric, and every business will need to harness it to stay relevant.

Enterprises must now take a more deliberate and iterative approach to AI adoption: modernizing their data infrastructure, investing in AI-first engineering capabilities, and fostering a culture of experimentation. The best way to begin is with a prioritized backlog of high-ROI, low-friction use cases. Run short cycles, validate outcomes, learn quickly, and scale what works.

Real competitive edge won’t come from waiting for the perfect strategy—it will come from moving early, moving fast, and building AI into the DNA of the enterprise.

We’re only just getting started.