The brutal gap between boardroom demos and bedside reality—and how to bridge it

TL;DR

- The execution deficit is real: 80% of leaders prioritize workflow optimization, yet only 63% feel prepared to execute with GenAI.

- Administrative annihilation beats robot surgery: Ambient documentation and revenue cycle automation offer immediate ROI with low clinical risk.

- Governance trumps privacy fears: 57% worry about eroding clinical judgment vs. 56% who cite privacy. It’s about accountability, not hackers.

- Integration muscle matters more than algorithms: Vision is cheap; data plumbing, change management, and MLOps pipelines pay the mortgage.

The Groundhog Day Conference Circuit

Picture this: A chief medical officer clicks through ten screens to order Tylenol for a patient. Two hours later, the same CMO presents an “AI-First Hospital” slide to thunderous applause at a healthcare conference. The resident still drowning in documentation? The nurse burning out on prior authorizations? They’re not in the room.

Sound familiar? This scene plays out weekly across America’s healthcare conferences. The same PowerPoint promises of AI transformation, the same worried nods about regulation, the same vague concerns about “black box” algorithms. Meanwhile, a 2025 Future-Ready Healthcare survey crystallizes the brutal reality: 80% of hospital leaders rank workflow optimization as their top AI/GenAI goal, yet only 63% believe they can execute it. That 17-point credibility gap tells the story. This isn’t an AI/GenAI ‘strategy’ problem. It is an execution indictment.

After splitting time between clinic and product war rooms, I’ve learned that healthcare’s GenAI problem isn’t what the headlines suggest. It’s not about regulatory uncertainty or mysterious algorithms. It’s about execution, pragmatism, and accountability. Here are three uncomfortable truths about where we really stand.

Truth #1: Vision Is Cheap, Integration Muscle Pays the Mortgage

The conventional wisdom says clinicians resist AI because they fear being replaced or don’t trust the technology. That’s mostly wrong. I’ve yet to meet an anti-automation clinician. Instead, I see an execution vacuum where good intentions meet operational reality and die.

The numbers don’t lie: 80% of healthcare leaders consider workflow optimization critical, but only about six in ten feel prepared to let GenAI handle it. This isn’t philosophical resistance. It is practical paralysis. The real obstacles are mundane, unglamorous details that never make headlines. Data lakes resemble garage sales, with 47% of executives saying their clinical data landscape looks more like a junk drawer than a lake. EHR integration nightmares force clinicians to switch between multiple screens for simple tasks. Shadow IT pilots running on rogue GPU clusters get stopped in their tracks the moment compliance asks, “Who owns the malpractice?”

Consider what frontline staff worry about: 82% cite staffing shortages as their top imperative, 77% want administrative overhead eliminated, and 76% are desperate to address burnout. These are operational problems that are begging for operational solutions. Yet pilot projects stall because nobody funds the tedious work of data engineering, workflow integration, and post-deployment monitoring.

Until health systems staff up with product owners who’ve shipped software before, GenAI will remain a PowerPoint promise. Treat AI deployment like launching a new cardiac cath lab: dedicated staff, protected budgets, rigorous protocols, and leadership accountability that survives quarterly earnings calls.

Truth #2: The Killer App Is Administrative Annihilation, Not Robot Surgery

Forget autonomous diagnosis and surgical robots. Silicon Valley loves to demo autonomous scalpels, but clinicians drown in denial letters. The actual revolution happens in the back office, eradicating the administrative parasites that drain $600 billion annually from American healthcare. For the next three years, GenAI’s sweet spot is administrative annihilation: erasing chores no human should still be doing.

Two applications are already proving themselves where rubber meets the road. Ambient documentation represents the lowest-hanging fruit. These tools draft clinic notes while physicians talk to patients, cutting cognitive load and after-hours “pajama time” by over 60%. The survey shows that 41% of respondents put this on their GenAI wish list, and the technology is already live in early-adopter health systems. The ROI is immediate and measurable. You can feel the difference by next Tuesday.

Revenue cycle automation follows close behind. Prior authorization packets, denial appeal letters, and other back-office sludge are prime targets for large language models. With 67% of leaders saying prior authorization alone is choking productivity, and 62% calling out EHR administrative drag, automating these workflows offers clear dollars-and-cents justification. In a market where 68% of executives list staffing costs as their top financial pressure, tools that give hours back without changing care plans are the easiest “yes.”

Why do these applications work when others stall? They hit the trifecta of low clinical risk, high workforce relief, and transparent business cases. These tools don’t make clinical decisions. They document them or handle downstream paperwork, neatly sidestepping liability landmines.

The lesson: start with the clipboard, not the stethoscope. Master administrative automation first, then earn the trust and operational muscle needed for higher-stakes applications. Autonomous diagnosis will come, but first, GenAI must earn its keep by slaying the administrative dragons that fuel burnout.

Truth #3: Governance Gaps Trump Privacy Fears (And That’s Fixable)

Here’s what surprised me most in recent survey data: only 56% of healthcare professionals cite privacy as a top GenAI risk, while 57% worry about erosion of clinical judgment. The headline-grabbing fear isn’t hackers. It is accountability.

This isn’t technophobia—it’s rational self-preservation. Think about what this means. Most organizations assume their firewalls are decent. What keeps them awake is the question of liability when an algorithm influences care. If a model hallucinates a contraindicated dose, who signs the malpractice check? The vendor, the hospital, or the clinician who clicked “accept”?

That answer is fuzzy because fewer than one in five organizations have published GenAI policies.

This governance gap creates a cascade of problems. Without clear data stewardship rules, audit trails, and role delineation, even the most promising AI tools risk becoming compliance grenades. Clinicians default to caution, not because they fear the technology, but because they can’t trace accountability when things go wrong.

The solution isn’t more regulation. It is better governance. Organizations need explicit policies covering data use, validation protocols, and human-in-the-loop checkpoints. They need transparency about where recommendations come from, confidence scoring, and always-visible override buttons. Most importantly, they must socialize these frameworks across physicians, nurses, and support staff so everyone understands their role.

The pragmatic fix is boring: bake guardrails into the CI/CD pipeline. Every recommendation should arrive with citations, confidence bands, and model-version hashes, just like vaccine batch numbers. Governance documents on SharePoint won’t scale; they must be turned into code that gates deployments, runs compliance tests, and fails the build if policy is violated.
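To make “policy as code” concrete, here is a minimal Python sketch of such a gate. Everything in it is an assumption for illustration: the `Recommendation` fields, the choice of a SHA-256 hex digest as the model-version hash, and the three rules themselves are hypothetical stand-ins for whatever your own written policy requires.

```python
import re
from dataclasses import dataclass, field
from typing import List, Optional

# Assumption: model versions are identified by a SHA-256 hex digest.
SHA256_HEX = re.compile(r"^[0-9a-f]{64}$")


@dataclass
class Recommendation:
    """Hypothetical envelope for one model recommendation."""
    text: str
    citations: List[str] = field(default_factory=list)
    confidence_low: Optional[float] = None
    confidence_high: Optional[float] = None
    model_version_hash: str = ""


def policy_violations(rec: Recommendation) -> List[str]:
    """Return readable policy violations; an empty list means compliant."""
    problems = []
    if not rec.citations:
        problems.append("missing citations")
    lo, hi = rec.confidence_low, rec.confidence_high
    if lo is None or hi is None or not (0.0 <= lo <= hi <= 1.0):
        problems.append("missing or invalid confidence band")
    if not SHA256_HEX.match(rec.model_version_hash):
        problems.append("missing or malformed model-version hash")
    return problems


def gate(recs: List[Recommendation]) -> int:
    """Return a process exit code: 0 if every sample passes, 1 otherwise."""
    failed = False
    for i, rec in enumerate(recs):
        for problem in policy_violations(rec):
            print(f"recommendation {i}: {problem}")
            failed = True
    return 1 if failed else 0
```

A pipeline step could run the model against a fixed set of test prompts, pass the outputs to `gate`, and hand the result to `sys.exit`, so that a non-zero code fails the build. The point is less the specific rules than that the policy lives in version control and runs on every deployment.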

Reality Check: Only 18% of healthcare organizations have published GenAI policies that anyone can find. That’s like running an ICU without dosing protocols. Until governance catches up with innovation, caution isn’t just a choice—it’s the only logical stance for any clinician who wants to keep their license.

Quick-Win Playbook

1. Start with data hygiene and boring plumbing: Clean medication lists, standardize note templates, and map data lineage before chasing algorithms.

2. Hire a product owner who’s shipped before: Stop promoting clinicians into tech roles; find someone who’s survived production outages.

3. Deploy ambient documentation first: Target high-volume specialties with clear ROI metrics and protected GPU budgets.

4. Build governance-as-code: Turn policy documents into automated compliance checks that gate every deployment.

The Path Forward

Healthcare’s GenAI future won’t be built on regulatory clarity or perfect algorithms. It’ll be built by operators who understand that the real barriers are organizational, not technological. The revolution won’t be a single dramatic breakthrough. It’ll be a steady, pragmatic march toward a system that finally frees its most valuable assets to do what they do best: thinking, healing, and caring for patients.

Picture a clinic visit in 2030: You walk in empty-handed. A discreet agent records the dialogue, drafts a SOAP note, cues guideline-based orders, and generates the prior-auth, all before your hand reaches for the door handle. No swivel chair, no drag-and-drop hell. The technology is ready. The question is whether we’ll build the operational muscle to make that transformation real.

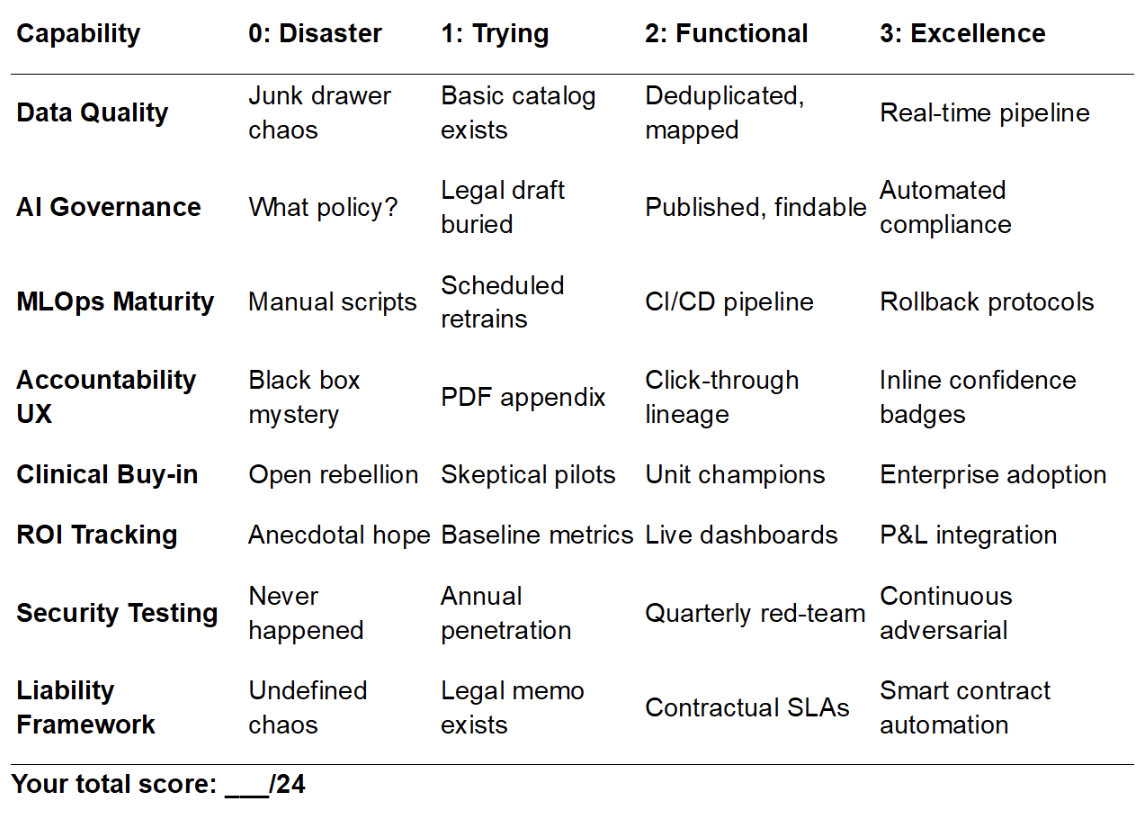

Are You Shipping AI or Shipping Hope? The Brutal Scorecard

Score yourself 0-3 on each capability (24+ means you’re ahead of the curve):

Under 12? You’re still in myth-busting mode. 12-23? Pilot-ready but fragile—one compliance audit away from shutdown. 24-plus? Time to scale and budget for serious GPU infrastructure.
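If you want to tally the scorecard across several teams, the arithmetic is simple enough to script. The Python sketch below assumes only the rules stated above (0-3 per capability, bands at 12 and 24); the capability names in the usage example are placeholders, not the scorecard’s actual rows.

```python
from typing import Dict, Tuple


def readiness_band(scores: Dict[str, int]) -> Tuple[int, str]:
    """Sum 0-3 capability scores and map the total to a band."""
    for name, value in scores.items():
        if value not in (0, 1, 2, 3):
            raise ValueError(f"{name}: score must be 0-3, got {value!r}")
    total = sum(scores.values())
    if total < 12:
        band = "myth-busting mode"
    elif total < 24:
        band = "pilot-ready but fragile"
    else:
        band = "time to scale"
    return total, band


# Placeholder capability names, for illustration only.
example = {"capability_a": 2, "capability_b": 3, "capability_c": 1, "capability_d": 2}
total, band = readiness_band(example)
print(total, band)
```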