Artificial intelligence (AI) continues to show great promise for global development. Since the 2022 launch of ChatGPT and the start of the current AI hype cycle, the technology has lived up to some of its high expectations, with important and diverse applications ranging from diagnosing rare diseases and helping supply chains become more resilient to geopolitical and climate shocks, to reshaping how we work and learn. This is no less evident in the impact sector, where social innovators have been at the forefront of showing how the technology can improve healthcare outcomes, strengthen climate resilience and democratize access to education across diverse geographies.

However, concerns remain about the ethical development and use of the technology, particularly its social impact, its effects on the labour market, its potential to reinforce existing biases, and the documented environmental costs linked to its high energy consumption.

Social innovators are proving that AI can be harnessed ethically by adopting deliberate deployment frameworks that prioritize risk mitigation and community benefit over pure efficiency or profit maximization. A 2024 report by the Schwab Foundation and the World Economic Forum found that there are at least 10 million social enterprises offering solutions to the world’s most complex challenges, creating over 200 million jobs and driving significant social and environmental impact while generating over $2 trillion in annual revenue, eclipsing the apparel industry. AI has the potential to help these enterprises rapidly scale their impact. Their deployment strategies represent a fundamental shift from technology-first to impact-first approaches, treating AI as a tool for addressing systemic inequities rather than simply optimizing existing processes. Yet less than 1% of AI implementation budgets currently go towards impact-focused initiatives.

To ensure AI benefits all of humanity, including underrepresented communities, it is essential that technology companies, government bodies and intermediaries (e.g. accelerators) not only work collaboratively with social innovators to deploy this technology effectively but also create avenues to learn from their experience of using it ethically.

How Social Innovators Drive Better AI Solutions

Because they are deeply rooted in their local communities, social innovators naturally understand community needs and design inclusive solutions that prioritise ethical implementation. Rather than deploying pre-built solutions, these organizations invest in local capacity building and ensure that AI systems reflect community needs and cultural contexts.

Take SAS Brasil, a social enterprise operating at the intersection of healthcare and technology in underserved communities in Brazil, where cervical cancer ranks as the 4th most frequent cancer among women and the 3rd most frequent among women aged 15 to 44. Across the country, over 9,000 women reportedly die from the disease each year. In response, the enterprise’s founders have developed an AI diagnostic tool to predict cervical cancer risk in Brazilian communities, especially in Indigenous areas. One of the most persistent challenges SAS Brasil faces in developing the tool is data bias: most datasets originate from clinical populations in Europe and North America, with scant representation of many underserved groups. To address this, SAS Brasil partnered with local academic and health institutions to build expansive, representative datasets. This process is resource-intensive but critical to ensuring the AI tool reflects the realities of the communities it serves.

SAS Brasil also invested in internal AI skills-building, collaborating with local universities to engage computer science graduate students in co-developing the tool. This approach highlights how social enterprises, when equipped with the right resources and strategic partnerships, can collectively contribute to the ethical advancement of AI within their local ecosystems, for example in healthcare, where important questions about AI’s future role continue to emerge.

The role of the private sector

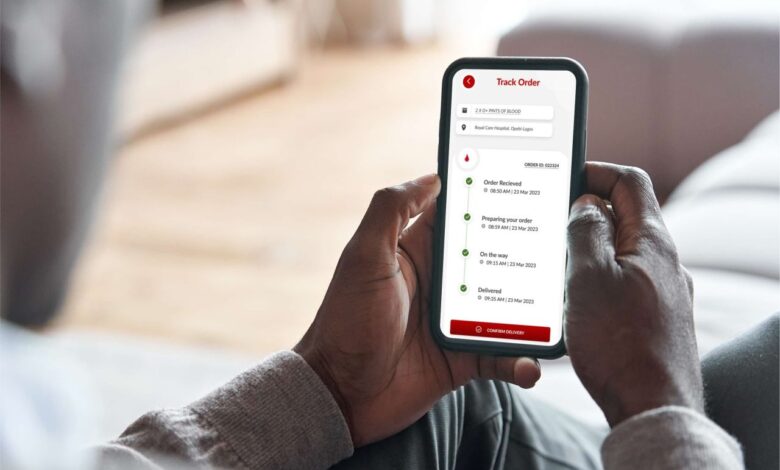

A fundamental challenge facing social innovators in AI deployment lies not in vision or commitment, but in accessing the infrastructure, technical expertise and financial resources required to build and scale equitable AI systems. Private sector institutions, especially tech companies, have a critical role to play in scaling AI for impact, as they possess both the capability and the responsibility to enable equitable AI. Beyond funding, technology companies can engage with social innovators in other important ways. By providing open-source foundational models such as Meta’s Llama series, offering accessible cloud computing resources through programs such as Microsoft’s EfPI and Google Cloud for Nonprofits, and developing low-code AI platforms, major technology firms can democratize access to sophisticated AI capabilities. This infrastructure-as-enablement approach allows social enterprises to focus their limited resources on community engagement, cultural adaptation and impact measurement rather than building technical foundations from scratch.

LifeBank, a Nigeria-based medical distribution company, offers a compelling example. The social enterprise developed proprietary AI tools to manage supply chains for essential medical supplies, including oxygen, medical consumables and blood. In building this technology, LifeBank has had to address constraints that are common and endemic in low-resource settings: limited infrastructure, data availability and storage costs, internet availability and speed, and access to power. Through a partnership with IBM, it has been able to scale a blockchain-based blood tracking tool, demonstrating how the private sector can co-create solutions with social innovators.

Meta’s AI-powered population density maps demonstrate how data access can be leveraged for public good, with evidence of these maps being used to support vaccination campaigns in Malawi, rural electrification in Somalia and Benin, and water and sanitation planning in Rwanda and Zambia, reaching millions with targeted interventions.

Resource mobilisation is also gaining traction. Google.org committed €10 million to its AI for Social Innovation Fund, offering grants and mentorship to help underserved communities integrate AI. NVIDIA’s Inspire 365 initiative contributed over 1,150 hours of employee expertise to impact enterprises, while EY engaged more than 7,000 employees in AI for social good initiatives, reaching over 2.6 million lives.

These kinds of partnerships often prove to be mutually beneficial: social enterprises receive capital, technical expertise and support; in turn, companies benefit from the enterprises’ agility and deep community trust, which help surface new markets and use cases. Co-developing with social enterprises also provides ground-truth data on technology performance in hard-to-reach settings, accelerating the design of more inclusive AI. This collaboration creates a feedback loop in which community insights directly influence foundational model development, so that subsequent AI iterations incorporate equity considerations from the outset rather than having inclusivity retrofitted as an afterthought.

Collaboration between social innovators and the private sector also generates value beyond the immediate exchange of resources and expertise. The strategic implications extend to market development and competitive positioning within the broader AI landscape. Social enterprises function as innovation laboratories, identifying use cases and deployment strategies that commercial entities might overlook due to their focus on high-volume, standardized applications. Their willingness to experiment with emerging technologies in resource-constrained environments generates practical knowledge about AI system resilience, cultural adaptation, and performance optimization under real-world conditions. This intelligence becomes invaluable for companies seeking to expand into emerging markets or develop products for underserved populations, transforming social impact initiatives into strategic market research and development investments.

Most significantly, these collaborations reshape the fundamental architecture of AI development by embedding community agency and local expertise into the technology creation process. Rather than extractive relationships where communities serve as passive data sources or deployment sites, effective partnerships position social enterprises as co-creators who influence algorithmic design, training methodologies, and performance metrics. This participatory approach not only improves AI system effectiveness but also establishes new standards for responsible technology development that prioritize community ownership and long-term sustainability over rapid deployment and scale.

The role of intermediaries

Intermediaries such as incubators, accelerators and non-profit organizations, along with governments, play an essential role in shaping the future of AI for social impact. With deep networks and on-the-ground insights, they can help technology organizations understand local contexts, build trust, and tailor solutions to the needs of social innovators. They also act as translators across sectors, ensuring that the priorities of communities, social enterprises and funders are not lost in the technical or commercial framing of AI adoption.

Beyond surfacing real-world challenges such as skills gaps, ethical concerns, and barriers to entry, these actors create the connective tissue that enables collaborations to scale. Their proximity to underserved communities allows them to highlight blind spots that may not be visible to corporate or philanthropic partners, while their convening power can align diverse stakeholders around shared goals. In doing so, intermediaries help reduce duplication of efforts, lower transaction costs for innovators, and accelerate the diffusion of effective practices across ecosystems.

For example, the Entrepreneurship for Positive Impact Initiative (EfPI), developed by Microsoft in partnership with Ashoka, a social enterprise intermediary with a presence in more than 90 countries, demonstrates how intermediaries can strengthen the organisational capacity of impact enterprises. EfPI provides mentorship and promotes two-way learning, enabling technology companies to benefit from the lived experience of social innovators.

Collaborating for the future of AI

Social innovators are actively redefining how responsible AI development occurs by embedding technology within community needs and local contexts. However, transforming these localized successes into systemic change requires moving beyond fragmented support structures toward coordinated ecosystem development that recognizes social enterprises as co-creators rather than beneficiaries of AI innovation.

This architecture of inclusive AI development demands sophisticated institutional coordination, in which each actor’s contributions and value proposition are complementary and well articulated. It positions social innovators at the centre of ethical AI deployment. Technology companies provide computational infrastructure and technical expertise while learning from community-embedded deployment strategies that inform their broader product development. Intermediary organizations function as knowledge brokers and cultural translators, ensuring that global AI capabilities are meaningfully adapted to local contexts while feeding community insights back into foundational model development.

Success will be measured not by the number of AI tools deployed in underserved communities, but by the extent to which these communities gain meaningful control over technological trajectories that affect their futures.