We’ve all experienced the magic moment when a large language model (LLM) perfectly explains a complex concept or writes elegant code. But ask that same model to analyze risk across a portfolio of companies, detect fraud patterns in thousands of transactions, or identify anomalies in a supply chain network, and the limitations become clear.

The problem isn’t that LLMs can’t handle math; they can. The issue is how they handle it. When an LLM attempts quantitative analysis, it builds reasoning chains, working through calculations step by step. For simple problems, this works well enough. But in real-world applications where you need to analyze hundreds or thousands of interconnected entities, this approach breaks down quickly. Context windows overflow. Errors compound. What should take milliseconds stretches into minutes.

This creates a fundamental barrier for deploying AI in domains where mathematical precision and speed matter most. Risk assessment, fraud detection, and anomaly identification all require rapid numerical analysis across vast datasets. The traditional response has been to build larger models or create specialized calculators. But what if there’s a more elegant solution?

Why Knowledge Graphs Are Natural for LLMs

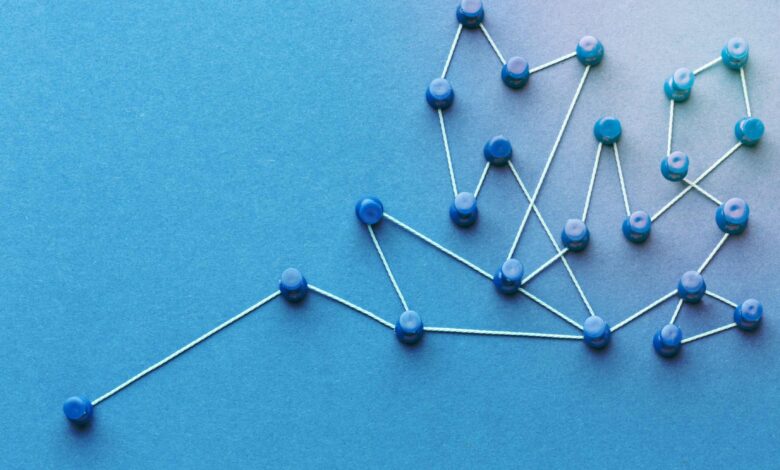

The breakthrough comes from recognizing something fundamental about how LLMs process information. These models excel at understanding entities and their relationships. When you tell an LLM that “Apple is a customer of TSMC” or “Bank A has exposure to Bank B,” it immediately grasps these connections conceptually. This is exactly how knowledge graphs are structured: entities as nodes, relationships as edges.

This alignment isn’t coincidental. LLMs trained on vast text corpora have learned to recognize and reason about entity relationships because that’s how humans naturally express information. Knowledge graphs formalize this same structure but with a crucial addition: They can carry precise quantitative information.

In a knowledge graph, each node doesn’t just represent an entity. It can store computed metrics like credit scores, risk levels, or anomaly probabilities. Each edge doesn’t just show a connection. It can quantify relationship strength, dependency levels, or risk transmission factors. Most importantly, the graph structure enables something text alone cannot: mathematical propagation of effects through the network.
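To make the structure concrete, here is a minimal sketch of a graph whose nodes carry metrics and whose edges carry weights. The class names, the `risk_level` metric, the `customer_of` relation, and all numbers are illustrative assumptions, not taken from any particular system.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    # Precomputed metrics, e.g. {"risk_level": 0.2}
    metrics: dict[str, float] = field(default_factory=dict)


@dataclass
class Edge:
    source: str
    target: str
    relation: str
    weight: float  # relationship strength, assumed to lie in [0, 1]


class KnowledgeGraph:
    def __init__(self) -> None:
        self.nodes: dict[str, Node] = {}
        self.edges: list[Edge] = []

    def add_node(self, name: str, **metrics: float) -> None:
        self.nodes[name] = Node(name, dict(metrics))

    def add_edge(self, source: str, target: str, relation: str, weight: float) -> None:
        self.edges.append(Edge(source, target, relation, weight))

    def outgoing(self, name: str) -> list[Edge]:
        # All edges that start at the given entity
        return [e for e in self.edges if e.source == name]


# Illustrative values only
kg = KnowledgeGraph()
kg.add_node("Apple", risk_level=0.10)
kg.add_node("TSMC", risk_level=0.20)
kg.add_edge("Apple", "TSMC", "customer_of", weight=0.8)
```

A production system would likely sit on a graph database rather than in-memory lists, but the shape is the same: entities, relationships, and numbers attached to both.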

The key insight is this: Instead of asking an LLM to calculate how risk flows through a network, we precompute these flows in the knowledge graph. The LLM then does what it does best, reasoning over the structure and explaining the results in natural language.
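One way to picture this division of labor: the numbers are computed ahead of time, and the model only receives them as plain statements to reason over. The sketch below assumes a hypothetical helper, `facts_for_prompt`, and made-up scores. It stops short of calling any model, since that step depends on whichever LLM API you use.

```python
def facts_for_prompt(scores, edges, entity):
    """Render precomputed graph facts about one entity as plain sentences."""
    lines = [f"{entity} has a precomputed risk score of {scores[entity]:.2f}."]
    for source, relation, target in edges:
        if source == entity:
            lines.append(
                f"{source} {relation} {target} (risk score {scores[target]:.2f})."
            )
    return "\n".join(lines)


# Illustrative, precomputed values
scores = {"Bank A": 0.12, "Bank B": 0.35}
edges = [("Bank A", "has exposure to", "Bank B")]

context = facts_for_prompt(scores, edges, "Bank A")
print(context)
```

The resulting text would be placed in the model's context so that it explains the numbers rather than deriving them.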

Technical Challenges and Innovations

Leading organizations are now putting this theory into practice by building knowledge graphs that cover millions of public and private companies. However, developing such systems involves solving several complex technical challenges.

One major challenge is risk propagation. Mathematical techniques similar to the contagion models used in epidemiology help map how financial distress can spread through interconnected business relationships. These calculations must be refreshed as new data arrives so that risk assessments stay accurate and current.
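As a rough illustration of what propagation can look like, the sketch below uses a simple contagion-style update: an entity stays healthy only if it avoids its own baseline distress and avoids catching distress from each counterparty it depends on. This particular formula, the function name `propagate_risk`, and the numbers are assumptions chosen for clarity, not a description of any production model.

```python
def propagate_risk(base_risk, exposures, max_iters=100, tol=1e-9):
    """Iterate a contagion-style update until scores stop changing.

    base_risk: entity -> standalone distress probability in [0, 1]
    exposures: entity -> {counterparty: weight in [0, 1]}, meaning the
               entity depends on that counterparty with that strength
    """
    risk = dict(base_risk)
    for _ in range(max_iters):
        updated = {}
        for entity, own in base_risk.items():
            survive = 1.0 - own
            for counterparty, weight in exposures.get(entity, {}).items():
                # Chance of not being pulled down by this counterparty
                survive *= 1.0 - weight * risk[counterparty]
            updated[entity] = 1.0 - survive
        change = max((abs(updated[e] - risk[e]) for e in risk), default=0.0)
        risk = updated
        if change < tol:
            break
    return risk


# Illustrative three-tier chain
base_risk = {"Supplier": 0.5, "Manufacturer": 0.1, "Retailer": 0.0}
exposures = {
    "Manufacturer": {"Supplier": 0.8},
    "Retailer": {"Manufacturer": 0.5},
}
result = propagate_risk(base_risk, exposures)
```

In this example the retailer has no standalone risk, yet it ends up with a nonzero score because distress at the supplier reaches it through the manufacturer. Rerunning the function when inputs change is the simplest form of keeping scores current.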

Another key innovation involves applying graph attention networks. In large networks, not all connections carry equal weight. A company may have hundreds of suppliers but rely heavily on just a few. Attention mechanisms help identify which relationships are most critical for different risk scenarios, allowing systems to focus on the most relevant parts of the graph when generating insights.
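The core idea behind attention over neighbors can be sketched in a few lines: turn a relevance score per relationship into weights that sum to one, then keep the largest. In a real graph attention network those scores are learned from node features. Here they are hand-set placeholders, and the function names are hypothetical.

```python
import math


def attention_weights(scores):
    """Softmax over per-relationship relevance scores."""
    peak = max(scores.values())  # subtract the max for numerical stability
    exps = {name: math.exp(value - peak) for name, value in scores.items()}
    total = sum(exps.values())
    return {name: value / total for name, value in exps.items()}


def top_relationships(scores, k=2):
    """Return the k relationships with the highest attention weight."""
    weights = attention_weights(scores)
    ranked = sorted(weights.items(), key=lambda item: item[1], reverse=True)
    return ranked[:k]


# Placeholder relevance scores for one company's suppliers
supplier_scores = {
    "Chip supplier": 2.0,
    "Packaging supplier": 0.1,
    "Logistics partner": 1.2,
    "Office supplies": -1.0,
}
weights = attention_weights(supplier_scores)
top = top_relationships(supplier_scores, k=2)
```

Because the weights are normalized, a company with hundreds of suppliers still ends up with a short, ranked list of the few that dominate a given risk scenario.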

From Finance to Cybersecurity

While we’ve focused on risk, the pattern of grounding LLMs with knowledge graphs applies broadly. Consider these domains:

Fraud Detection: Financial crimes rarely involve isolated bad actors. Fraud networks span multiple accounts, devices and transactions. With transaction graphs that carry precomputed anomaly scores, LLMs can identify and explain suspicious patterns without attempting complex statistical calculations. The graph structure reveals connection patterns that individual transaction analysis would miss.

Cybersecurity: Modern cyberattacks propagate through networks of systems and users. A compromise at one node can cascade through access permissions and system dependencies. Knowledge graphs can map these dependencies with precomputed vulnerability scores, allowing AI systems to instantly assess threat propagation paths and recommend containment strategies.

Supply Chain Risk: Recent disruptions have shown how vulnerabilities cascade through global supply networks. A knowledge graph can model supplier relationships with precomputed metrics for financial health, geographic risk, and operational dependencies. When disruption strikes, AI agents can immediately identify affected downstream companies without rebuilding the entire chain analysis.

Compliance Management: Regulatory requirements form complex webs of rules, processes and controls. Knowledge graphs can map these relationships with violation probabilities and control effectiveness scores. This allows AI systems to assess compliance risk holistically, understanding how a failure in one process might cascade to regulatory violations elsewhere.

Each domain shares the same pattern: entities connected by relationships, with quantifiable effects flowing through the network. The knowledge graph provides the computational foundation, while the LLM provides intelligent interpretation.
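Taking the supply chain case as an example, finding affected downstream companies is a plain graph traversal once the relationships are stored explicitly. The sketch below is a breadth-first search over a hypothetical `supplies` mapping, with company names invented for illustration.

```python
from collections import deque


def affected_downstream(supplies, disrupted):
    """Return every company reachable downstream of a disrupted one.

    supplies: company -> list of customers it supplies
    Results are ordered by distance from the disruption.
    """
    seen = {disrupted}
    queue = deque([disrupted])
    affected = []
    while queue:
        current = queue.popleft()
        for customer in supplies.get(current, []):
            if customer not in seen:
                seen.add(customer)
                affected.append(customer)
                queue.append(customer)
    return affected


# Illustrative supply network
supplies = {
    "Foundry": ["Chipmaker"],
    "Chipmaker": ["Phone maker", "Car maker"],
    "Phone maker": ["Retailer"],
    "Car maker": ["Retailer"],
}
impacted = affected_downstream(supplies, "Foundry")
print(impacted)
```

The same traversal applies to the other domains by swapping what the nodes and edges mean: accounts and transfers, systems and access permissions, or processes and controls.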

Why Knowledge Graphs Win

Combining LLMs with knowledge graphs isn’t just a technical optimization. It fundamentally changes what’s possible with AI systems.

Speed transforms use cases. Precomputed graph queries can return in milliseconds, enabling real-time applications that are impractical with calculation-based approaches. A loan officer can get an instant risk assessment during a customer call. A security analyst can evaluate threats as they emerge.

Accuracy comes from specialization. Mathematical models optimized for specific domains (credit risk, fraud detection, etc.) run offline, free of the latency and context limits of a live conversation. Their results are typically more accurate than what an LLM could calculate in real time.

Explainability builds trust. Every risk score, every anomaly flag, every propagation path exists explicitly in the graph. When the AI explains its reasoning, it’s not generating plausible-sounding text but describing actual computational paths that can be audited and verified.
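As one illustration of an auditable path, the sketch below finds the strongest chain of relationships between two entities, where a chain's strength is the product of its edge weights. Scoring paths this way, the function name `strongest_path`, and the weights are assumptions made for the example. Because weights are assumed to lie in (0, 1], a Dijkstra-style search with a priority queue finds the best path.

```python
import heapq


def strongest_path(edges, source, target):
    """Return (strength, path) for the highest-product path, or (0.0, [])."""
    best = {source: 1.0}
    heap = [(-1.0, source, [source])]  # negate strength for a max-heap
    while heap:
        neg_strength, node, path = heapq.heappop(heap)
        strength = -neg_strength
        if node == target:
            return strength, path
        if strength < best.get(node, 0.0):
            continue  # a stronger route to this node was already found
        for neighbor, weight in edges.get(node, {}).items():
            candidate = strength * weight
            if candidate > best.get(neighbor, 0.0):
                best[neighbor] = candidate
                heapq.heappush(heap, (-candidate, neighbor, path + [neighbor]))
    return 0.0, []


# Illustrative risk transmission factors
edges = {
    "Supplier": {"Manufacturer": 0.8, "Distributor": 0.3},
    "Manufacturer": {"Retailer": 0.5},
    "Distributor": {"Retailer": 0.9},
}
strength, path = strongest_path(edges, "Supplier", "Retailer")
print(" -> ".join(path), round(strength, 2))
```

An explanation built from this output names real edges and real weights, so a reviewer can check each hop against the graph.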

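Scale changes the game. When an LLM works through a network step by step, cost grows quickly with the number of entities and relationships. With a graph-based approach, the heavy computation happens offline, and for sparse graphs a propagation pass typically grows roughly in proportion to the number of edges. At query time, looking up a precomputed score costs about the same whether the graph holds 100 or 100,000 entities.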

Graph attention mechanisms solve the needle-in-a-haystack problem. Even in networks with millions of connections, the AI automatically focuses on the relationships that matter most for each specific query.

Looking Forward

Developing knowledge graph grounding for credit risk requires solving complex technical challenges that have taken years of dedicated research and development. The field has made significant progress on the hard problems of entity resolution at scale, mathematical propagation models, and graph attention mechanisms that surface what matters. Now this proven technology is expanding beyond credit risk into new domains.

The same architecture that tracks financial risk through company networks can track fraud through transaction networks, vulnerabilities through IT systems, or disruptions through supply chains. The core technology (precomputed quantitative signals propagating through graph structures) remains constant. What changes is the domain expertise we encode into the nodes and edges.

We’re particularly excited about the possibilities this opens up for organizations that have been struggling to make AI work for their quantitative use cases. Every company has unique networks of risk and opportunity. A pharmaceutical company tracks adverse events through patient populations. A logistics provider monitors delays through transportation networks. A manufacturer watches quality issues propagate through component suppliers.

The future we envision isn’t about one massive AI model trying to understand every domain. It’s about specialized knowledge graphs, each encoding deep domain expertise, working with AI agents to deliver precise, explainable insights. This isn’t just an incremental improvement in AI capabilities. It’s a fundamental shift in how quantitative intelligence can augment human decision-making. And we’re just getting started.