In the fast-paced world of artificial intelligence, Large Language Models (LLMs) like GPT-4 are changing the game in natural language processing. But these models aren’t just about clever algorithms; much of their success comes down to the quality of the data they are trained on. Preparing these datasets isn’t only about collecting data: it also means cleaning it and, importantly, annotating the text. Accurate annotations help models interpret unstructured or complex text, which makes data annotation a key part of NLP and machine learning.

Introduction to Text Annotation

Text annotation is the process of adding labels to textual data to provide context and meaning. In natural language processing (NLP) and machine learning (ML), these labels are what allow models to learn the structure and semantics of text. Annotated text supports a variety of tasks such as document classification, sentiment analysis, entity recognition, and linguistic annotation. In document classification, annotated texts teach models to categorize documents by their content. Sentiment analysis relies on annotated data to determine the emotional tone of a text, while entity recognition involves identifying and labeling named entities such as people, locations, and organizations. Linguistic annotation, in turn, captures the structure and meaning of the language itself. Text annotation is therefore a foundation for capable NLP and ML models.

What is Text Annotation?

Text annotation means labeling or tagging pieces of text to mark features such as syntax, meaning, or sentiment. These labels help LLMs pick up the nuances of language, so they can translate between languages, summarize articles, or answer questions more accurately. Key phrases deserve particular attention during annotation, because they often signal customer intent and sentiment.

Types of Text Annotation

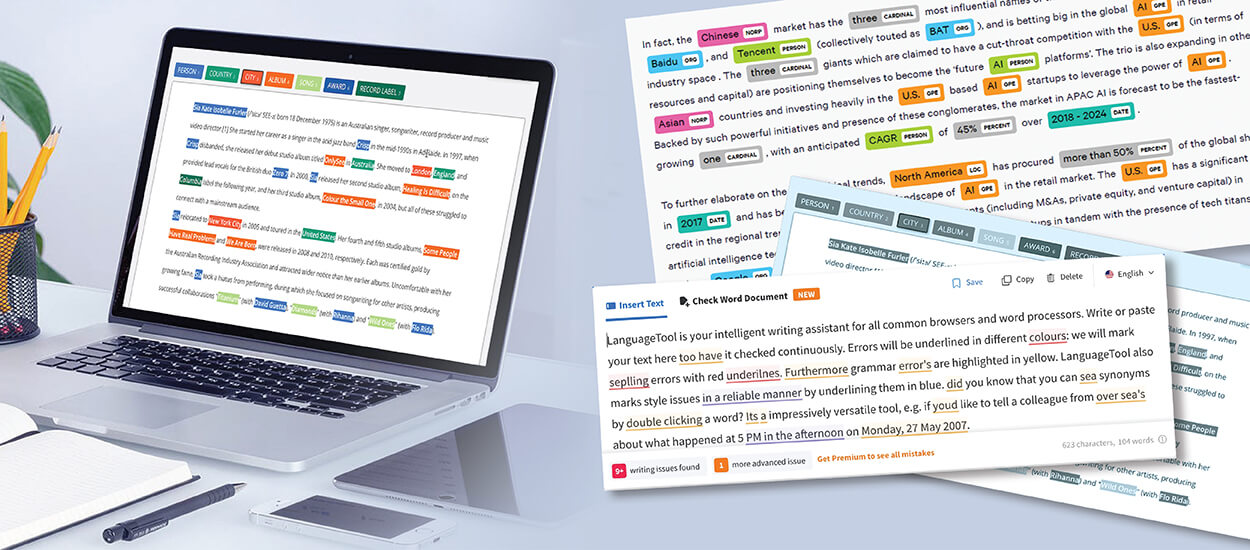

- Entity Recognition: Spotting and classifying important elements in the text. This includes named entity recognition (NER), which identifies named entities in a text and classifies them into predefined categories such as people, locations, organizations, and dates. NER improves NLP applications by labeling entities accurately, which facilitates information extraction.

- Sentiment Analysis: Figuring out the emotional tone behind words.

- Part-of-Speech Tagging: Identifying the grammatical role of each word, such as noun, verb, or adjective.

- Semantic Annotation: Connecting words or phrases to their meanings or external references.

- Entity Annotation: Labeling entities within a text according to their semantic significance. This labeling work produces the training data that entity recognition models learn from.
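The annotation types above can all be attached to the same piece of text. The sketch below shows one possible in-memory record for that; the class names `EntitySpan` and `AnnotatedText`, the label names, and the choice of character offsets are illustrative assumptions rather than a standard format. The part-of-speech tags use Universal Dependencies tag names.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass
class EntitySpan:
    start: int  # character offset where the entity begins (inclusive)
    end: int    # character offset where the entity ends (exclusive)
    label: str  # illustrative label names, e.g. "PERSON", "ORG", "LOC"


@dataclass
class AnnotatedText:
    """One text with several annotation layers attached (hypothetical schema)."""
    text: str
    sentiment: Optional[str] = None  # document-level sentiment label
    entities: list[EntitySpan] = field(default_factory=list)
    pos_tags: list[tuple[str, str]] = field(default_factory=list)  # (token, tag)

    def entity_strings(self) -> list[tuple[str, str]]:
        """Return (surface text, label) for every entity span."""
        return [(self.text[e.start:e.end], e.label) for e in self.entities]


example = AnnotatedText(
    text="Ada Lovelace joined Acme in London.",
    sentiment="neutral",
    entities=[EntitySpan(0, 12, "PERSON"), EntitySpan(20, 24, "ORG"), EntitySpan(28, 34, "LOC")],
    pos_tags=[("Ada", "PROPN"), ("Lovelace", "PROPN"), ("joined", "VERB"),
              ("Acme", "PROPN"), ("in", "ADP"), ("London", "PROPN"), (".", "PUNCT")],
)
print(example.entity_strings())
# [('Ada Lovelace', 'PERSON'), ('Acme', 'ORG'), ('London', 'LOC')]
```

Storing entities as character offsets rather than copied strings keeps them unambiguous when the same word appears more than once in a text.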

Steps to Prepare Datasets for LLMs

- Collect Data

First off, you need a lot of diverse text data that’s relevant to what you want your model to do. This can come from books, articles, social media, transcripts—you name it.

- Clean the Data

Next, clean the data: fix typos, and remove irrelevant content and duplicates. This ensures you’re working with high-quality data.
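Part of the cleaning step can be sketched in a few lines. This is a minimal example, assuming plain-text documents: it normalizes Unicode and whitespace, drops very short fragments, and removes exact duplicates (compared case-insensitively). The function names and the `min_chars` threshold are hypothetical choices for illustration; typo correction usually needs a spell-checker or manual review and is not shown.

```python
import re
import unicodedata


def normalize(text: str) -> str:
    """Apply Unicode NFKC normalization and collapse runs of whitespace."""
    text = unicodedata.normalize("NFKC", text)
    return re.sub(r"\s+", " ", text).strip()


def clean_corpus(docs, min_chars=20):
    """Normalize documents, drop very short ones, and remove exact duplicates.

    Duplicates are detected on a lowercased copy, so "Hello  World" and
    "hello world" count as the same document; the first occurrence is kept.
    """
    seen = set()
    cleaned = []
    for doc in docs:
        text = normalize(doc)
        if len(text) < min_chars:  # likely noise or a fragment
            continue
        key = text.lower()
        if key in seen:
            continue
        seen.add(key)
        cleaned.append(text)
    return cleaned


docs = [
    "The model was trained on  news articles.",
    "the model was trained on news articles.",
    "ok",
    "Transcripts need speaker labels removed.",
]
print(clean_corpus(docs))
# ['The model was trained on news articles.', 'Transcripts need speaker labels removed.']
```

Near-duplicates (the same text with small edits) slip past this exact-match check; catching them typically requires fuzzy hashing or similarity search.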

- Annotate the Text for Entity Recognition

Now comes an important step: labeling the cleaned data with the right annotation techniques. This matters a great deal, because annotation quality directly affects how well your model learns and performs. Entity annotation, which identifies and labels entities in a text based on their semantic significance, is especially important. It covers names, dates, and other key information, making it easier to extract relevant data for further analysis or machine learning applications. Named entity recognition (NER) builds on this by classifying those entities into predefined categories such as people, locations, organizations, and dates. Together, entity annotation and NER improve NLP tasks by labeling entities accurately, which facilitates information extraction.
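Entity annotations are often recorded as character spans and then converted into per-token tags for training. The sketch below converts spans into the common BIO scheme (B- marks the first token of an entity, I- a following token, O a token outside any entity). The function name, the label names, and the simple regex tokenizer are assumptions for illustration; a real pipeline would normally reuse the model's own tokenizer.

```python
import re


def spans_to_bio(text, spans):
    """Convert character-offset entity spans into token-level BIO tags.

    spans: list of (start, end, label) tuples, end exclusive.
    Tokens are runs of word characters or single punctuation marks, a
    simplification of real tokenizers. A token gets a tag only if it lies
    entirely inside a span.
    """
    result = []
    for match in re.finditer(r"\w+|[^\w\s]", text):
        start, end = match.span()
        tag = "O"
        for s, e, label in spans:
            if start >= s and end <= e:
                # First token of the span gets B-, later tokens get I-.
                tag = ("B-" if start == s else "I-") + label
                break
        result.append((match.group(), tag))
    return result


sentence = "Marie Curie moved to Paris in 1891."
entity_spans = [(0, 11, "PERSON"), (21, 26, "LOC"), (30, 34, "DATE")]
print(spans_to_bio(sentence, entity_spans))
# [('Marie', 'B-PERSON'), ('Curie', 'I-PERSON'), ('moved', 'O'), ('to', 'O'),
#  ('Paris', 'B-LOC'), ('in', 'O'), ('1891', 'B-DATE'), ('.', 'O')]
```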

- Augment the Data

To make your dataset even better, you can introduce variations using methods like swapping synonyms or paraphrasing. This helps make your model more robust.
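Synonym swapping, one of the augmentation methods mentioned above, can be sketched as follows. The tiny `SYNONYMS` table is a hypothetical stand-in for a real thesaurus or paraphrase model, and `synonym_replace` is an illustrative name. Replacing one word with one word keeps the token count unchanged, so existing token-level labels stay aligned.

```python
import random

# Hypothetical, hand-written synonym table for illustration only.
SYNONYMS = {
    "quick": ["fast", "rapid"],
    "happy": ["glad", "pleased"],
    "buy": ["purchase"],
}


def synonym_replace(tokens, synonyms, p=0.3, rng=None):
    """Return a copy of tokens where each word that has synonyms is swapped
    for a randomly chosen synonym with probability p.

    Lookups are case-insensitive and replacements come back lowercase;
    restoring capitalization is left out for brevity.
    """
    rng = rng or random.Random()
    out = []
    for tok in tokens:
        options = synonyms.get(tok.lower())
        if options and rng.random() < p:
            out.append(rng.choice(options))
        else:
            out.append(tok)
    return out


rng = random.Random(0)  # seeded so the augmentation is reproducible
print(synonym_replace("I want to buy a quick snack".split(), SYNONYMS, p=1.0, rng=rng))
```

For entity-annotated data, it is usually safer to skip tokens inside entity spans, since swapping part of a name changes what the label refers to.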

- Split the Data

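Finally, divide your dataset into training, validation, and test sets. The model learns from the training set, the validation set guides choices such as hyperparameters, and the held-out test set gives a final estimate of how well the model performs on data it has never seen.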
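A reproducible split can be as simple as a seeded shuffle followed by slicing. This is a minimal sketch; the 80/10/10 default ratios and the function name `train_val_test_split` are assumptions for illustration, not fixed rules.

```python
import random


def train_val_test_split(items, val_frac=0.1, test_frac=0.1, seed=42):
    """Shuffle a copy of items and split it into train/validation/test lists."""
    if val_frac + test_frac >= 1:
        raise ValueError("val_frac + test_frac must be < 1")
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # fixed seed -> same split every run
    n = len(shuffled)
    n_test = int(n * test_frac)
    n_val = int(n * val_frac)
    test = shuffled[:n_test]
    val = shuffled[n_test:n_test + n_val]
    train = shuffled[n_test + n_val:]
    return train, val, test


train, val, test = train_val_test_split(range(100))
print(len(train), len(val), len(test))  # 80 10 10
```

One caveat worth considering: if augmented variants or near-duplicates of the same example land in both the training and test sets, test scores can look better than real-world performance. Splitting first and augmenting only the training set avoids this.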

Annotation Task Definition and Guidelines

Defining annotation tasks and establishing clear guidelines are essential steps in the text annotation process. These guidelines ensure consistency and accuracy, which are critical for the quality of the annotated data. The annotation guidelines should be created by a team familiar with the data and the intended use of the annotations. They must outline various cases, including common scenarios and potentially ambiguous situations that annotators might encounter. For each case, the guidelines should specify the appropriate actions to take. Additionally, the guidelines should detail the type of annotation required, the scope of the annotation task, and the expected output. By providing comprehensive and clear instructions, annotation guidelines help maintain uniformity and reliability across the annotation process.
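Parts of a guideline can be turned into automatic checks that run on every submitted annotation. The sketch below assumes a hypothetical label set and three example rules (labels must come from the agreed set, spans must not include surrounding whitespace, and entities must not overlap); your own guidelines may define different rules.

```python
# Hypothetical label set; in practice it comes from your annotation guidelines.
ALLOWED_LABELS = {"PERSON", "ORG", "LOC", "DATE"}


def validate_annotation(text, spans, allowed=ALLOWED_LABELS):
    """Return a list of guideline violations for one annotated example.

    spans is a list of (start, end, label) tuples with end exclusive.
    """
    problems = []
    ordered = sorted(spans)
    for start, end, label in ordered:
        if label not in allowed:
            problems.append(f"unknown label {label!r} at {start}-{end}")
        if not 0 <= start < end <= len(text):
            problems.append(f"invalid offsets {start}-{end}")
            continue
        surface = text[start:end]
        if surface != surface.strip():
            problems.append(f"span {start}-{end} has leading/trailing whitespace")
    # Assumed guideline rule: entities must not overlap.
    for i, (s1, e1, _) in enumerate(ordered):
        for s2, e2, _ in ordered[i + 1:]:
            if s2 < e1 and s1 < e2:
                problems.append(f"overlapping spans {s1}-{e1} and {s2}-{e2}")
    return problems


text = "Acme hired Ada in May."
print(validate_annotation(text, [(0, 4, "ORG"), (11, 14, "PERSON"), (18, 21, "DATE")]))  # []
print(validate_annotation(text, [(0, 5, "COMPANY"), (3, 8, "ORG")]))
```

Checks like these catch mechanical mistakes early; ambiguous cases still need the human judgment the guidelines describe.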

Quality Control and Best Practices

Ensuring that text annotations are accurate and consistent is paramount, which is where quality control and best practices come in. Quality control means checking annotations regularly, for example through periodic reviews and inter-annotator agreement checks, where two or more annotators label the same items independently and their agreement is measured. Best practices include using clear and concise language in guidelines and label definitions, avoiding ambiguity, and adhering to standardized annotation schemes. Annotation tools that streamline the workflow can also improve data quality. Following these practices and applying robust quality control produces high-quality annotated datasets, which in turn improve the performance of machine learning models.
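A common inter-annotator agreement measure for two annotators is Cohen's kappa, which corrects raw agreement for the agreement expected by chance: kappa = (p_o - p_e) / (1 - p_e). The sketch below computes it from scratch; the sentiment labels are made-up example data. scikit-learn also provides this metric as `sklearn.metrics.cohen_kappa_score`.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators labeling the same items.

    p_o is the observed agreement; p_e is the agreement expected by chance,
    computed from each annotator's own label frequencies.
    """
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two equal-length, non-empty label lists")
    n = len(labels_a)
    p_o = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    p_e = sum(freq_a[k] * freq_b[k] for k in freq_a) / (n * n)
    if p_e == 1:  # both annotators used one identical label throughout
        return 1.0
    return (p_o - p_e) / (1 - p_e)


annotator_1 = ["pos", "pos", "neg", "neg", "pos", "neu"]
annotator_2 = ["pos", "neg", "neg", "neg", "pos", "neu"]
print(round(cohens_kappa(annotator_1, annotator_2), 3))  # 0.739
```

Here the annotators agree on 5 of 6 items (p_o = 5/6), chance agreement is p_e = 13/36, and kappa = 17/23, noticeably lower than the raw 83% agreement.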

Challenges You Might Face

- Quality Control: Making sure your annotations are accurate and consistent.

- Handling Big Data: Managing large amounts of data without getting overwhelmed.

- Avoiding Bias: Keeping an eye out for biases in your data to prevent skewed results from your model.
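One simple, partial check for skew is to look at how labels are distributed across the dataset. The sketch below reports each label's share and flags labels below a threshold; the `min_share` value and function names are hypothetical. Label imbalance is only one easy-to-measure form of bias; demographic or topical bias in the underlying text needs other checks.

```python
from collections import Counter


def label_distribution(labels):
    """Return each label's share of the dataset, most common first."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {label: count / total for label, count in counts.most_common()}


def flag_imbalance(labels, min_share=0.1):
    """List labels whose share falls below min_share (a hypothetical threshold)."""
    return [label for label, share in label_distribution(labels).items() if share < min_share]


labels = ["positive"] * 70 + ["negative"] * 25 + ["neutral"] * 5
print(label_distribution(labels))  # {'positive': 0.7, 'negative': 0.25, 'neutral': 0.05}
print(flag_imbalance(labels))      # ['neutral']
```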

Tips for Effective Text Annotation Workflow

- Hire Skilled Annotators: People who know their stuff understand context and nuance better, which leads to higher-quality data.

- Use Advanced Tools: Annotation platforms make the process smoother; options on the market include UbiAI, Prodigy, and Labelbox.

- Set Up Quality Checks: Regular reviews and agreement checks among annotators help keep annotations consistent.

Use Cases of Text Annotation

Text annotation has a wide range of applications across various industries and domains. Here are some key use cases:

- Document Classification: Annotated text data is used to train models to classify documents into different categories based on their content. This is particularly useful in organizing large volumes of textual data.

- Sentiment Analysis: By annotating text with sentiment labels, we can train models to analyze the emotional tone of a text, which is valuable for understanding customer feedback and social media sentiment.

- Entity Recognition: Text annotation helps in identifying and labeling named entities such as names, locations, and organizations. This is important for tasks like information extraction and knowledge graph construction.

- Linguistic Annotation: This involves analyzing the structure and meaning of language, which is essential for developing advanced NLP applications like machine translation and speech recognition.

- Machine Learning Models: Annotated text data is fundamental for training various machine learning models to perform tasks such as text classification, sentiment analysis, and entity recognition. High-quality annotations lead to more accurate and reliable models.

By leveraging text annotation in these use cases, we can enhance the capabilities of NLP and ML models, enabling them to understand and process textual data more effectively.

Wrapping Up

Preparing datasets for training Large Language Models is a foundational step that can make or break your AI project. Text annotation is a key part of this process, turning raw data into something meaningful that machines can learn from. By following best practices and using innovative tools, we can boost the performance of LLMs and push the boundaries of what AI can do in understanding and generating human language.