As AI redefines everything from drug discovery to fraud prevention, organizations face a growing challenge: choosing the right storage architecture to support their AI ambitions. That’s because AI doesn’t just use data—it generates and consumes it at an unprecedented scale. Training and inference workloads feed on massive datasets, constantly ingesting, processing, and producing new information. From raw sensor data to labeled datasets, fine-tuning logs, and model checkpoints, the volume is staggering—and only increasing.

This creates a fundamental, ongoing challenge: how to store, access, and manage all this data efficiently and effectively. Whether you’re a CTO building out your infrastructure strategy, a data scientist training new models, or a cloud architect fine-tuning performance, storage is no longer an afterthought—it’s an essential foundation.

Today’s AI workloads come with demanding requirements for speed, scale, and reliability. And not all storage is created equal.

Different Workloads, Different Storage Needs

Data storage for AI workloads breaks down into two main categories, each with distinct characteristics:

1) Bulk Data Storage

This is storage for vast data lakes of unstructured or semi-structured information—from images and video footage to social media content and emails—that AI training workloads require. It resembles traditional enterprise storage architecture, with a combination of high-capacity SATA HDDs and faster NVMe SSDs. The SSDs here typically use TLC and QLC NAND, which offer a good balance of cost, performance, and endurance. Bulk storage offers easy scalability and can accommodate a wide range of different data types.

SSD Bulk Data Storage

There is an evolving trend in bulk storage to replace SATA HDDs with SSDs built on cost-optimized media. Instead of being marketed on NAND type and racecar performance, these drives are sold on rated performance and warranty. Performance and power are tuned down to match server network bandwidth, while individual volumes can be accelerated for faster rebuilds. As with HDDs, the underlying media is not explicitly advertised, and the datasheet focuses on capabilities. This opens a path for cost-optimized NAND and even full NAND recycling. The new SSD bulk storage drives improve space efficiency, reliability, and power efficiency, while reducing the data center carbon footprint through recycling and lower power usage.
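To see why tuning drive performance down to network bandwidth makes sense, divide a server's network bandwidth by the number of drives behind it. The sketch below does that arithmetic. The 100 Gb/s NIC and 24-drive chassis are illustrative assumptions rather than figures from any specific product, and protocol overhead is ignored.

```python
def per_drive_budget_gb_per_s(nic_gbit_per_s: float, drive_count: int) -> float:
    """Network bandwidth available to each drive, in decimal GB/s."""
    if drive_count <= 0:
        raise ValueError("drive_count must be positive")
    # Convert gigabits to gigabytes (8 bits per byte), then split evenly across drives.
    return nic_gbit_per_s / 8 / drive_count


# Hypothetical server: one 100 Gb/s NIC in front of 24 bulk-storage SSDs.
budget = per_drive_budget_gb_per_s(100, 24)
print(f"{budget:.2f} GB/s of network bandwidth per drive")
```

In this scenario each drive gets roughly 0.5 GB/s of network bandwidth, well below what many NVMe SSDs can read on their own, so the extra raw speed would sit unused on the network path. Tuning it down trades headroom the network cannot use for lower power.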

2) Direct GPU-Supported (DGS) Storage

DGS storage has emerged to meet today’s high-performance demands. It supports inference, the process of putting trained models to work, which calls for high speed and high IOPS. These workloads need ultra-fast, low-latency access to data to keep every GPU fully utilized, so DGS storage relies heavily on NVMe SSDs that can saturate PCIe bandwidth with small I/O, which better matches how GPUs consume data. Sustaining those IOPS requires a new class of storage controller with more compute power.
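Small I/O is what makes saturating PCIe demanding: the smaller each request, the more requests per second the drive and its controller must handle to fill the same link. The sketch below does that arithmetic. It assumes roughly 14 GB/s of usable bandwidth on a PCIe Gen5 x4 link, an illustrative figure rather than a specification value, and uses decimal gigabytes.

```python
def iops_to_saturate(link_gb_per_s: float, io_size_bytes: int) -> float:
    """IOPS needed to fill a link of link_gb_per_s (decimal GB/s) with fixed-size requests."""
    if io_size_bytes <= 0:
        raise ValueError("io_size_bytes must be positive")
    return link_gb_per_s * 1e9 / io_size_bytes


# Assumption: ~14 GB/s usable on a PCIe Gen5 x4 link (illustrative, not a spec value).
USABLE_LINK_GB_PER_S = 14.0

for size in (4 * 1024, 128 * 1024):
    iops = iops_to_saturate(USABLE_LINK_GB_PER_S, size)
    print(f"{size // 1024:>4} KiB requests -> {iops:,.0f} IOPS")
```

Under that assumption, filling the link takes about 3.4 million IOPS with 4 KiB requests but only about 107 thousand with 128 KiB requests, which is why small-I/O workloads call for controllers with more compute.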

In DGS storage, data travels directly between storage and GPU memory, bypassing the CPU and its system memory on the data path. Besides making AI inference faster by cutting latency and boosting throughput, these direct paths avoid the bandwidth limits of the CPU’s DDR memory, which would otherwise have to stage every transfer, so effective bandwidth increases. They also free CPU resources that would otherwise be spent buffering data, making the whole system more efficient.
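The bandwidth benefit of the direct path can be illustrated with a deliberately simplified model. When data is staged through a CPU bounce buffer, each byte is written into host DRAM by the SSD and then read back out by the GPU, so host memory carries twice the storage throughput. On a direct path, the payload is assumed not to touch host DRAM at all. The function name and the 50 GB/s figure are hypothetical, and the model ignores caching, metadata, and control traffic.

```python
def dram_traffic_gb_per_s(storage_gb_per_s: float, bounce_buffer: bool) -> float:
    """Simplified host-DRAM traffic caused by storage-to-GPU transfers.

    With a bounce buffer, every byte crosses host DRAM twice (SSD write in,
    GPU read out). On a direct path the payload is assumed to skip host DRAM.
    """
    return 2 * storage_gb_per_s if bounce_buffer else 0.0


# Hypothetical server streaming 50 GB/s from NVMe SSDs to its GPUs.
for bounce in (True, False):
    traffic = dram_traffic_gb_per_s(50, bounce)
    print(f"bounce_buffer={bounce}: {traffic} GB/s of host DRAM traffic")
```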

The key metrics for DGS storage are IOPS, latency, and endurance, with performance turned up to 11. To maximize those capabilities, these drives use Storage Class Memory (SCM) or SLC NAND, including optimized SLC such as XL-Flash or Z-NAND, for superior performance, endurance, and reliability.

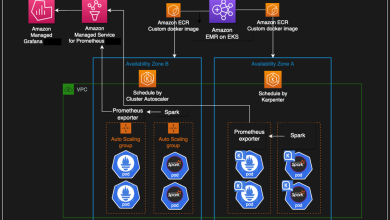

What’s Available in the Cloud?

For organizations wanting to move AI workloads to the cloud, public cloud providers offer a wide range of storage services—each serving a different piece of the AI pipeline:

- Object storage (e.g., Amazon S3, Azure Blob) – Ideal for storing large volumes of data, object storage is highly scalable and cost-effective. It’s commonly used to store training datasets, model checkpoints, and logs. However, it comes with higher latency, which makes it less suitable for inference workloads.

- Block storage (e.g., Amazon EBS, Google Persistent Disk) – Block storage offers fast, low-latency access, which is critical during model training and inference. It’s more expensive than object storage, but the performance gain is often worth the cost for high-value workloads.

- File storage (e.g., Amazon EFS, Azure Files) – File-based systems support shared access across distributed systems and offer high throughput. They’re useful in collaborative environments, especially during training phases, though they require more complex orchestration.

The major cloud providers also increasingly support hybrid architectures and GPU-optimized infrastructure, allowing organizations to tailor their storage mix more precisely to AI needs.

Matching Storage to Workload in the Cloud

AI processing in the cloud often involves a blend of storage types. For both training and inference, performance hinges on one metric: GPU utilization. With GPU memory and network bandwidth already pushed to their limits in many environments, storage often becomes the primary bottleneck, leaving GPUs waiting for data. That’s why organizations are moving toward AI-optimized SSDs, especially for inference pipelines. These devices serve small I/O requests with extremely low latency.
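The link between storage and GPU utilization can be made concrete with a back-of-the-envelope model: multiply the number of GPUs by the samples each consumes per second and by the sample size to get the read throughput storage must sustain. The sketch below assumes that, when storage is the only bottleneck, utilization falls in proportion to the shortfall. The 8-GPU, 2,000 samples/s, 500 KB workload is hypothetical.

```python
def required_read_gb_per_s(num_gpus: int, samples_per_s_per_gpu: float,
                           bytes_per_sample: float) -> float:
    """Aggregate read throughput (decimal GB/s) needed so no GPU waits on storage."""
    return num_gpus * samples_per_s_per_gpu * bytes_per_sample / 1e9


def gpu_utilization_cap(storage_gb_per_s: float, required_gb_per_s: float) -> float:
    """Upper bound on GPU utilization, assuming storage is the only bottleneck."""
    return min(1.0, storage_gb_per_s / required_gb_per_s)


# Hypothetical cluster: 8 GPUs, each consuming 2,000 samples/s of 500 KB each.
need = required_read_gb_per_s(8, 2_000, 500_000)
print(f"storage must deliver {need:.1f} GB/s")
print(f"utilization cap with 5 GB/s of storage: {gpu_utilization_cap(5.0, need):.1%}")
```

In this example the cluster needs 8 GB/s, so a storage tier delivering 5 GB/s would cap the GPUs at 62.5% busy regardless of how fast they are.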

What About On-Prem?

For organizations running AI workloads on-premises, the right storage strategy often depends on scale and budget.

- Larger deployments typically mirror cloud environments, using dedicated NVMe SSDs and high-throughput storage networks such as SANs to support training and inference.

- Smaller deployments might use a mix of local SSDs and tiered storage—combining high-speed disks for active processing with lower-cost options for archival or bulk data.

- Eco-friendly deployments use HDD replacement SSDs for bulk data. Compared with traditional HDDs, these SSDs offer substantial savings in total cost (server count, racks, cooling, power, etc.), space, and environmental impact. This new class of SSD can outlast HDDs by up to 5x, because HDDs are typically rated for a workload of 550 TB/year of combined reads and writes. These SSDs also shrink the server footprint through higher density. Normally, higher density reduces total server bandwidth because fewer drives share the load, but these SSDs are not limited by SATA bandwidth and can scale their performance up as density increases.
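The 550 TB/year workload rating is easier to reason about as a sustained rate. The sketch below converts an annual workload figure into an average throughput and compares a workload against the rating. The 2,750 TB/year workload is an illustrative assumption chosen to show a 5x overrun, not measured data.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600
HDD_WORKLOAD_TB_PER_YEAR = 550  # typical enterprise HDD workload rating (reads + writes)


def avg_mb_per_s(tb_per_year: float) -> float:
    """Average sustained rate (decimal MB/s) implied by an annual workload figure."""
    return tb_per_year * 1e12 / SECONDS_PER_YEAR / 1e6


def workload_ratio(workload_tb_per_year: float,
                   rating_tb_per_year: float = HDD_WORKLOAD_TB_PER_YEAR) -> float:
    """How many times a workload exceeds (>1) or fits within (<1) a drive's rating."""
    return workload_tb_per_year / rating_tb_per_year


print(f"550 TB/year averages out to {avg_mb_per_s(550):.1f} MB/s")
# Hypothetical bulk-data drive that sees 2,750 TB/year of reads and writes.
print(f"workload is {workload_ratio(2_750):.1f}x the HDD rating")
```

An HDD that can stream well over 200 MB/s sequentially is therefore rated for an average of only about 17 MB/s across a year, so workloads that read bulk data over and over can exceed the rating long before the drive's raw speed becomes the limit.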

Block storage via NVMe SSDs or SANs remains the best choice for high-performance training and inference, where low latency and high IOPS are critical. File and object storage, such as NFS, Ceph, or on-premises S3-compatible systems, are better suited to storing large datasets and supporting distributed training workloads. Open-source tools such as Ceph, along with S3-compatible systems in general, provide this flexibility without cloud lock-in.

Weighing the Pros and Cons

Every storage type has tradeoffs. Here’s a quick breakdown:

| Storage Type | Pros | Cons |
| --- | --- | --- |
| Object storage | Scalable, low-cost, good for archival and training data | Higher latency, not ideal for inference |
| Block storage | High IOPS, low latency, great for inference | More expensive, less scalable |
| File storage | Shared access, good for collaboration | Complex to manage at scale |
| AI-optimized SSDs | Extreme performance, long endurance | Higher cost, but lower TCO over time due to endurance |
| HDD replacement SSDs | Higher density, faster rebuild, and long endurance | Higher initial cost, but lower TCO due to power efficiency, server count reduction, and SSD endurance |

Designing for the Next Wave of AI

As AI workloads evolve, so too must storage strategies. The ideal infrastructure isn’t one-size-fits-all—it’s layered, workload-aware, and tuned to the needs of both training and inference. Organizations should consider not just current performance needs, but also long-term durability, scalability, and total cost of ownership.

With a new class of AI-optimized SSDs and HDD replacement SSDs emerging and cloud offerings becoming more specialized, the future of AI storage will be faster, smarter, and more dynamic. For enterprises building the next generation of intelligent systems, the right storage foundation might just be the difference between fast insights—and frustrating delays.