Amit Kumar Ray has witnessed the transformation of artificial intelligence from academic curiosity to business imperative over the last two decades. As a Senior Principal Architect in cybersecurity, he sits at the convergence of network security, cloud infrastructure, and AI innovation; this vantage point lends itself to unique insights into how enterprises are reshaping themselves around intelligent systems.

Amit’s career spans the evolution of modern tech infrastructure, from his early days as a software engineer at Cisco through leadership roles at Amazon, Brocade, and Extreme Networks. With patents in AI and networking technologies, recent professional education from MIT, and hands-on experience architecting scalable systems on AWS and GCP, he represents a new generation of technical leaders who understand both the promise and practical challenges of enterprise AI adoption.

In cybersecurity, where threats evolve by the hour and traditional signature-based detection falls short, Amit has been instrumental in developing AI-driven approaches that can adapt in real time. His work focuses on moving beyond reactive security models toward systems that can predict, prevent, and autonomously respond to emerging threats. This shift mirrors the broader transformation happening across enterprise technology.

In this conversation, Amit looks at the moments that shaped modern AI adoption, shares insights on designing resilient AI architectures that can scale from pilot to production, and offers his perspective on helping organizations transition from “AI-curious” to genuinely “AI-capable.” For technical leaders navigating their own AI transformations, this dialogue provides both strategic vision and practical guidance on building the infrastructure that will define the next decade of enterprise computing.

Q: Over your 20+ years in tech at companies like Cisco, Amazon, and Palo Alto Networks, how have you seen artificial intelligence evolve from a research concept to a practical business necessity? What was the turning point?

The concept of AI existed even in the early 2000s, but its scope was largely limited to academic research and there was no significant business adoption. What did exist in industry at the time was mostly intelligent automation. Around 2015, I saw a major shift as online retailers like Amazon became increasingly popular. Amazon quickly started using machine learning algorithms such as clustering and collaborative filtering for personalized recommendations. Around the same time, other businesses began using similar algorithms for demand forecasting, fraud detection, and so on. The turning point came in 2017 with the paper “Attention Is All You Need” (Vaswani et al., 2017), which transformed the field of AI, particularly natural language processing (NLP). It introduced the transformer, an encoder-decoder architecture built on attention. This laid the foundation for the large language models that exist today, such as GPT and BERT. Now, in 2025, we are in an era where businesses are striving toward AI-driven architectures. AI is no longer a separate application; rather, AI capabilities are built into the product itself.

Q: As a Senior Principal Architect at Palo Alto Networks, you’re at the intersection of cybersecurity and AI. How is artificial intelligence fundamentally changing how we approach threat detection and network security? What capabilities exist today that were impossible five years ago?

Cybersecurity is going through a complete paradigm shift, and for good reason. AI has opened the door to adaptive, real-time, context-aware threat detection, in place of signature-based rules that were largely static and required reactive, manual triage. AI-driven architectures have also helped incident response move from static playbooks to dynamic response generation: an AI-centric approach can generate remediation actions from the threat context and historical resolutions. Another area that has seen significant progress from large-scale AI adoption is cloud and IoT security. Earlier, tracking cloud workloads and massive volumes of IoT transactions was really hard. With the help of AI, cybersecurity solutions can continuously monitor containers, APIs, and devices.
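To make the contrast between static signatures and adaptive detection concrete, here is a minimal sketch. It is an illustration only, not a description of any product discussed in this interview: the signature list, the traffic numbers, and the z-score threshold are all assumptions, and a rolling statistical baseline stands in for the far richer models used in practice.

```python
from statistics import mean, stdev

# Hypothetical signature list: exact names already known to be malicious.
KNOWN_BAD_SIGNATURES = {"evil.exe", "dropper.bin"}


def signature_match(process_name: str) -> bool:
    """Static rule: flags only what has been seen and catalogued before."""
    return process_name in KNOWN_BAD_SIGNATURES


def is_anomalous(history: list[float], value: float, threshold: float = 3.0) -> bool:
    """Adaptive rule: flag a value far outside this host's own recent baseline."""
    if len(history) < 2:
        return False  # not enough data to form a baseline
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return value != mu
    return abs(value - mu) / sigma > threshold


# Outbound megabytes per minute for one host (made-up numbers).
baseline = [10.0, 12.0, 11.0, 9.0, 10.0, 11.0]

print(signature_match("renamed_tool.exe"))  # unknown name, so the static rule misses it
print(is_anomalous(baseline, 250.0))        # a large spike stands out from the baseline
print(is_anomalous(baseline, 11.5))         # ordinary traffic is not flagged
```

The static check can only recognize names it already knows, while the baseline check adapts to whatever is normal for a given host.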

Q: You have patents in AI and networking technologies. Can you walk us through how you identify problems worth patenting and translate complex technical solutions into intellectual property that drives industry forward?

Step 1: Identify the problems: In our day-to-day work, we are surrounded by problems that are often pain points and potential architectural gaps. Some stem from old, outdated architectures or tools, and some are simply the result of bad architectural choices. Identifying such areas is the first step toward finding a patent-worthy problem.

Step 2: Frame many smaller problems in a bigger vision: Once we find a few isolated problems, the next step is to bind them together into a problem statement that aims to solve a larger problem holistically. For example, IP address management in an organization might have various challenges. The existing architecture might not allocate and release IP addresses efficiently, and hence cost the company a lot of money. Architecting an efficient IP address management (IPAM) system that solves all these problems holistically might be a grand vision to aim for.

Step 3: Aim for an innovative solution: Don’t just rush to a solution and patent it. A patent that is just a dump of buzzwords without any practical claim often gets rejected. A patent should always propose an innovative solution that is an asset to the company. The proposed solution might solve just one problem, but it should do so in an innovative way. For example, while designing a solution to optimize the cost of IP management, we should also think about how it can improve customer experience, how it can solve other IP address-related problems inside the organization, how to monitor and alert when IP addresses are exhausted, and so on.

Step 4: Tell a story: When you find an innovative solution, document it as if you are telling a technical story. Explain the architecture with diagrams, flow charts, and similar visuals that are easy to understand. If you are solving a very complicated problem, break it down into smaller problems and document each one.
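The IP address management example used in Steps 2 and 3 (allocate, release, and alert on exhaustion) can be sketched roughly as follows. This is a toy, in-memory illustration built on Python's standard `ipaddress` module; the `SimpleIpam` class, its method names, and the 80% alert threshold are hypothetical and do not describe any patented design.

```python
import ipaddress


class SimpleIpam:
    """Toy in-memory IP address manager for a single subnet (illustrative only)."""

    def __init__(self, cidr: str, alert_threshold: float = 0.8):
        self.network = ipaddress.ip_network(cidr)
        self.free = list(self.network.hosts())  # usable host addresses
        self.total = len(self.free)
        self.allocated = {}                      # owner -> address
        self.alert_threshold = alert_threshold

    def allocate(self, owner: str):
        """Hand out the next free address, or return the owner's existing one."""
        if owner in self.allocated:
            return self.allocated[owner]
        if not self.free:
            raise RuntimeError(f"subnet {self.network} is exhausted")
        address = self.free.pop(0)
        self.allocated[owner] = address
        return address

    def release(self, owner: str) -> None:
        """Return an owner's address to the pool so it can be reused."""
        address = self.allocated.pop(owner, None)
        if address is not None:
            self.free.append(address)

    def utilization(self) -> float:
        return len(self.allocated) / self.total

    def needs_alert(self) -> bool:
        """True once utilization reaches the alert threshold."""
        return self.utilization() >= self.alert_threshold


ipam = SimpleIpam("10.0.0.0/29")  # a /29 has 6 usable host addresses
for name in ["web-1", "web-2", "db-1", "cache-1", "job-1"]:
    ipam.allocate(name)
print(ipam.utilization(), ipam.needs_alert())  # 5 of 6 in use, above the threshold

ipam.release("job-1")
print(ipam.utilization(), ipam.needs_alert())  # 4 of 6 in use, below the threshold
```

Releasing unused addresses promptly is what keeps the pool below the alert threshold, which is the cost and exhaustion concern the example raises.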

Q: When designing AI systems for enterprise-scale deployment on platforms like AWS and GCP, what are the biggest architectural challenges that most organizations overlook? How do you ensure these systems can actually scale in the real world?

One of the biggest architectural challenges is ensuring data privacy. Any AI system needs access to data, and data often contains sensitive information. How much of that data we can share with LLMs needs to be well thought out and should be part of the architecture. Another important aspect that architectures often miss is resiliency. Most services from cloud providers like AWS or GCP are highly resilient, but they can still fail. Services like databases and message buses are especially critical: if the architecture depends on a single cloud provider and that provider fails, the entire architecture fails. This is where disaster recovery comes into play. Every architecture should identify its failure points and build resiliency around them.
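One simple way to build resiliency around a failure point is to fall back to a secondary provider when the primary fails. The sketch below illustrates that idea only; `call_with_failover`, `primary_bus`, and `secondary_bus` are hypothetical names, and the two functions are stand-ins rather than real cloud SDK calls.

```python
from typing import Callable, Sequence

Provider = tuple[str, Callable[[str], str]]


def call_with_failover(providers: Sequence[Provider], payload: str) -> tuple[str, str]:
    """Try each provider in order; return (name, result) from the first that succeeds."""
    errors = []
    for name, send in providers:
        try:
            return name, send(payload)
        except Exception as exc:  # real code should catch the provider's specific errors
            errors.append(f"{name}: {exc}")
    raise RuntimeError("all providers failed: " + "; ".join(errors))


# Hypothetical stand-ins for a primary and a secondary message bus.
def primary_bus(message: str) -> str:
    raise ConnectionError("primary region unavailable")


def secondary_bus(message: str) -> str:
    return f"queued:{message}"


used, result = call_with_failover(
    [("primary", primary_bus), ("secondary", secondary_bus)],
    "event-42",
)
print(used, result)  # secondary queued:event-42
```

A real disaster recovery design also has to handle data replication and failback, which this sketch deliberately leaves out.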

Q: There’s often a gap between ML models that work in labs and those that perform reliably in production environments. Based on your experience, what separates successful AI implementations from failed ones?

In a lab, we generally tend to focus on model accuracy. But model accuracy is just one attribute of a successful enterprise AI application. The following are some other critical attributes that are equally important:

- Data quality and integrity – A model performs only as well as its data. Ensuring that clean, high-quality data is fed into the AI system is critical to production-grade AI.

- Business KPI – Just deploying an AI application is not good enough unless it is tied to a business objective.

- High-performance data ingestion pipeline – In a production system, data changes all the time. Hence, having a quality data pipeline that feeds the updated data to the model is critical.

- Privacy – In an enterprise AI application, privacy is a must. Sensitive information should not be fed to a model. Also, AI applications should meet regulatory requirements like GDPR or HIPAA.
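As an illustration of the privacy point, one common pattern is to redact sensitive values before text ever reaches a model. The following is a minimal sketch under stated assumptions: the two regular expressions are simplified examples, the `redact` helper is hypothetical, and real compliance work needs far broader detection than this.

```python
import re

# Simplified, illustrative patterns; real detection needs much wider coverage.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact(text: str) -> str:
    """Replace each matched sensitive value with a typed placeholder."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


prompt = "Summarize the ticket from jane.doe@example.com, SSN 123-45-6789."
print(redact(prompt))  # Summarize the ticket from [EMAIL], SSN [SSN].
```

Keeping the redaction step in the ingestion pipeline, rather than in each application, means every model downstream sees the same sanitized data.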

Q: AI applications today depend heavily on distributed systems and database management. How do you approach designing these underlying infrastructures to support AI workloads that traditional systems weren’t built for?

This depends on the type of AI application. But in general, AI workloads differ significantly from traditional ones because they require intensive compute infrastructure and high-throughput data ingestion.

Cloud providers like AWS and GCP already provide various tools and services to support AI workloads. Hence, production systems deployed in those cloud environments can integrate with these tools and services to handle such workloads.

For data ingestion and streaming, services like Amazon Kinesis and Google Cloud Pub/Sub are well suited to handling AI workloads. For self-hosted architectures, Apache Beam is a good solution.

For the model deployment layer, ready-made services like Vertex AI and Amazon SageMaker can get us going quickly. Model chaining is an important aspect to consider while building AI infrastructure. In model chaining, instead of directing all AI workflows to one model, models are chained so that the output of one is fed to another for better accuracy. For example, Model A can describe an image as text, and that text is then fed to Model B, which generates the next set of instructions. LangChain provides a programming framework for building applications that use model chaining.
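The Model A to Model B example can be sketched in plain Python, without assuming any particular framework's API. The `chain` helper and both model functions below are hypothetical stand-ins; in a real system each would wrap a call to a hosted model endpoint.

```python
from typing import Callable

Model = Callable[[str], str]


def chain(*models: Model) -> Model:
    """Compose models so each one's output becomes the next one's input."""
    def run(data: str) -> str:
        for model in models:
            data = model(data)
        return data
    return run


# Hypothetical stand-ins; real models would be calls to hosted endpoints.
def model_a_describe_image(image_ref: str) -> str:
    return f"a photo of a stop sign (from {image_ref})"


def model_b_next_instruction(description: str) -> str:
    return "brake" if "stop sign" in description else "continue"


pipeline = chain(model_a_describe_image, model_b_next_instruction)
print(pipeline("frame_001.png"))  # brake
```

Because each stage only sees the previous stage's output, a single model can be swapped out without touching the rest of the chain.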

The database is another important aspect of handling AI-based workloads. Traditional databases are built for structured, exact-match queries, whereas AI workloads demand semantic similarity. A vector database enables AI applications to store and query vector data that captures the semantic meaning of formats such as images and audio.
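To show how a similarity query differs from an exact-match lookup, here is a minimal brute-force sketch using cosine similarity. The three-dimensional embeddings are made up for illustration, and `nearest` is a hypothetical helper; production vector databases use learned embeddings with hundreds of dimensions and approximate indexes rather than a full scan.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors; 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def nearest(query: list[float], index: dict[str, list[float]], k: int = 1) -> list[str]:
    """Rank stored items by similarity to the query (brute-force scan)."""
    ranked = sorted(
        index.items(),
        key=lambda item: cosine_similarity(query, item[1]),
        reverse=True,
    )
    return [name for name, _ in ranked[:k]]


# Made-up embeddings; a real system would get these from an embedding model.
index = {
    "cat photo": [0.9, 0.1, 0.0],
    "dog photo": [0.8, 0.3, 0.1],
    "invoice pdf": [0.0, 0.2, 0.9],
}

print(nearest([1.0, 0.0, 0.0], index, k=2))  # ['cat photo', 'dog photo']
```

The query vector matches no stored item exactly, yet the two semantically closest items are still returned, which an exact-match index cannot do.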

Q: Looking at your current MIT professional education in AI alongside your decades of industry experience, which industries do you think are most underestimating AI’s disruptive potential? Where do you see the biggest opportunities for transformation?

In my opinion, education is one of the fields that needs a significant uplift to include an AI-first learning curriculum rather than static content. The education sector should focus on creating personalized learning paths using AI. The AI-based teaching assistant should consider various attributes, including students’ focus area, level of understanding, absorption capacity, motivation, stress, and anxiety, and generate a unique curriculum based on these attributes.

Q: As someone who has progressed from individual contributor to principal architect, how do you balance staying technically current with AI developments while leading teams and making strategic architectural decisions?

As an architect, my work mostly requires me to solve complex technical problems and execute projects across various team boundaries. My role also enables me to interface with external companies for various technical adoptions. So, I need to focus on breadth over depth. But in every project, I try to pick up some of the areas for development. This approach not only helps me stay hands-on but also helps me connect well with the team members. I also keep my learning time separate, where I identify key areas in the field of AI and attend various training, seminars, and certification courses. My recent professional certification from MIT is a good example of that.

Q: Many companies are struggling with where to start their AI journey. Based on your experience architecting systems for major enterprises, what’s your framework for helping organizations move from “AI-curious” to “AI-capable”?

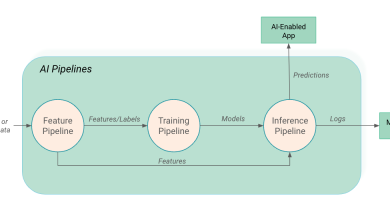

Transitioning from “AI-curious” to “AI-capable” is a significant step for any organization. Most organizations get stuck in pilot purgatory. They often struggle to frame an AI story and identify where they want to use AI – in internal applications for operational efficiency or in AI-capable products for revenue generation. Organizations going through this transition should start with internal AI adoption. This helps move the organization to the AI-Ready phase, where it comes to understand the importance of data quality and removes technical blockers. The next step is the AI-Pilot stage, where the organization maps its internal AI learning to its products but enables it only for a select set of customers. The last step is the AI-Capable stage, where the organization implements MLOps and builds production-ready, AI-enabled products.

Q: Given the rapid pace of AI advancement, how do you design systems and architectures today that won’t become obsolete as AI capabilities continue to evolve? What principles guide your long-term thinking?

Given the rapid evolution of AI technologies, we can’t future-proof every AI-capable tool and application. But the framework we use in our architecture can balance agility and durability, so some architectural choices are critical. They are:

- Use modular services instead of monolithic architecture.

- Use standard protocols like the Model Context Protocol (MCP) for communicating with the model.

- Use model chaining to segregate various AI workloads to different models.

- Abstract the AI interfaces using standard tools like LangChain.

- Invest in data-centric architecture, because any AI framework depends, and always will depend, on the quality of data.
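The modularity and abstraction principles in this list can be illustrated with a small interface sketch. It assumes nothing about LangChain or MCP themselves: `TextModel`, `EchoModel`, `UppercaseModel`, and `summarize_alert` are hypothetical names, and the two model classes are trivial stand-ins for real providers.

```python
from typing import Protocol


class TextModel(Protocol):
    """The only surface the application depends on."""

    def generate(self, prompt: str) -> str: ...


class EchoModel:
    """Hypothetical stand-in for the provider in use today."""

    def generate(self, prompt: str) -> str:
        return f"echo: {prompt}"


class UppercaseModel:
    """Hypothetical stand-in for a replacement adopted later."""

    def generate(self, prompt: str) -> str:
        return prompt.upper()


def summarize_alert(model: TextModel, alert: str) -> str:
    """Application logic is written once, against the interface."""
    return model.generate(f"Summarize: {alert}")


print(summarize_alert(EchoModel(), "port scan detected"))
print(summarize_alert(UppercaseModel(), "port scan detected"))
```

Because `summarize_alert` depends only on the interface, replacing the underlying model is a one-line change at the call site rather than a rewrite of the application.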