The development of quantum mechanics 100 years ago sparked a first quantum revolution, which led to numerous technical innovations that we rely on today. From the semiconductors that modern electronics are built upon, to lasers and MRI machines, our understanding of quantum mechanics has already transformed society.

While AI has been grabbing all the headlines lately, a second wave of innovation in quantum technologies has been quietly ramping up in the background. Quantum technologies now stand poised to break through into the wider public consciousness as this explosion in research and development kick-starts a new quantum revolution.

A major sector in quantum is quantum computing, which has the potential to solve mathematical problems that are intractable on classical computers and promises to supercharge the possibilities offered by AI. This could provide huge benefits in a range of areas as diverse as healthcare, advanced materials, finance, logistics and aerospace.

The theoretical advantages of quantum computing have been known for a while. Classical computers operate on bits that take a discrete value of 0 or 1, and that can be read out in a deterministic manner. Quantum computers instead operate on quantum bits, or “qubits”, defined by state vectors that can vary continuously between the 0 state and the 1 state. A state of a qubit can thus be considered as a linear combination of the 0 and 1 states, or a “superposition”. Measuring a qubit collapses the state of the qubit to a value of 0 or 1 in a probabilistic rather than deterministic way. For a qubit in an equal superposition of a 0 state and a 1 state, there is a 50:50 chance of measuring the state of the qubit as 0 or as 1. These properties enable quantum computers to offer substantial increases in computing power compared to classical computers for certain problems.
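The probabilistic read-out described above can be illustrated with a short classical simulation. This is only a sketch, assuming the standard textbook description in which a qubit state is written as alpha|0> + beta|1> and the probability of each outcome is the squared magnitude of its amplitude. The function names are hypothetical and chosen for illustration.

```python
import math
import random

def measurement_probabilities(alpha, beta):
    """Return (P(0), P(1)) for the state alpha|0> + beta|1>."""
    norm = abs(alpha) ** 2 + abs(beta) ** 2  # normalise in case the inputs are not
    return abs(alpha) ** 2 / norm, abs(beta) ** 2 / norm

def measure(alpha, beta, rng):
    """Simulate one measurement: the outcome is 0 or 1, chosen at random."""
    p0, _ = measurement_probabilities(alpha, beta)
    return 0 if rng.random() < p0 else 1

# Equal superposition: both amplitudes are 1/sqrt(2).
amp = 1 / math.sqrt(2)
p0, p1 = measurement_probabilities(amp, amp)

rng = random.Random(0)  # fixed seed so the run is repeatable
shots = 10_000
ones = sum(measure(amp, amp, rng) for _ in range(shots))
print(f"P(0)={p0:.2f}, P(1)={p1:.2f}, observed fraction of 1s={ones / shots:.3f}")
```

Each individual measurement returns a definite 0 or 1. The 50:50 split only becomes visible over many repeated runs.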

An early indication of the power of quantum computing came from Shor, who showed in 1994 that, theoretically, quantum computers could exponentially speed up the ability to find prime factors of a number. This has huge implications for data security: if successfully implemented on a quantum computer, Shor’s algorithm could be used to break public-key cryptography schemes, which rely on the fact that factorisation of large numbers into prime numbers is infeasible on a classical computer.
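To give a feel for why factoring is a good target, the sketch below shows the classical number-theory wrapper around Shor's algorithm, which reduces factoring a number to finding the "order" of a randomly chosen base. Here the order is found by brute force, which is the slow step that a quantum computer would accelerate. The function names and the toy inputs (15 and 7) are illustrative assumptions only.

```python
from math import gcd

def find_order(a, n):
    """Smallest r > 0 with a**r % n == 1, found by brute force.

    This is the step that Shor's algorithm would perform on a quantum computer.
    Requires gcd(a, n) == 1, otherwise no such r exists.
    """
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r

def factor_from_order(a, n):
    """Try to split n using the order of a modulo n.

    Returns a pair of factors, or None if this choice of a is unlucky.
    """
    shared = gcd(a, n)
    if shared != 1:
        return shared, n // shared  # a already shares a factor with n
    r = find_order(a, n)
    if r % 2 == 1:
        return None  # an odd order gives no square root to work with
    half = pow(a, r // 2, n)
    if half == n - 1:
        return None  # trivial square root of 1, try another a
    p = gcd(half - 1, n)
    return p, n // p

print(find_order(7, 15), factor_from_order(7, 15))
```

For numbers of the size used in real cryptography, the brute-force loop in `find_order` would take an infeasible amount of time, which is exactly the assumption that public-key schemes rely on.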

Shor’s result was followed by Grover’s 1996 discovery of a quantum search algorithm that finds an item in an unstructured collection quadratically faster than is possible with classical computers. The same speed-up applies to brute-force searches for solutions to so-called NP-complete problems (which are a widely arising set of problems for which a solution is difficult to find but easy to verify). Grover’s algorithm has significant potential for solving unstructured search problems, such as those arising in data retrieval or cryptography.
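The quadratic speed-up can be seen in a small classical simulation of Grover's algorithm. This is a minimal sketch that tracks the amplitude of each item in a plain list, assuming a single marked item and the usual choice of roughly (pi/4) x sqrt(N) iterations. The function name and the toy problem size are illustrative assumptions.

```python
import math

def grover_search(n_items, marked):
    """Simulate Grover's algorithm on a list of amplitudes.

    Returns the number of iterations used and the final measurement
    probability of every item.
    """
    # Start in an equal superposition over all items.
    amplitudes = [1 / math.sqrt(n_items)] * n_items
    # Roughly (pi/4) * sqrt(N) iterations are needed.
    iterations = math.floor(math.pi / 4 * math.sqrt(n_items))
    for _ in range(iterations):
        # Oracle: flip the sign of the marked item's amplitude.
        amplitudes[marked] = -amplitudes[marked]
        # Diffusion: reflect every amplitude about the mean.
        mean = sum(amplitudes) / n_items
        amplitudes = [2 * mean - a for a in amplitudes]
    return iterations, [a * a for a in amplitudes]

iterations, probabilities = grover_search(n_items=8, marked=5)
print(iterations, round(probabilities[5], 3))
```

With 8 items, two iterations raise the chance of measuring the marked item from 1 in 8 to about 95%, whereas a classical search would need to check around half of the items on average.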

While the promise of quantum computing is well known, an ongoing challenge has been to build a quantum computer with enough qubits and with sufficient fault tolerance that it can actually achieve these advantages in practice. Today’s qubits are delicate and highly susceptible to errors caused by decoherence and noise. These errors have tended to grow with the number of qubits, which has limited the benefits achieved by quantum computers in practice.

A huge amount of work is being done on developing fault-tolerant quantum computers, for which errors can be detected and corrected in real time and at scale. Current approaches are centred on quantum error correction (QEC), in which multiple physical qubits are entangled and used as a single logical qubit. In an entangled system, the state of a qubit depends on the state of the qubits with which it is entangled, even when the qubits are separated by huge distances. By distributing the quantum information to be processed across multiple entangled qubits within a logical qubit, the state of the logical qubit is more robust to noise. However, noise can still occur. As measuring a qubit causes the state of the qubit to collapse, it is not possible to simply measure an individual qubit to ascertain whether it has suffered from an error without destroying the information carried by that qubit. Instead, QEC techniques involve indirectly inferring errors in data qubits of the logical qubit by measuring the state of ancilla qubits that have interacted with the data qubits. The measurements of the ancilla qubits can be processed using classical computers to ascertain if the data qubits need to be manipulated to correct for errors, and the data qubits can then be manipulated as needed.
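The idea of inferring errors from ancilla measurements can be sketched with the textbook three-qubit bit-flip code. This is a deliberately simplified illustration and not the scheme used on any particular device: it assumes that only bit-flip errors occur, it tracks a basis state using ordinary bits, and all names are hypothetical. The two parity checks stand in for ancilla measurements, revealing where an error sits without revealing the encoded value.

```python
import random

# Syndrome (parity of qubits 0 and 1, parity of qubits 1 and 2)
# mapped to the data qubit that should be flipped back.
CORRECTION = {(0, 0): None, (1, 0): 0, (1, 1): 1, (0, 1): 2}

def encode(bit):
    """Spread one logical bit across three data qubits."""
    return [bit, bit, bit]

def apply_noise(data, p, rng):
    """Flip each data qubit independently with probability p."""
    return [b ^ int(rng.random() < p) for b in data]

def measure_syndrome(data):
    """Parities that the ancilla qubits would report."""
    return (data[0] ^ data[1], data[1] ^ data[2])

def correct(data):
    """Classical decoding step: look up the syndrome and undo the inferred error."""
    target = CORRECTION[measure_syndrome(data)]
    fixed = list(data)
    if target is not None:
        fixed[target] ^= 1
    return fixed

def logical_error_rate(p, trials, rng):
    """Fraction of trials in which the corrected block decodes to the wrong bit."""
    failures = 0
    for _ in range(trials):
        block = correct(apply_noise(encode(0), p, rng))
        failures += block[0]  # after correction all three bits agree
    return failures / trials

rng = random.Random(1)  # fixed seed so the run is repeatable
rate = logical_error_rate(p=0.05, trials=20_000, rng=rng)
print(f"physical error rate 0.05, logical error rate about {rate:.4f}")
```

A single flipped qubit is always repaired, so the logical qubit only fails when two or more data qubits flip in the same round, which is much rarer than a single flip when the physical error rate is low.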

Huge strides have already been made towards large-scale fault-tolerant quantum computing. For example, Google’s Willow chip, announced in late 2024, exhibited error rates that decreased exponentially with increasing numbers of physical qubits. QEC is an active area for R&D at present, with others, including IBM, Nord Quantique, Microsoft, Quantinuum, and Riverlane, all presenting recent results on improved QEC techniques. We expect to see continued development in this space, which promises to dramatically improve the scalability and reliability of quantum computers.
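To make "decreasing exponentially" concrete, the sketch below assumes a simple model in which the logical error rate is divided by a fixed suppression factor each time the code distance grows by two, and in which a distance-d code of the surface-code type uses 2d² − 1 physical qubits. The base rate and suppression factor are hypothetical values chosen for illustration, not reported figures for any device.

```python
def physical_qubits(distance):
    """Physical qubits in a distance-d code, assuming 2*d*d - 1 qubits per logical qubit."""
    return 2 * distance * distance - 1

def logical_error_rate(distance, base_rate, suppression):
    """Logical error rate at an odd code distance, given the rate at distance 3.

    Each step of +2 in distance divides the error rate by `suppression`.
    """
    steps = (distance - 3) // 2
    return base_rate / suppression ** steps

# Hypothetical numbers, for illustration only.
BASE_RATE = 0.008   # assumed logical error rate at distance 3
SUPPRESSION = 2.0   # assumed improvement per step in distance

for d in (3, 5, 7, 9):
    rate = logical_error_rate(d, BASE_RATE, SUPPRESSION)
    print(f"distance {d}: {physical_qubits(d)} physical qubits, error rate {rate:.4f}")
```

Under this model the error rate only falls as qubits are added if the suppression factor is greater than 1, which in turn requires the physical qubits to be good enough that adding more of them helps rather than hurts.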

Despite the difficulties posed by errors arising in quantum computers, we have already seen proof of quantum advantage for certain use-cases, with quantum computers completing computations that cannot be performed by classical computers, or that would take a classical supercomputer many orders of magnitude longer to accomplish. Google reported achieving quantum advantage in 2019 for the specific task of sampling the output of a pseudo-random quantum circuit (although this was followed swiftly by suggestions from IBM, makers of the world’s most powerful supercomputer at the time, that Google’s results significantly underestimated the performance of classical computers). Nevertheless, various results since then have provided evidence of quantum computers outperforming classical computers for particular tasks. These include results from the University of Science and Technology of China (USTC) in 2020 and 2021 showing quantum advantage for Gaussian boson sampling, which has potential applications in graph theory, quantum chemistry and machine learning; results in 2022 from Xanadu, also on boson sampling; results in 2024 from D-Wave Systems on simulation of the dynamics of a magnetic spin system; and results in 2025 from JPMorganChase, Quantinuum, Argonne National Laboratory, Oak Ridge National Laboratory and the University of Texas at Austin for a certified randomness protocol.

While the current evidence for quantum advantage is for fairly niche tasks, it provides a tantalising glimpse into the opportunities afforded by quantum computing in the future. For example, the random circuit sampling of Google and the certified randomness results of JPMorganChase et al. may be applied practically to improve random number generation, by enabling the generation of numbers that are more fundamentally random than those generated by classical means. This could notably improve computational processes that are reliant on random numbers, such as cryptography.

We are likely to see quantum computing having an impact on cryptography sooner rather than later. Google recently released results indicating that the widely used RSA encryption protocol could be cracked in 1 week using 1 million noisy qubits. This represents a dramatic reduction from the previous estimate, in 2019, that 20 million qubits would be needed to break classical encryption.

There are already quantum computers with 1000 qubits with the relevant error rates, and the field is developing rapidly. At current rates of development, existing encryption protocols could be vulnerable within the next 5 or so years. Thankfully, there are already serious efforts underway to prepare for a post-quantum future, in which classical encryption techniques are no longer secure. Post-quantum cryptographic (PQC) algorithms are being developed that are resistant to cracking by quantum computers, and in 2024 the US National Institute of Standards and Technology (NIST) released a first set of three standard PQC algorithms that can already be put into use by businesses seeking to mitigate the data security risks posed by the anticipated improvements in quantum computing.

NIST cautions that vulnerable systems reliant on existing cryptographic protocols should be deprecated after 2030 and disallowed after 2035. If service providers fail to migrate to a PQC approach in time, we could see a severe impact on users, with data falling into malicious hands. For continued data security, it is vital for businesses to plan now to move to PQC well ahead of the point at which current encryption becomes vulnerable to quantum computers. Provided this is done, there should be minimal disruption to users, despite a significant underlying shift from existing to PQC techniques.

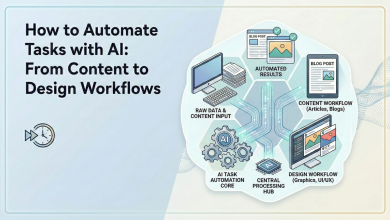

In the shorter term, given the challenges in achieving fault-tolerant quantum computing at scale, we are likely to see increased adoption of hybrid classical-quantum computing systems. A particular focus is on integrating quantum computing with AI, in an approach termed “quantum AI”. In quantum AI, a quantum processor can be used for specific tasks for which a quantum advantage is anticipated, with classical processors used for other operations. AI subroutines for which a quantum processor may have particular utility include those involving searching, such as for optimisation, which can be based on Grover’s algorithm.

As researchers continue to improve error correction and qubit reliability, we could see quantum AI turbocharging AI across a spectrum of different fields, including areas in which AI is already being used (such as natural language processing and drug discovery), as well as providing new utility in complex areas that are difficult for classical computing to handle successfully (such as protein folding, weather forecasting, accurate financial forecasting, real-time logistical predictions and so on).

For startups and scale-ups in quantum technologies, a key question is how to secure market position on the way to achieving turnover and, eventually, profitability. Patents can secure revenue streams, attract investment and enable cross-licensing deals. However, continued focus on R&D is essential in order to bring products to market to start generating revenue. For earlier stage businesses, with a more limited budget, a well thought-through intellectual property (IP) strategy will be vital to maximise return on investment. A few broad, foundational patents, which are robust and enforceable, are likely to be more valuable than a wider scattering of patents with limited scope. Strong patents can offer real, demonstrable value to sophisticated investors as a concrete asset, particularly at a pre-revenue stage. As quantum technologies enter an era of commercialisation, it is increasingly important to consider patent protection, as products being devised now are likely to be brought to market within the 20-year lifetime of a patent.

Businesses should also consider other forms of IP in addition to patents, such as design rights, copyright, trade marks, database rights and trade secrets. Trade secrets, in particular, can be a powerful tool for early-stage companies. Keeping an innovation secret can provide a longer-lasting competitive edge than patents, especially if it cannot be readily reverse-engineered or if it is difficult to detect if third parties are using the technology.

A particular feature of the quantum ecosystem is the large amount of collaboration between different entities. In quantum computing, it is rare for a single company to offer the full stack, from hardware to software to applications. Interoperability is therefore essential, which means working with partners. Clear agreements on background IP, co-developed inventions, and related innovations that are necessary to put co-developed inventions into practice are essential to avoid disputes and ensure fair value for all parties. Putting agreements into place at an early stage, ideally before collaboration has commenced and while all the parties are on good terms, gives collaborators the ability to work together in confidence, safe in the knowledge that their business is protected.

While quantum technologies are already here, and have been for quite some time, the anticipated boom in quantum computing promises a significant boost in computationally intensive areas as well as opening up new possibilities in areas that are too complex for classical approaches. For those startups and scale-ups that are active in quantum, a coherent and defensible IP strategy will play a crucial role in securing their place in the market.