Today’s adversaries are more sophisticated, attack vectors are multiplying, and the sheer volume of data that security teams must manage is overwhelming. The only way to stay ahead of threats in this environment is with AI, which has quickly become an indispensable tool in the cybersecurity arsenal. It brings huge potential for the SOC in particular.

AI is a broad term, though. To truly harness its power, it’s key to understand the distinctions and synergies between its major forms: machine learning (ML), generative AI and the emerging frontier of agentic AI. Each plays a distinct and evolving role in fortifying your digital defenses.

And while these technologies offer many opportunities for SOC efficiency gains, they can also bring security risks that must be understood. Key to balancing security with efficiency is having a deep understanding of what you’re actually getting when it comes to AI and automation.

Why the SOC needs AI today

SOC operations are 24/7, and ensuring non-stop monitoring can require analysts to work nights, weekends, and holidays to maintain full coverage. On top of that, false positives are still a regular occurrence. Alert fatigue and burnout are significant risks in this space. Almost three-quarters (73%) of organizations surveyed for the 2025 Pulse of the AI SOC report said they deal with analyst burnout and persistent staffing shortages.

Keeping current analysts from burning out is a persistent challenge, and finding new talent remains difficult. ISC2 estimates that in 2025, there is a cybersecurity skills gap of up to 4.8 million roles.

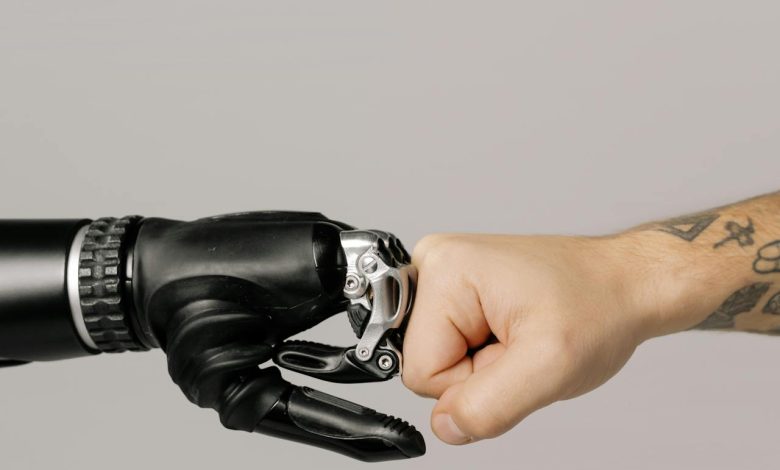

AI in its various forms can boost productivity in the SOC, effectively doubling SOC capacity without needing to expand headcount. Used correctly, AI is meant to augment human analysts, not replace them. It enables junior analysts to handle complex tasks, and it elevates existing talent by reducing repetitive work and enabling upskilling.

The applications and evolution of AI in the SOC

ML has been the bedrock of modern cybersecurity analytics for years. At its core, ML algorithms learn from vast datasets of historical security information – including logs, network traffic, endpoint activity and user behaviors – to identify patterns, anomalies and potential threats.
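As a rough illustration of learning a baseline from historical data and flagging deviations, here is a minimal sketch that uses a simple z-score rather than a trained model. The function name `find_anomalies`, the threshold of 3.0 and the failed-login counts are all hypothetical; production ML detections are considerably more involved.

```python
from statistics import mean, stdev

def find_anomalies(baseline, observed, threshold=3.0):
    """Return (index, value, z_score) for observed values far from the baseline mean.

    `baseline` is historical data assumed to be normal; `threshold` is an
    illustrative cut-off, not a recommended setting.
    """
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        # Flat baseline: treat any value that differs from it as anomalous.
        return [(i, v, float("inf")) for i, v in enumerate(observed) if v != mu]
    anomalies = []
    for i, v in enumerate(observed):
        z = (v - mu) / sigma
        if abs(z) >= threshold:
            anomalies.append((i, v, round(z, 2)))
    return anomalies

# Hypothetical hourly failed-login counts for one user.
baseline = [4, 6, 5, 7, 5, 6, 4, 5, 6, 5]
observed = [5, 6, 48, 4]

for index, value, z in find_anomalies(baseline, observed):
    print(f"hour {index}: {value} failed logins (z-score {z})")
```

Only the spike of 48 failed logins is flagged here; the other observed values sit well within the baseline's normal variation.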

Strong ML is a critical foundation for accurate and effective generative and agentic AI. AI that acts autonomously or semi-autonomously in security operations relies on this high-quality data pipeline to avoid false positives, minimize analyst fatigue and execute actions with confidence and context.

Generative AI is transforming the analyst experience, offering tools that augment human capabilities and streamline workflows. It also necessitates a proactive defensive posture that can anticipate and counter AI-generated attacks.

Agentic AI is the next evolutionary step. It builds on the predictive power of ML and the creative capabilities of generative AI to deliver autonomous action and intelligent decision-making. Agentic AI systems consist of a “worker” agent or a set of agents. Each agent is designed with a degree of autonomy to perceive its environment, make decisions and take actions to achieve specific security goals with minimal human intervention and within a well-defined scope.

Agentic AI can help with things like:

- Data ingestion and schema interpretation.

- Detection engineering with dynamic model creation.

- Alert triage: pre-packaged investigations and risk-based scoring.

- Incident response: executing repetitive playbooks (e.g., antivirus scans).

Ultimately, agentic AI can act as a virtual team member that handles the mundane tasks and accelerates decisions.
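To make the risk-based scoring idea from the alert triage item concrete, here is a minimal sketch. The `Alert` fields, the severity weights and the doubling for a threat-intelligence match are all assumptions chosen for illustration, not a standard scoring scheme.

```python
from dataclasses import dataclass

# Illustrative weights only; a real SOC would tune these to its environment.
SEVERITY_WEIGHT = {"low": 1, "medium": 3, "high": 6, "critical": 10}

@dataclass
class Alert:
    alert_id: str
    severity: str           # one of the SEVERITY_WEIGHT keys
    asset_criticality: int  # 1 (low value) to 5 (crown jewels)
    intel_match: bool       # indicator matched a threat-intel feed

def risk_score(alert):
    """Combine severity and asset criticality, doubling on an intel match."""
    score = SEVERITY_WEIGHT[alert.severity] * alert.asset_criticality
    if alert.intel_match:
        score *= 2
    return score

def triage_queue(alerts):
    """Order alerts so the highest-risk ones are investigated first."""
    return sorted(alerts, key=risk_score, reverse=True)

alerts = [
    Alert("A1", "high", 2, False),
    Alert("A2", "medium", 5, True),
    Alert("A3", "critical", 1, False),
]

for alert in triage_queue(alerts):
    print(alert.alert_id, risk_score(alert))
```

In this example the medium-severity alert on a critical asset with an intel match outranks the nominally "critical" alert on a low-value asset, which is the point of scoring on risk rather than on severity alone.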

How to get started – and how to get it right

Starting an AI initiative presents yet another opportunity to ensure the data you’re feeding the AI is clean, prepared, enriched and contextualized before AI uses it to make decisions. Data Pipeline Management (DPM) and ML help with this.

Think big, start small and scale fast. Identify pain points with measurable key performance indicators, like time to triage. Begin with low-risk, high-return use cases such as summarization or search/query translation.
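A KPI such as time to triage is only useful if it is measured the same way before and after an AI rollout. The sketch below shows one possible way to compute it, assuming each alert record carries hypothetical `created_at` and `triaged_at` ISO 8601 timestamps; real field names will depend on your SIEM or case-management export.

```python
from datetime import datetime
from statistics import median

def median_time_to_triage(alerts):
    """Median minutes between alert creation and triage.

    Alerts that have not been triaged yet (no `triaged_at`) are skipped.
    Returns None when no alert has been triaged.
    """
    durations = []
    for alert in alerts:
        if not alert.get("triaged_at"):
            continue
        created = datetime.fromisoformat(alert["created_at"])
        triaged = datetime.fromisoformat(alert["triaged_at"])
        durations.append((triaged - created).total_seconds() / 60)
    return median(durations) if durations else None

# Hypothetical sample records.
sample = [
    {"created_at": "2025-01-06T09:00:00", "triaged_at": "2025-01-06T09:10:00"},
    {"created_at": "2025-01-06T09:05:00", "triaged_at": "2025-01-06T09:35:00"},
    {"created_at": "2025-01-06T10:00:00", "triaged_at": "2025-01-06T10:20:00"},
    {"created_at": "2025-01-06T11:00:00", "triaged_at": None},
]

print(median_time_to_triage(sample))
```

The median is used here instead of the mean so that a handful of long-running investigations does not dominate the figure; that is a design choice, not a requirement.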

Build confidence in the process. Use transparent features that allow human analysts to easily verify results, and gradually expand to more autonomous applications as trust builds. Trust, transparency and human-in-the-loop design matter here: analysts won’t accept opaque AI outputs without explainability, and adoption depends on understanding how and why decisions are made. Key attributes for building trust include explainable alert summaries and transparent query generation.

Installing the right agentic AI guardrails

Agentic AI isn’t without risks. These include hallucinations, prompt injection and data poisoning, in which bad training data undermines model reliability. Hallucinations, in which AI generates inaccurate or misleading outputs, are already well documented.

It’s important to have strong safeguards in place against these risks, including monitoring non-human behavior for deviations, strictly controlling prompts and inputs, and requiring human validation before production deployment.
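One way to picture a guardrail of this kind is an explicit scope for what an agent may do on its own, with a human sign-off required for anything higher-impact. The sketch below is a simplified illustration; the action names, the two sets and the `authorize` function are hypothetical and not drawn from any particular product.

```python
# Hypothetical scopes: what the agent may do alone vs. with human approval.
AUTO_APPROVED = {"run_antivirus_scan", "enrich_alert"}
NEEDS_HUMAN = {"isolate_host", "disable_account"}

def authorize(action, approved_by=None):
    """Decide whether an agent-requested action may run.

    Returns "execute", "pending_approval" or "deny".
    Anything outside the two known sets is denied by default.
    """
    if action in AUTO_APPROVED:
        return "execute"
    if action in NEEDS_HUMAN:
        return "execute" if approved_by else "pending_approval"
    return "deny"

print(authorize("run_antivirus_scan"))                  # low-risk, runs automatically
print(authorize("isolate_host"))                        # waits for an analyst
print(authorize("isolate_host", approved_by="analyst"))
print(authorize("delete_all_logs"))                     # out of scope
```

Denying unknown actions by default means a hallucinated or injected instruction that falls outside the defined scope is blocked rather than executed.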

There is another type of risk with agentic AI that isn’t a security risk: not all agents are truly agentic. Many vendors rebrand basic automation or API calls as “AI agents,” a practice known as AI-washing. True agentic AI includes reasoning, decision-making and execution.

How can you know if an AI agent is truly agentic? Ask vendors these crucial questions:

- What decisions does your AI make autonomously?

- Is your system rule-based, or does it use LLMs/contextual understanding?

- Can you demonstrate decision-making and task execution live?

Responsible deployment is strategic deployment

Agentic AI will define the next era of SOC operations. Trust, transparency, governance and accurate vendor evaluation are the keys to success. Organizations that deploy AI cautiously, starting small, mitigating the risks and validating continuously, will be best positioned to scale securely and effectively.