It’s become almost cliché to say that large language models (LLMs) are transforming the way we work with content. But as someone deeply engaged in operationalizing AI across global content programs, I can tell you the transformation is real, and far more complex than most people assume.

One of the most persistent myths about LLMs is that they’re plug-and-play. The interfaces are intuitive, and the results can be startlingly impressive with just a few well-crafted prompts. This ease of access has created the illusion that LLMs can be instantly scaled across enterprise content workflows.

In practice, though, the difference between a clever demo and a production-grade system is vast. Moving from isolated prompt success to consistent, high-quality multilingual content at scale requires much more than just a good model. It requires a complete rethink of how we structure inputs, and more specifically, how we inject context into AI systems.

Why Context Matters More Than Ever

LLMs are powerful because of their capacity to absorb and respond to context. Given cues about tone of voice, audience nuance, cultural references, or even word count constraints, they can generate fluent, nuanced, and tailored content. This comes not from simply “knowing language,” but from interpreting the cues we give them. However, traditional content workflows, especially those involving global audiences, were not designed with this kind of context-hungry intelligence in mind.

We’ve relied for decades on tools like translation memories, glossaries, and static style guides. These were never built to inform generative models. They often lack the richness, flexibility, and machine-readability needed to shape LLM behavior meaningfully. As a result, even advanced models can generate content that sounds plausible but misses the mark on tone, compliance, audience expectations, or brand nuance.

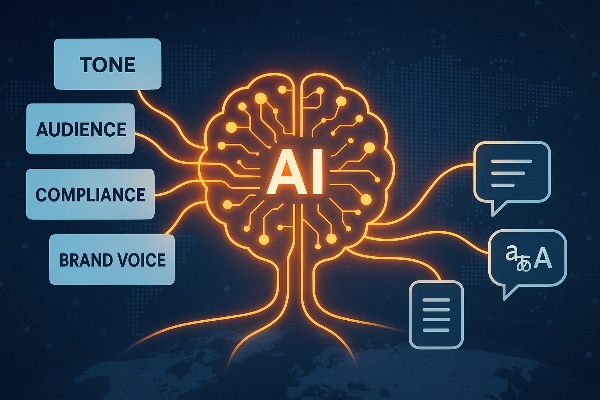

This is where context-first AI comes in. If we want LLMs to generate content that truly performs across geographies, platforms, and personas, we need to give them structured, actionable context from the very beginning.

Introducing Content Profiles

Content profiles are emerging as a critical solution to this problem. Think of them as machine-readable briefs that encapsulate the “rules of engagement” for any piece of content. A well-designed content profile doesn’t just say what the message should be. It tells the AI who the audience is, what tone to use, which brand constraints to honor, which terms to avoid, and even what success looks like.

These profiles can be assembled from multiple data sources: marketing briefs, product metadata, compliance guidelines, campaign parameters, and performance benchmarks. The key is that they’re not static documents. They’re structured artifacts that can be programmatically applied to guide LLMs at the point of generation.

In doing so, content profiles move us beyond reactive quality control and into proactive quality design. They allow AI systems to make better choices upfront, reducing the need for extensive human post-editing and minimizing brand risk.
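
To make the idea concrete, here is a minimal sketch of what a content profile might look like as a structured artifact. The class name, the field names, and the `to_instructions` helper are all illustrative assumptions rather than an established schema; a real profile would likely carry more fields and be populated from the source systems mentioned above.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ContentProfile:
    """Hypothetical machine-readable brief; every field name is an assumption."""
    audience: str
    locale: str
    tone: str
    brand_constraints: List[str] = field(default_factory=list)
    banned_terms: List[str] = field(default_factory=list)
    max_words: Optional[int] = None
    success_criteria: str = ""

    def to_instructions(self) -> str:
        """Render the profile as plain-text instructions to prepend to a prompt."""
        lines = [
            f"Audience: {self.audience}",
            f"Locale: {self.locale}",
            f"Tone: {self.tone}",
        ]
        if self.brand_constraints:
            lines.append("Brand constraints: " + "; ".join(self.brand_constraints))
        if self.banned_terms:
            lines.append("Do not use these terms: " + ", ".join(self.banned_terms))
        if self.max_words is not None:
            lines.append(f"Maximum length: {self.max_words} words")
        if self.success_criteria:
            lines.append(f"Success looks like: {self.success_criteria}")
        return "\n".join(lines)


# Example values are invented for illustration only.
profile = ContentProfile(
    audience="Gen Z shoppers in Brazil",
    locale="pt-BR",
    tone="playful, direct",
    brand_constraints=["always spell the product name in full"],
    banned_terms=["cheap"],
    max_words=60,
    success_criteria="reader taps through to the product page",
)
print(profile.to_instructions())
```

Because the profile is data rather than a document, the same object can be rendered into a prompt at generation time and reused later when the output is checked.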

A New Paradigm for Evaluating Quality

But structured input is only half the equation. If we want to scale LLMs for global content operations, we also need to redefine how we measure quality.

Traditional machine translation metrics, like BLEU or COMET, are useful in narrow linguistic contexts. But they fall short when evaluating the kinds of outputs LLMs now generate. Was the tone appropriate for a Gen Z audience in Brazil? Did the phrasing align with the company’s voice? Were regulatory constraints followed in a heavily legislated market?

These are not sentence-level questions. They are context-level questions. And they require evaluation frameworks that reflect the content profile’s intent.

That’s where organizations are headed: toward model-aware, context-driven evaluation systems that go beyond accuracy and into alignment, appropriateness, and performance.
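
As a rough illustration of evaluating output against a profile's intent, the sketch below runs a few deterministic checks on a draft. It is deliberately narrow: the `ProfileChecks` fields and the `evaluate` function are hypothetical names, and questions like tone or cultural fit would need human or model-based judgment that this example does not attempt.

```python
import re
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ProfileChecks:
    """Hypothetical subset of a content profile that can be checked mechanically."""
    banned_terms: List[str] = field(default_factory=list)
    required_phrases: List[str] = field(default_factory=list)
    max_words: Optional[int] = None


def evaluate(text: str, checks: ProfileChecks) -> List[str]:
    """Return human-readable violations; an empty list means every check passed."""
    violations = []
    lowered = text.lower()
    for term in checks.banned_terms:
        # Whole-word, case-insensitive match so "cheap" does not flag "cheaper".
        if re.search(r"\b" + re.escape(term.lower()) + r"\b", lowered):
            violations.append(f"banned term used: {term}")
    for phrase in checks.required_phrases:
        if phrase.lower() not in lowered:
            violations.append(f"required phrase missing: {phrase}")
    word_count = len(text.split())
    if checks.max_words is not None and word_count > checks.max_words:
        violations.append(f"too long: {word_count} words (limit {checks.max_words})")
    return violations


# Invented example: a regulated-market profile that bans one claim
# and requires a disclaimer.
checks = ProfileChecks(
    banned_terms=["guaranteed"],
    required_phrases=["Terms apply"],
    max_words=12,
)
draft = "Guaranteed savings for everyone who signs up today."
for problem in evaluate(draft, checks):
    print(problem)
```

Checks like these catch only the mechanical part of a profile. They are best read as a first gate in front of richer, context-level review, not a replacement for it.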

Navigating Tradeoffs with Intelligence

It’s important to acknowledge that not every model, or every use case, demands the same level of contextual sensitivity. Different content scenarios have different requirements. A chatbot answer needs to be fast and on-brand; a product landing page needs to be emotionally resonant and culturally relevant; a compliance notice needs to be exact, concise, and legally vetted.

Understanding these tradeoffs among latency, cost, fidelity, and risk is now a core part of working with LLMs in production. A fit-for-purpose approach is essential. That means deploying the right model, using the right content profile, under the right quality threshold, with the appropriate fallback mechanisms in place.

This level of orchestration can’t be managed manually at scale. It requires intelligent infrastructure: platforms and systems that dynamically route content, apply profiles, monitor output quality, and learn over time.
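
A fit-for-purpose setup of this kind can be sketched as a routing table. Everything here is an assumption made for illustration: the model tier names, profile names, thresholds, and fallback labels are invented, and `generate` and `score` stand in for whatever generation and quality-scoring services an organization actually uses.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Tuple


@dataclass(frozen=True)
class Route:
    model: str          # hypothetical model tier
    profile: str        # name of the content profile to apply
    min_quality: float  # minimum acceptable score in [0, 1]
    fallback: str       # action to take when the score is below the threshold


# Illustrative routes for the three scenarios discussed above.
ROUTES: Dict[str, Route] = {
    "chatbot_answer": Route("small-fast-model", "support-brand-voice", 0.70,
                            "retry_with_larger_model"),
    "landing_page": Route("large-model", "campaign-localized", 0.85,
                          "human_review"),
    "compliance_notice": Route("large-model", "legal-strict", 0.95,
                               "human_review"),
}


def dispatch(
    content_type: str,
    generate: Callable[[str, str], str],
    score: Callable[[str], float],
) -> Tuple[str, str]:
    """Generate a draft for the content type and decide what happens next."""
    route = ROUTES[content_type]
    draft = generate(route.model, route.profile)
    if score(draft) >= route.min_quality:
        return draft, "publish"
    return draft, route.fallback


# Stub services so the sketch runs on its own.
def fake_generate(model: str, profile: str) -> str:
    return f"draft from {model} using {profile}"


def fake_score(draft: str) -> float:
    return 0.80


for content_type in ROUTES:
    _, action = dispatch(content_type, fake_generate, fake_score)
    print(content_type, "->", action)
```

With a fixed score of 0.80, only the chatbot answer clears its threshold. The landing page and compliance notice fall through to human review, which is the point of setting the bar differently per scenario.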

The Bigger Picture: From Promise to Performance

The potential of LLMs to revolutionize content creation is no longer in question. However, the real impact hinges on how we operationalize them. That means shifting focus from the model itself to the ecosystem that surrounds it.

Context-first AI is the key to turning promise into performance. It’s how we move beyond superficial outputs to deliver meaningful, market-ready content faster, smarter, and at scale. Structured inputs like content profiles, context-aware evaluation frameworks, and intelligent orchestration systems aren’t optional add-ons. They’re foundational to building AI workflows that are both reliable and resilient.

As AI technology advances, our strategies must evolve with it. The real opportunity no longer lies in pursuing the latest model. It lies in building the infrastructure that enables any model to operate more effectively, align more precisely with business goals, and deliver measurable value at scale.

Organizations that invest in context-first infrastructure today will define the benchmarks for AI performance tomorrow.