Abstract

As AI systems take on more decision-making tasks, the key challenge is determining when to involve humans. This article outlines a practical framework for building human-in-the-loop AI around four principles: confidence thresholds, human overrides, explainability, and audit trails. Drawing on real enterprise use cases, it offers a clear approach to designing AI that acts with precision while leaving room for human judgment, accountability, and trust.

AI moves fast. It flags anomalies, routes shipments, and classifies products with precision and speed. But that speed comes at a cost when systems operate without checkpoints. A single wrong decision can spread quietly and do real damage before anyone notices.

In high-stakes environments, mistakes aren’t just bugs to fix. They become liabilities. Wrongly merged products. Misrouted medication. Incorrect financial actions. These risks are already playing out in live systems.

So the question isn’t how fast AI can decide. It’s how we ensure the right people are looped in at the right time. The most dependable systems are built with clear boundaries. They let AI do what it’s good at while making room for human oversight when uncertainty or judgment is involved.

This is a guide to designing those boundaries with precision.

Let AI Think, But Teach It to Ask for Help

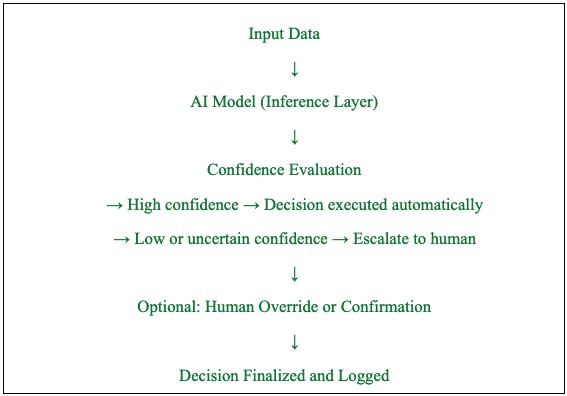

One of the clearest signals that an AI system is ready to act is its confidence level. When confidence is high and patterns are clear, automation makes sense. But when the system hesitates, or the context is high-risk, it should escalate.

This is where confidence thresholds come in. They act as a triage layer. The AI checks itself before pushing a decision forward.
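A minimal sketch of that triage layer, assuming the model returns a label with a confidence score in [0, 1] and that the caller already knows whether the context is high-risk. The `Prediction` type, the `triage` function, and the 0.90 cutoff are hypothetical illustrations, not values from any real system.

```python
from dataclasses import dataclass

# Hypothetical cutoff; a real system would tune it per use case.
CONFIDENCE_THRESHOLD = 0.90


@dataclass
class Prediction:
    label: str
    confidence: float  # model score in [0, 1]
    high_risk: bool    # e.g. medication routing or a financial action


def triage(pred: Prediction, threshold: float = CONFIDENCE_THRESHOLD) -> str:
    """Return "auto" to act on the prediction, or "human_review" to escalate."""
    if not 0.0 <= pred.confidence <= 1.0:
        raise ValueError("confidence must be in [0, 1]")
    # High-risk contexts go to a person regardless of model confidence.
    if pred.high_risk:
        return "human_review"
    # Otherwise the system acts alone only when it clears the threshold.
    return "auto" if pred.confidence >= threshold else "human_review"


print(triage(Prediction("reroute", 0.97, high_risk=False)))  # auto
print(triage(Prediction("reroute", 0.72, high_risk=False)))  # human_review
print(triage(Prediction("refund", 0.99, high_risk=True)))    # human_review
```

Here every high-risk case escalates. A softer alternative is to hold high-risk cases to a stricter threshold instead; which fits better depends on how costly an automated mistake would be.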

Here’s how this can look in practice.

At Amazon, I helped develop a GenAI-powered product matching system that handled millions of catalog listings. It needed to determine whether two listings referred to the same item. A mistake could damage customer trust or disrupt fulfillment.

We implemented a confidence-based model that routed low-certainty cases to human review. This allowed the system to scale while still handling ambiguity with care.
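For a pairwise matching task like this, one common way to structure confidence-based routing is with two cutoffs: act automatically only at either extreme and send the ambiguous middle band to people. The sketch below assumes a model that outputs the probability that two listings describe the same item; `route_match`, `Route`, the listing IDs, and both cutoffs are hypothetical, not the production design described here.

```python
from enum import Enum


class Route(Enum):
    MERGE = "merge"              # confident both listings are the same item
    KEEP_SEPARATE = "separate"   # confident they are different items
    HUMAN_REVIEW = "review"      # ambiguous: a person decides


def route_match(p_same: float, merge_at: float = 0.95,
                separate_below: float = 0.05) -> Route:
    """Route a listing pair from the model's probability that they match.

    Both cutoffs are hypothetical placeholders.
    """
    if not 0.0 <= separate_below < merge_at <= 1.0:
        raise ValueError("need 0 <= separate_below < merge_at <= 1")
    if p_same >= merge_at:
        return Route.MERGE
    if p_same < separate_below:
        return Route.KEEP_SEPARATE
    return Route.HUMAN_REVIEW


# Only the ambiguous pair lands in the review queue.
pairs = {("A1", "B7"): 0.98, ("A2", "C3"): 0.02, ("A4", "D9"): 0.61}
review_queue = [pair for pair, p in pairs.items() if route_match(p) is Route.HUMAN_REVIEW]
print(review_queue)  # [('A4', 'D9')]
```

Widening the middle band sends more pairs to reviewers but lets fewer wrong merges through automatically; narrowing it does the reverse.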

Alex Singla, global leader at QuantumBlack, McKinsey & Company, said it well:

“For most generative AI insights, a human must interpret them to have an impact. The notion of a human in the loop is critical.”

Human Overrides: The Role of Final Judgment

Even highly confident AI can be wrong.

Data shifts. Rules change. Context matters more than precision alone.

That’s why override mechanisms are essential. They allow humans to intervene when a system makes a technically valid but contextually flawed decision.

In product classification workflows I’ve helped lead, a brand manager could instantly correct a decision when they saw a regional product variant incorrectly merged. The override didn’t require escalation or delay. It was built into the interface.

Well-designed override systems are:

- Quick to use

- Clear in intent

- Easy to track afterward
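Those three properties can be sketched as a single override call that requires a stated reason and keeps the model’s original output next to the human’s correction. This assumes an in-memory `Decision` record; the names, fields, and example values are hypothetical, and a real system would persist the change.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass
class Decision:
    item_id: str
    model_label: str             # what the AI decided; never overwritten
    final_label: str             # what actually takes effect
    overridden_by: Optional[str] = None
    override_reason: Optional[str] = None
    overridden_at: Optional[datetime] = None


def override(decision: Decision, new_label: str, user: str, reason: str) -> Decision:
    """Apply a human correction in one call, recording who, why, and when."""
    # Clear intent: an override without a stated reason is rejected.
    if not reason.strip():
        raise ValueError("an override needs a reason")
    decision.final_label = new_label
    decision.overridden_by = user
    decision.override_reason = reason
    decision.overridden_at = datetime.now(timezone.utc)
    return decision


# A brand manager splits a regional variant the model had merged.
d = Decision("sku-123", model_label="merge", final_label="merge")
override(d, "keep_separate", user="brand_manager_1", reason="regional variant")
print(d.model_label, "->", d.final_label)  # merge -> keep_separate
```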

And they work. According to Analytics Insight, human-in-the-loop AI frameworks have been reported to improve decision-making accuracy by 31% and reduce harmful or biased outcomes by 56%.

Those aren’t incremental improvements. They represent a real shift in how AI systems learn, adapt, and improve with human guidance.

Explainability: Show Your Work

Source: Yusnizam Yusof | Shutterstock

Trust isn’t built on confidence scores alone; it’s built on transparency. Knowing how a decision was made matters just as much as the outcome.

That’s where explainability comes in.

The systems we built made their decisions transparent. Whether a decision rested on metadata, behavioral clustering, or visual similarity, the logic was visible to reviewers. When a team understands what the AI is prioritizing, it’s easier to validate or correct that behavior.
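One simple way to make that logic visible is to score a match as a weighted sum of per-signal similarities, so each signal’s contribution can be reported directly. This is an assumed, deliberately transparent scoring scheme chosen for illustration, not the method used in the systems described here; the signal names mirror the examples above, and the weights are hypothetical.

```python
# Hypothetical weights for the three signal types; a real system would tune them.
WEIGHTS = {"metadata": 0.5, "behavioral_cluster": 0.2, "visual_similarity": 0.3}


def score_with_explanation(signals: dict[str, float]) -> tuple[float, list[tuple[str, float]]]:
    """Combine per-signal similarities (each in [0, 1]) into one match score.

    Because the score is a plain weighted sum, each signal's contribution is
    exact, and the ranked contributions serve as the explanation.
    """
    contributions = {name: w * signals[name] for name, w in WEIGHTS.items()}
    total = sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda kv: kv[1], reverse=True)
    return total, ranked


def explain(signals: dict[str, float]) -> str:
    """Render the score and each signal's contribution, largest first."""
    total, ranked = score_with_explanation(signals)
    parts = ", ".join(f"{name}={c:.2f}" for name, c in ranked)
    return f"score={total:.2f} ({parts})"


print(explain({"metadata": 0.9, "behavioral_cluster": 0.4, "visual_similarity": 0.8}))
# score=0.77 (metadata=0.45, visual_similarity=0.24, behavioral_cluster=0.08)
```

More complex models need dedicated attribution techniques to produce a comparable breakdown; an additive score provides it directly, at the cost of expressiveness.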

Explainability provides:

- A reason to trust

- A faster way to correct errors

- Better data for retraining models

It also increases stakeholder confidence across departments, especially among non-technical reviewers who rely on the system but need to understand how it behaves.

Audit Trails: Keep Track or Lose Context

You can’t improve what you can’t trace. When something breaks, teams need to know exactly what happened and why.

Audit trails record:

- Who approved or intervened

- When the decision occurred

- What data the system used

- What the system predicted

- Whether a human reviewed or changed the decision
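A minimal sketch of a record that captures those five points, serialized as one JSON line per decision for an append-only log. The field names, the JSON Lines format, and the helper functions are assumptions for illustration; a production system would typically also protect the log against tampering.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Any


def utc_now() -> str:
    return datetime.now(timezone.utc).isoformat()


@dataclass(frozen=True)
class AuditRecord:
    decision_id: str
    inputs: dict[str, Any]   # what data the system used
    prediction: str          # what the system predicted
    confidence: float
    actor: str               # who approved or intervened ("system" if automated)
    human_reviewed: bool     # whether a person reviewed the decision
    final_decision: str      # differs from prediction if a human changed it
    timestamp: str = field(default_factory=utc_now)  # when it occurred (UTC)

    @property
    def changed_by_human(self) -> bool:
        return self.final_decision != self.prediction


def to_json_line(record: AuditRecord) -> str:
    return json.dumps(asdict(record), sort_keys=True) + "\n"


def append_record(path: str, record: AuditRecord) -> None:
    """Append one record; earlier lines are never rewritten."""
    with open(path, "a", encoding="utf-8") as f:
        f.write(to_json_line(record))


rec = AuditRecord("dec-001", {"listing_a": "A4", "listing_b": "D9"}, "merge", 0.61,
                  actor="reviewer_7", human_reviewed=True, final_decision="keep_separate")
print(rec.changed_by_human)  # True
# append_record("audit.jsonl", rec) would persist it.
```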

These trails proved essential in my experience at Amazon. They made it possible to investigate issues quickly, spot recurring failure points, and continuously refine the AI models behind the scenes.
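Spotting recurring failure points can start with something as simple as the override rate per category: where humans keep changing the model’s answer, the model is likely weak. The sketch assumes each logged record is a dict with hypothetical `category`, `prediction`, and `final_decision` keys; the categories and the default `min_count` of 20 are illustrative.

```python
from collections import Counter


def override_rates(records, min_count: int = 20):
    """Per-category share of decisions where a human changed the model's answer.

    Categories with fewer than `min_count` records are skipped, since their
    rates would be too noisy to act on. Results are sorted worst first.
    """
    total = Counter()
    changed = Counter()
    for r in records:
        total[r["category"]] += 1
        if r["final_decision"] != r["prediction"]:
            changed[r["category"]] += 1
    rates = {cat: changed[cat] / n for cat, n in total.items() if n >= min_count}
    return sorted(rates.items(), key=lambda kv: kv[1], reverse=True)


records = [
    {"category": "apparel", "prediction": "merge", "final_decision": "merge"},
    {"category": "apparel", "prediction": "merge", "final_decision": "keep_separate"},
    {"category": "grocery", "prediction": "merge", "final_decision": "merge"},
]
print(override_rates(records, min_count=1))  # [('apparel', 0.5), ('grocery', 0.0)]
```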

Audit trails are not just a best practice. They are becoming a baseline requirement. The EU AI Act, for example, requires high-risk AI systems to support automatic logging of events so that their operation can be traced. Without that record, accountability becomes guesswork.

A Framework That Keeps People in the Loop

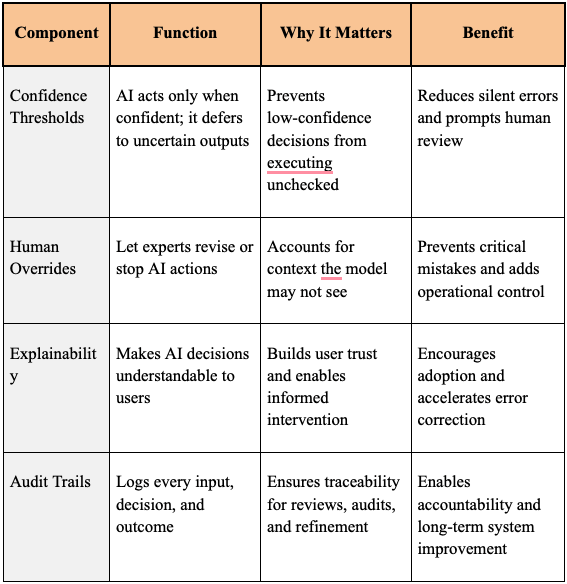

Taken together, these four elements make up the core of any well-designed human-in-the-loop AI system. They serve different purposes, from filtering low-confidence predictions to ensuring long-term traceability, but they all point to one goal: shared responsibility between humans and machines.

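Here’s a summary view of how each component contributes:

- Confidence thresholds filter low-confidence predictions and route them to human review

- Human overrides let people correct technically valid but contextually flawed decisions

- Explainability makes the logic behind each decision visible to reviewers

- Audit trails record who decided what, when, and on what data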

When these elements are designed to work together, AI doesn’t just operate faster. It operates smarter, and more importantly, it stays accountable as it scales.

Moving Forward Without Losing Control

AI tools are gaining autonomy. But autonomy without pause points creates risk.

We have to decide what kind of intelligence we want in the systems we build. Should they push forward in silence or pause when they’re unsure? Should they operate in isolation or invite humans into the loop when outcomes carry weight?

The most effective systems I’ve worked on weren’t just technically sound. They were designed to know when not to act alone. They made space for people to contribute where AI couldn’t confidently decide.

These systems:

- Escalate edge cases to real experts

- Let people course-correct decisions quickly

- Surface logic in clear terms

- Keep a record of what actually happened

They don’t remove people from the process. They make it easier for people to show up where it matters most.

Building Systems That Know When to Stop

The systems we design today influence how decisions will be made in the years ahead. They determine what is reviewed, what is trusted, and who carries responsibility when outcomes fall short.

Precision alone is not enough. A dependable AI system must recognize uncertainty. It must involve human input where needed. It must make its logic visible and leave a record of how decisions were made.

This approach is not just safer. It is more sustainable. It supports better judgment and builds stronger alignment between people and the tools they rely on.

Intelligent systems are not defined by speed or complexity. They are defined by their ability to pause, involve others, and improve with every decision.

This is the standard we should build toward. One system at a time.

References

- McKinsey & Company (2023). Keep the human in the loop. McKinsey & Company. https://www.mckinsey.com/about-us/new-at-mckinsey-blog/keep-the-human-in-the-loop

- Analytics Insight (2024). Human-in-the-loop AI: Enhancing decision-making and ethical AI deployment. Analytics Insight. https://www.analyticsinsight.net/artificial-intelligence/human-in-the-loop-ai-enhancing-decision-making-and-ethical-ai-deployment

- ComplianceEU (n.d.). Audit trail: Ensuring transparency and accountability in AI systems. ComplianceEU. https://complianceeu.org/audit-trail/