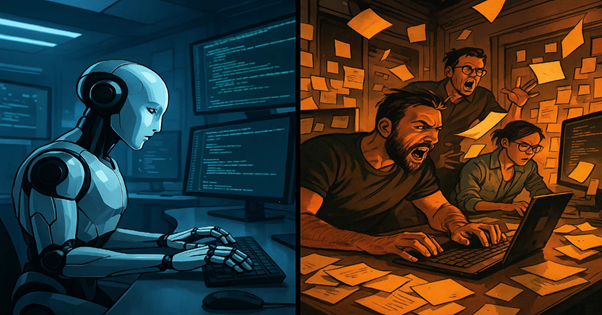

ChatGPT vs My Dev Team in a real-world build race with production-ready results

I’ve trusted my development team for years. They know our architecture, the quirks in the codebase, and how to get a release out the door without drama. But with the rise of ChatGPT, Claude, and Perplexity, I wanted to see whether AI could truly compete with humans: not on a toy task, but in a real, revenue-impacting sprint.

The challenge: build a fully functional analytics dashboard for our SaaS platform. It needed dynamic filtering, role-based permissions, real-time data pulls, and Chart.js visualizations — all ready to drop into production. Same spec. Same deadlines. One built by my dev team, one built by AI under my direction.

ChatGPT vs My Dev Team – setting the test so it’s fair

I wanted to avoid “demo hype” and keep this authentic. The rules were:

- Production integration required – both versions had to slot into our live environment without major rewrites.

- QA parity – same testers, same acceptance criteria.

- No skipping edge cases – large datasets, all user roles, and odd filters had to be handled.

- Time tracking – I logged active build hours and waiting periods separately for a clean comparison.

ChatGPT vs My Dev Team – the human team’s process

My developers worked in our normal agile cycle:

- Planning and ticketing – breaking features into backend and frontend tickets (2 days).

- Backend dev – Node.js API endpoints, database queries, and permission checks (3 days).

- Frontend dev – React components, filter controls, Chart.js integration (3 days).

- QA pass – 7 minor UI issues, 2 medium logic bugs (3 days).

Total: 8 working days from spec to release-ready code, with backend and frontend development running in parallel.

ChatGPT vs My Dev Team – the AI build process

I split the feature into precise prompts for different modules:

Backend prompt:

“Create a Node.js endpoint to fetch analytics data filtered by date range, user role, and tag set. Include input validation, SQL injection prevention, and comprehensive error handling.”
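For reference, here is a minimal sketch of what the validation and SQL-injection-prevention part of that prompt boils down to. It assumes a PostgreSQL table named `analytics_events` with `event_date`, `metric`, `value`, `role`, and `tags` (a text array) columns; the table, the columns, the allowed role names, and the `buildAnalyticsQuery` helper are all hypothetical. It returns a `{ text, values }` object, the query-config shape node-postgres accepts, so user input travels as parameters and is never spliced into the SQL string.

```javascript
// Hypothetical role list; replace with the platform's real roles.
const ALLOWED_ROLES = new Set(["admin", "analyst", "viewer"]);
const ISO_DATE = /^\d{4}-\d{2}-\d{2}$/;

// Validate filter input, then build a parameterized query.
// Table and column names are assumptions for illustration.
function buildAnalyticsQuery({ from, to, role, tags = [] }) {
  // Format check only; the database still rejects impossible dates.
  if (!ISO_DATE.test(from) || !ISO_DATE.test(to)) {
    throw new RangeError("from/to must be YYYY-MM-DD");
  }
  // ISO dates compare correctly as plain strings.
  if (from > to) {
    throw new RangeError("from must not be after to");
  }
  if (!ALLOWED_ROLES.has(role)) {
    throw new RangeError(`unknown role: ${role}`);
  }
  if (!Array.isArray(tags) || !tags.every((t) => typeof t === "string" && t.length > 0)) {
    throw new TypeError("tags must be an array of non-empty strings");
  }

  // Values are sent separately from the SQL text; nothing is concatenated.
  const values = [from, to, role];
  let text =
    "SELECT event_date, metric, value FROM analytics_events " +
    "WHERE event_date BETWEEN $1 AND $2 AND role = $3";
  if (tags.length > 0) {
    values.push(tags);
    text += " AND tags && $4"; // Postgres array overlap: row has any of the tags
  }
  return { text, values };
}
```

With node-postgres the result can be passed straight through, e.g. `await pool.query(buildAnalyticsQuery(req.query))`; a validation error should map to a 400 response rather than a 500.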

Frontend prompt:

“Generate a responsive React dashboard with filtering controls, Chart.js graphs, and role-based conditional rendering.”
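Role-based conditional rendering usually reduces to a permission lookup that components consult before rendering. Below is a minimal sketch, assuming three hypothetical roles and widget names (none of them come from our codebase). It is plain JavaScript so the mapping can be unit-tested apart from React, and it denies by default.

```javascript
// Hypothetical role -> visible widgets. A Map avoids accidental hits on
// Object.prototype keys such as "constructor".
const WIDGET_ACCESS = new Map([
  ["admin", ["usageChart", "revenueChart", "userTable", "exportButton"]],
  ["analyst", ["usageChart", "revenueChart", "exportButton"]],
  ["viewer", ["usageChart"]],
]);

// Unknown roles get an empty list (deny by default).
// Returns a copy so callers can't mutate the permission table.
function visibleWidgets(role) {
  return [...(WIDGET_ACCESS.get(role) ?? [])];
}

function canSee(role, widget) {
  return visibleWidgets(role).includes(widget);
}
```

In a React component the check becomes `{canSee(user.role, "revenueChart") && <RevenueChart />}`. The API still has to enforce the same permissions server-side; hiding a widget in the UI is not access control.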

Test prompt:

“Write Jest tests for backend and React Testing Library tests for frontend to cover all filter/role combinations.”
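Covering “all filter/role combinations” means enumerating the cartesian product of the test dimensions. A minimal sketch follows; the roles, date-range presets, and tag sets are hypothetical stand-ins for the real ones.

```javascript
// Cartesian product of any number of arrays:
// cartesian([1, 2], ["a", "b"]) -> [[1, "a"], [1, "b"], [2, "a"], [2, "b"]]
function cartesian(...dimensions) {
  return dimensions.reduce(
    (rows, values) => rows.flatMap((row) => values.map((v) => [...row, v])),
    [[]]
  );
}

// Hypothetical test dimensions; swap in the real roles and filter presets.
const roles = ["admin", "analyst", "viewer"];
const dateRanges = ["last7d", "last30d", "custom"];
const tagSets = [[], ["beta"], ["beta", "enterprise"]];

// 3 roles x 3 ranges x 3 tag sets = 27 cases
const cases = cartesian(roles, dateRanges, tagSets);
```

In a Jest file the rows feed directly into `test.each(cases)("role=%s range=%s tags=%j", (role, range, tags) => { ... })`. The case count grows multiplicatively, so each new filter dimension is worth weighing before it is added.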

AI output for all modules landed in under 6 hours. Integration took 1 day, including a fix for mismatched date formats.

Total: 2 days to release-ready build.
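The post doesn't say which date formats clashed during integration. A common version of this bug is one side sending US-style `MM/DD/YYYY` while the other expects ISO `YYYY-MM-DD`. The sketch below assumes exactly those two formats and normalizes at the API boundary; `toIsoDate` is a hypothetical helper.

```javascript
// Normalize a date string to ISO YYYY-MM-DD.
// Assumed inputs: "YYYY-MM-DD" or US-style "MM/DD/YYYY" (hypothetical pair).
function toIsoDate(input) {
  let year, month, day, m;
  if ((m = /^(\d{4})-(\d{2})-(\d{2})$/.exec(input))) {
    [, year, month, day] = m.map(Number);
  } else if ((m = /^(\d{1,2})\/(\d{1,2})\/(\d{4})$/.exec(input))) {
    [, month, day, year] = m.map(Number);
  } else {
    throw new RangeError(`unrecognized date format: ${input}`);
  }
  // Round-trip through UTC to reject impossible dates such as 02/30/2024;
  // UTC avoids the server's local time zone shifting the day.
  const d = new Date(Date.UTC(year, month - 1, day));
  if (d.getUTCFullYear() !== year || d.getUTCMonth() !== month - 1 || d.getUTCDate() !== day) {
    throw new RangeError(`invalid calendar date: ${input}`);
  }
  return d.toISOString().slice(0, 10);
}
```

Normalizing once, where requests enter the backend, keeps every downstream query and chart working with a single format.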

ChatGPT vs My Dev Team – bug and performance review

| Metric | Dev Team | AI Build |
| --- | --- | --- |
| Minor bugs | 7 | 5 |
| Medium issues | 2 | 1 |
| Major blockers | 0 | 1 (fixed in 2 hrs) |
| Performance warnings | 1 | 2 |
| Security vulnerabilities | 0 | 0 |

The AI build’s major blocker was a memory leak in chart rendering for large datasets; a targeted follow-up prompt fixed it within two hours.
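The post doesn't show the fix itself. Chart.js documents that `destroy()` cleans up the references and event listeners it stores for a chart and must be called before a canvas is reused, so re-creating charts on every data refresh without it is a common source of this kind of leak. The sketch below assumes that was the cause here. The `ChartRegistry` class is hypothetical, and the chart factory is injected so the pattern can be tested without a browser.

```javascript
// Keeps at most one live chart per key (e.g. per canvas).
// createChart is injected; in a browser it would be
//   (canvas, config) => new Chart(canvas, config)
class ChartRegistry {
  constructor(createChart) {
    this.createChart = createChart;
    this.charts = new Map(); // key -> chart instance
  }

  render(key, canvas, config) {
    // Tear down the previous instance before reusing the canvas.
    const existing = this.charts.get(key);
    if (existing) existing.destroy();
    const chart = this.createChart(canvas, config);
    this.charts.set(key, chart);
    return chart;
  }

  // Call on component unmount or page teardown.
  destroyAll() {
    for (const chart of this.charts.values()) chart.destroy();
    this.charts.clear();
  }
}
```

For refreshes that only change the data, mutating `chart.data` and calling `chart.update()` on the existing instance avoids re-creating the chart at all.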

ChatGPT vs My Dev Team – where humans led

- Awareness of legacy system nuances.

- Anticipation of rare permission edge cases.

- Code style perfectly matching internal conventions without extra guidance.

ChatGPT vs My Dev Team – where AI dominated

- No dependency delays between backend and frontend.

- Instant large-scale refactors.

- Zero overhead from meetings, context switching, or handoffs.

How Chatronix made the AI workflow faster and cleaner

Running prompts through Chatronix transformed this from “AI tool use” into a proper multi-model dev sprint. I pushed the same instructions to:

- ChatGPT

- Claude

- Gemini

- Grok

- Perplexity

With turbo mode, every model’s response landed in seconds. One Perfect Answer then merged the strongest logic, cleanest UI, and best performance tweaks into a single output, with no manual cherry-picking from separate code blocks.
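Chatronix’s internals aren’t public, so the following is not its API. As a generic illustration of the fan-out step (one prompt sent to several models concurrently, keeping whatever succeeds), here is a minimal sketch. The `clients` object and its `complete(prompt)` functions are hypothetical stand-ins for real SDK calls, and the sketch covers only the fan-out, not the merging.

```javascript
// Race a promise against a timeout, clearing the timer either way.
function withTimeout(promise, ms) {
  let timer;
  const timeout = new Promise((_, reject) => {
    timer = setTimeout(() => reject(new Error(`timed out after ${ms} ms`)), ms);
  });
  return Promise.race([promise, timeout]).finally(() => clearTimeout(timer));
}

// Send the same prompt to every model in parallel.
// clients: { [modelName]: { complete: (prompt) => string | Promise<string> } } (hypothetical)
async function fanOut(prompt, clients, timeoutMs = 30000) {
  const names = Object.keys(clients);
  // allSettled: one slow or failing model doesn't sink the others.
  const settled = await Promise.allSettled(
    names.map((name) =>
      // Promise.resolve().then(...) also captures synchronous throws.
      withTimeout(Promise.resolve().then(() => clients[name].complete(prompt)), timeoutMs)
    )
  );
  const answers = {};
  const errors = {};
  settled.forEach((result, i) => {
    if (result.status === "fulfilled") answers[names[i]] = result.value;
    else errors[names[i]] = String(result.reason?.message ?? result.reason);
  });
  return { answers, errors };
}
```

Collecting failures separately, instead of rejecting the whole batch, mirrors the workflow described above: one model timing out still leaves the other answers to compare.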

Key advantages I saw using Chatronix for this build:

- Multi-model input in one view – no switching tabs or losing context.

- Best-of-all-worlds output – backend from one model, frontend from another, merged automatically.

- Faster QA turnaround – fewer bugs from the start reduced test cycles.

- Lower build cost – reduced active developer hours by 80%.

| Benefit | Traditional AI use | Chatronix multi-model |
| --- | --- | --- |
| Response speed | Standard | Turbo mode, seconds |
| Output merging | Manual | One Perfect Answer |
| Model variety | Single-model | 6 integrated models |
| Debugging overhead | High | Significantly reduced |

If you’re running feature builds and want to cut delivery time without cutting quality, this kind of setup turns AI into an actual sprint team, not just a code generator. Run multiple AI models in one workspace and let One Perfect Answer handle the merging so you can focus on shipping.

Prompts to replicate my AI sprint

Breakdown task:

“Split this feature into backend, frontend, and testing modules with dependencies and estimated complexity.”

Backend code:

“Write production-ready backend code for [spec] with validation, error handling, and optimized queries.”

Frontend code:

“Build a responsive component for [spec] using [framework], with accessibility and performance best practices.”

Test coverage:

“Generate automated tests to cover all roles, filters, and high-load scenarios.”

Performance optimization:

“Identify and fix any bottlenecks in rendering and data retrieval under heavy load.”
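The prompts above use bracketed placeholders such as `[spec]` and `[framework]`. When reusing them across features, a small fill step catches a placeholder left unfilled before it reaches a model. A minimal sketch; the `fillPrompt` helper and the `PROMPTS` keys are hypothetical.

```javascript
// Replace [name] placeholders; fail loudly if any remain unfilled.
function fillPrompt(template, vars) {
  const missing = new Set();
  const filled = template.replace(/\[([a-zA-Z]+)\]/g, (match, name) => {
    if (Object.prototype.hasOwnProperty.call(vars, name)) return String(vars[name]);
    missing.add(name);
    return match; // leave it in place; we throw below
  });
  if (missing.size > 0) {
    throw new Error(`unfilled placeholders: ${[...missing].join(", ")}`);
  }
  return filled;
}

// Templates copied from the prompts above.
const PROMPTS = {
  backend: "Write production-ready backend code for [spec] with validation, error handling, and optimized queries.",
  frontend: "Build a responsive component for [spec] using [framework], with accessibility and performance best practices.",
};
```

Keeping the templates in one place also means every model, and every later sprint, gets word-for-word the same instructions, which makes their outputs easier to compare.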

Cost comparison

| Cost (USD) | Dev Team (8 days) | AI via Chatronix (2 days) |
| --- | --- | --- |
| Developer hours | $3,840 | $480 |
| QA hours | $960 | $320 |
| Total | $4,800 | $800 |
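The totals and the implied savings follow directly from the cost comparison figures. The table doesn’t state hourly rates, so this check works only with the dollar amounts shown.

```javascript
// Dollar figures from the cost comparison table.
const devTeam = { devHours: 3840, qaHours: 960 };
const aiBuild = { devHours: 480, qaHours: 320 };

const total = (c) => c.devHours + c.qaHours;
const reductionPct = (before, after) => ((before - after) / before) * 100;

const teamTotal = total(devTeam); // 4800
const aiTotal = total(aiBuild); // 800

console.log(`Total: $${teamTotal} vs $${aiTotal}`);
console.log(`Dev cost reduction: ${reductionPct(devTeam.devHours, aiBuild.devHours).toFixed(1)}%`); // 87.5%
console.log(`QA cost reduction: ${reductionPct(devTeam.qaHours, aiBuild.qaHours).toFixed(1)}%`); // 66.7%
console.log(`Overall reduction: ${reductionPct(teamTotal, aiTotal).toFixed(1)}%`); // 83.3%
```

Assuming a single blended hourly rate, the developer-cost reduction equals the reduction in developer hours; overall, the AI build cost one-sixth as much.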

<blockquote class="twitter-tweet"><p lang="en" dir="ltr">I compiled a prompt engineering "best practices and tricks" doc 😀<br><br>Created based on OpenAI <a href="https://twitter.com/isafulf?ref_src=twsrc%5Etfw">@isafulf</a>'s prompt engineering talk at <a href="https://twitter.com/NeurIPSConf?ref_src=twsrc%5Etfw">@NeurIPSConf</a> and enriched with more details, examples, and tips.<br><br>I focused on making the document as comprehensive and concise as possible, and it… <a href="https://t.co/nPjux6JSzO">pic.twitter.com/nPjux6JSzO</a></p>— Sarah Chieng (@SarahChieng) <a href="https://twitter.com/SarahChieng/status/1741926266087870784?ref_src=twsrc%5Etfw">January 1, 2024</a></blockquote> <script async src="https://platform.twitter.com/widgets.js" charset="utf-8"></script>

My takeaway from ChatGPT vs My Dev Team

This wasn’t about replacing people; it was about removing the “slow parts” of development. Now every new feature starts with an AI draft in Chatronix, which the team then hardens, integrates, and styles to spec.

We’ve cut delivery times by more than half without sacrificing stability. And with One Perfect Answer, I no longer waste hours comparing separate model outputs: I get one clean, merged build that’s already close to production-ready.