From automating threat detection to optimizing remediation efforts, AI is revolutionizing how security teams manage risk. In the Application Security Posture Management (ASPM) space, for instance, vendors are using AI to minimize false positives and improve operational efficiency. However, this wave of innovation also creates a new challenge: it further fragments AI solutions, security findings, and issues.

While nearly every modern security tool incorporates some form of generative AI, these tools often operate in silos and fail to communicate with one another effectively. As a result, security teams struggle to integrate AI-driven insights into their broader cybersecurity strategies. In February 2023, for example, Microsoft’s AI-powered Bing Chat was hit by prompt injection attacks in which users manipulated the AI into revealing its internal configuration and instructions. The vulnerability arose because the system could not distinguish between user inputs and system instructions, a gap that could have been mitigated through integrated security protocols across AI components.

Without a unified approach, organizations risk underutilizing AI’s potential, missing critical threats, or acting on false information generated by AI. To maximize the benefits of AI in security, the industry must prioritize connectivity and interoperability across AI-powered security tools and systems.

The Challenge of AI Fragmentation

AI-powered security solutions are designed to detect vulnerabilities, automate security operations, and provide insights that inform security teams. Yet despite their sophistication, many of these tools operate independently, creating blind spots that adversaries can exploit. Worse, they can create more work for security operations centers (SOCs). The key challenge for security leaders is not whether to adopt AI, but how to ensure it is used for the right purposes and that all AI solutions work together cohesively.

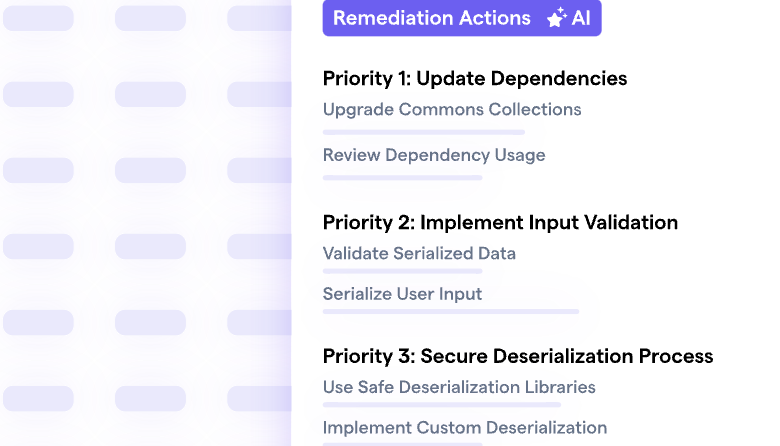

Many organizations rely on a combination of AI-driven endpoint detection and response (EDR), security information and event management (SIEM), cloud security posture management (CSPM), and ASPM tools. Each of these solutions brings unique strengths, but without interoperability, security teams face an overwhelming flood of alerts and conflicting recommendations. This lack of integration also slows threat response and remediation and makes it difficult to extract and act on insights.

The Risks of Disconnected AI Systems

The consequences of AI fragmentation in cybersecurity extend beyond inefficiency. For example, growing reliance on AI coding assistants introduces new attack vectors. Assistants trained on large codebases can inadvertently ingest and propagate vulnerabilities, creating openings for attackers to inject malicious code. Threat actors are also actively using AI to develop more sophisticated attacks, including automated phishing campaigns and AI-generated malware that evades traditional detection methods.

Attackers have also corrupted AI behavior by injecting malicious data into systems that are trained on user-generated data without cross-system verification, a tactic known as data poisoning. Without interconnected monitoring across AI training pipelines, these vulnerabilities can persist undetected.

Another pressing concern is AI explainability. Security teams need clear visibility into how AI models make decisions to ensure transparency, accountability, and reliability. When AI-powered security tools operate in silos, it becomes nearly impossible to trace how a given security decision was reached, raising concerns about bias, accuracy, and compliance with regulatory requirements.

Building the Connective Tissue Between Security Solutions’ AI Capabilities

To address these challenges, organizations must rethink how they integrate AI into their security strategies. Unifying AI-driven security solutions so they can communicate seamlessly will only grow in importance. Without a concerted effort to bridge the gaps between AI-powered tools, security teams will continue to struggle with fragmented insights, inefficient threat responses, and an inability to fully capitalize on AI’s potential. A balanced approach that integrates AI with human expertise will also improve interoperability and accountability.

Security vendors should prioritize open standards and API-based integrations to streamline data exchange between AI-driven security tools. Many security solutions currently operate as standalone systems, limiting their ability to share insights across an organization’s security stack. By adopting standardized APIs, organizations can create a more cohesive security ecosystem in which AI-powered tools work in tandem rather than in isolation. This interoperability will allow security teams to correlate AI-generated findings across multiple solutions, reducing blind spots and improving overall threat visibility. An open approach to AI interoperability also fosters industry collaboration, leading to more effective cybersecurity solutions overall.
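To make cross-tool correlation more concrete, here is a minimal Python sketch, assuming each tool's output can be mapped into one shared schema. The `Finding` class, the `from_aspm` and `from_cspm` adapters, and the raw field names they read are all hypothetical; real tools expose their own formats, and this per-vendor mapping is exactly the work that standardized formats and APIs aim to reduce. The sketch groups findings by asset and ranks assets flagged by more than one tool first.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical normalized severity scale shared by all tools.
SEVERITY = {"low": 1, "medium": 2, "high": 3, "critical": 4}

@dataclass(frozen=True)
class Finding:
    """Hypothetical common schema that each tool's output is mapped into."""
    source: str    # which tool produced the finding
    asset_id: str  # shared asset identifier, e.g. a service name
    title: str     # human-readable description
    severity: int  # 1 (low) to 4 (critical)

# Hypothetical adapters: the raw field names below are invented for illustration.
def from_aspm(raw: dict) -> Finding:
    return Finding("aspm", raw["service"], raw["title"], SEVERITY[raw["severity"].lower()])

def from_cspm(raw: dict) -> Finding:
    return Finding("cspm", raw["resource_tag"], raw["rule"], int(raw["level"]))

def correlate(findings: list[Finding]) -> list[dict]:
    """Group findings by asset so signals from different tools are reviewed together."""
    by_asset: dict[str, list[Finding]] = defaultdict(list)
    for f in findings:
        by_asset[f.asset_id].append(f)
    merged = [
        {
            "asset_id": asset,
            "sources": sorted({f.source for f in group}),
            "max_severity": max(f.severity for f in group),
            "titles": [f.title for f in group],
        }
        for asset, group in by_asset.items()
    ]
    # Assets flagged by more tools come first, then higher severity.
    merged.sort(key=lambda m: (len(m["sources"]), m["max_severity"]), reverse=True)
    return merged

if __name__ == "__main__":
    findings = [
        from_aspm({"service": "payments-api", "title": "SQL injection in /orders", "severity": "High"}),
        from_cspm({"resource_tag": "payments-api", "rule": "Load balancer exposed to internet", "level": 2}),
        from_cspm({"resource_tag": "logs-bucket", "rule": "Bucket allows public read", "level": 4}),
    ]
    for item in correlate(findings):
        print(item["asset_id"], item["sources"], item["max_severity"])
```

With this sample data, `payments-api` ranks ahead of `logs-bucket` even though its highest individual severity is lower, because two independent tools flag it. Ranking by the number of corroborating sources is only one illustrative heuristic; a production correlation engine would weigh many more signals.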

Implement AI Governance and Human Controls

AI’s growing role in cybersecurity also makes it essential to have robust governance and compliance measures in place, helping ensure that AI-powered security tools align with regulations and ethical considerations. Organizations must establish clear governance frameworks that define AI’s role in decision-making for transparency and accountability. This includes implementing guardrails that prevent AI from making unchecked security decisions and ensuring training data is diverse to mitigate bias. Without proper governance, AI models can become susceptible to adversarial manipulation or generate unreliable outputs that compromise security posture. By embedding compliance and ethical oversight into AI-driven security strategies, organizations can mitigate risks while maximizing AI’s potential in a responsible manner.

While AI offers immense capabilities for processing vast amounts of security data, human intuition and expertise are irreplaceable. AI is best used to augment human intelligence by filtering, correlating, and surfacing critical security insights so that analysts can focus on strategic decision-making. Security teams should use AI for preliminary threat detection and prioritization and ensure that human experts step in when deeper investigation or nuanced judgment is required. For instance, AI can efficiently analyze patterns in threat data to identify anomalies, but human analysts should interpret intent, assess potential consequences, and make final decisions. Striking the right balance between automation and human oversight is essential to building a resilient cybersecurity strategy in which AI enhances, rather than replaces, security expertise.
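This division of labor can be sketched as a simple routing step. The Python below is a minimal illustration under stated assumptions: the `anomaly_score` and `confidence` fields stand in for whatever a detection model actually outputs, and the thresholds are hypothetical values that a real team would tune. The only decision the model makes on its own is closing alerts it is confident are benign; anything uncertain or serious lands in a queue for a human analyst.

```python
from dataclasses import dataclass
from enum import Enum

class Route(Enum):
    AUTO_CLOSE = "auto_close"          # model is confident the alert is benign
    ANALYST_QUEUE = "analyst_queue"    # a human reviews before any action is taken
    URGENT_ANALYST = "urgent_analyst"  # high anomaly: escalate to a human immediately

@dataclass(frozen=True)
class Alert:
    alert_id: str
    anomaly_score: float  # hypothetical model output: 0.0 (normal) to 1.0 (highly anomalous)
    confidence: float     # hypothetical model confidence in that score: 0.0 to 1.0

# Hypothetical thresholds; real values would be tuned per environment.
BENIGN_MAX = 0.2
URGENT_MIN = 0.8
MIN_CONFIDENCE = 0.9

def route(alert: Alert) -> Route:
    """First-pass sorting by the model; anything serious or uncertain goes to a person."""
    if alert.anomaly_score >= URGENT_MIN:
        return Route.URGENT_ANALYST
    if alert.anomaly_score <= BENIGN_MAX and alert.confidence >= MIN_CONFIDENCE:
        return Route.AUTO_CLOSE
    return Route.ANALYST_QUEUE

def triage(alerts: list[Alert]) -> dict[Route, list[str]]:
    """Bucket alerts by route, most anomalous first within each bucket."""
    queues: dict[Route, list[Alert]] = {r: [] for r in Route}
    for alert in alerts:
        queues[route(alert)].append(alert)
    return {
        r: [a.alert_id for a in sorted(q, key=lambda a: a.anomaly_score, reverse=True)]
        for r, q in queues.items()
    }

if __name__ == "__main__":
    alerts = [
        Alert("a1", anomaly_score=0.05, confidence=0.95),
        Alert("a2", anomaly_score=0.10, confidence=0.60),
        Alert("a3", anomaly_score=0.92, confidence=0.70),
        Alert("a4", anomaly_score=0.50, confidence=0.99),
    ]
    for r, ids in triage(alerts).items():
        print(r.value, ids)
```

In this sample, `a3` is escalated immediately, `a1` is closed automatically, and `a4` and `a2` wait for an analyst, with the more anomalous `a4` listed first. Note that `a2` is not auto-closed despite its low score, because the model is not confident enough. Leaving containment and other consequential actions out of the automated path entirely is one way to apply the guardrails described in the governance discussion.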

The Future of AI-Driven Security

AI has the potential to revolutionize security operations, but only if we address the fragmentation challenge that it currently worsens. Security leaders must advocate for greater interoperability between AI solutions, pushing vendors to develop tools that work together rather than in isolation.

The goal should not just be to adopt AI but to optimize its use across the security stack. By bringing AI-powered security tools together, organizations can create a more resilient, adaptive, and intelligent cybersecurity posture. It’s time to bridge the AI divide and ensure that innovation also leads to better security outcomes that are both effective and trustworthy.