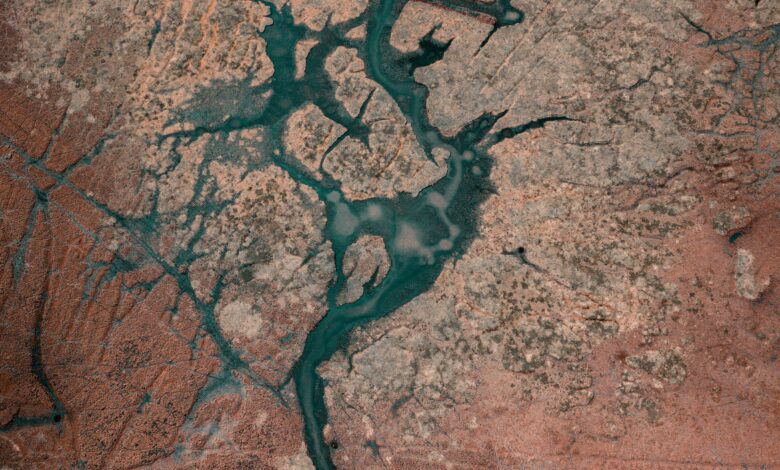

The dazzling promise of AI hides a terrifying truth: it's draining natural resources at an alarming rate. In Spain's arid Aragón region, data centers owned by Amazon, Microsoft, and Google have requested up to 48% more water to expand operations, drawing heavily on scarce supplies in one of Europe's driest areas. Across the continent, the data center industry consumed roughly 62 million cubic meters of water in 2024, the equivalent of nearly 25,000 Olympic-size swimming pools. These aren't just corporate campuses but thirsty industrial complexes, often built in drought-hit regions with aging water infrastructure. As AI-capable servers multiply, local communities are left competing for every drop.

The AI boom isn't just a software story; it's an infrastructure race with massive energy and cooling needs. Power consumption from AI data centers is projected to triple by 2030, driven by rapid growth in model size and usage. Training a single large language model such as GPT-4 can consume over 50,000 MWh of electricity, enough to power more than 4,000 households for a year. Inference, the ongoing work of answering user queries, adds even more load on top of that. Unlike traditional internet traffic, AI workloads require sustained, intense compute power and sophisticated cooling to prevent overheating. Yet Big Tech keeps expanding data centers, often in regions with limited oversight and little transparency about energy or water use.

While AI's energy use is well scrutinised, its water footprint remains largely hidden. Water cools the servers that train and run AI models, especially during high-intensity tasks and in hotter months when demand peaks. Many data centers draw water at night to ease daytime strain, but the volumes remain staggering: cooling systems can consume millions of gallons a month, and the pressure is greatest where rising temperatures already stress water infrastructure. And unlike electricity, which can increasingly come from renewable sources, water has no substitute.

This problem is escalating in Europe. Beyond Aragón, data centers are spreading rapidly through drought-prone zones, particularly in southern France, often without public disclosure or oversight. Some projects have been greenlit with little transparency, sparking backlash from residents who now compete with hyperscale cloud providers for water. Because water-based cooling is cheap and energy-efficient, operators favor it, and that preference often draws them to water-stressed regions where water remains affordable. When choosing locations, operators weigh real estate and power costs first and treat water as one of the last considerations, risking long-term harm to ecosystems and communities.

Local communities are pushing back. In Colón, Mexico, a Microsoft data center was approved to extract 25 million liters of groundwater annually, almost 24% of the municipality's water allocation, even as locals face drought, rationing, and crop losses. Residents and environmentalists accused authorities of prioritising foreign tech investment over public needs. Locals organised protests, occupied water infrastructure, and launched sit-ins to demand access to piped water. The government responded by promising to build new aqueducts and to guarantee water access for residents. Similar frustrations have surfaced in North America's “Data Center Alley” in Northern Virginia, where water use surged from 1.13 billion gallons in 2019 to 1.85 billion gallons in 2023, a 64% increase, drawing scrutiny amid drought and heat waves. Around the world, utilities and regulators are struggling to keep up with exploding power demand from AI and data center growth. The result: strained grids, higher bills, and rising anxiety among rural residents about access to water and electricity as AI's footprint expands.

This unchecked growth isn't accidental: it reflects systemic priorities that favor rapid expansion over sustainability. Governments offer tax breaks and relaxed rules to attract Big Tech, seeing data centers as economic engines. Yet many regions lack policies to assess or limit water and energy use. Data center operations remain opaque, and local communities rarely have a say during approvals. This regulatory gap lets corporations advance projects with minimal oversight, keeping their environmental and social costs out of view.

Without stronger regulation and smarter infrastructure, this growth risks deepening a digital divide in which resources extracted from vulnerable communities fuel global tech expansion. The challenge is to balance AI's promise with environmental care and community resilience.

Addressing this crisis requires urgent coordination. Regulation lags behind reality, and governments must enforce clear limits on water and energy use, especially in high-risk areas. Innovation is critical, too. GPU-intensive AI workloads demand dense compute power and constant cooling, pushing data centers to their limits. Distributed compute networks and regionally adaptive infrastructure can spread this demand, easing pressure on stressed local systems. Advanced cooling and smarter grid integration must also be part of the solution. Without both policy reform and infrastructure innovation, AI's environmental costs will become unsustainable.

Signs of progress are emerging, however. Policymakers in Europe and the U.S. are debating stricter disclosure rules for data center water and energy use, along with incentives for greener, modular builds. Community-led activism is demanding transparency and accountability, challenging the “build now, answer later” approach. But time is short. Without bold structural change, rising AI compute demand will collide with finite planetary resources. A sustainable AI future is possible, but only if environmental responsibility guides how we build the systems that power it.