AI infrastructure is entering uncharted territory as enterprises race to scale artificial intelligence products. Unlike traditional cloud services, where demand curves for compute, storage, and networking can be forecast with relative confidence, the arrival of GPU-driven AI workloads has created unprecedented complexity in capacity planning. Performance leaps that outpace Moore’s Law, fragile supply chains, and fast-evolving model efficiency mean the stakes are higher than ever, both financially and operationally.

Few people are as well positioned to address these challenges as Raghav Parthasarathy. A seasoned finance leader in cloud economics and infrastructure strategy, Raghav has shaped GPU forecasting models, pricing frameworks, and investment plans for some of the world’s largest hyperscale platforms. His work bridges finance and engineering to ensure enterprises can deliver high-performance AI services while avoiding the risks of underutilization or shortage. In this conversation with AI Journal, Raghav shares his insights on the future of GPU demand forecasting, lessons from large-scale deployments, and the evolving role of financial and engineering teams in the age of AI.

1. What makes forecasting GPU demand for AI workloads uniquely challenging compared to traditional cloud infrastructure planning?

In traditional cloud infrastructure planning, demand curves, especially for foundational services such as compute, storage, and networking, are relatively predictable. Growth is generally tied to customer adoption and migration timelines, and once you account for seasonality by modeling the peak periods of your key customers, forecasts become fairly accurate. GPU forecasting for AI workloads, by contrast, involves far more complexity at this stage.

Three factors make it uniquely challenging. First, GPU performance is improving at a pace that outstrips Moore’s Law, which makes historical hardware curves unreliable. Second, efficiency gains in newer models mean that the same inference job on a next-generation GPU can require significantly less raw processing power. Third, GPUs face supply-side constraints: long manufacturing lead times and fragile global supply chains.

The consequences of inaccurate forecasting are therefore more severe. Under-forecasting means not having sufficient capacity for paying customers, while over-forecasting risks billions of dollars stranded in underutilized assets that could be superseded within a very short span by the next generation of GPUs.

2. How do you approach aligning infrastructure investments with the unpredictable growth patterns of enterprise AI products?

I’ll add quite a few callouts here. First and foremost, the key is agility. You have to accept that you will be wrong and decide how often you want to learn why, so you can correct the causes of your forecast errors. This leads to my second callout: to learn and re-learn, it is very important to build a robust forecasting muscle and develop the systems that let you iterate on your forecasts as new signals arrive. That leads to my third callout: it is critical to understand the key drivers, especially for inference workloads, be it prompts, tokens, or user counts. Lastly, as always for businesses at an early stage, it is important to have range-based forecasts or scenario modelling and, most importantly, to prepare for either extreme end of the forecast.
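To make the last two callouts concrete, here is a minimal Python sketch of a driver-based, range-based forecast. The drivers (daily active users, prompts per user, tokens per prompt), the class and function names, and all of the numbers are hypothetical placeholders, not figures from any real deployment. The point is only that each scenario is built from explicit drivers, so both extreme ends can be planned for and the drivers revisited as new signals arrive.

```python
from dataclasses import dataclass


@dataclass
class Scenario:
    """One forecast scenario expressed through hypothetical usage drivers."""
    name: str
    daily_active_users: float
    prompts_per_user_per_day: float
    tokens_per_prompt: float

    def daily_tokens(self) -> float:
        # Token demand is the product of the three usage drivers.
        return self.daily_active_users * self.prompts_per_user_per_day * self.tokens_per_prompt


def forecast_range(scenarios: list[Scenario]) -> dict[str, float]:
    """Return the low and high ends of token demand across all scenarios."""
    demand = [s.daily_tokens() for s in scenarios]
    return {"low": min(demand), "high": max(demand)}


# Illustrative numbers only; not drawn from any real product.
scenarios = [
    Scenario("low", 1_000_000, 5, 800),
    Scenario("base", 2_000_000, 8, 1_000),
    Scenario("high", 4_000_000, 12, 1_200),
]

for s in scenarios:
    print(f"{s.name:>5}: {s.daily_tokens():,.0f} tokens/day")
print(forecast_range(scenarios))
```

When a new usage signal arrives, only the affected driver needs updating, and the whole range is recomputed from it.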

3. Can you share how demand forecasting models help balance performance needs with cost efficiency when deploying large-scale AI systems?

Forecasting models can be a key tool for meeting performance needs and achieving cost efficiency at the same time. AI product owners want to guarantee low-latency inference and fast training cycles, while finance leaders want to deliver that performance at the lowest possible cost. Cost reduction can come in many flavors; let me highlight two. First, accurate forecasting ensures high utilization of assets and therefore lower stranded cost. Second, accurate forecasting ensures that the right hardware is purchased, so no one commits to large quantities of a prior-generation, lower-performing GPU after a newer generation has already launched. Forecasting also helps balance performance and cost when supply and demand diverge.

For example, if the model suggests potential overcapacity in the near term, engineering teams can design workloads that increase utilization, such as spot-like tiers, which, as we know, most major AI infrastructure providers now offer. On the other hand, if the forecast signals higher demand than available supply, capacity can be ring-fenced for mission-critical workloads while other usage is shaped.
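The two branches above can be written as a simple decision rule. The sketch below is hypothetical: the function name, the 10% tolerance band, and the GPU counts are illustrative assumptions, and a real planning process would weigh far more inputs than a single forecast-versus-supply gap.

```python
def plan_capacity(forecast_gpus: int, available_gpus: int, tolerance: float = 0.10) -> tuple[str, int]:
    """Hypothetical rule: map the gap between forecast demand and supply to an action.

    Returns the action and the signed surplus (positive = idle GPUs, negative = shortfall).
    """
    if available_gpus <= 0:
        raise ValueError("available_gpus must be positive")
    surplus = available_gpus - forecast_gpus
    band = tolerance * available_gpus  # gaps inside this band are treated as noise
    if surplus > band:
        # Near-term overcapacity: raise utilization, e.g. via a spot-like tier.
        return "offer spot-like tier", surplus
    if surplus < -band:
        # Demand exceeds supply: protect mission-critical work, shape the rest.
        return "ring-fence mission-critical workloads", surplus
    return "hold current allocation", surplus


for demand in (8_000, 9_500, 12_000):
    print(demand, plan_capacity(demand, available_gpus=10_000))
```

The tolerance band keeps small forecast wobbles from triggering constant tier changes; where to set it is a judgment call.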

4. In your experience, what are the biggest risks of underestimating GPU requirements and of overestimating them?

Both errors are costly but in different ways. Underestimating demand means customers don’t get access to the capacity they need, which can slow adoption, result in lost revenue, and create reputational risk.

Overestimating demand comes with its own challenges. GPUs are capital-intensive, and their utility is highly time-sensitive given the speed of innovation. Over-procurement leads to idle infrastructure that depreciates quickly. Unlike traditional servers, GPUs don’t retain their value for long once the next generation is out.

5. How can forecasting strategies evolve as workloads shift from model training to inference, which often becomes the larger share of long-term cost?

Training workloads are fundamentally different from inference workloads. Training a model requires exposing it to vast datasets and adjusting billions of parameters. It is extremely compute-intensive, often requiring tens of thousands of GPUs running in parallel for days or weeks. Training jobs also come in bursts: they run at massive scale during experimentation and then may go idle until the next iteration. Importantly, training is not latency-sensitive. If a training job takes 12 hours instead of 10, it may slow down research cycles, but it doesn’t directly impact end users.

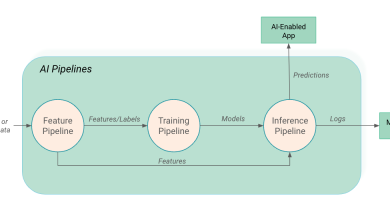

Inference, on the other hand, serves real-time queries from users, generating text, images, or predictions based on new input. It scales directly with adoption: as more users send prompts, the infrastructure load grows. Unlike training, inference is highly latency-sensitive, and that responsiveness requires careful provisioning of GPU resources close to demand.

Because of this, forecasting strategies for training and inference must differ. Forecasting inference demand requires a much tighter connection to product usage statistics, such as active users, prompt frequency, and session duration, as well as to efficiency improvements. These usage signals must be modeled into the forecast so that infrastructure scales in step with customer behavior.

Let us also not forget model efficiency: the number of tokens needed is often lower in newer models, and that must be factored in. So, to summarize, inference forecasting requires much tighter alignment with product usage and model efficiency.
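To show how usage drivers and model efficiency flow into GPU counts, here is a simplified, throughput-based sizing sketch. Every parameter name and number in it is a hypothetical assumption, including the per-GPU token throughput, the peak-to-average ratio, and the target utilization; a real model would also account for latency targets, batching, memory limits, and regional placement. The comparison shows how a newer model that needs fewer tokens per prompt (assumed here to be 30% fewer) reduces the GPU requirement for the same user demand.

```python
import math

SECONDS_PER_DAY = 86_400


def inference_gpus(
    daily_active_users: float,
    prompts_per_user_per_day: float,
    tokens_per_prompt: float,
    peak_to_average: float,
    tokens_per_sec_per_gpu: float,
    target_utilization: float,
) -> int:
    """Hypothetical sizing: GPUs needed to serve peak token throughput."""
    avg_tokens_per_sec = (
        daily_active_users * prompts_per_user_per_day * tokens_per_prompt / SECONDS_PER_DAY
    )
    peak_tokens_per_sec = avg_tokens_per_sec * peak_to_average
    # Plan for headroom: each GPU is only loaded up to the target utilization.
    usable_tokens_per_sec_per_gpu = tokens_per_sec_per_gpu * target_utilization
    return math.ceil(peak_tokens_per_sec / usable_tokens_per_sec_per_gpu)


# Illustrative assumptions only.
common = dict(
    daily_active_users=2_000_000,
    prompts_per_user_per_day=8,
    peak_to_average=3.0,
    tokens_per_sec_per_gpu=2_500,
    target_utilization=0.6,
)
current = inference_gpus(tokens_per_prompt=1_000, **common)
newer = inference_gpus(tokens_per_prompt=700, **common)  # assumed 30% fewer tokens
print(current, newer)
```

Under these assumptions the same user base needs roughly 30% fewer GPUs on the newer model, which is why efficiency belongs in the forecast alongside usage.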

6. What lessons can enterprises learn from large-scale deployments, like Copilot, about matching GPU supply with dynamic AI workload behavior?

As I mentioned earlier, I cannot emphasize enough the importance of agility. Forecasts will always carry uncertainty, and the way to succeed is by pairing initial capacity planning with robust telemetry and agile reallocation. For example, if demand for one product is growing much faster than demand for another product to which you had allocated GPUs, you will need to reallocate those GPUs within days; if you cannot, develop a shared pool for all of these workloads.
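One way to see why a shared pool helps: when products peak at different times, the pool only has to cover the peak of combined demand rather than the sum of each product’s individual peak. The sketch below uses made-up GPU demand per time bucket for two hypothetical products, and the function name is illustrative; it ignores practical constraints such as hardware compatibility, data locality, and isolation requirements.

```python
def dedicated_vs_pooled(demand_by_product: dict[str, list[int]]) -> tuple[int, int]:
    """Return GPUs needed with per-product dedicated pools vs. one shared pool.

    Each list holds GPU demand per time bucket; all lists must cover the same buckets.
    """
    series = list(demand_by_product.values())
    if len({len(s) for s in series}) != 1:
        raise ValueError("all demand series must be non-empty and have the same length")
    # Dedicated pools: each product is sized for its own peak.
    dedicated = sum(max(s) for s in series)
    # Shared pool: sized for the peak of combined demand in any single bucket.
    pooled = max(sum(bucket) for bucket in zip(*series))
    return dedicated, pooled


# Illustrative GPU demand across four time buckets.
demand = {
    "product_a": [100, 300, 500, 200],
    "product_b": [400, 250, 100, 350],
}
print(dedicated_vs_pooled(demand))
```

Here dedicated pools would need 900 GPUs while a shared pool needs 600, and the same pool absorbs shifts in the product mix without a manual reallocation.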

Another lesson is that early adoption curves can be deceptive. Usage often ramps more slowly than expected at first and then accelerates rapidly once customers integrate AI into their workflows. That means organizations should plan for “S-curve” adoption: start conservative, but be ready to scale fast when adoption grows rapidly.
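A logistic curve is one common way to model the S-shaped adoption described here. The sketch below is an illustration under assumed parameters (a ceiling of 1,000,000 users, a growth rate of 0.8 per month, and an inflection at month 9); none of these values come from a real deployment. Month-over-month additions stay small early, then jump near the inflection point, which is when capacity needs to scale quickly.

```python
import math


def s_curve_users(month: float, ceiling: float, growth_rate: float, midpoint: float) -> float:
    """Logistic adoption: slow start, rapid acceleration around `midpoint`, then saturation."""
    return ceiling / (1.0 + math.exp(-growth_rate * (month - midpoint)))


CEILING, RATE, MIDPOINT = 1_000_000, 0.8, 9  # assumed, illustrative parameters

previous = s_curve_users(0, CEILING, RATE, MIDPOINT)
for month in range(1, 19):
    users = s_curve_users(month, CEILING, RATE, MIDPOINT)
    print(f"month {month:2d}: {users:>9,.0f} users (+{users - previous:,.0f})")
    previous = users
```

Planning against the curve rather than the early months alone is what "start conservative, but be ready to scale fast" looks like in a model.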

7. How do you see the role of financial strategy teams and engineering teams converging around infrastructure planning for AI?

In the past, finance managed budgets and engineering managed capacity, often in silos. With GPUs, that separation doesn’t work. The scale of investment and the volatility of demand mean finance must understand workload patterns. This also means there is a greater need for engineering and finance to own shared forecasting models and joint governance forums.

This convergence is actually one of the most exciting shifts in the industry. It forces teams and/or organizations to think holistically. The answers require both technical and financial expertise. As AI becomes core to every enterprise, I see finance and engineering operating almost as one team, making infrastructure decisions together.

8. Looking ahead, what innovations in forecasting tools or methodologies do you think will be most critical for sustaining the next wave of AI growth?

I wish I had a crystal ball for this, but since I don’t, let me state what I think will be crucial. First, continued advancement in forecasting methods, be it driver-based models closely aligned to product usage or ML models, is going to be critical.

Second, as performance continues to increase rapidly across GPU hardware generations, forecasts will need to cover not just one hardware type but an ecosystem of hardware, each with a different performance-cost profile. The ability to forecast across this heterogeneous environment and optimize workload placement will be critical.