The Transformation of Business and Cybersecurity

AI is reshaping industries by producing ever-increasing amounts of unstructured data in the form of text, images, video and audio. This shift is revolutionising business operations, but it is also transforming the cybersecurity landscape. Traditionally, security experts focused on protecting structured assets such as databases and network architectures. The focus is now shifting toward the complexities of safeguarding unstructured data, which is less predictable and harder to secure.

What Is Unstructured Data and Why Is It a Security Risk?

Unstructured data includes everything from AI-generated emails and chatbot logs to multimedia files and synthetic content created by generative models. Because it lacks a consistent format or schema, it often bypasses traditional security filters designed for structured datasets. Unstructured data can also be created and shared rapidly, which makes it difficult for organisations to classify or monitor in real time.

The Fluidity of AI-Generated Data and Security Challenges

The challenge of securing unstructured data stems largely from its fluidity. For example, a generative AI tool may produce an internal company memo that accidentally includes sensitive financial projections or personal employee details. Unlike records in a structured database with predefined fields and access controls, this kind of output may be overlooked or improperly stored, which exposes organisations to regulatory risks and cyberattacks.
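One way to picture a first line of defence is a scan that checks generated text for sensitive-looking content before it is stored or shared. The sketch below is a minimal illustration, not a production detector: the pattern names, the regular expressions and the `scan_text` helper are all assumptions made for this example, and real tooling would need far more robust and locale-aware rules.

```python
import re

# Hypothetical patterns for illustration only; real detectors need to be
# much broader and tuned to the organisation's own data.
PATTERNS = {
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "money_figure": re.compile(
        r"[£$€]\s?\d[\d,]*(?:\.\d+)?(?:k|m|bn|million|billion)?\b",
        re.IGNORECASE,
    ),
}


def scan_text(text: str) -> dict:
    """Return the matches found for each pattern name (names with no match are omitted)."""
    findings = {}
    for name, pattern in PATTERNS.items():
        matches = pattern.findall(text)
        if matches:
            findings[name] = matches
    return findings


memo = (
    "Q3 revenue is projected at £4.2m. "
    "Contact jane.doe@example.com for payroll queries."
)
print(scan_text(memo))
```

A memo that triggers any pattern could be routed for review or stored with tighter access controls instead of being saved alongside ordinary documents.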

The Rise of Machine Identities as a Cybersecurity Threat

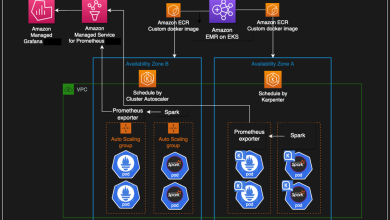

To address these vulnerabilities, companies must adopt new cybersecurity strategies tailored to the reality of AI-generated content. One of the most pressing concerns is the proliferation of machine identities: the digital credentials, tokens and keys that AI tools and cloud services use to access systems and communicate securely. As businesses scale their AI capabilities, they inadvertently create a growing number of these non-human accounts.

How Cybercriminals Exploit AI-Generated Credentials

These machine identities become prime targets for attackers if they are mismanaged. For example, compromised API keys or cloud service credentials can grant unauthorised access to critical infrastructure or sensitive datasets. Attackers are increasingly focused on stealing or exploiting these credentials, whether to manipulate AI-generated outputs by injecting false data into decision-making pipelines or to execute remote code through exposed endpoints.

Consider an AI-powered customer service chatbot that has administrative access to billing systems: if it is compromised, an attacker could reroute transactions or exfiltrate client data.

IAM Strategies for Securing AI-Driven Systems

Identity and access management (IAM) must evolve to address this new threat landscape, because it is no longer sufficient to manage only human user credentials. Organisations must build systems that automatically rotate and expire machine credentials. Strict permission boundaries should be put in place so that AI tools can access only what is necessary for their function. Anomaly detection systems that monitor usage patterns in real time are also essential to identify unusual machine behaviour, such as sudden spikes in data access or communication with unknown endpoints.
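The first two controls above, expiring credentials and strict permission boundaries, can be sketched in a few lines. This is a minimal sketch under stated assumptions rather than a real IAM implementation: the `MachineCredential` class, the scope names and the 15-minute lifetime are all hypothetical, and a real system would also need secure storage and revocation of old tokens.

```python
import secrets
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone
from typing import FrozenSet, Optional


@dataclass
class MachineCredential:
    """A short-lived token bound to an explicit set of permission scopes."""

    owner: str                # name of the AI tool or service (hypothetical)
    scopes: FrozenSet[str]    # the only actions this identity may perform
    ttl: timedelta = timedelta(minutes=15)  # assumed lifetime for illustration
    token: str = field(default_factory=lambda: secrets.token_urlsafe(32))
    issued_at: datetime = field(
        default_factory=lambda: datetime.now(timezone.utc)
    )

    def is_expired(self, now: Optional[datetime] = None) -> bool:
        """True once the credential has outlived its time-to-live."""
        now = now or datetime.now(timezone.utc)
        return now >= self.issued_at + self.ttl

    def allows(self, scope: str, now: Optional[datetime] = None) -> bool:
        """Permit an action only if the token is unexpired and the scope was granted."""
        return not self.is_expired(now) and scope in self.scopes

    def rotate(self) -> "MachineCredential":
        """Issue a fresh token with the same owner and scopes.

        The caller is responsible for revoking the old credential.
        """
        return MachineCredential(owner=self.owner, scopes=self.scopes, ttl=self.ttl)


chatbot = MachineCredential(
    owner="support-chatbot",
    scopes=frozenset({"tickets:read", "tickets:write"}),
)
print(chatbot.allows("tickets:read"))   # scope was granted
print(chatbot.allows("billing:admin"))  # scope was never granted
```

Keeping the lifetime short limits how long a stolen token is useful, and binding scopes to the credential means a compromised chatbot cannot reach systems it was never meant to touch.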

AI-Powered Phishing and Deepfake Cyber Threats

Cybercriminals are increasingly using AI to launch attacks. AI-generated phishing emails are crafted with such sophistication that they can imitate internal formatting and tone with high precision. This makes them significantly harder to detect than traditional spam or generic phishing attempts.

Best Practices for Strengthening Cyber Defences

To counter these threats, organisations must improve their defences on multiple fronts. First, deploying AI-driven detection systems is critical. These tools can analyse communication patterns and behavioural signals to flag suspicious activity before damage is done. Additionally, employee education and cyber awareness training should be updated to include the latest tactics involving generative AI and social engineering.
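As a rough illustration of flagging suspicious activity from behavioural signals, the sketch below compares an account's latest activity count with its own history using a z-score. It is a deliberately simple statistical stand-in, not an AI-driven detector: the `is_anomalous` function, the threshold of three standard deviations and the sample figures are assumptions made for this example.

```python
from statistics import mean, stdev
from typing import List


def is_anomalous(history: List[float], latest: float, threshold: float = 3.0) -> bool:
    """Flag `latest` if it lies more than `threshold` standard deviations above the historical mean."""
    if len(history) < 2:
        return False  # not enough data to form a baseline
    baseline = mean(history)
    spread = stdev(history)
    if spread == 0:
        # A perfectly flat history: any increase is a deviation.
        return latest > baseline
    return (latest - baseline) / spread > threshold


# Hypothetical hourly request counts for one account.
hourly_requests = [100, 96, 104, 99, 101, 98, 102]
print(is_anomalous(hourly_requests, 103))  # close to the usual level
print(is_anomalous(hourly_requests, 450))  # a sudden spike
```

In practice the baseline would be learned per account and per time of day, and a flagged event would feed an alerting or response workflow instead of a print statement.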

The Role of Regulatory Frameworks in AI Cybersecurity

Regulatory frameworks such as GDPR and the EU AI Act hold businesses accountable for mishandling sensitive or AI-generated data. These regulations increasingly require organisations to demonstrate transparency and security in their AI usage. Non-compliance can result in significant penalties and reputational damage.

Future Trends: AI-Powered Defensive Systems

I believe the future of cybersecurity will be shaped by adaptive AI-powered defence systems that can predict and respond to threats dynamically. These systems will integrate with existing security architectures to provide real-time monitoring and automated incident response. Zero-trust models, in which every access request is treated as untrusted by default and must be verified before it is granted, will become the new standard.
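The zero-trust idea can be made concrete with a deny-by-default check. The sketch below is only an illustration under stated assumptions: the `AccessRequest` fields, the `POLICY` allow-list and the `authorise` function are hypothetical names, and a real deployment would evaluate far richer context on every request.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessRequest:
    identity: str         # human user or machine identity
    resource: str
    action: str
    authenticated: bool   # a valid, unexpired credential was presented
    device_trusted: bool  # the caller passed a posture check


# Hypothetical allow-list of explicitly granted (identity, resource, action) tuples.
POLICY = {
    ("support-chatbot", "tickets", "read"),
    ("support-chatbot", "tickets", "write"),
}


def authorise(request: AccessRequest) -> bool:
    """Deny by default; allow only when every check passes for this specific request."""
    if not request.authenticated or not request.device_trusted:
        return False
    return (request.identity, request.resource, request.action) in POLICY


ok = AccessRequest("support-chatbot", "tickets", "read", True, True)
overreach = AccessRequest("support-chatbot", "billing", "write", True, True)
print(authorise(ok))
print(authorise(overreach))
```

Nothing is allowed merely because it originates inside the network: a request succeeds only if it is authenticated, comes from a trusted context and matches an explicit grant.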

Developing Ethical AI Frameworks for Secure Innovation

Businesses must also invest in developing ethical AI frameworks. These frameworks should define how AI systems are designed and audited to ensure that security is built in from the start. By doing so, organisations can maintain trust in their AI systems and mitigate the risks posed by both human and machine actors.